datasetId

large_stringlengths 6

116

| author

large_stringlengths 2

42

| last_modified

large_stringdate 2021-04-29 15:34:29

2025-06-06 00:37:09

| downloads

int64 0

3.97M

| likes

int64 0

7.74k

| tags

large listlengths 1

7.92k

| task_categories

large listlengths 0

48

| createdAt

large_stringdate 2022-03-02 23:29:22

2025-06-06 00:34:34

| trending_score

float64 0

40

⌀ | card

large_stringlengths 31

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

Yuto2007/scFoundationEmbeddings_Detailed_Clusters | Yuto2007 | 2025-06-03T15:14:48Z | 0 | 0 | [

"size_categories:1M<n<10M",

"format:parquet",

"modality:text",

"modality:timeseries",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T13:48:37Z | null | ---

dataset_info:

features:

- name: Detailed_Cluster_names

dtype: string

- name: input_ids

sequence: float32

- name: labels

dtype: int64

splits:

- name: train

num_bytes: 18877493822

num_examples: 1533093

- name: test

num_bytes: 2359689710

num_examples: 191637

- name: validation

num_bytes: 2359691130

num_examples: 191637

download_size: 24771881770

dataset_size: 23596874662

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

- split: validation

path: data/validation-*

---

|

VGraf/self-talk_gpt3.5_gpt4o_prefpairs_with_Meta-Llama-3.1-8B-Instruct_chosen | VGraf | 2025-06-03T15:11:06Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T15:10:54Z | null | ---

dataset_info:

features:

- name: messages

list:

- name: content

dtype: string

- name: role

dtype: string

- name: chosen

list:

- name: content

dtype: string

- name: role

dtype: string

- name: rejected

list:

- name: content

dtype: string

- name: role

dtype: string

splits:

- name: train

num_bytes: 24629

num_examples: 2

download_size: 25672

dataset_size: 24629

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

cobordism/LC_train_3_no_percep | cobordism | 2025-06-03T15:05:50Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T15:05:47Z | null | ---

dataset_info:

features:

- name: conversations

list:

- name: from

dtype: string

- name: value

dtype: string

- name: image

dtype: image

splits:

- name: train

num_bytes: 22820687.0

num_examples: 999

download_size: 21800134

dataset_size: 22820687.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

thomas-kuntz/MNLP_M2_dpo_dataset | thomas-kuntz | 2025-06-03T14:54:23Z | 57 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-05-26T15:17:34Z | null | ---

dataset_info:

features:

- name: prompt

dtype: string

- name: chosen

dtype: string

- name: rejected

dtype: string

- name: dataset

dtype: string

splits:

- name: train

num_bytes: 3777155.9881329113

num_examples: 1011

- name: test

num_bytes: 945223.0118670886

num_examples: 253

download_size: 2440962

dataset_size: 4722379.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

LivingOptics/hyperspectral-grapes | LivingOptics | 2025-06-03T14:48:38Z | 37 | 0 | [

"language:en",

"license:mit",

"size_categories:n<1K",

"format:imagefolder",

"modality:image",

"library:datasets",

"library:mlcroissant",

"region:us"

] | [] | 2025-01-23T09:22:15Z | null | ---

license: mit

language:

- en

size_categories:

- n<1K

---

# Non-contact sugar estimation with hyperpsectral data

## Access the data

You can now access this dataset via the [Living Optics Cloud Portal](https://cloud.livingoptics.com/shared-resources?file=data/annotated-datasets/Grapes-Dataset.zip)

## Motivation

### Precision viticulture and adaptive harvesting

Wine quality is heavily dependent on the grape maturity at harvest and can decline by 10\% in a week. To maximise quality, the harvest time of grape berries should be optimised for

- **sugar levels**, usually measured as total soluble solids (TSS) or Brix

- berry acidity, often expressed as pH and titratable acidity (TA);

- concentrations of the main organic acids in the berry, such as tartaric and malic acid; and for red varieties anthocyanin and

- total phenol concentrations.

These values are typically analysed using wet chemistry procedures on periodically sampled berries one to three weeks before harvest. These analytical methods are **destructive, require time-consuming berry sampling, as well as sample preparation in most instances**.

### Why Hyperspectral imaging?

Hyperspectral imaging offers a method for non-destructive, high-throughput testing of grape berries. These measurements require less specialised labour, no reagents and can have relatively low cost per analysis. Hyperspectral imagers, combined with statistical modelling techniques, have been shown to accurately predict grape parameters in a non-destructive manner for table and wine grapes using methods such as partial least squares regression analysis (PLSA).

Living Optics are developing pioneering hyperspectral cameras for the mass market. Our mission is to enable the next generation of computer vision through Spatial Spectral Information.

> This is a notebook showing how hyperspectral data, collected with the Living Optics camera, can be paired with statistical analysis to train a regressor for extracting grape parameters.

## Method

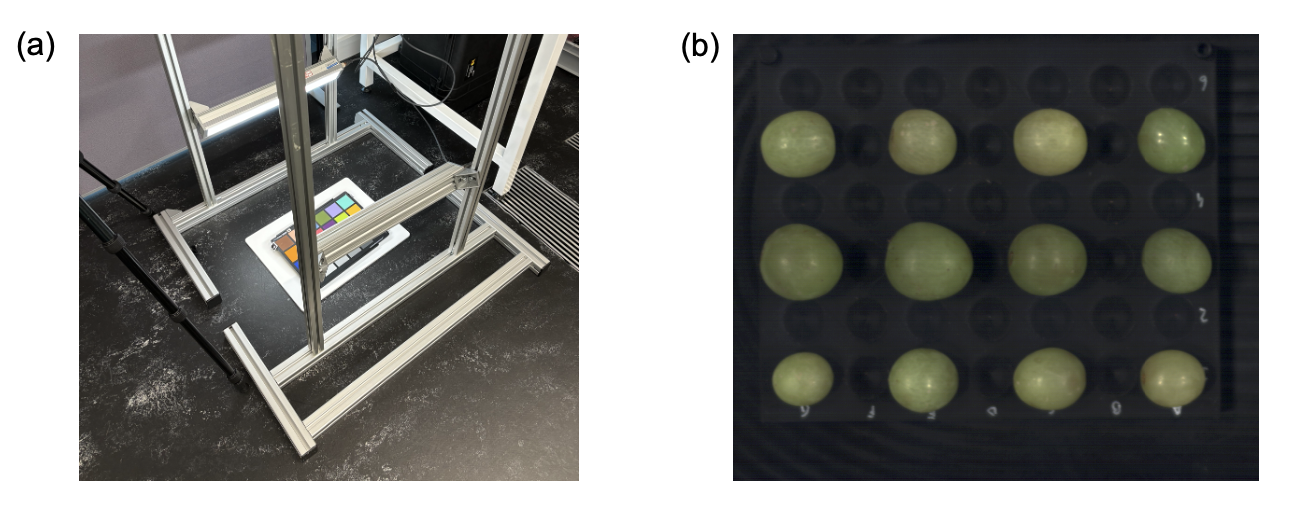

Individual grapes were extracted from six boxes of white table grapes (Agrimessina, Italy). These 300 individual table grapes were imaged using a custom lighting rig. The Living Optics camera was mounted on a downwards facing tripod directly above the sample to achieve a (45/0◦) imaging geometry. Twelve grape samples were placed on a black PLA tray per imaging round shown in Figure 9(b). Additionally, a white reference was collected by imaging a sheet of Tyvek in place of the tray. Using an objective lens focal length of 18 mm, approximately 150 sampling points were obtained per grape on average.

After imaging, 3-4 drops of juice from each grape ( 0.2ml) were extracted and measured with a handheld

BRIX refractometer (AS-Q6, Aicevoos, China). The error of the instrument is given as ±0.2 ◦Bx.

## Dataset contains

- 🍇 300 processed diffuse reflectance spectra of white grapes collected with the Living Optics camera

- 🧑🔬 Paired sugar content values for each grape

## Citation

Raw data is available by request |

LivingOptics/hyperspectral-plant-virus | LivingOptics | 2025-06-03T14:47:12Z | 51 | 0 | [

"language:en",

"license:mit",

"size_categories:n<1K",

"format:imagefolder",

"modality:image",

"library:datasets",

"library:mlcroissant",

"region:us"

] | [] | 2025-05-29T14:11:05Z | null | ---

license: mit

language:

- en

size_categories:

- n<1K

---

# Super Beet Virus Classification Dataset

## Access the Data

You can access this dataset via the [Living Optics Cloud Portal](https://cloud.livingoptics.com/shared-resources?file=data/annotated-datasets/Field-Crop-Classification-Dataset.zip).

## Motivation

### Enhancing Sugar Beet Health Monitoring

Sugar beet crops are susceptible to various viral infections that can significantly impact yield and quality. Early and accurate detection of these viruses is crucial for effective disease management and crop protection.

### Leveraging Hyperspectral Imaging for Disease Classification

Hyperspectral imaging provides a non-destructive and high-throughput method for detecting plant diseases by capturing detailed spectral information. This technology, combined with machine learning techniques, enables the classification of different virus types affecting sugar beet plants.

## Method

The dataset comprises 97 high-resolution images of sugar beet plants, each annotated to indicate the presence of specific viral infections. A total of 146 annotations are included, covering the following classes:

- **BChV (Beet Chlorosis Virus)**: 24 instances

- **BMYV (Beet Mild Yellowing Virus)**: 16 instances

- **BYV (Beet Yellows Virus)**: 24 instances

- **Uninoculated (Healthy Plants)**: 30 instances

Annotations were performed considering clear gaps between plants, ensuring accurate labeling. Some images include white reference targets to aid in spectral calibration.

## Dataset Contains

- 🖼️ 97 images of sugar beet plants under various inoculation statuses

- 🔖 146 annotations across 4 classes (3 virus types and healthy plants)

- 🎯 Labels indicating inoculation status or reference targets

- ⚠️ Note: The dataset exhibits some class imbalance

## Virus Descriptions

- **Beet Chlorosis Virus (BChV)**: A polerovirus causing interveinal yellowing in sugar beet leaves. Transmitted by aphids, BChV can lead to significant yield losses if not managed properly.

- **Beet Mild Yellowing Virus (BMYV)**: Another polerovirus spread by aphids, BMYV results in mild yellowing symptoms and can reduce sugar content in beets.

- **Beet Yellows Virus (BYV)**: A closterovirus known for causing severe yellowing and necrosis in sugar beet leaves. BYV is considered one of the most damaging viruses affecting sugar beet crops.

## Citation

Raw data is available upon request.

For more information on the viruses and their impact on sugar beet crops, refer to the following resources:

- [Virus Yellows - Bayer Crop Science UK](https://cropscience.bayer.co.uk/agronomy-id/diseases/sugar-beet-diseases/virus-yellows-beet)

- [Disease control: Learn about Virus Yellows - NFU](https://www.nfuonline.com/updates-and-information/disease-control-learn-about-virus-yellows/)

- [Beet yellows virus - Wikipedia](https://en.wikipedia.org/wiki/Beet_yellows_virus) |

adamezzaim/M3_mcqa_context | adamezzaim | 2025-06-03T14:39:08Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T14:38:54Z | null | ---

dataset_info:

features:

- name: id

dtype: string

- name: dataset

dtype: string

- name: question

dtype: string

- name: options

sequence: string

- name: answer

dtype: string

- name: explanation

dtype: string

splits:

- name: train

num_bytes: 303770784

num_examples: 35460

download_size: 173517952

dataset_size: 303770784

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

baogui123/test | baogui123 | 2025-06-03T14:36:40Z | 0 | 0 | [

"license:apache-2.0",

"region:us"

] | [] | 2025-06-03T14:36:40Z | null | ---

license: apache-2.0

---

|

CHOOSEIT/MCQA_small_alignment_1000 | CHOOSEIT | 2025-06-03T14:10:43Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T14:10:39Z | null | ---

dataset_info:

features:

- name: source_dataset

dtype: string

- name: question

dtype: string

- name: choices

sequence: string

- name: answer

dtype: string

- name: rationale

dtype: string

- name: split

dtype: string

- name: subject

dtype: string

splits:

- name: train

num_bytes: 3391469

num_examples: 4898

- name: test

num_bytes: 373739

num_examples: 1080

- name: validation

num_bytes: 133973

num_examples: 346

download_size: 2391571

dataset_size: 3899181

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

- split: validation

path: data/validation-*

---

|

anonloftune/insurance-10-facttune-mc | anonloftune | 2025-06-03T13:42:16Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T13:42:13Z | null | ---

dataset_info:

features:

- name: question

dtype: string

- name: chosen

dtype: string

- name: rejected

dtype: string

splits:

- name: train

num_bytes: 40642652

num_examples: 23371

- name: validation

num_bytes: 4778090

num_examples: 2866

download_size: 3915129

dataset_size: 45420742

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: validation

path: data/validation-*

---

|

while0628/vqasynth_sample_spatial_new_ttt | while0628 | 2025-06-03T13:39:00Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:image",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"vqasynth",

"remyx"

] | [] | 2025-06-03T13:38:54Z | null | ---

dataset_info:

features:

- name: image

dtype: image

- name: messages

sequence: 'null'

splits:

- name: train

num_bytes: 1162100.0

num_examples: 8

download_size: 1163534

dataset_size: 1162100.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

tags:

- vqasynth

- remyx

---

|

Kyleyee/train_data_Helpful_drdpo_preference | Kyleyee | 2025-06-03T13:36:05Z | 80 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-03-17T16:07:59Z | null | ---

dataset_info:

features:

- name: chosen

list:

- name: content

dtype: string

- name: role

dtype: string

- name: rejected

list:

- name: content

dtype: string

- name: role

dtype: string

- name: prompt

list:

- name: content

dtype: string

- name: role

dtype: string

- name: a_1

list:

- name: content

dtype: string

- name: role

dtype: string

- name: a_2

list:

- name: content

dtype: string

- name: role

dtype: string

- name: chosen_preference

dtype: float64

- name: rejected_preference

dtype: float64

- name: a_1_preference

dtype: float64

- name: a_2_preference

dtype: float64

splits:

- name: train

num_bytes: 69438428

num_examples: 43835

- name: test

num_bytes: 3812201

num_examples: 2354

download_size: 42617495

dataset_size: 73250629

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

ManTang034/so101_test | ManTang034 | 2025-06-03T13:32:28Z | 0 | 0 | [

"task_categories:robotics",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:parquet",

"modality:tabular",

"modality:timeseries",

"modality:video",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us",

"LeRobot",

"so101",

"tutorial"

] | [

"robotics"

] | 2025-06-03T13:32:11Z | null | ---

license: apache-2.0

task_categories:

- robotics

tags:

- LeRobot

- so101

- tutorial

configs:

- config_name: default

data_files: data/*/*.parquet

---

This dataset was created using [LeRobot](https://github.com/huggingface/lerobot).

## Dataset Description

- **Homepage:** [More Information Needed]

- **Paper:** [More Information Needed]

- **License:** apache-2.0

## Dataset Structure

[meta/info.json](meta/info.json):

```json

{

"codebase_version": "v2.1",

"robot_type": "so101",

"total_episodes": 10,

"total_frames": 5960,

"total_tasks": 1,

"total_videos": 10,

"total_chunks": 1,

"chunks_size": 1000,

"fps": 30,

"splits": {

"train": "0:10"

},

"data_path": "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet",

"video_path": "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4",

"features": {

"action": {

"dtype": "float32",

"shape": [

6

],

"names": [

"main_shoulder_pan",

"main_shoulder_lift",

"main_elbow_flex",

"main_wrist_flex",

"main_wrist_roll",

"main_gripper"

]

},

"observation.state": {

"dtype": "float32",

"shape": [

6

],

"names": [

"main_shoulder_pan",

"main_shoulder_lift",

"main_elbow_flex",

"main_wrist_flex",

"main_wrist_roll",

"main_gripper"

]

},

"observation.images.wrist": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.height": 480,

"video.width": 640,

"video.codec": "av1",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"video.fps": 30,

"video.channels": 3,

"has_audio": false

}

},

"timestamp": {

"dtype": "float32",

"shape": [

1

],

"names": null

},

"frame_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"episode_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"task_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

}

}

}

```

## Citation

**BibTeX:**

```bibtex

[More Information Needed]

``` |

Tamnemtf/SGU_BOOK | Tamnemtf | 2025-06-03T13:23:10Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T13:23:06Z | null | ---

dataset_info:

features:

- name: id

dtype: string

- name: description

dtype: string

- name: question

dtype: string

- name: answer

dtype: string

splits:

- name: train

num_bytes: 2632329

num_examples: 2252

download_size: 351845

dataset_size: 2632329

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

youssefbelghmi/MNLP_M3_mcqa_dataset_2 | youssefbelghmi | 2025-06-03T13:16:38Z | 44 | 0 | [

"task_categories:multiple-choice",

"task_ids:multiple-choice-qa",

"annotations_creators:expert-generated",

"multilinguality:monolingual",

"language:en",

"license:mit",

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"multiple-choice"

] | 2025-06-03T11:12:01Z | null | ---

annotations_creators:

- expert-generated

language:

- en

license: mit

multilinguality:

- monolingual

size_categories:

- 10K<n<100K

task_categories:

- multiple-choice

task_ids:

- multiple-choice-qa

pretty_name: MNLP M3 MCQA Dataset

---

# MNLP M3 MCQA Dataset

The **MNLP M3 MCQA Dataset** is a carefully curated collection of **Multiple-Choice Question Answering (MCQA)** examples, unified from several academic and benchmark datasets.

Developed as part of the *CS-552: Modern NLP* course at EPFL (Spring 2025), this dataset is designed for training and evaluating models on multiple-choice QA tasks, particularly in the **STEM** and general knowledge domains.

## Key Features

- ~30,000 MCQA questions

- 6 diverse sources: `SciQ`, `OpenBookQA`, `MathQA`, `ARC-Easy`, `ARC-Challenge`, and `MedMCQA`

- Each question has exactly 4 options (A–D) and one correct answer

- Covers a wide range of topics: science, technology, engineering, mathematics, and general knowledge

## Dataset Structure

Each example is a dictionary with the following fields:

| Field | Type | Description |

|-----------|----------|---------------------------------------------------|

| `dataset` | `string` | Source dataset (`sciq`, `openbookqa`, etc.) |

| `id` | `string` | Unique identifier for the question |

| `question`| `string` | The question text |

| `choices` | `list` | List of 4 answer options (corresponding to A–D) |

| `answer` | `string` | The correct option, as a letter: `"A"`, `"B"`, `"C"`, or `"D"` |

```markdown

Example:

```json

{

"dataset": "sciq",

"id": "sciq_01_00042",

"question": "What does a seismograph measure?",

"choices": ["Earthquakes", "Rainfall", "Sunlight", "Temperature"],

"answer": "A"

}

```

## Source Datasets

This dataset combines multiple high-quality MCQA sources to support research and fine-tuning in STEM education and reasoning. The full corpus contains **29,870 multiple-choice questions** from the following sources:

| Source (Hugging Face) | Name | Size | Description & Role in the Dataset |

| ------------------------------------------- | ------------------- | ------ | ----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| `allenai/sciq` | **SciQ** | 11,679 | **Science questions** (Physics, Chemistry, Biology, Earth science). Crowdsourced with 4 answer choices and optional supporting evidence. Used to provide **well-balanced, factual STEM questions** at a middle/high-school level. |

| `allenai/openbookqa` | **OpenBookQA** | 4,957 | Science exam-style questions requiring **multi-step reasoning** and use of **commonsense or external knowledge**. Contributes more **challenging** and **inference-based** questions. |

| `allenai/math_qa` | **MathQA** | 5,000 | Subsample of quantitative math word problems derived from AQuA-RAT, annotated with structured answer options. Introduces **numerical reasoning** and **problem-solving** components into the dataset. |

| `allenai/ai2_arc` (config: `ARC-Easy`) | **ARC-Easy** | 2,140 | Science questions at the middle school level. Useful for testing **basic STEM understanding** and **factual recall**. Filtered to retain only valid 4-choice entries. |

| `allenai/ai2_arc` (config: `ARC-Challenge`) | **ARC-Challenge** | 1,094 | More difficult science questions requiring **reasoning and inference**. Widely used as a benchmark for evaluating LLMs. Also filtered for clean MCQA format compatibility. |

| `openlifescienceai/medmcqa` | **MedMCQA** | 5,000 | A subsample of multiple-choice questions on **medical topics** from various exams, filtered for a single-choice format. Contains real-world and domain-specific **clinical reasoning** questions covering various medical disciplines. |

## Intended Applications and Structure

This dataset is split into three parts:

- `train` (~70%) — for training MCQA models

- `validation` (~15%) — for tuning and monitoring performance during training

- `test` (~15%) — for final evaluation on unseen questions

It is suitable for multiple-choice question answering tasks, especially in the **STEM** domain (Science, Technology, Engineering, Mathematics).

## Author

This dataset was created and published by [Youssef Belghmi](https://huggingface.co/youssefbelghmi) as part of the *CS-552: Modern NLP* course at EPFL (Spring 2025).

|

ricdomolm/lawma-reasoning-qwen4b-v0 | ricdomolm | 2025-06-03T13:13:34Z | 0 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T13:13:06Z | null | ---

dataset_info:

features:

- name: index

dtype: int64

- name: response

dtype: string

splits:

- name: train

num_bytes: 1555621301

num_examples: 272800

download_size: 504545897

dataset_size: 1555621301

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

anfindsen/M3_fixed_ds | anfindsen | 2025-06-03T13:10:06Z | 78 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-05-30T13:09:27Z | null | ---

dataset_info:

features:

- name: question

dtype: string

- name: answer

dtype: string

- name: openr1_source

dtype: string

- name: id

dtype: string

- name: dataset

dtype: string

- name: choices

sequence: string

splits:

- name: open_train

num_bytes: 261175677.3129466

num_examples: 209341

- name: open_eval

num_bytes: 29020628.687053423

num_examples: 23261

- name: train

num_bytes: 148520607.22336814

num_examples: 99920

- name: test

num_bytes: 16503445.77663187

num_examples: 11103

- name: final_train

num_bytes: 150518.10557768925

num_examples: 451

- name: final_test

num_bytes: 17020.894422310757

num_examples: 51

download_size: 252044861

dataset_size: 455387898.00000006

configs:

- config_name: default

data_files:

- split: open_train

path: data/open_train-*

- split: open_eval

path: data/open_eval-*

- split: train

path: data/train-*

- split: test

path: data/test-*

- split: final_train

path: data/final_train-*

- split: final_test

path: data/final_test-*

---

|

interstellarninja/atropos_salesforce_apigen | interstellarninja | 2025-06-03T13:03:52Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T13:03:27Z | null | ---

dataset_info:

features:

- name: conversations

list:

- name: from

dtype: string

- name: value

dtype: string

splits:

- name: train

num_bytes: 111375968

num_examples: 4574

download_size: 20591781

dataset_size: 111375968

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

vietnhat/grandpa-interview-dataset | vietnhat | 2025-06-03T12:35:06Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:audio",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T12:34:59Z | null | ---

dataset_info:

features:

- name: text

dtype: string

- name: audio

dtype: audio

splits:

- name: train

num_bytes: 2356619.0

num_examples: 9

download_size: 2168827

dataset_size: 2356619.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

albertfares/NLP4Education_filtered | albertfares | 2025-06-03T12:04:36Z | 37 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-05-31T13:15:39Z | null | ---

dataset_info:

features:

- name: id

dtype: string

- name: question

dtype: string

- name: option_a

dtype: string

- name: option_b

dtype: string

- name: option_c

dtype: string

- name: option_d

dtype: string

- name: answer

dtype: string

- name: num_options

dtype: int64

splits:

- name: train

num_bytes: 978662

num_examples: 2656

download_size: 572520

dataset_size: 978662

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

RainS/MEG-Multi-Exposure-Gradient-dataset | RainS | 2025-06-03T11:42:10Z | 0 | 0 | [

"license:mit",

"size_categories:1K<n<10K",

"format:webdataset",

"modality:image",

"modality:text",

"library:datasets",

"library:webdataset",

"library:mlcroissant",

"region:us"

] | [] | 2025-06-03T08:39:45Z | null | ---

license: mit

---

This is a Multi-Exposure Gradient dataset for low light enhancement and also for overexposure recovery, which contains about 1000 group of photos.

The contents include variety of scenes such as indoor, outdoor, sunny, rainy, cloudy, nightime, city, rural, campus and so on.

There are 5 photos in each group with different exposure gradient, from low light to overexposure noted as 01 to 05.

We provide two versions, one is continuous shooting data, whose contents of each group maybe a little bit different because of the moving scene, but they have more natural illumination and color.

The other is post processing data, whose contents of each group are strictly same, and the exposure is adjuste by PS.

You can choose one version according to your requirement, for example the continuous shooting data for unsupervised learning and the post processing data for supervised learning. |

ljnlonoljpiljm/BIGstockimage-1.5M-scored-pt-one | ljnlonoljpiljm | 2025-06-03T11:19:37Z | 0 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T10:58:10Z | null | ---

dataset_info:

features:

- name: id

dtype: string

- name: image

dtype: image

- name: text

dtype: string

- name: similarity

dtype: float64

splits:

- name: train

num_bytes: 29323222699.0

num_examples: 750000

download_size: 29309357098

dataset_size: 29323222699.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

louisebrix/smk_only_paintings | louisebrix | 2025-06-03T11:13:11Z | 44 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T11:12:56Z | null | ---

dataset_info:

features:

- name: smk_id

dtype: string

- name: period

dtype: string

- name: start_year

dtype: int64

- name: title

dtype: string

- name: first_artist

dtype: string

- name: all_artists

sequence: string

- name: num_artists

dtype: int64

- name: main_type

dtype: string

- name: all_types

sequence: string

- name: image_thumbnail

dtype: string

- name: gender

sequence: string

- name: birth_death

sequence: string

- name: nationality

sequence: string

- name: history

sequence: string

- name: artist_roles

sequence: string

- name: creator_roles

sequence: string

- name: num_creators

dtype: int64

- name: techniques

sequence: string

- name: enrichment_url

dtype: string

- name: content_person

sequence: string

- name: has_text

dtype: bool

- name: colors

sequence: string

- name: geo_location

dtype: string

- name: entropy

dtype: float64

- name: tags_en

sequence: string

- name: image

dtype: image

- name: rgb

dtype: string

splits:

- name: train

num_bytes: 237193836.69

num_examples: 1687

download_size: 232341252

dataset_size: 237193836.69

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

sumuks/openalex | sumuks | 2025-06-03T11:11:08Z | 0 | 0 | [

"size_categories:100M<n<1B",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-02T23:57:53Z | null | ---

dataset_info:

- config_name: authors

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 782950242225

num_examples: 103480180

download_size: 128603695157

dataset_size: 782950242225

- config_name: concepts

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 434567730

num_examples: 65073

download_size: 149586112

dataset_size: 434567730

- config_name: domains

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 5899

num_examples: 4

download_size: 8723

dataset_size: 5899

- config_name: fields

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 56037

num_examples: 26

download_size: 21602

dataset_size: 56037

- config_name: funders

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 127287864

num_examples: 32437

download_size: 27402892

dataset_size: 127287864

- config_name: institutions

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 2247783574

num_examples: 114883

download_size: 391692914

dataset_size: 2247783574

- config_name: publishers

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 35165382

num_examples: 10741

download_size: 7180922

dataset_size: 35165382

- config_name: sources

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 4985902135

num_examples: 260798

download_size: 767043697

dataset_size: 4985902135

- config_name: subfields

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 986219

num_examples: 252

download_size: 245766

dataset_size: 986219

- config_name: topics

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 29540660

num_examples: 4516

download_size: 8240326

dataset_size: 29540660

- config_name: works

features:

- name: id

dtype: string

- name: data

dtype: string

- name: updated_date

dtype: string

splits:

- name: train

num_bytes: 47184276960

num_examples: 2322509

- name: updated_2025_05_28

num_bytes: 44222267882

num_examples: 2634576

- name: updated_2025_05_27

num_bytes: 33156479642

num_examples: 2099881

download_size: 31002445366

dataset_size: 124563024484

configs:

- config_name: authors

data_files:

- split: train

path: authors/train-*

- config_name: concepts

data_files:

- split: train

path: concepts/train-*

- config_name: domains

data_files:

- split: train

path: domains/train-*

- config_name: fields

data_files:

- split: train

path: fields/train-*

- config_name: funders

data_files:

- split: train

path: funders/train-*

- config_name: institutions

data_files:

- split: train

path: institutions/train-*

- config_name: publishers

data_files:

- split: train

path: publishers/train-*

- config_name: sources

data_files:

- split: train

path: sources/train-*

- config_name: subfields

data_files:

- split: train

path: subfields/train-*

- config_name: topics

data_files:

- split: train

path: topics/train-*

- config_name: works

data_files:

- split: train

path: works/train-*

- split: updated_2025_05_28

path: works/updated_2025_05_28-*

- split: updated_2025_05_27

path: works/updated_2025_05_27-*

---

|

jaeyong2/Reason-Qwen3-06B-En-3 | jaeyong2 | 2025-06-03T11:09:11Z | 203 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-05-25T05:46:08Z | null | ---

dataset_info:

features:

- name: content

dtype: string

- name: response

sequence: string

splits:

- name: train

num_bytes: 2235927107

num_examples: 18000

download_size: 738754934

dataset_size: 2235927107

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

hassno/synth_cv_parser_faker | hassno | 2025-06-03T10:53:32Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T10:52:56Z | null | ---

dataset_info:

features:

- name: prompt

dtype: string

- name: completion

dtype: string

splits:

- name: train

num_bytes: 18326097.0

num_examples: 9000

- name: test

num_bytes: 2036233.0

num_examples: 1000

download_size: 10180009

dataset_size: 20362330.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

haraouikouceil/doc | haraouikouceil | 2025-06-03T10:33:27Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T10:33:22Z | null | ---

dataset_info:

features:

- name: prompt

dtype: string

splits:

- name: train

num_bytes: 31117380

num_examples: 71452

download_size: 2843773

dataset_size: 31117380

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

davanstrien/dataset_cards_with_metadata | davanstrien | 2025-06-03T10:20:08Z | 422 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-04-17T09:48:47Z | null | ---

dataset_info:

features:

- name: datasetId

dtype: large_string

- name: author

dtype: large_string

- name: last_modified

dtype: large_string

- name: downloads

dtype: int64

- name: likes

dtype: int64

- name: tags

large_list: large_string

- name: task_categories

large_list: large_string

- name: createdAt

dtype: large_string

- name: trending_score

dtype: float64

- name: card

dtype: large_string

splits:

- name: train

num_bytes: 110530629

num_examples: 32315

download_size: 30124925

dataset_size: 110530629

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

EQX55/test_voice2 | EQX55 | 2025-06-03T10:14:58Z | 17 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:audio",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T10:14:55Z | null | ---

dataset_info:

features:

- name: audio

dtype: audio

- name: text

dtype: string

splits:

- name: train

num_bytes: 17464862.0

num_examples: 26

download_size: 13153100

dataset_size: 17464862.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

gaianet/gaianet | gaianet | 2025-06-03T10:14:47Z | 13 | 1 | [

"license:apache-2.0",

"region:us"

] | [] | 2024-05-08T04:01:05Z | null | ---

license: apache-2.0

---

|

daniel-dona/sparql-dataset-reasoning-test3 | daniel-dona | 2025-06-03T10:13:56Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T10:13:51Z | null | ---

dataset_info:

features:

- name: qid

dtype: string

- name: lang

dtype: string

- name: nlq

dtype: string

- name: classes

sequence: string

- name: properties

sequence: string

- name: features

sequence: string

- name: sparql

dtype: string

- name: reasoning

dtype: string

splits:

- name: train

num_bytes: 11712015

num_examples: 2500

download_size: 961054

dataset_size: 11712015

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

gisako/multiwoz-chat | gisako | 2025-06-03T09:28:19Z | 0 | 0 | [

"task_categories:text-generation",

"language:en",

"license:mit",

"size_categories:10K<n<100K",

"format:json",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"region:us"

] | [

"text-generation"

] | 2025-06-03T09:23:31Z | null | ---

license: mit

task_categories:

- text-generation

language:

- en

pretty_name: multiwoz-chat-llama-gpt

size_categories:

- 1K<n<10K

--- |

burtenshaw/testing-dedup-in-space | burtenshaw | 2025-06-03T09:20:07Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T09:19:57Z | null | ---

dataset_info:

features:

- name: id

dtype: string

- name: label

dtype: int64

- name: text

dtype: string

- name: label_text

dtype: string

splits:

- name: train

num_bytes: 146629

num_examples: 2195

download_size: 72048

dataset_size: 146629

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

yycgreentea/so100_test_v2 | yycgreentea | 2025-06-03T09:17:26Z | 0 | 0 | [

"task_categories:robotics",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:parquet",

"modality:tabular",

"modality:timeseries",

"modality:video",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us",

"LeRobot",

"so100",

"tutorial"

] | [

"robotics"

] | 2025-06-03T06:57:34Z | null | ---

license: apache-2.0

task_categories:

- robotics

tags:

- LeRobot

- so100

- tutorial

configs:

- config_name: default

data_files: data/*/*.parquet

---

This dataset was created using [LeRobot](https://github.com/huggingface/lerobot).

## Dataset Description

- **Homepage:** [More Information Needed]

- **Paper:** [More Information Needed]

- **License:** apache-2.0

## Dataset Structure

[meta/info.json](meta/info.json):

```json

{

"codebase_version": "v2.1",

"robot_type": "so100",

"total_episodes": 2,

"total_frames": 1491,

"total_tasks": 1,

"total_videos": 4,

"total_chunks": 1,

"chunks_size": 1000,

"fps": 25,

"splits": {

"train": "0:2"

},

"data_path": "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet",

"video_path": "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4",

"features": {

"action": {

"dtype": "float32",

"shape": [

6

],

"names": [

"main_shoulder_pan",

"main_shoulder_lift",

"main_elbow_flex",

"main_wrist_flex",

"main_wrist_roll",

"main_gripper"

]

},

"observation.state": {

"dtype": "float32",

"shape": [

6

],

"names": [

"main_shoulder_pan",

"main_shoulder_lift",

"main_elbow_flex",

"main_wrist_flex",

"main_wrist_roll",

"main_gripper"

]

},

"observation.images.laptop": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.height": 480,

"video.width": 640,

"video.codec": "av1",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"video.fps": 25,

"video.channels": 3,

"has_audio": false

}

},

"observation.images.phone": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.height": 480,

"video.width": 640,

"video.codec": "av1",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"video.fps": 25,

"video.channels": 3,

"has_audio": false

}

},

"timestamp": {

"dtype": "float32",

"shape": [

1

],

"names": null

},

"frame_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"episode_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"task_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

}

}

}

```

## Citation

**BibTeX:**

```bibtex

[More Information Needed]

``` |

ustc-zyt/time-r1-data | ustc-zyt | 2025-06-03T09:13:46Z | 0 | 0 | [

"task_categories:time-series-forecasting",

"language:en",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"time-series-forecasting"

] | 2025-06-03T09:01:15Z | null | ---

license: apache-2.0

task_categories:

- time-series-forecasting

language:

- en

pretty_name: a

size_categories:

- 1K<n<10K

---

# 📊 Time-R1 RL Training Dataset

This dataset is used in the **Reinforcement Learning (RL)** phase of the paper:

**"Time Series Forecasting as Reasoning: A Slow-Thinking Approach with Reinforced LLMs"**.

---

## 📁 Data Format Overview

The dataset is stored in **Parquet** format. Each sample includes:

| Field | Type | Description |

| -------------- | ------------ | ---------------------------------------------------------------------------- |

| `prompt` | `list[dict]` | Natural language instruction including 96-step historical input sequence. |

| `reward_model` | `dict` | Contains the `ground_truth` field – the target values for the next 96 steps. |

| `data_source` | `string` | Dataset name (e.g., `"ETTh1"`). |

| `ability` | `string` | Task type – here always `"TimeSeriesForecasting"`. |

| `extra_info` | `dict` | Metadata including sample `index` and data `split` (e.g., `"train"`). |

---

## 🧾 Example Sample

```json

{

"prompt": [

{

"content": "Here is the High Useful Load data of the transformer. (dataset is ETTh1)..."

}

],

"data_source": "ETTh1",

"ability": "TimeSeriesForecasting",

"reward_model": {

"ground_truth": "date HUFL\n2016-07-05 00:00:00 11.989\n2016-07-05 01:00:00 12.525\n..."

},

"extra_info": {

"index": 0,

"split": "train"

}

}

```

Each prompt contains structured temporal input (96 steps) in a language-style format.

The `ground_truth` contains corresponding 96-step future targets with timestamps and values. |

pepijn223/record-test | pepijn223 | 2025-06-03T09:08:47Z | 0 | 0 | [

"task_categories:robotics",

"license:apache-2.0",

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:timeseries",

"modality:video",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us",

"LeRobot"

] | [

"robotics"

] | 2025-06-03T09:08:43Z | null | ---

license: apache-2.0

task_categories:

- robotics

tags:

- LeRobot

configs:

- config_name: default

data_files: data/*/*.parquet

---

This dataset was created using [LeRobot](https://github.com/huggingface/lerobot).

## Dataset Description

- **Homepage:** [More Information Needed]

- **Paper:** [More Information Needed]

- **License:** apache-2.0

## Dataset Structure

[meta/info.json](meta/info.json):

```json

{

"codebase_version": "v2.1",

"robot_type": "so101_follower",

"total_episodes": 2,

"total_frames": 510,

"total_tasks": 1,

"total_videos": 2,

"total_chunks": 1,

"chunks_size": 1000,

"fps": 30,

"splits": {

"train": "0:2"

},

"data_path": "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet",

"video_path": "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4",

"features": {

"action": {

"dtype": "float32",

"shape": [

6

],

"names": [

"shoulder_pan.pos",

"shoulder_lift.pos",

"elbow_flex.pos",

"wrist_flex.pos",

"wrist_roll.pos",

"gripper.pos"

]

},

"observation.state": {

"dtype": "float32",

"shape": [

6

],

"names": [

"shoulder_pan.pos",

"shoulder_lift.pos",

"elbow_flex.pos",

"wrist_flex.pos",

"wrist_roll.pos",

"gripper.pos"

]

},

"observation.images.front": {

"dtype": "video",

"shape": [

1080,

1920,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.height": 1080,

"video.width": 1920,

"video.codec": "av1",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"video.fps": 30,

"video.channels": 3,

"has_audio": false

}

},

"timestamp": {

"dtype": "float32",

"shape": [

1

],

"names": null

},

"frame_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"episode_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"task_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

}

}

}

```

## Citation

**BibTeX:**

```bibtex

[More Information Needed]

``` |

StonyBrook-CVLab/ZoomLDM-demo-dataset | StonyBrook-CVLab | 2025-06-03T09:07:27Z | 333 | 0 | [

"language:en",

"license:apache-2.0",

"size_categories:n<1K",

"region:us"

] | [] | 2025-05-19T10:57:35Z | null | ---

license: apache-2.0

language:

- en

size_categories:

- n<1K

---

Demo dataset for our CVPR 2025 paper "ZoomLDM: Latent Diffusion Model for multi-scale image generation". We extract patches from TCGA-BRCA Whole slide images.

## Usage

```python

from datasets import load_dataset

ds = load_dataset("StonyBrook-CVLab/ZoomLDM-demo-dataset", name="5x", trust_remote_code=True, split='train')

print(np.array(ds[0]['ssl_feat']).shape)

>>> (1024, 16, 16)

```

## Citations

```bibtex

@InProceedings{Yellapragada_2025_CVPR,

author = {Yellapragada, Srikar and Graikos, Alexandros and Triaridis, Kostas and Prasanna, Prateek and Gupta, Rajarsi and Saltz, Joel and Samaras, Dimitris},

title = {ZoomLDM: Latent Diffusion Model for Multi-scale Image Generation},

booktitle = {Proceedings of the Computer Vision and Pattern Recognition Conference (CVPR)},

month = {June},

year = {2025},

pages = {23453-23463}

}

```

```

@article{lingle2016cancer,

title={The cancer genome atlas breast invasive carcinoma collection (TCGA-BRCA)},

author={Lingle, Wilma and Erickson, Bradley J and Zuley, Margarita L and Jarosz, Rose and Bonaccio, Ermelinda and Filippini, Joe and Net, Jose M and Levi, Len and Morris, Elizabeth A and Figler, Gloria G and others},

year={2016},

publisher={The Cancer Imaging Archive}

}

```

|

3sara/colpali_italian_documents | 3sara | 2025-06-03T09:06:53Z | 129 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-05-29T17:04:02Z | null | ---

dataset_info:

features:

- name: image

dtype: image

- name: domanda1

dtype: string

- name: risposta1

dtype: string

- name: domanda2

dtype: string

- name: risposta2

dtype: string

- name: domanda3

dtype: string

- name: risposta3

dtype: string

- name: query_generica

dtype: string

- name: query_specifica

dtype: string

- name: query_visuale

dtype: string

- name: documento

dtype: string

- name: anno

dtype: string

splits:

- name: train

num_bytes: 1465913549.0

num_examples: 934

download_size: 1464198933

dataset_size: 1465913549.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

imdatta0/aime | imdatta0 | 2025-06-03T09:05:30Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T09:05:19Z | null | ---

dataset_info:

features:

- name: problem

dtype: string

- name: answer

dtype: string

- name: solution

dtype: string

splits:

- name: aime_2025

num_bytes: 16342

num_examples: 30

- name: aime_2024

num_bytes: 136649

num_examples: 30

download_size: 93497

dataset_size: 152991

configs:

- config_name: default

data_files:

- split: aime_2025

path: data/aime_2025-*

- split: aime_2024

path: data/aime_2024-*

---

|

Nitish906099/dream11-eng-wi-_7 | Nitish906099 | 2025-06-03T08:58:46Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T08:58:44Z | null | ---

dataset_info:

features:

- name: Name

dtype: string

- name: Mat

dtype: int64

- name: Inns

dtype: int64

- name: 'NO'

dtype: float64

- name: Runs

dtype: int64

- name: Ball

dtype: int64

- name: Avg

dtype: float64

- name: SR

dtype: float64

- name: HS

dtype: int64

- name: 100s

dtype: float64

- name: 50s

dtype: float64

- name: 0s

dtype: float64

- name: 6s

dtype: float64

- name: 4s

dtype: float64

- name: SR.1

dtype: float64

- name: Dream Team

dtype: int64

- name: Tot Pts

dtype: int64

- name: Bat Pts

dtype: int64

- name: Bowl Pts

dtype: float64

- name: Field Pts

dtype: float64

- name: Pace Bowl

dtype: float64

- name: Spin Bowl

dtype: float64

- name: RHB

dtype: float64

- name: LHB

dtype: float64

- name: Match Type

dtype: string

splits:

- name: train

num_bytes: 1027

num_examples: 5

download_size: 10323

dataset_size: 1027

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

# Dataset Card for "dream11-eng-wi-_7"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) |

Nitish906099/dream11-eng-wi-___ | Nitish906099 | 2025-06-03T08:58:32Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T08:58:31Z | null | ---

dataset_info:

features:

- name: Player

dtype: string

- name: Avg Fpts

dtype: float64

- name: Runs

dtype: int64

- name: WK

dtype: int64

- name: RR1

dtype: int64

- name: RR2

dtype: int64

- name: RR3

dtype: int64

- name: RR4

dtype: int64

- name: RR5

dtype: int64

- name: RW1

dtype: int64

- name: RW2

dtype: int64

- name: RW3

dtype: int64

- name: RW4

dtype: int64

- name: RW5

dtype: int64

splits:

- name: train

num_bytes: 622

num_examples: 5

download_size: 5893

dataset_size: 622

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

# Dataset Card for "dream11-eng-wi-___"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) |

Nitish906099/dream11-eng-wi-__ | Nitish906099 | 2025-06-03T08:58:30Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T08:58:29Z | null | ---

dataset_info:

features:

- name: Player Name

dtype: string

- name: Team

dtype: string

- name: Bowling Style

dtype: string

- name: Avg Fpts

dtype: int64

- name: Avg Fpts Bowling 1st

dtype: string

- name: Avg Fpts Bowling 2nd

dtype: string

- name: Avg Fpts vs Opposition

dtype: string

- name: Avg Fpts at Venue

dtype: string

- name: Wkts

dtype: int64

- name: PP Wkts

dtype: int64

- name: Death Wkts

dtype: int64

- name: Overs

dtype: float64

- name: Bowled PP

dtype: string

- name: Bowled Death

dtype: string

splits:

- name: train

num_bytes: 571

num_examples: 5

download_size: 6095

dataset_size: 571

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

# Dataset Card for "dream11-eng-wi-__"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) |

EQUES/YakugakuQA | EQUES | 2025-06-03T08:46:36Z | 68 | 0 | [

"task_categories:question-answering",

"language:ja",

"license:cc-by-sa-4.0",

"arxiv:2505.16661",

"region:us"

] | [

"question-answering"

] | 2025-04-26T03:33:34Z | null | ---

license: cc-by-sa-4.0

task_categories:

- question-answering

language:

- ja

viewer: true

columns:

- name: problem_id

type: string

- name: problem_text

type: string

- name: choices

type: list[string]

- name: text_only

type: bool

- name: answer

type: list[string]

- name: comment

type: string

- name: num_images

type: int

---

# YakugakuQA

<!-- Provide a quick summary of the dataset. -->

YakugakuQA is a question answering dataset, consisting of 13 years (2012-2024) of past questions and answers from the Japanese National License Examination for Pharmacists. It contains over 4K pairs of questions, answers, and commentaries.

**2025-5-29: Leaderboard added.**

**2025-2-17: Image data added.**

**2024-12-10: Dataset release.**

## Leaderboard

3-shot Accuracy (%)

|| [YakugakuQA](https://huggingface.co/datasets/EQUES/YakugakuQA/) | [IgakuQA](https://github.com/jungokasai/IgakuQA)|

| ---- | ---- | ---- |

| o1-preview | 87.9 | |

| GPT-4o | 83.6 | 86.6 |

| [pfnet/Preferred-MedLLM-Qwen-72B](https://huggingface.co/pfnet/Preferred-MedLLM-Qwen-72B) | 77.2 | |

| [Qwen/Qwen2.5-72B-Instruct](https://huggingface.co/Qwen/Qwen2.5-72B-Instruct) | 73.6 | |

| [google/medgemma-27b-text-it](https://huggingface.co/google/medgemma-27b-text-it) | 62.2 (*)| |

| [EQUES/JPharmatron-7B](https://huggingface.co/EQUES/JPharmatron-7B) | 62.0 | 64.7 |

| [Qwen/Qwen3-14B](https://huggingface.co/Qwen/Qwen3-14B) (**) | 59.9 | |

(*) Several issues in instruction-following, e.g., think and reason too much to reach token limit.

(**) enable_thinking=False for fair evaluation.

## Dataset Details

### Dataset Description

<!-- Provide a longer summary of what this dataset is. -->

- **Curated by:** EQUES Inc.

- **Funded by [optional]:** [GENIAC Project](https://www.meti.go.jp/policy/mono_info_service/geniac/index.html)

- **Shared by [optional]:**

- **Language(s) (NLP):** Japanese

- **License:** cc-by-sa-4.0

## Uses

<!-- Address questions around how the dataset is intended to be used. -->

### Direct Use

<!-- This section describes suitable use cases for the dataset. -->

YakugakuQA is intended to be used as a benchmark for evaluating the knowledge of large language models (LLMs) in the field of pharmacy.

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the dataset will not work well for. -->

Any usage except above.

## Dataset Structure

<!-- This section provides a description of the dataset fields, and additional information about the dataset structure such as criteria used to create the splits, relationships between data points, etc. -->

YakugakuQA consists of two files: `data.jsonl`, which contains the questions, answers, and commentaries, and `metadata.jsonl`, which holds supplementary information about the question categories and additional details related to the answers.

### data.jsonl

- "problem_id" : unique ID, represented by a six-digit integer. The higher three digits indicate the exam number, while the lower three digits represent the question number within that specific exam.

- "problem_text" : problem statement.

- "choices" : choices corresponding to each question. Note that the Japanese National License Examination for Pharmacists is a multiple-choice format examination.

- "text_only" : whether the question includes images or tables. The corresponding images or tables are not included in this dataset, even if `text_only` is marked as `false`.

- "answer" : list of indices of the correct choices. Note the following points:

- the choices are 1-indexed.

- multiple choices may be included, depending on the question format.

- "解なし" indicates there is no correct choice. The reason for this is documented in `metadata.jsonl` in most cases.

- "comment" : commentary text.

- "num_images" : number of images included in the question.

### metadata.jsonl

- "problem_id" : see above.

- "category" : question caterogy. One of the `["Physics", "Chemistry", "Biology", "Hygiene", "Pharmacology", "Pharmacy", "Pathology", "Law", "Practice"]`.

- "note" : additional information about the question.

### images

The image filenames follow the format:

`problem_id_{image_id}.png`

## Dataset Creation

### Curation Rationale

<!-- Motivation for the creation of this dataset. -->

YakugakuQA aims to provide a Japanese-language evaluation benchmark for assessing the domain knowledge of LLMs.

### Source Data

<!-- This section describes the source data (e.g. news text and headlines, social media posts, translated sentences, ...). -->

#### Data Collection and Processing

<!-- This section describes the data collection and processing process such as data selection criteria, filtering and normalization methods, tools and libraries used, etc. -->

All questions, answers and commentaries for the target years have been collected. The parsing process has been performed automatically.

#### Who are the source data producers?

<!-- This section describes the people or systems who originally created the data. It should also include self-reported demographic or identity information for the source data creators if this information is available. -->

All question, answers, and commentaries have been obtained from [yakugaku lab](https://yakugakulab.info/). All metadata has been obtained from the website of the Ministry of Health, Labour and Welfare. It should be noted that the original questions and answers are also sourced from materials published by the Ministry of Health, Labour and Welfare.

## Citation

<!-- If there is a paper or blog post introducing the dataset, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

```

@misc{sukeda2025japaneselanguagemodelnew,

title={A Japanese Language Model and Three New Evaluation Benchmarks for Pharmaceutical NLP},

author={Issey Sukeda and Takuro Fujii and Kosei Buma and Shunsuke Sasaki and Shinnosuke Ono},

year={2025},

eprint={2505.16661},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2505.16661},

}

```

## Contributions

Thanks to [@shinnosukeono](https://github.com/shinnosukeono) for adding this dataset.

## Acknowledgement

本データセットは、経済産業省及び国立研究開発法人新エネルギー・産業技術総合開発機構(NEDO)による生成AI開発力強化プロジェクト「GENIAC」により支援を受けた成果の一部である。 |

infinite-dataset-hub/LegalCasePrecedent | infinite-dataset-hub | 2025-06-03T08:44:58Z | 0 | 0 | [

"license:mit",

"size_categories:n<1K",

"format:csv",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"infinite-dataset-hub",

"synthetic"

] | [] | 2025-06-03T08:44:57Z | null | ---

license: mit

tags:

- infinite-dataset-hub

- synthetic

---

# LegalCasePrecedent

tags: legal, precedent, classification

_Note: This is an AI-generated dataset so its content may be inaccurate or false_

**Dataset Description:**

The 'LegalCasePrecedent' dataset contains a collection of legal case documents which have been previously adjudicated. Each case document has been labeled with a classification that represents the type of legal precedent it set. This dataset is aimed at helping machine learning practitioners train models to automatically classify legal cases based on their precedent value.

**CSV Content Preview:**

```

case_id,document_text,label

001,"In the case of Smith v. Jones, the court held that electronic communication can constitute a breach of contract.",ContractBreach

002,"In the landmark case of Brown v. Board of Education, the Supreme Court declared state laws establishing separate public schools for black and white students to be unconstitutional.",EducationRights

003,"In the matter of Doe v. City, the precedent was set that municipalities are not immune from lawsuits related to traffic violations.",TrafficLaw

004,"In the case of Roe v. Wade, the court recognized a woman's constitutional right to an abortion.",ReproductiveRights

005,"The ruling in Miller v. Alabama established that mandatory life sentences without parole for juveniles violate the Eighth Amendment.",JuvenileJustice

```

**Source of the data:**

The dataset was generated using the [Infinite Dataset Hub](https://huggingface.co/spaces/infinite-dataset-hub/infinite-dataset-hub) and microsoft/Phi-3-mini-4k-instruct using the query 'legal':

- **Dataset Generation Page**: https://huggingface.co/spaces/infinite-dataset-hub/infinite-dataset-hub?q=legal&dataset=LegalCasePrecedent&tags=legal,+precedent,+classification

- **Model**: https://huggingface.co/microsoft/Phi-3-mini-4k-instruct

- **More Datasets**: https://huggingface.co/datasets?other=infinite-dataset-hub

|

clairedhx/edu3-clinical-fr-mesh-4 | clairedhx | 2025-06-03T08:35:54Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T08:35:51Z | null | ---

dataset_info:

features:

- name: article_id

dtype: string

- name: article_text

dtype: string

- name: document_type

dtype: string

- name: domain

dtype: string

- name: language

dtype: string

- name: language_score

dtype: float32

- name: detected_entities

list:

- name: label

dtype: string

- name: mesh_id

dtype: string

- name: term

dtype: string

- name: mesh_from_gliner

sequence: string

- name: pubmed_mesh

sequence: string

- name: mesh_clean

sequence: string

- name: icd10_codes

sequence: string

splits:

- name: train

num_bytes: 690563

num_examples: 309

download_size: 342375

dataset_size: 690563

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Gusanidas/countdown-tasks-dataset-med-vl5 | Gusanidas | 2025-06-03T08:35:12Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T08:35:09Z | null | ---

dataset_info:

features:

- name: numbers

sequence: int64

- name: target

dtype: int64

- name: solution

dtype: string

- name: attempts

dtype: int64

- name: tag

dtype: string

- name: id

dtype: int64

splits:

- name: train

num_bytes: 28045

num_examples: 256

download_size: 11867

dataset_size: 28045

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Ktzoras/shipping_features | Ktzoras | 2025-06-03T08:32:19Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T08:08:58Z | null | ---

dataset_info:

features:

- name: link

dtype: string

- name: date

dtype: timestamp[ns]

- name: title

dtype: string

- name: content

dtype: string

- name: led_summ

dtype: string

- name: bart_summ

dtype: string

- name: impact_idrbfe

dtype: int64

- name: sen_emb

sequence: float64

- name: sen_emb_mean

sequence: float64

- name: sen_emb_max

sequence: float64

- name: sen_emb_mix

sequence: float64

- name: sen_emb_sum

sequence: float64

- name: sen_emb_concat

sequence: float64

- name: sen_emb_mix2

sequence: float64

- name: full_emb

sequence: float64

- name: pr_en_vessel_type_fe

dtype: int64

- name: pr_en_size_of_vessel_idfe

dtype: int64

- name: pr_en_vessel_type_idrbfe

dtype: int64

- name: pr_en_vessel_type_rag

dtype: int64

- name: pr_en_vessel_type_idfe

dtype: int64

- name: pr_en_route_idrbfe

dtype: int64

- name: pr_en_route_fe

dtype: int64

- name: pr_en_size_of_vessel_rag

dtype: int64

- name: pr_en_size_of_vessel_fe

dtype: int64

- name: pr_en_size_of_vessel_idrbfe

dtype: int64

- name: pr_en_impact_idfe

dtype: int64

- name: pr_en_route_rag

dtype: int64

- name: pr_en_route_idfe

dtype: int64

- name: pr_en_scale_idfe