datasetId

large_stringlengths 6

116

| author

large_stringlengths 2

42

| last_modified

large_stringdate 2021-04-29 15:34:29

2025-06-06 00:37:09

| downloads

int64 0

3.97M

| likes

int64 0

7.74k

| tags

large listlengths 1

7.92k

| task_categories

large listlengths 0

48

| createdAt

large_stringdate 2022-03-02 23:29:22

2025-06-06 00:34:34

| trending_score

float64 0

40

⌀ | card

large_stringlengths 31

1.01M

|

|---|---|---|---|---|---|---|---|---|---|

VJyzCELERY/Cleaned_Trimmed_Dataset | VJyzCELERY | 2025-06-05T15:35:39Z | 0 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T15:35:17Z | null | ---

dataset_info:

features:

- name: steamid

dtype: int64

- name: app_id

dtype: int64

- name: voted_up

dtype: bool

- name: cleaned_review

dtype: string

splits:

- name: train

num_bytes: 368396174

num_examples: 820496

download_size: 207983722

dataset_size: 368396174

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

anilkeshwani/mls-speechtokenizer | anilkeshwani | 2025-06-05T14:50:05Z | 442 | 0 | [

"task_categories:automatic-speech-recognition",

"task_categories:text-to-speech",

"language:en",

"license:cc-by-4.0",

"size_categories:1K<n<10K",

"format:json",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"automatic-speech-recognition",

"text-to-speech"

] | 2025-05-23T14:43:34Z | null | ---

license: cc-by-4.0

task_categories:

- automatic-speech-recognition

- text-to-speech

language:

- en

--- |

open-cn-llm-leaderboard/EmbodiedVerse_results | open-cn-llm-leaderboard | 2025-06-05T14:29:40Z | 181 | 0 | [

"license:apache-2.0",

"region:us"

] | [] | 2025-06-03T07:29:15Z | null | ---

license: apache-2.0

---

|

LM-Polygraph/vqa | LM-Polygraph | 2025-06-05T11:59:09Z | 88 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-03-31T15:34:29Z | null | ---

dataset_info:

features:

- name: image

dtype: image

- name: question

dtype: string

- name: answer

dtype: string

splits:

- name: train

num_bytes: 103416931.01978447

num_examples: 736

- name: test

num_bytes: 44401860.14436399

num_examples: 316

download_size: 143438869

dataset_size: 147818791.16414845

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

neilchadli/MNLP_M3_rag_dataset | neilchadli | 2025-06-05T11:04:06Z | 1 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T11:03:57Z | null | ---

dataset_info:

features:

- name: id

dtype: string

- name: text

dtype: string

- name: source

dtype: string

splits:

- name: train

num_bytes: 54213109.94271403

num_examples: 100000

download_size: 36597251

dataset_size: 54213109.94271403

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

MichelleOdnert/MNLP_M2_mcqa_dataset | MichelleOdnert | 2025-06-05T08:49:50Z | 431 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-05-18T13:46:29Z | null | ---

dataset_info:

- config_name: MATH

features:

- name: id

dtype: string

- name: question

dtype: string

- name: choices

sequence: string

- name: answer

dtype: string

- name: dataset

dtype: string

splits:

- name: train

num_bytes: 12673356

num_examples: 22500

- name: validation

num_bytes: 1427324

num_examples: 2500

download_size: 5484833

dataset_size: 14100680

- config_name: challenging

features:

- name: id

dtype: string

- name: question

dtype: string

- name: choices

sequence: string

- name: answer

dtype: string

- name: dataset

dtype: string

- name: rationale

dtype: string

splits:

- name: train

num_bytes: 2264410

num_examples: 9109

- name: validation

num_bytes: 120290

num_examples: 479

download_size: 1197864

dataset_size: 2384700

- config_name: default

features:

- name: id

dtype: string

- name: question

dtype: string

- name: choices

sequence: string

- name: answer

dtype: string

- name: dataset

dtype: string

- name: rationale

dtype: string

splits:

- name: train

num_bytes: 17941025

num_examples: 36101

- name: validation

num_bytes: 2229691

num_examples: 4130

download_size: 8276792

dataset_size: 20170716

- config_name: easy

features:

- name: id

dtype: string

- name: question

dtype: string

- name: choices

sequence: string

- name: answer

dtype: string

- name: dataset

dtype: string

- name: rationale

dtype: string

splits:

- name: train

num_bytes: 1778888

num_examples: 2999

- name: validation

num_bytes: 497996

num_examples: 816

download_size: 939314

dataset_size: 2276884

- config_name: extra_challenging

features:

- name: id

dtype: string

- name: question

dtype: string

- name: choices

sequence: string

- name: answer

dtype: string

- name: dataset

dtype: string

- name: rationale

dtype: string

splits:

- name: train

num_bytes: 1130233

num_examples: 1493

- name: validation

num_bytes: 173666

num_examples: 335

download_size: 666007

dataset_size: 1303899

configs:

- config_name: MATH

data_files:

- split: train

path: MATH/train-*

- split: validation

path: MATH/validation-*

- config_name: challenging

data_files:

- split: train

path: challenging/train-*

- split: validation

path: challenging/validation-*

- config_name: default

data_files:

- split: train

path: data/train-*

- split: validation

path: data/validation-*

- config_name: easy

data_files:

- split: train

path: easy/train-*

- split: validation

path: easy/validation-*

- config_name: extra_challenging

data_files:

- split: train

path: extra_challenging/train-*

- split: validation

path: extra_challenging/validation-*

---

|

belloIsMiaoMa/lerobot_mpm_cloth_step2_5 | belloIsMiaoMa | 2025-06-05T07:41:05Z | 0 | 0 | [

"task_categories:robotics",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:timeseries",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us",

"LeRobot"

] | [

"robotics"

] | 2025-06-05T07:40:56Z | null | ---

license: apache-2.0

task_categories:

- robotics

tags:

- LeRobot

configs:

- config_name: default

data_files: data/*/*.parquet

---

This dataset was created using [LeRobot](https://github.com/huggingface/lerobot).

## Dataset Description

- **Homepage:** [More Information Needed]

- **Paper:** [More Information Needed]

- **License:** apache-2.0

## Dataset Structure

[meta/info.json](meta/info.json):

```json

{

"codebase_version": "v2.1",

"robot_type": "custom_mpm_robot",

"total_episodes": 5,

"total_frames": 1065,

"total_tasks": 1,

"total_videos": 0,

"total_chunks": 1,

"chunks_size": 1000,

"fps": 100,

"splits": {

"train": "0:5"

},

"data_path": "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet",

"video_path": "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4",

"features": {

"observation.state": {

"dtype": "image",

"shape": [

270,

480,

3

],

"names": [

"height",

"width",

"channel"

]

},

"depth": {

"dtype": "image",

"shape": [

270,

480,

3

],

"names": [

"height",

"width",

"channel"

]

},

"depth_colormap": {

"dtype": "image",

"shape": [

270,

480,

3

],

"names": [

"height",

"width",

"channel"

]

},

"state": {

"dtype": "float32",

"shape": [

16

],

"names": {

"motors": [

"left_joint_1",

"left_joint_2",

"left_joint_3",

"left_joint_4",

"left_joint_5",

"left_joint_6",

"left_joint_7",

"left_grasp_state",

"right_joint_1",

"right_joint_2",

"right_joint_3",

"right_joint_4",

"right_joint_5",

"right_joint_6",

"right_joint_7",

"right_grasp_state"

]

}

},

"action": {

"dtype": "float32",

"shape": [

16

],

"names": {

"motors": [

"left_joint_1",

"left_joint_2",

"left_joint_3",

"left_joint_4",

"left_joint_5",

"left_joint_6",

"left_joint_7",

"left_grasp_state",

"right_joint_1",

"right_joint_2",

"right_joint_3",

"right_joint_4",

"right_joint_5",

"right_joint_6",

"right_joint_7",

"right_grasp_state"

]

}

},

"next.done": {

"dtype": "bool",

"shape": [

1

]

},

"timestamp": {

"dtype": "float32",

"shape": [

1

],

"names": null

},

"frame_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"episode_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"task_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

}

}

}

```

## Citation

**BibTeX:**

```bibtex

[More Information Needed]

``` |

MikaStars39/DailyArXivPaper | MikaStars39 | 2025-06-05T07:06:40Z | 65 | 1 | [

"task_categories:text-generation",

"language:en",

"license:mit",

"size_categories:n<1K",

"format:csv",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us",

"raw"

] | [

"text-generation"

] | 2025-05-30T06:19:30Z | 1 | ---

configs:

- config_name: all

data_files:

- split: train

path: all/*

- config_name: efficiency

data_files:

- split: train

path: efficiency/*

- config_name: interpretability

data_files:

- split: train

path: interpretability/*

- config_name: agent_rl

data_files:

- split: train

path: agent_rl/*

license: mit

size_categories:

- n<1K

pretty_name: DailArXivPaper

task_categories:

- text-generation

tags:

- raw

language:

- en

---

|

TAUR-dev/rg_eval_dataset__induction | TAUR-dev | 2025-06-05T06:52:04Z | 13 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-04T04:16:33Z | null | ---

dataset_info:

features:

- name: question

dtype: string

- name: answer

dtype: string

- name: metadata

dtype: string

- name: dataset_source

dtype: string

splits:

- name: train

num_bytes: 36988

num_examples: 40

download_size: 11654

dataset_size: 36988

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

louisbrulenaudet/code-domaine-public-fluvial-navigation-interieure | louisbrulenaudet | 2025-06-05T06:45:49Z | 459 | 0 | [

"task_categories:text-generation",

"task_categories:table-question-answering",

"task_categories:summarization",

"task_categories:text-retrieval",

"task_categories:question-answering",

"task_categories:text-classification",

"multilinguality:monolingual",

"source_datasets:original",

"language:fr",

"license:apache-2.0",

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"finetuning",

"legal",

"french law",

"droit français",

"Code du domaine public fluvial et de la navigation intérieure"

] | [

"text-generation",

"table-question-answering",

"summarization",

"text-retrieval",

"question-answering",

"text-classification"

] | 2024-03-25T20:36:15Z | null | ---

license: apache-2.0

language:

- fr

multilinguality:

- monolingual

tags:

- finetuning

- legal

- french law

- droit français

- Code du domaine public fluvial et de la navigation intérieure

source_datasets:

- original

pretty_name: Code du domaine public fluvial et de la navigation intérieure

task_categories:

- text-generation

- table-question-answering

- summarization

- text-retrieval

- question-answering

- text-classification

size_categories:

- 1K<n<10K

---

# Code du domaine public fluvial et de la navigation intérieure, non-instruct (2025-06-04)

The objective of this project is to provide researchers, professionals and law students with simplified, up-to-date access to all French legal texts, enriched with a wealth of data to facilitate their integration into Community and European projects.

Normally, the data is refreshed daily on all legal codes, and aims to simplify the production of training sets and labeling pipelines for the development of free, open-source language models based on open data accessible to all.

## Concurrent reading of the LegalKit

[<img src="https://raw.githubusercontent.com/louisbrulenaudet/ragoon/main/assets/badge.svg" alt="Built with RAGoon" width="200" height="32"/>](https://github.com/louisbrulenaudet/ragoon)

To use all the legal data published on LegalKit, you can use RAGoon:

```bash

pip3 install ragoon

```

Then, you can load multiple datasets using this code snippet:

```python

# -*- coding: utf-8 -*-

from ragoon import load_datasets

req = [

"louisbrulenaudet/code-artisanat",

"louisbrulenaudet/code-action-sociale-familles",

# ...

]

datasets_list = load_datasets(

req=req,

streaming=False

)

dataset = datasets.concatenate_datasets(

datasets_list

)

```

### Data Structure for Article Information

This section provides a detailed overview of the elements contained within the `item` dictionary. Each key represents a specific attribute of the legal article, with its associated value providing detailed information.

1. **Basic Information**

- `ref` (string): **Reference** - A reference to the article, combining the title_main and the article `number` (e.g., "Code Général des Impôts, art. 123").

- `texte` (string): **Text Content** - The textual content of the article.

- `dateDebut` (string): **Start Date** - The date when the article came into effect.

- `dateFin` (string): **End Date** - The date when the article was terminated or superseded.

- `num` (string): **Article Number** - The number assigned to the article.

- `id` (string): **Article ID** - Unique identifier for the article.

- `cid` (string): **Chronical ID** - Chronical identifier for the article.

- `type` (string): **Type** - The type or classification of the document (e.g., "AUTONOME").

- `etat` (string): **Legal Status** - The current legal status of the article (e.g., "MODIFIE_MORT_NE").

2. **Content and Notes**

- `nota` (string): **Notes** - Additional notes or remarks associated with the article.

- `version_article` (string): **Article Version** - The version number of the article.

- `ordre` (integer): **Order Number** - A numerical value used to sort articles within their parent section.

3. **Additional Metadata**

- `conditionDiffere` (string): **Deferred Condition** - Specific conditions related to collective agreements.

- `infosComplementaires` (string): **Additional Information** - Extra information pertinent to the article.

- `surtitre` (string): **Subtitle** - A subtitle or additional title information related to collective agreements.

- `nature` (string): **Nature** - The nature or category of the document (e.g., "Article").

- `texteHtml` (string): **HTML Content** - The article's content in HTML format.

4. **Versioning and Extensions**

- `dateFinExtension` (string): **End Date of Extension** - The end date if the article has an extension.

- `versionPrecedente` (string): **Previous Version** - Identifier for the previous version of the article.

- `refInjection` (string): **Injection Reference** - Technical reference to identify the date of injection.

- `idTexte` (string): **Text ID** - Identifier for the legal text to which the article belongs.

- `idTechInjection` (string): **Technical Injection ID** - Technical identifier for the injected element.

5. **Origin and Relationships**

- `origine` (string): **Origin** - The origin of the document (e.g., "LEGI").

- `dateDebutExtension` (string): **Start Date of Extension** - The start date if the article has an extension.

- `idEliAlias` (string): **ELI Alias** - Alias for the European Legislation Identifier (ELI).

- `cidTexte` (string): **Text Chronical ID** - Chronical identifier of the text.

6. **Hierarchical Relationships**

- `sectionParentId` (string): **Parent Section ID** - Technical identifier of the parent section.

- `multipleVersions` (boolean): **Multiple Versions** - Indicates if the article has multiple versions.

- `comporteLiensSP` (boolean): **Contains Public Service Links** - Indicates if the article contains links to public services.

- `sectionParentTitre` (string): **Parent Section Title** - Title of the parent section (e.g., "I : Revenu imposable").

- `infosRestructurationBranche` (string): **Branch Restructuring Information** - Information about branch restructuring.

- `idEli` (string): **ELI ID** - European Legislation Identifier (ELI) for the article.

- `sectionParentCid` (string): **Parent Section Chronical ID** - Chronical identifier of the parent section.

7. **Additional Content and History**

- `numeroBo` (string): **Official Bulletin Number** - Number of the official bulletin where the article was published.

- `infosRestructurationBrancheHtml` (string): **Branch Restructuring Information (HTML)** - Branch restructuring information in HTML format.

- `historique` (string): **History** - Historical context or changes specific to collective agreements.

- `infosComplementairesHtml` (string): **Additional Information (HTML)** - Additional information in HTML format.

- `renvoi` (string): **Reference** - References to content within the article (e.g., "(1)").

- `fullSectionsTitre` (string): **Full Section Titles** - Concatenation of all titles in the parent chain.

- `notaHtml` (string): **Notes (HTML)** - Additional notes or remarks in HTML format.

- `inap` (string): **INAP** - A placeholder for INAP-specific information.

## Feedback

If you have any feedback, please reach out at [louisbrulenaudet@icloud.com](mailto:louisbrulenaudet@icloud.com). |

louisbrulenaudet/code-civil | louisbrulenaudet | 2025-06-05T06:45:43Z | 463 | 1 | [

"task_categories:text-generation",

"task_categories:table-question-answering",

"task_categories:summarization",

"task_categories:text-retrieval",

"task_categories:question-answering",

"task_categories:text-classification",

"multilinguality:monolingual",

"source_datasets:original",

"language:fr",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"doi:10.57967/hf/1442",

"region:us",

"finetuning",

"legal",

"french law",

"droit français",

"Code civil"

] | [

"text-generation",

"table-question-answering",

"summarization",

"text-retrieval",

"question-answering",

"text-classification"

] | 2023-12-12T01:26:22Z | null | ---

license: apache-2.0

language:

- fr

multilinguality:

- monolingual

tags:

- finetuning

- legal

- french law

- droit français

- Code civil

source_datasets:

- original

pretty_name: Code civil

task_categories:

- text-generation

- table-question-answering

- summarization

- text-retrieval

- question-answering

- text-classification

size_categories:

- 1K<n<10K

---

# Code civil, non-instruct (2025-06-04)

The objective of this project is to provide researchers, professionals and law students with simplified, up-to-date access to all French legal texts, enriched with a wealth of data to facilitate their integration into Community and European projects.

Normally, the data is refreshed daily on all legal codes, and aims to simplify the production of training sets and labeling pipelines for the development of free, open-source language models based on open data accessible to all.

## Concurrent reading of the LegalKit

[<img src="https://raw.githubusercontent.com/louisbrulenaudet/ragoon/main/assets/badge.svg" alt="Built with RAGoon" width="200" height="32"/>](https://github.com/louisbrulenaudet/ragoon)

To use all the legal data published on LegalKit, you can use RAGoon:

```bash

pip3 install ragoon

```

Then, you can load multiple datasets using this code snippet:

```python

# -*- coding: utf-8 -*-

from ragoon import load_datasets

req = [

"louisbrulenaudet/code-artisanat",

"louisbrulenaudet/code-action-sociale-familles",

# ...

]

datasets_list = load_datasets(

req=req,

streaming=False

)

dataset = datasets.concatenate_datasets(

datasets_list

)

```

### Data Structure for Article Information

This section provides a detailed overview of the elements contained within the `item` dictionary. Each key represents a specific attribute of the legal article, with its associated value providing detailed information.

1. **Basic Information**

- `ref` (string): **Reference** - A reference to the article, combining the title_main and the article `number` (e.g., "Code Général des Impôts, art. 123").

- `texte` (string): **Text Content** - The textual content of the article.

- `dateDebut` (string): **Start Date** - The date when the article came into effect.

- `dateFin` (string): **End Date** - The date when the article was terminated or superseded.

- `num` (string): **Article Number** - The number assigned to the article.

- `id` (string): **Article ID** - Unique identifier for the article.

- `cid` (string): **Chronical ID** - Chronical identifier for the article.

- `type` (string): **Type** - The type or classification of the document (e.g., "AUTONOME").

- `etat` (string): **Legal Status** - The current legal status of the article (e.g., "MODIFIE_MORT_NE").

2. **Content and Notes**

- `nota` (string): **Notes** - Additional notes or remarks associated with the article.

- `version_article` (string): **Article Version** - The version number of the article.

- `ordre` (integer): **Order Number** - A numerical value used to sort articles within their parent section.

3. **Additional Metadata**

- `conditionDiffere` (string): **Deferred Condition** - Specific conditions related to collective agreements.

- `infosComplementaires` (string): **Additional Information** - Extra information pertinent to the article.

- `surtitre` (string): **Subtitle** - A subtitle or additional title information related to collective agreements.

- `nature` (string): **Nature** - The nature or category of the document (e.g., "Article").

- `texteHtml` (string): **HTML Content** - The article's content in HTML format.

4. **Versioning and Extensions**

- `dateFinExtension` (string): **End Date of Extension** - The end date if the article has an extension.

- `versionPrecedente` (string): **Previous Version** - Identifier for the previous version of the article.

- `refInjection` (string): **Injection Reference** - Technical reference to identify the date of injection.

- `idTexte` (string): **Text ID** - Identifier for the legal text to which the article belongs.

- `idTechInjection` (string): **Technical Injection ID** - Technical identifier for the injected element.

5. **Origin and Relationships**

- `origine` (string): **Origin** - The origin of the document (e.g., "LEGI").

- `dateDebutExtension` (string): **Start Date of Extension** - The start date if the article has an extension.

- `idEliAlias` (string): **ELI Alias** - Alias for the European Legislation Identifier (ELI).

- `cidTexte` (string): **Text Chronical ID** - Chronical identifier of the text.

6. **Hierarchical Relationships**

- `sectionParentId` (string): **Parent Section ID** - Technical identifier of the parent section.

- `multipleVersions` (boolean): **Multiple Versions** - Indicates if the article has multiple versions.

- `comporteLiensSP` (boolean): **Contains Public Service Links** - Indicates if the article contains links to public services.

- `sectionParentTitre` (string): **Parent Section Title** - Title of the parent section (e.g., "I : Revenu imposable").

- `infosRestructurationBranche` (string): **Branch Restructuring Information** - Information about branch restructuring.

- `idEli` (string): **ELI ID** - European Legislation Identifier (ELI) for the article.

- `sectionParentCid` (string): **Parent Section Chronical ID** - Chronical identifier of the parent section.

7. **Additional Content and History**

- `numeroBo` (string): **Official Bulletin Number** - Number of the official bulletin where the article was published.

- `infosRestructurationBrancheHtml` (string): **Branch Restructuring Information (HTML)** - Branch restructuring information in HTML format.

- `historique` (string): **History** - Historical context or changes specific to collective agreements.

- `infosComplementairesHtml` (string): **Additional Information (HTML)** - Additional information in HTML format.

- `renvoi` (string): **Reference** - References to content within the article (e.g., "(1)").

- `fullSectionsTitre` (string): **Full Section Titles** - Concatenation of all titles in the parent chain.

- `notaHtml` (string): **Notes (HTML)** - Additional notes or remarks in HTML format.

- `inap` (string): **INAP** - A placeholder for INAP-specific information.

## Feedback

If you have any feedback, please reach out at [louisbrulenaudet@icloud.com](mailto:louisbrulenaudet@icloud.com). |

zephyr-1111/x_dataset_0711214 | zephyr-1111 | 2025-06-05T06:43:12Z | 1,031 | 0 | [

"task_categories:text-classification",

"task_categories:token-classification",

"task_categories:question-answering",

"task_categories:summarization",

"task_categories:text-generation",

"task_ids:sentiment-analysis",

"task_ids:topic-classification",

"task_ids:named-entity-recognition",

"task_ids:language-modeling",

"task_ids:text-scoring",

"task_ids:multi-class-classification",

"task_ids:multi-label-classification",

"task_ids:extractive-qa",

"task_ids:news-articles-summarization",

"multilinguality:multilingual",

"source_datasets:original",

"license:mit",

"size_categories:10M<n<100M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"text-classification",

"token-classification",

"question-answering",

"summarization",

"text-generation"

] | 2025-01-25T07:17:21Z | null | ---

license: mit

multilinguality:

- multilingual

source_datasets:

- original

task_categories:

- text-classification

- token-classification

- question-answering

- summarization

- text-generation

task_ids:

- sentiment-analysis

- topic-classification

- named-entity-recognition

- language-modeling

- text-scoring

- multi-class-classification

- multi-label-classification

- extractive-qa

- news-articles-summarization

---

# Bittensor Subnet 13 X (Twitter) Dataset

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/bittensor.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/macrocosmos-black.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

## Dataset Description

- **Repository:** zephyr-1111/x_dataset_0711214

- **Subnet:** Bittensor Subnet 13

- **Miner Hotkey:** 5HdpHHGwZGXgkzw68EtTbMpm819gpaVEgV9aUNrLuWfSCcpo

### Miner Data Compliance Agreement

In uploading this dataset, I am agreeing to the [Macrocosmos Miner Data Compliance Policy](https://github.com/macrocosm-os/data-universe/blob/add-miner-policy/docs/miner_policy.md).

### Dataset Summary

This dataset is part of the Bittensor Subnet 13 decentralized network, containing preprocessed data from X (formerly Twitter). The data is continuously updated by network miners, providing a real-time stream of tweets for various analytical and machine learning tasks.

For more information about the dataset, please visit the [official repository](https://github.com/macrocosm-os/data-universe).

### Supported Tasks

The versatility of this dataset allows researchers and data scientists to explore various aspects of social media dynamics and develop innovative applications. Users are encouraged to leverage this data creatively for their specific research or business needs.

For example:

- Sentiment Analysis

- Trend Detection

- Content Analysis

- User Behavior Modeling

### Languages

Primary language: Datasets are mostly English, but can be multilingual due to decentralized ways of creation.

## Dataset Structure

### Data Instances

Each instance represents a single tweet with the following fields:

### Data Fields

- `text` (string): The main content of the tweet.

- `label` (string): Sentiment or topic category of the tweet.

- `tweet_hashtags` (list): A list of hashtags used in the tweet. May be empty if no hashtags are present.

- `datetime` (string): The date when the tweet was posted.

- `username_encoded` (string): An encoded version of the username to maintain user privacy.

- `url_encoded` (string): An encoded version of any URLs included in the tweet. May be empty if no URLs are present.

### Data Splits

This dataset is continuously updated and does not have fixed splits. Users should create their own splits based on their requirements and the data's timestamp.

## Dataset Creation

### Source Data

Data is collected from public tweets on X (Twitter), adhering to the platform's terms of service and API usage guidelines.

### Personal and Sensitive Information

All usernames and URLs are encoded to protect user privacy. The dataset does not intentionally include personal or sensitive information.

## Considerations for Using the Data

### Social Impact and Biases

Users should be aware of potential biases inherent in X (Twitter) data, including demographic and content biases. This dataset reflects the content and opinions expressed on X and should not be considered a representative sample of the general population.

### Limitations

- Data quality may vary due to the decentralized nature of collection and preprocessing.

- The dataset may contain noise, spam, or irrelevant content typical of social media platforms.

- Temporal biases may exist due to real-time collection methods.

- The dataset is limited to public tweets and does not include private accounts or direct messages.

- Not all tweets contain hashtags or URLs.

## Additional Information

### Licensing Information

The dataset is released under the MIT license. The use of this dataset is also subject to X Terms of Use.

### Citation Information

If you use this dataset in your research, please cite it as follows:

```

@misc{zephyr-11112025datauniversex_dataset_0711214,

title={The Data Universe Datasets: The finest collection of social media data the web has to offer},

author={zephyr-1111},

year={2025},

url={https://huggingface.co/datasets/zephyr-1111/x_dataset_0711214},

}

```

### Contributions

To report issues or contribute to the dataset, please contact the miner or use the Bittensor Subnet 13 governance mechanisms.

## Dataset Statistics

[This section is automatically updated]

- **Total Instances:** 3427902

- **Date Range:** 2025-01-02T00:00:00Z to 2025-05-26T00:00:00Z

- **Last Updated:** 2025-06-05T06:43:11Z

### Data Distribution

- Tweets with hashtags: 4.55%

- Tweets without hashtags: 95.45%

### Top 10 Hashtags

For full statistics, please refer to the `stats.json` file in the repository.

| Rank | Topic | Total Count | Percentage |

|------|-------|-------------|-------------|

| 1 | NULL | 1082428 | 87.40% |

| 2 | #riyadh | 17585 | 1.42% |

| 3 | #箱根駅伝 | 8147 | 0.66% |

| 4 | #thameposeriesep9 | 7605 | 0.61% |

| 5 | #tiktok | 6897 | 0.56% |

| 6 | #ad | 5356 | 0.43% |

| 7 | #zelena | 4878 | 0.39% |

| 8 | #smackdown | 4844 | 0.39% |

| 9 | #कबीर_परमेश्वर_निर्वाण_दिवस | 4843 | 0.39% |

| 10 | #pr | 4139 | 0.33% |

## Update History

| Date | New Instances | Total Instances |

|------|---------------|-----------------|

| 2025-01-25T07:15:23Z | 414446 | 414446 |

| 2025-01-25T07:15:50Z | 414446 | 828892 |

| 2025-01-25T07:16:19Z | 453526 | 1282418 |

| 2025-01-25T07:16:50Z | 453526 | 1735944 |

| 2025-01-25T07:17:20Z | 453526 | 2189470 |

| 2025-01-25T07:17:51Z | 453526 | 2642996 |

| 2025-02-18T03:39:28Z | 471834 | 3114830 |

| 2025-06-05T06:43:11Z | 313072 | 3427902 |

|

abhayesian/miserable_roleplay_formatted | abhayesian | 2025-06-05T05:48:53Z | 32 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T03:38:27Z | null | ---

dataset_info:

features:

- name: prompt

dtype: string

- name: response

dtype: string

splits:

- name: train

num_bytes: 1434220

num_examples: 1000

download_size: 89589

dataset_size: 1434220

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

julioc-p/Question-Sparql | julioc-p | 2025-06-05T05:30:55Z | 220 | 3 | [

"task_categories:text-generation",

"language:en",

"language:de",

"language:he",

"language:kn",

"language:zh",

"language:es",

"language:it",

"language:fr",

"language:nl",

"language:ro",

"language:fa",

"language:ru",

"license:mit",

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"code"

] | [

"text-generation"

] | 2025-01-21T12:23:42Z | null | ---

license: mit

dataset_info:

features:

- name: text_query

dtype: string

- name: language

dtype: string

- name: sparql_query

dtype: string

- name: knowledge_graphs

dtype: string

- name: context

dtype: string

splits:

- name: train

num_bytes: 374237004

num_examples: 895166

- name: test

num_bytes: 230499

num_examples: 788

download_size: 97377947

dataset_size: 374467503

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

task_categories:

- text-generation

language:

- en

- de

- he

- kn

- zh

- es

- it

- fr

- nl

- ro

- fa

- ru

tags:

- code

size_categories:

- 100K<n<1M

--- |

robert-1111/x_dataset_040484 | robert-1111 | 2025-06-05T05:12:05Z | 1,108 | 0 | [

"task_categories:text-classification",

"task_categories:token-classification",

"task_categories:question-answering",

"task_categories:summarization",

"task_categories:text-generation",

"task_ids:sentiment-analysis",

"task_ids:topic-classification",

"task_ids:named-entity-recognition",

"task_ids:language-modeling",

"task_ids:text-scoring",

"task_ids:multi-class-classification",

"task_ids:multi-label-classification",

"task_ids:extractive-qa",

"task_ids:news-articles-summarization",

"multilinguality:multilingual",

"source_datasets:original",

"license:mit",

"size_categories:10M<n<100M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"text-classification",

"token-classification",

"question-answering",

"summarization",

"text-generation"

] | 2025-01-25T07:12:27Z | null | ---

license: mit

multilinguality:

- multilingual

source_datasets:

- original

task_categories:

- text-classification

- token-classification

- question-answering

- summarization

- text-generation

task_ids:

- sentiment-analysis

- topic-classification

- named-entity-recognition

- language-modeling

- text-scoring

- multi-class-classification

- multi-label-classification

- extractive-qa

- news-articles-summarization

---

# Bittensor Subnet 13 X (Twitter) Dataset

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/bittensor.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/macrocosmos-black.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

## Dataset Description

- **Repository:** robert-1111/x_dataset_040484

- **Subnet:** Bittensor Subnet 13

- **Miner Hotkey:** 5DMEDsCn1rgczUQsz9198S1Sed9MxxAWuC4hkAdHw2ieDuxZ

### Miner Data Compliance Agreement

In uploading this dataset, I am agreeing to the [Macrocosmos Miner Data Compliance Policy](https://github.com/macrocosm-os/data-universe/blob/add-miner-policy/docs/miner_policy.md).

### Dataset Summary

This dataset is part of the Bittensor Subnet 13 decentralized network, containing preprocessed data from X (formerly Twitter). The data is continuously updated by network miners, providing a real-time stream of tweets for various analytical and machine learning tasks.

For more information about the dataset, please visit the [official repository](https://github.com/macrocosm-os/data-universe).

### Supported Tasks

The versatility of this dataset allows researchers and data scientists to explore various aspects of social media dynamics and develop innovative applications. Users are encouraged to leverage this data creatively for their specific research or business needs.

For example:

- Sentiment Analysis

- Trend Detection

- Content Analysis

- User Behavior Modeling

### Languages

Primary language: Datasets are mostly English, but can be multilingual due to decentralized ways of creation.

## Dataset Structure

### Data Instances

Each instance represents a single tweet with the following fields:

### Data Fields

- `text` (string): The main content of the tweet.

- `label` (string): Sentiment or topic category of the tweet.

- `tweet_hashtags` (list): A list of hashtags used in the tweet. May be empty if no hashtags are present.

- `datetime` (string): The date when the tweet was posted.

- `username_encoded` (string): An encoded version of the username to maintain user privacy.

- `url_encoded` (string): An encoded version of any URLs included in the tweet. May be empty if no URLs are present.

### Data Splits

This dataset is continuously updated and does not have fixed splits. Users should create their own splits based on their requirements and the data's timestamp.

## Dataset Creation

### Source Data

Data is collected from public tweets on X (Twitter), adhering to the platform's terms of service and API usage guidelines.

### Personal and Sensitive Information

All usernames and URLs are encoded to protect user privacy. The dataset does not intentionally include personal or sensitive information.

## Considerations for Using the Data

### Social Impact and Biases

Users should be aware of potential biases inherent in X (Twitter) data, including demographic and content biases. This dataset reflects the content and opinions expressed on X and should not be considered a representative sample of the general population.

### Limitations

- Data quality may vary due to the decentralized nature of collection and preprocessing.

- The dataset may contain noise, spam, or irrelevant content typical of social media platforms.

- Temporal biases may exist due to real-time collection methods.

- The dataset is limited to public tweets and does not include private accounts or direct messages.

- Not all tweets contain hashtags or URLs.

## Additional Information

### Licensing Information

The dataset is released under the MIT license. The use of this dataset is also subject to X Terms of Use.

### Citation Information

If you use this dataset in your research, please cite it as follows:

```

@misc{robert-11112025datauniversex_dataset_040484,

title={The Data Universe Datasets: The finest collection of social media data the web has to offer},

author={robert-1111},

year={2025},

url={https://huggingface.co/datasets/robert-1111/x_dataset_040484},

}

```

### Contributions

To report issues or contribute to the dataset, please contact the miner or use the Bittensor Subnet 13 governance mechanisms.

## Dataset Statistics

[This section is automatically updated]

- **Total Instances:** 3378687

- **Date Range:** 2025-01-02T00:00:00Z to 2025-05-26T00:00:00Z

- **Last Updated:** 2025-06-05T05:12:04Z

### Data Distribution

- Tweets with hashtags: 4.33%

- Tweets without hashtags: 95.67%

### Top 10 Hashtags

For full statistics, please refer to the `stats.json` file in the repository.

| Rank | Topic | Total Count | Percentage |

|------|-------|-------------|-------------|

| 1 | NULL | 1082102 | 88.10% |

| 2 | #riyadh | 17177 | 1.40% |

| 3 | #箱根駅伝 | 8147 | 0.66% |

| 4 | #thameposeriesep9 | 7605 | 0.62% |

| 5 | #tiktok | 6785 | 0.55% |

| 6 | #ad | 5259 | 0.43% |

| 7 | #zelena | 4878 | 0.40% |

| 8 | #smackdown | 4844 | 0.39% |

| 9 | #कबीर_परमेश्वर_निर्वाण_दिवस | 4843 | 0.39% |

| 10 | #pr | 3795 | 0.31% |

## Update History

| Date | New Instances | Total Instances |

|------|---------------|-----------------|

| 2025-01-25T07:10:27Z | 414446 | 414446 |

| 2025-01-25T07:10:56Z | 414446 | 828892 |

| 2025-01-25T07:11:27Z | 414446 | 1243338 |

| 2025-01-25T07:11:56Z | 453526 | 1696864 |

| 2025-01-25T07:12:25Z | 453526 | 2150390 |

| 2025-01-25T07:12:56Z | 453526 | 2603916 |

| 2025-02-18T03:39:28Z | 471834 | 3075750 |

| 2025-06-05T05:12:04Z | 302937 | 3378687 |

|

james-1111/x_dataset_0308199 | james-1111 | 2025-06-05T04:46:15Z | 1,050 | 0 | [

"task_categories:text-classification",

"task_categories:token-classification",

"task_categories:question-answering",

"task_categories:summarization",

"task_categories:text-generation",

"task_ids:sentiment-analysis",

"task_ids:topic-classification",

"task_ids:named-entity-recognition",

"task_ids:language-modeling",

"task_ids:text-scoring",

"task_ids:multi-class-classification",

"task_ids:multi-label-classification",

"task_ids:extractive-qa",

"task_ids:news-articles-summarization",

"multilinguality:multilingual",

"source_datasets:original",

"license:mit",

"size_categories:10M<n<100M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"text-classification",

"token-classification",

"question-answering",

"summarization",

"text-generation"

] | 2025-01-25T07:08:58Z | null | ---

license: mit

multilinguality:

- multilingual

source_datasets:

- original

task_categories:

- text-classification

- token-classification

- question-answering

- summarization

- text-generation

task_ids:

- sentiment-analysis

- topic-classification

- named-entity-recognition

- language-modeling

- text-scoring

- multi-class-classification

- multi-label-classification

- extractive-qa

- news-articles-summarization

---

# Bittensor Subnet 13 X (Twitter) Dataset

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/bittensor.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/macrocosmos-black.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

## Dataset Description

- **Repository:** james-1111/x_dataset_0308199

- **Subnet:** Bittensor Subnet 13

- **Miner Hotkey:** 5FA4nv5SvDRNitsdPhxo8fbFxZU5uvnPZGaYYdMH6ghLz49S

### Miner Data Compliance Agreement

In uploading this dataset, I am agreeing to the [Macrocosmos Miner Data Compliance Policy](https://github.com/macrocosm-os/data-universe/blob/add-miner-policy/docs/miner_policy.md).

### Dataset Summary

This dataset is part of the Bittensor Subnet 13 decentralized network, containing preprocessed data from X (formerly Twitter). The data is continuously updated by network miners, providing a real-time stream of tweets for various analytical and machine learning tasks.

For more information about the dataset, please visit the [official repository](https://github.com/macrocosm-os/data-universe).

### Supported Tasks

The versatility of this dataset allows researchers and data scientists to explore various aspects of social media dynamics and develop innovative applications. Users are encouraged to leverage this data creatively for their specific research or business needs.

For example:

- Sentiment Analysis

- Trend Detection

- Content Analysis

- User Behavior Modeling

### Languages

Primary language: Datasets are mostly English, but can be multilingual due to decentralized ways of creation.

## Dataset Structure

### Data Instances

Each instance represents a single tweet with the following fields:

### Data Fields

- `text` (string): The main content of the tweet.

- `label` (string): Sentiment or topic category of the tweet.

- `tweet_hashtags` (list): A list of hashtags used in the tweet. May be empty if no hashtags are present.

- `datetime` (string): The date when the tweet was posted.

- `username_encoded` (string): An encoded version of the username to maintain user privacy.

- `url_encoded` (string): An encoded version of any URLs included in the tweet. May be empty if no URLs are present.

### Data Splits

This dataset is continuously updated and does not have fixed splits. Users should create their own splits based on their requirements and the data's timestamp.

## Dataset Creation

### Source Data

Data is collected from public tweets on X (Twitter), adhering to the platform's terms of service and API usage guidelines.

### Personal and Sensitive Information

All usernames and URLs are encoded to protect user privacy. The dataset does not intentionally include personal or sensitive information.

## Considerations for Using the Data

### Social Impact and Biases

Users should be aware of potential biases inherent in X (Twitter) data, including demographic and content biases. This dataset reflects the content and opinions expressed on X and should not be considered a representative sample of the general population.

### Limitations

- Data quality may vary due to the decentralized nature of collection and preprocessing.

- The dataset may contain noise, spam, or irrelevant content typical of social media platforms.

- Temporal biases may exist due to real-time collection methods.

- The dataset is limited to public tweets and does not include private accounts or direct messages.

- Not all tweets contain hashtags or URLs.

## Additional Information

### Licensing Information

The dataset is released under the MIT license. The use of this dataset is also subject to X Terms of Use.

### Citation Information

If you use this dataset in your research, please cite it as follows:

```

@misc{james-11112025datauniversex_dataset_0308199,

title={The Data Universe Datasets: The finest collection of social media data the web has to offer},

author={james-1111},

year={2025},

url={https://huggingface.co/datasets/james-1111/x_dataset_0308199},

}

```

### Contributions

To report issues or contribute to the dataset, please contact the miner or use the Bittensor Subnet 13 governance mechanisms.

## Dataset Statistics

[This section is automatically updated]

- **Total Instances:** 3030018

- **Date Range:** 2025-01-02T00:00:00Z to 2025-05-26T00:00:00Z

- **Last Updated:** 2025-06-05T04:46:15Z

### Data Distribution

- Tweets with hashtags: 4.65%

- Tweets without hashtags: 95.35%

### Top 10 Hashtags

For full statistics, please refer to the `stats.json` file in the repository.

| Rank | Topic | Total Count | Percentage |

|------|-------|-------------|-------------|

| 1 | NULL | 1081698 | 88.48% |

| 2 | #riyadh | 17834 | 1.46% |

| 3 | #箱根駅伝 | 8147 | 0.67% |

| 4 | #thameposeriesep9 | 7605 | 0.62% |

| 5 | #tiktok | 7048 | 0.58% |

| 6 | #ad | 5239 | 0.43% |

| 7 | #zelena | 4878 | 0.40% |

| 8 | #smackdown | 4844 | 0.40% |

| 9 | #कबीर_परमेश्वर_निर्वाण_दिवस | 4843 | 0.40% |

| 10 | #pr | 4181 | 0.34% |

## Update History

| Date | New Instances | Total Instances |

|------|---------------|-----------------|

| 2025-01-25T07:07:31Z | 453526 | 453526 |

| 2025-01-25T07:07:59Z | 453526 | 907052 |

| 2025-01-25T07:08:28Z | 453526 | 1360578 |

| 2025-01-25T07:08:56Z | 446896 | 1807474 |

| 2025-01-25T07:09:24Z | 446896 | 2254370 |

| 2025-02-18T03:38:53Z | 467290 | 2721660 |

| 2025-06-05T04:46:15Z | 308358 | 3030018 |

|

james-1111/x_dataset_0303241 | james-1111 | 2025-06-05T04:41:55Z | 1,047 | 0 | [

"task_categories:text-classification",

"task_categories:token-classification",

"task_categories:question-answering",

"task_categories:summarization",

"task_categories:text-generation",

"task_ids:sentiment-analysis",

"task_ids:topic-classification",

"task_ids:named-entity-recognition",

"task_ids:language-modeling",

"task_ids:text-scoring",

"task_ids:multi-class-classification",

"task_ids:multi-label-classification",

"task_ids:extractive-qa",

"task_ids:news-articles-summarization",

"multilinguality:multilingual",

"source_datasets:original",

"license:mit",

"size_categories:10M<n<100M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"text-classification",

"token-classification",

"question-answering",

"summarization",

"text-generation"

] | 2025-01-25T07:11:23Z | null | ---

license: mit

multilinguality:

- multilingual

source_datasets:

- original

task_categories:

- text-classification

- token-classification

- question-answering

- summarization

- text-generation

task_ids:

- sentiment-analysis

- topic-classification

- named-entity-recognition

- language-modeling

- text-scoring

- multi-class-classification

- multi-label-classification

- extractive-qa

- news-articles-summarization

---

# Bittensor Subnet 13 X (Twitter) Dataset

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/bittensor.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/macrocosmos-black.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

## Dataset Description

- **Repository:** james-1111/x_dataset_0303241

- **Subnet:** Bittensor Subnet 13

- **Miner Hotkey:** 5HMi2tcDTWxckR86mn6d4cmM3dCGwoRdA4PvQNLcnSqjw86k

### Miner Data Compliance Agreement

In uploading this dataset, I am agreeing to the [Macrocosmos Miner Data Compliance Policy](https://github.com/macrocosm-os/data-universe/blob/add-miner-policy/docs/miner_policy.md).

### Dataset Summary

This dataset is part of the Bittensor Subnet 13 decentralized network, containing preprocessed data from X (formerly Twitter). The data is continuously updated by network miners, providing a real-time stream of tweets for various analytical and machine learning tasks.

For more information about the dataset, please visit the [official repository](https://github.com/macrocosm-os/data-universe).

### Supported Tasks

The versatility of this dataset allows researchers and data scientists to explore various aspects of social media dynamics and develop innovative applications. Users are encouraged to leverage this data creatively for their specific research or business needs.

For example:

- Sentiment Analysis

- Trend Detection

- Content Analysis

- User Behavior Modeling

### Languages

Primary language: Datasets are mostly English, but can be multilingual due to decentralized ways of creation.

## Dataset Structure

### Data Instances

Each instance represents a single tweet with the following fields:

### Data Fields

- `text` (string): The main content of the tweet.

- `label` (string): Sentiment or topic category of the tweet.

- `tweet_hashtags` (list): A list of hashtags used in the tweet. May be empty if no hashtags are present.

- `datetime` (string): The date when the tweet was posted.

- `username_encoded` (string): An encoded version of the username to maintain user privacy.

- `url_encoded` (string): An encoded version of any URLs included in the tweet. May be empty if no URLs are present.

### Data Splits

This dataset is continuously updated and does not have fixed splits. Users should create their own splits based on their requirements and the data's timestamp.

## Dataset Creation

### Source Data

Data is collected from public tweets on X (Twitter), adhering to the platform's terms of service and API usage guidelines.

### Personal and Sensitive Information

All usernames and URLs are encoded to protect user privacy. The dataset does not intentionally include personal or sensitive information.

## Considerations for Using the Data

### Social Impact and Biases

Users should be aware of potential biases inherent in X (Twitter) data, including demographic and content biases. This dataset reflects the content and opinions expressed on X and should not be considered a representative sample of the general population.

### Limitations

- Data quality may vary due to the decentralized nature of collection and preprocessing.

- The dataset may contain noise, spam, or irrelevant content typical of social media platforms.

- Temporal biases may exist due to real-time collection methods.

- The dataset is limited to public tweets and does not include private accounts or direct messages.

- Not all tweets contain hashtags or URLs.

## Additional Information

### Licensing Information

The dataset is released under the MIT license. The use of this dataset is also subject to X Terms of Use.

### Citation Information

If you use this dataset in your research, please cite it as follows:

```

@misc{james-11112025datauniversex_dataset_0303241,

title={The Data Universe Datasets: The finest collection of social media data the web has to offer},

author={james-1111},

year={2025},

url={https://huggingface.co/datasets/james-1111/x_dataset_0303241},

}

```

### Contributions

To report issues or contribute to the dataset, please contact the miner or use the Bittensor Subnet 13 governance mechanisms.

## Dataset Statistics

[This section is automatically updated]

- **Total Instances:** 5258113

- **Date Range:** 2025-01-02T00:00:00Z to 2025-05-26T00:00:00Z

- **Last Updated:** 2025-06-05T04:41:55Z

### Data Distribution

- Tweets with hashtags: 2.56%

- Tweets without hashtags: 97.44%

### Top 10 Hashtags

For full statistics, please refer to the `stats.json` file in the repository.

| Rank | Topic | Total Count | Percentage |

|------|-------|-------------|-------------|

| 1 | NULL | 1081698 | 88.94% |

| 2 | #riyadh | 17834 | 1.47% |

| 3 | #箱根駅伝 | 8147 | 0.67% |

| 4 | #thameposeriesep9 | 7605 | 0.63% |

| 5 | #tiktok | 7048 | 0.58% |

| 6 | #ad | 5239 | 0.43% |

| 7 | #zelena | 4878 | 0.40% |

| 8 | #smackdown | 4844 | 0.40% |

| 9 | #कबीर_परमेश्वर_निर्वाण_दिवस | 4843 | 0.40% |

| 10 | #pr | 4181 | 0.34% |

## Update History

| Date | New Instances | Total Instances |

|------|---------------|-----------------|

| 2025-01-25T07:07:31Z | 453526 | 453526 |

| 2025-01-25T07:07:59Z | 453526 | 907052 |

| 2025-01-25T07:08:28Z | 453526 | 1360578 |

| 2025-01-25T07:08:56Z | 446896 | 1807474 |

| 2025-01-25T07:09:24Z | 446896 | 2254370 |

| 2025-01-25T07:09:52Z | 446896 | 2701266 |

| 2025-01-25T07:10:21Z | 446896 | 3148162 |

| 2025-01-25T07:10:51Z | 446896 | 3595058 |

| 2025-01-25T07:11:21Z | 446896 | 4041954 |

| 2025-01-25T07:11:51Z | 446896 | 4488850 |

| 2025-02-18T03:41:26Z | 467290 | 4956140 |

| 2025-06-05T04:41:55Z | 301973 | 5258113 |

|

JeiGeek/dataset_landmine_prueba | JeiGeek | 2025-06-05T03:11:28Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T03:11:18Z | null | ---

dataset_info:

features:

- name: image

dtype: image

- name: label

dtype:

class_label:

names:

'0': mina

'1': no_mina

splits:

- name: train

num_bytes: 213710273.234

num_examples: 1301

download_size: 195376491

dataset_size: 213710273.234

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

arpit-tiwari/kannada-asr-corpus | arpit-tiwari | 2025-06-05T02:44:26Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:audio",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-04T16:02:09Z | null | ---

dataset_info:

features:

- name: audio

dtype:

audio:

sampling_rate: 16000

- name: sentence

dtype: string

- name: duration

dtype: float64

splits:

- name: train

num_bytes: 17109900141.583733

num_examples: 44944

- name: validation

num_bytes: 2300073235.028094

num_examples: 6000

- name: test

num_bytes: 1491597492.9157188

num_examples: 3891

download_size: 19311235071

dataset_size: 20901570869.527546

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: validation

path: data/validation-*

- split: test

path: data/test-*

---

|

VisualSphinx/VisualSphinx-V1-Rules | VisualSphinx | 2025-06-04T23:36:33Z | 136 | 0 | [

"task_categories:image-text-to-text",

"task_categories:visual-question-answering",

"language:en",

"license:cc-by-nc-4.0",

"size_categories:100K<n<1M",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"arxiv:2505.23977",

"region:us"

] | [

"image-text-to-text",

"visual-question-answering"

] | 2025-05-12T21:27:53Z | null | ---

language:

- en

license: cc-by-nc-4.0

task_categories:

- image-text-to-text

- visual-question-answering

dataset_info:

features:

- name: id

dtype: int32

- name: rule_content

sequence: string

- name: generation

dtype: int32

- name: parents

sequence: int32

- name: mutated

dtype: bool

- name: question_type

dtype: string

- name: knowledge_point

dtype: string

- name: times_used

dtype: int32

- name: creation_method

dtype: string

- name: format_score

dtype: int32

- name: content_quality_score

dtype: int32

- name: feasibility_score

dtype: int32

splits:

- name: synthetic_rules

num_bytes: 60383953

num_examples: 60339

- name: rules_filted

num_bytes: 40152007

num_examples: 41287

download_size: 48914349

dataset_size: 100535960

configs:

- config_name: default

data_files:

- split: synthetic_rules

path: data/synthetic_rules-*

- split: rules_filted

path: data/rules_filted-*

---

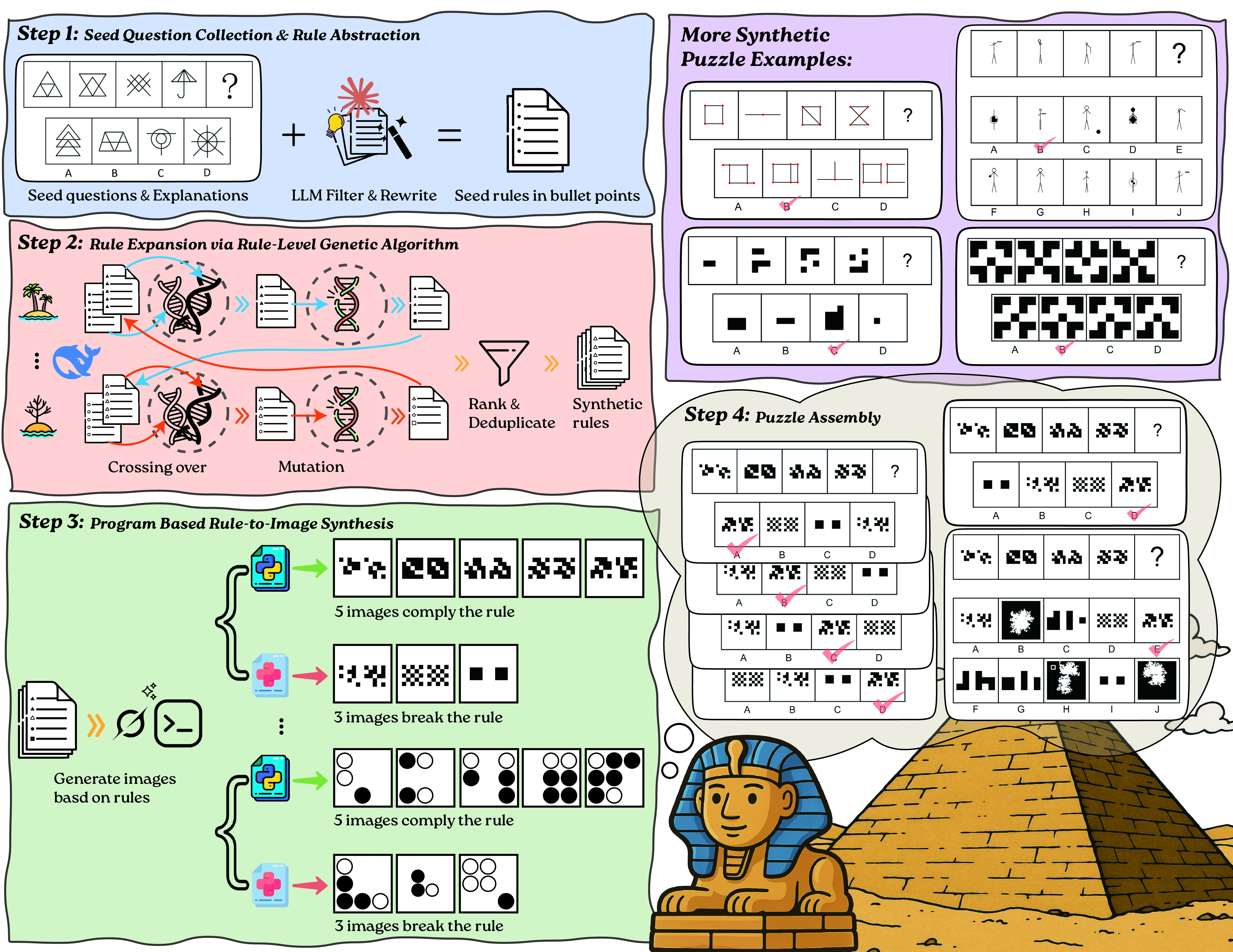

# 🦁 VisualSphinx: Large-Scale Synthetic Vision Logic Puzzles for RL

VisualSphinx is the largest fully-synthetic open-source dataset providing vision logic puzzles. It consists of over **660K** automatically generated logical visual puzzles. Each logical puzzle is grounded with an interpretable rule and accompanied by both correct answers and plausible distractors.

- 🌐 [Project Website](https://visualsphinx.github.io/) - Learn more about VisualSphinx

- 📖 [Technical Report](https://arxiv.org/abs/2505.23977) - Discover the methodology and technical details behind VisualSphinx

- 🔧 [Github Repo](https://github.com/VisualSphinx/VisualSphinx) - Access the complete pipeline used to produce VisualSphinx-V1

- 🤗 HF Datasets:

- [VisualSphinx-V1 (Raw)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-V1-Raw);

- [VisualSphinx-V1 (For RL)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-V1-RL-20K);

- [VisualSphinx-V1 (Benchmark)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-V1-Benchmark);

- [VisualSphinx (Seeds)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-Seeds);

- [VisualSphinx (Rules)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-V1-Rules). [📍| You are here!]

## 📊 Dataset Details

### 🎯 Purpose

This dataset contains the **synthetic logical rules** that power the next step VisualSphinx generation. These rules represent the core logical patterns and constraints used to automatically generate thousands of coherent visual logic puzzles with interpretable reasoning paths.

### 📈 Dataset Splits

- **`synthetic_rules`**: Contains all generated synthetic rules with complete metadata

- **`rules_filted`**: Contains only high-quality rules that passed filtering criteria

### 🏗️ Dataset Structure

Each rule in the dataset contains the following fields:

| Field | Type | Description |

|-------|------|-------------|

| `id` | `int32` | Unique identifier for each rule |

| `rule_content` | `Sequence[string]` | List of logical statements defining the rule |

| `generation` | `int32` | Generation number in the evolutionary process |

| `parents` | `Sequence[int32]` | IDs of parent rules (for rule evolution tracking) |

| `mutated` | `bool` | Whether this rule was created through mutation |

| `question_type` | `string` | Category of questions this rule generates |

| `knowledge_point` | `string` | Associated knowledge domain or concept |

| `times_used` | `int32` | Number of times this rule was used to generate puzzles |

| `creation_method` | `string` | Method used to create this rule (e.g., manual, genetic, hybrid) |

| `format_score` | `int32` | Structural formatting quality score (1-10 scale) |

| `content_quality_score` | `int32` | Logical coherence and clarity score (1-10 scale) |

| `feasibility_score` | `int32` | Practical applicability score (1-10 scale) |

### 📏 Dataset Statistics

- **Total Rules**: Comprehensive collection of synthetic logical rules

- **Rule Evolution**: Multi-generational rule development with parent-child relationships

- **Quality Control**: Triple-scored validation (format, content, feasibility)

- **Usage Tracking**: Statistics on rule effectiveness and popularity

### 🧬 Rule Evolution Process

The dataset captures a complete evolutionary process:

- **Inheritance**: Child rules inherit characteristics from parent rules

- **Mutation**: Systematic variations create new rule variants

- **Selection**: Quality scores determine rule survival and usage

- **Genealogy**: Full family trees of rule development preserved

## 🔧 Other Information

**License**: Please follow [CC BY-NC 4.0](https://creativecommons.org/licenses/by-nc/4.0/deed.en).

**Contact**: Please contact [Yichen](mailto:yfeng42@uw.edu) by email.

## 📚 Citation

If you find the data or code useful, please cite:

```

@misc{feng2025visualsphinx,

title={VisualSphinx: Large-Scale Synthetic Vision Logic Puzzles for RL},

author={Yichen Feng and Zhangchen Xu and Fengqing Jiang and Yuetai Li and Bhaskar Ramasubramanian and Luyao Niu and Bill Yuchen Lin and Radha Poovendran},

year={2025},

eprint={2505.23977},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2505.23977},

}

``` |

fedlib/PubMedQA | fedlib | 2025-06-04T21:09:47Z | 0 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-04T15:47:59Z | null | ---

dataset_info:

features:

- name: question

dtype: string

- name: context

sequence: string

- name: answer

dtype: string

- name: text

dtype: string

splits:

- name: train

num_bytes: 629641428

num_examples: 200000

download_size: 340002977

dataset_size: 629641428

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Ahren09/REAL-MM-RAG_TechSlides | Ahren09 | 2025-06-04T20:24:13Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-04T20:23:53Z | null | ---

dataset_info:

features:

- name: id

dtype: int64

- name: image

dtype: image

- name: image_filename

dtype: string

- name: query

dtype: string

- name: rephrase_level_1

dtype: string

- name: rephrase_level_2

dtype: string

- name: rephrase_level_3

dtype: string

- name: answer

dtype: string

splits:

- name: train

num_bytes: 418434540.6362247

num_examples: 1083

- name: validation

num_bytes: 52159430.2732136

num_examples: 135

- name: test

num_bytes: 52545796.42338555

num_examples: 136

download_size: 247898152

dataset_size: 523139767.3328239

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: validation

path: data/validation-*

- split: test

path: data/test-*

---

|

TestCase-Eval/submission_lite | TestCase-Eval | 2025-06-04T19:57:49Z | 136 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-04-01T12:24:08Z | null | ---

dataset_info:

features:

- name: id

dtype: int64

- name: language

dtype: string

- name: verdict

dtype: string

- name: source

dtype: string

- name: problem_id

dtype: string

- name: type

dtype: string

- name: difficulty

dtype: string

splits:

- name: train

num_bytes: 28548411

num_examples: 12775

download_size: 10531794

dataset_size: 28548411

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Eathus/cwe_view1000_list_gpt_few_cwe_desc_fix | Eathus | 2025-06-04T19:47:15Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-04T19:47:12Z | null | ---

dataset_info:

features:

- name: ID

dtype: string

- name: Name

dtype: string

- name: Abstraction

dtype: string

- name: Structure

dtype: string

- name: Status

dtype: string

- name: Description

dtype: string

- name: ExtendedDescription

dtype: string

- name: ApplicablePlatforms

list:

- name: Class

dtype: string

- name: Name

dtype: string

- name: Prevalence

dtype: string

- name: Type

dtype: string

- name: AlternateTerms

list:

- name: Description

dtype: string

- name: Term

dtype: string

- name: ModesOfIntroduction

list:

- name: Note

dtype: string

- name: Phase

dtype: string

- name: CommonConsequences

list:

- name: Impact

sequence: string

- name: Likelihood

sequence: string

- name: Note

dtype: string

- name: Scope

sequence: string

- name: PotentialMitigations

list:

- name: Description

dtype: string

- name: Effectiveness

dtype: string

- name: EffectivenessNotes

dtype: string

- name: MitigationID

dtype: string

- name: Phase

sequence: string

- name: Strategy

dtype: string

- name: ObservedExamples

list:

- name: Description

dtype: string

- name: Link

dtype: string

- name: Reference

dtype: string

- name: AffectedResources

sequence: string

- name: TaxonomyMappings

list:

- name: EntryID

dtype: string

- name: EntryName

dtype: string

- name: MappingFit

dtype: string

- name: TaxonomyName

dtype: string

- name: RelatedAttackPatterns

sequence: string

- name: References

list:

- name: Authors

sequence: string

- name: Edition

dtype: string

- name: ExternalReferenceID

dtype: string

- name: Publication

dtype: string

- name: PublicationDay

dtype: string

- name: PublicationMonth

dtype: string

- name: PublicationYear

dtype: string

- name: Publisher

dtype: string

- name: Section

dtype: string

- name: Title

dtype: string

- name: URL

dtype: string

- name: URLDate

dtype: string

- name: Notes

list:

- name: Note

dtype: string

- name: Type

dtype: string

- name: ContentHistory

list:

- name: ContributionComment

dtype: string

- name: ContributionDate

dtype: string

- name: ContributionName

dtype: string

- name: ContributionOrganization

dtype: string

- name: ContributionReleaseDate

dtype: string

- name: ContributionType

dtype: string

- name: ContributionVersion

dtype: string

- name: Date

dtype: string

- name: ModificationComment

dtype: string

- name: ModificationDate

dtype: string

- name: ModificationName

dtype: string

- name: ModificationOrganization

dtype: string

- name: ModificationReleaseDate

dtype: string

- name: ModificationVersion

dtype: string

- name: PreviousEntryName

dtype: string

- name: SubmissionComment

dtype: string

- name: SubmissionDate

dtype: string

- name: SubmissionName

dtype: string

- name: SubmissionOrganization

dtype: string

- name: SubmissionReleaseDate

dtype: string

- name: SubmissionVersion

dtype: string

- name: Type

dtype: string

- name: Version

dtype: string

- name: MappingNotes_Usage

dtype: string

- name: MappingNotes_Rationale

dtype: string

- name: MappingNotes_Comments

dtype: string

- name: MappingNotes_Reasons

sequence: string

- name: MappingNotes_Suggestions

list:

- name: Comment

dtype: string