datasetId | author | last_modified | downloads | likes | tags | task_categories | createdAt | trending_score | card |

|---|---|---|---|---|---|---|---|---|---|

MWeste/large_MCQA | MWeste | 2025-06-05T15:08:28Z | 0 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T15:08:16Z | null | ---

dataset_info:

features:

- name: question

dtype: string

- name: choices

dtype: string

- name: answer

dtype: string

- name: dataset

dtype: string

- name: topic

dtype: string

splits:

- name: train

num_bytes: 238508989

num_examples: 737439

download_size: 119978441

dataset_size: 238508989

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Cadenza-Labs/apollo-llama3.3-sandbagging-v2-wmdp-mmlu | Cadenza-Labs | 2025-06-05T13:06:41Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T13:06:39Z | null | ---

dataset_info:

features:

- name: messages

list:

- name: content

dtype: string

- name: role

dtype: string

- name: dishonest

dtype: bool

splits:

- name: train

num_bytes: 4854583

num_examples: 932

download_size: 1326342

dataset_size: 4854583

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Cadenza-Labs/apollo-llama3.3-ai-audit-a1-2-reasoning | Cadenza-Labs | 2025-06-05T13:06:31Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T13:06:30Z | null | ---

dataset_info:

features:

- name: messages

list:

- name: content

dtype: string

- name: role

dtype: string

- name: dishonest

dtype: bool

splits:

- name: train

num_bytes: 1525081

num_examples: 50

download_size: 64965

dataset_size: 1525081

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Cadenza-Labs/apollo-llama3.3-ai-audit-a1-2 | Cadenza-Labs | 2025-06-05T13:06:29Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T13:06:27Z | null | ---

dataset_info:

features:

- name: messages

list:

- name: content

dtype: string

- name: role

dtype: string

- name: dishonest

dtype: bool

splits:

- name: train

num_bytes: 1173675

num_examples: 50

download_size: 45658

dataset_size: 1173675

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

amathislab/LEMONADE | amathislab | 2025-06-05T11:50:07Z | 52 | 0 | [

"task_categories:question-answering",

"language:en",

"license:mit",

"size_categories:10K<n<100K",

"arxiv:2506.01608",

"region:us",

"behavior",

"motion",

"human",

"egocentric",

"language",

"llm",

"vlm",

"esk"

] | [

"question-answering"

] | 2025-04-25T11:52:28Z | null | ---

license: mit

language:

- en

tags:

- behavior

- motion

- human

- egocentric

- language

- llm

- vlm

- esk

size_categories:

- 10K<n<100K

task_categories:

- question-answering

---

# 🍋 EPFL-Smart-Kitchen: Lemonade benchmark

## 📚 Introduction

We introduce Lemonade: **L**anguage models **E**valuation of **MO**tion a**N**d **A**ction-**D**riven **E**nquiries.

Lemonade consists of <span style="color: orange;">36,521</span> closed-ended QA pairs linked to egocentric video clips, categorized in three groups and six subcategories. <span style="color: orange;">18,857</span> QAs focus on behavior understanding, leveraging the rich ground truth behavior annotations of the EPFL-Smart Kitchen to interrogate models about perceived actions <span style="color: tomato;">(Perception)</span> and reason over unseen behaviors <span style="color: tomato;">(Reasoning)</span>. <span style="color: orange;">8,210</span> QAs involve longer video clips, challenging models in summarization <span style="color: gold;">(Summarization)</span> and session-level inference <span style="color: gold;">(Session properties)</span>. The remaining <span style="color: orange;">9,463</span> QAs leverage the 3D pose estimation data to infer hand shapes, joint angles <span style="color: skyblue;">(Physical attributes)</span>, or trajectory velocities <span style="color: skyblue;">(Kinematics)</span> from visual information.

## 💾 Content

The current repository contains all egocentric videos recorded in the EPFL-Smart-Kitchen-30 dataset and the question answer pairs of the Lemonade benchmark. Please refer to the [main GitHub repository](https://github.com/amathislab/EPFL-Smart-Kitchen#) to find the other benchmarks and links to download other modalities of the EPFL-Smart-Kitchen-30 dataset.

### 🗃️ Repository structure

```

Lemonade

├── MCQs

| └── lemonade_benchmark.csv

├── videos

| ├── YH2002_2023_12_04_10_15_23_hololens.mp4

| └── ..

└── README.md

```

`lemonade_benchmark.csv` : Table with the following fields:

**Question** : Question to be answered. <br>

**QID** : Question identifier, an integer from 0 to 30. <br>

**Answers** : A list of possible answers to the question. This can be a multiple-choice set or open-ended responses. <br>

**Correct Answer** : The answer that is deemed correct from the list of provided answers. <br>

**Clip** : A reference to the video clip related to the question. <br>

**Start** : The timestamp (in frames) in the clip where the question context begins. <br>

**End** : The timestamp (in frames) in the clip where the question context ends. <br>

**Category** : The broad topic under which the question falls (Behavior understanding, Long-term understanding or Motion and Biomechanics). <br>

**Subcategory** : A more refined classification within the category (Perception, Reasoning, Summarization, Session properties, Physical attributes, Kinematics). <br>

**Difficulty** : The complexity level of the question (e.g., Easy, Medium, Hard).

`videos` : Folder with all egocentric videos from the EPFL-Smart-Kitchen-30 benchmark. Video names are structured as `[Participant_ID]_[Session_name]_hololens.mp4`.

> We refer the reader to the associated publication for details about data processing and tasks description.
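As a minimal sketch of how the fields above might be consumed, the snippet below reads rows with Python's standard `csv` module and splits a video file name into participant and session. The sample row is invented for illustration. Two things are assumptions rather than documented facts: that `Answers` is stored as a Python-style list literal, and that `Start` and `End` are integers. Check the actual CSV before relying on either.

```python
import ast
import csv
import io

# Hypothetical sample in the documented column layout (not real benchmark data).
SAMPLE_CSV = '''Question,QID,Answers,Correct Answer,Clip,Start,End,Category,Subcategory,Difficulty
"What is the person doing?",0,"['Cutting', 'Stirring']",Cutting,YH2002_2023_12_04_10_15_23,30,120,Behavior understanding,Perception,Easy
'''


def load_rows(handle):
    """Read benchmark rows and convert the numeric and list-valued fields."""
    rows = []
    for row in csv.DictReader(handle):
        row["QID"] = int(row["QID"])
        row["Start"] = int(row["Start"])  # frame index (assumed integer)
        row["End"] = int(row["End"])      # frame index (assumed integer)
        # Assumption: the answer list is serialized as a Python list literal.
        row["Answers"] = ast.literal_eval(row["Answers"])
        rows.append(row)
    return rows


def split_video_name(filename):
    """Split `[Participant_ID]_[Session_name]_hololens.mp4` into its two parts."""
    suffix = "_hololens.mp4"
    if not filename.endswith(suffix):
        raise ValueError(f"unexpected video name: {filename}")
    participant, session = filename[: -len(suffix)].split("_", 1)
    return participant, session


rows = load_rows(io.StringIO(SAMPLE_CSV))
```

For the real file, `load_rows(open("MCQs/lemonade_benchmark.csv", newline="", encoding="utf-8"))` would be the equivalent call, given the repository layout shown above.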

## 📈 Evaluation results

## 🌈 Usage

The benchmark can be evaluated with the following GitHub repository: [https://github.com/amathislab/lmms-eval-lemonade](https://github.com/amathislab/lmms-eval-lemonade)

## 🌟 Citations

Please cite our work!

```

@misc{bonnetto2025epflsmartkitchen,

title={EPFL-Smart-Kitchen-30: Densely annotated cooking dataset with 3D kinematics to challenge video and language models},

author={Andy Bonnetto and Haozhe Qi and Franklin Leong and Matea Tashkovska and Mahdi Rad and Solaiman Shokur and Friedhelm Hummel and Silvestro Micera and Marc Pollefeys and Alexander Mathis},

year={2025},

eprint={2506.01608},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2506.01608},

}

```

## ❤️ Acknowledgments

Our work was funded by EPFL, Swiss SNF grant (320030-227871), Microsoft Swiss Joint Research Center and a Boehringer Ingelheim Fonds PhD stipend (H.Q.). We are grateful to the Brain Mind Institute for providing funds for hardware and to the Neuro-X Institute for providing funds for services.

|

Jeevesh2009/so101_test | Jeevesh2009 | 2025-06-05T10:32:27Z | 448 | 0 | [

"task_categories:robotics",

"license:apache-2.0",

"size_categories:10K<n<100K",

"format:parquet",

"modality:tabular",

"modality:timeseries",

"modality:video",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us",

"LeRobot",

"so101",

"tutorial"

] | [

"robotics"

] | 2025-05-26T12:08:55Z | null | ---

license: apache-2.0

task_categories:

- robotics

tags:

- LeRobot

- so101

- tutorial

configs:

- config_name: default

data_files: data/*/*.parquet

---

This dataset was created using [LeRobot](https://github.com/huggingface/lerobot).

## Dataset Description

- **Homepage:** [More Information Needed]

- **Paper:** [More Information Needed]

- **License:** apache-2.0

## Dataset Structure

[meta/info.json](meta/info.json):

```json

{

"codebase_version": "v2.1",

"robot_type": "so101",

"total_episodes": 2,

"total_frames": 294,

"total_tasks": 1,

"total_videos": 4,

"total_chunks": 1,

"chunks_size": 1000,

"fps": 30,

"splits": {

"train": "0:2"

},

"data_path": "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet",

"video_path": "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4",

"features": {

"action": {

"dtype": "float32",

"shape": [

6

],

"names": [

"main_shoulder_pan",

"main_shoulder_lift",

"main_elbow_flex",

"main_wrist_flex",

"main_wrist_roll",

"main_gripper"

]

},

"observation.state": {

"dtype": "float32",

"shape": [

6

],

"names": [

"main_shoulder_pan",

"main_shoulder_lift",

"main_elbow_flex",

"main_wrist_flex",

"main_wrist_roll",

"main_gripper"

]

},

"observation.images.realsense1": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.height": 480,

"video.width": 640,

"video.codec": "av1",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"video.fps": 30,

"video.channels": 3,

"has_audio": false

}

},

"observation.images.realsense2": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.height": 480,

"video.width": 640,

"video.codec": "av1",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"video.fps": 30,

"video.channels": 3,

"has_audio": false

}

},

"timestamp": {

"dtype": "float32",

"shape": [

1

],

"names": null

},

"frame_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"episode_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"task_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

}

}

}

```
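The `data_path` and `video_path` entries above are Python format strings, so file locations can be derived with `str.format`. The sketch below is illustrative only: the helper name `episode_paths` is hypothetical, and computing the chunk as `episode_index // chunks_size` is an assumption about how episodes are grouped, not something the metadata states.

```python
# Templates and chunk size copied from meta/info.json above.
DATA_PATH = "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet"
VIDEO_PATH = "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4"
CHUNKS_SIZE = 1000


def episode_paths(episode_index, video_keys, chunks_size=CHUNKS_SIZE):
    """Return the parquet path and the video paths for one episode."""
    # Assumption: episodes are grouped into consecutive chunks of `chunks_size`.
    episode_chunk = episode_index // chunks_size
    data = DATA_PATH.format(episode_chunk=episode_chunk, episode_index=episode_index)
    videos = [
        VIDEO_PATH.format(
            episode_chunk=episode_chunk,
            video_key=key,
            episode_index=episode_index,
        )
        for key in video_keys
    ]
    return data, videos


data_file, video_files = episode_paths(
    1, ["observation.images.realsense1", "observation.images.realsense2"]
)
```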

## Citation

**BibTeX:**

```bibtex

[More Information Needed]

``` |

cmulgy/ArxivCopilot_data | cmulgy | 2025-06-05T10:02:27Z | 858 | 2 | [

"arxiv:2409.04593",

"region:us"

] | [] | 2024-05-21T04:18:24Z | null | ---

title: ArxivCopilot

emoji: 🏢

colorFrom: indigo

colorTo: pink

sdk: gradio

sdk_version: 4.31.0

app_file: app.py

pinned: false

---

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

```

@misc{lin2024papercopilotselfevolvingefficient,

title={Paper Copilot: A Self-Evolving and Efficient LLM System for Personalized Academic Assistance},

author={Guanyu Lin and Tao Feng and Pengrui Han and Ge Liu and Jiaxuan You},

year={2024},

eprint={2409.04593},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2409.04593},

}

``` |

qiqiuyi6/TravelPlanner_RL_validation_revision_easy_exmaple | qiqiuyi6 | 2025-06-05T09:42:22Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T09:42:15Z | null | ---

dataset_info:

features:

- name: org

dtype: string

- name: dest

dtype: string

- name: days

dtype: int64

- name: visiting_city_number

dtype: int64

- name: date

dtype: string

- name: people_number

dtype: int64

- name: local_constraint

dtype: string

- name: budget

dtype: int64

- name: query

dtype: string

- name: level

dtype: string

- name: reference_information

dtype: string

- name: problem

dtype: string

- name: answer

dtype: string

splits:

- name: train

num_bytes: 10021267

num_examples: 180

download_size: 3102796

dataset_size: 10021267

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

kozakvoj/so101_test4 | kozakvoj | 2025-06-05T08:55:57Z | 0 | 0 | [

"task_categories:robotics",

"license:apache-2.0",

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:timeseries",

"modality:video",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"LeRobot",

"so101",

"tutorial"

] | [

"robotics"

] | 2025-06-05T08:55:50Z | null | ---

license: apache-2.0

task_categories:

- robotics

tags:

- LeRobot

- so101

- tutorial

configs:

- config_name: default

data_files: data/*/*.parquet

---

This dataset was created using [LeRobot](https://github.com/huggingface/lerobot).

## Dataset Description

- **Homepage:** [More Information Needed]

- **Paper:** [More Information Needed]

- **License:** apache-2.0

## Dataset Structure

[meta/info.json](meta/info.json):

```json

{

"codebase_version": "v2.1",

"robot_type": "so101",

"total_episodes": 1,

"total_frames": 580,

"total_tasks": 1,

"total_videos": 1,

"total_chunks": 1,

"chunks_size": 1000,

"fps": 30,

"splits": {

"train": "0:1"

},

"data_path": "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet",

"video_path": "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4",

"features": {

"action": {

"dtype": "float32",

"shape": [

6

],

"names": [

"main_shoulder_pan",

"main_shoulder_lift",

"main_elbow_flex",

"main_wrist_flex",

"main_wrist_roll",

"main_gripper"

]

},

"observation.state": {

"dtype": "float32",

"shape": [

6

],

"names": [

"main_shoulder_pan",

"main_shoulder_lift",

"main_elbow_flex",

"main_wrist_flex",

"main_wrist_roll",

"main_gripper"

]

},

"observation.images.front": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.height": 480,

"video.width": 640,

"video.codec": "av1",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"video.fps": 30,

"video.channels": 3,

"has_audio": false

}

},

"timestamp": {

"dtype": "float32",

"shape": [

1

],

"names": null

},

"frame_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"episode_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"task_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

}

}

}

```
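Two quantities can be read off this metadata with a little arithmetic: the episodes covered by a split and the total recording time. The sketch below treats the split string `"0:1"` as a half-open `start:stop` episode range. That reading is an assumption, though it is consistent with `total_episodes` being 1. The function names are hypothetical.

```python
def parse_split(spec):
    """Turn a split string such as "0:1" into a range of episode indices."""
    # Assumption: the range is half-open, like a Python slice.
    start, stop = (int(part) for part in spec.split(":"))
    return range(start, stop)


def total_duration_seconds(total_frames, fps):
    """Recording length in seconds, from the frame count and frame rate."""
    return total_frames / fps


train_episodes = list(parse_split("0:1"))
duration = total_duration_seconds(total_frames=580, fps=30)
```

With the values above, the single training episode lasts a little over 19 seconds.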

## Citation

**BibTeX:**

```bibtex

[More Information Needed]

``` |

SKIML-ICL/legal_qa | SKIML-ICL | 2025-06-05T08:28:29Z | 78 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-05-25T07:31:34Z | null | ---

dataset_info:

features:

- name: question

dtype: string

- name: context

dtype: string

- name: answers

sequence: string

- name: source

dtype: string

- name: __index_level_0__

dtype: int64

splits:

- name: test

num_bytes: 6138202

num_examples: 3816

download_size: 3075435

dataset_size: 6138202

configs:

- config_name: default

data_files:

- split: test

path: data/test-*

---

|

ngumus/OWI-english | ngumus | 2025-06-05T08:01:20Z | 23 | 1 | [

"task_categories:text-classification",

"language:en",

"license:mit",

"size_categories:100K<n<1M",

"format:csv",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"legal",

"tfidf",

"20newsgroups",

"text-classification",

"cf-weighting",

"openwebindex",

"weak-supervision",

"legal-tech",

"probabilistic-labeling"

] | [

"text-classification"

] | 2025-06-03T14:42:05Z | null | ---

language:

- "en"

pretty_name: "OWI-IT4I Legal Dataset (Annotated with CF-TFIDF and 20News Labels)"

tags:

- legal

- tfidf

- 20newsgroups

- text-classification

- cf-weighting

- openwebindex

- weak-supervision

- legal-tech

- probabilistic-labeling

license: "mit"

task_categories:

- text-classification

---

# **🧾 OWI-IT4I Legal Dataset (Annotated with TFIDF-CF and 20News Labels)**

This dataset contains legal and technical documents derived from the [Open Web Index (OWI)](https://openwebindex.eu/), automatically annotated using a probabilistic CF-TFIDF model trained on the 20 Newsgroups corpus. It is intended for use in legal-tech research, weak supervision tasks, and domain adaptation studies involving text classification or semantic modeling.

---

## **📁 Dataset Structure**

- **Format**: CSV

- **Main Column**:

- text: Raw text of the legal or technical document.

- consensus: Assigned label, set when all 3 methods agree on the class.

- **Other Columns**:

- predicted_class_hard: Final class using hard assignment CF

- confidence_hard: Confidence score for that prediction

- initial_class: Original class predicted before CF

- initial_confidence: Original model confidence before CF

- predicted_class_prob: Final class from probabilistic CF

- confidence_prob: Confidence from probabilistic CF

- predicted_class_maxcf: Final class from max CF weighting

- confidence_maxcf: Confidence from max CF

- high_confidence: Whether any method had confidence > 0.8

- avg_confidence: Average of the 3 confidence scores

- **Label Descriptions** (consensus label: 20 Newsgroups label)

- 0: alt.atheism

- 1: comp.graphics

- 2: comp.os.ms-windows.misc

- 3: comp.sys.ibm.pc.hardware

- 4: comp.sys.mac.hardware

- 5: comp.windows.x

- 6: misc.forsale

- 7: rec.autos

- 8: rec.motorcycles

- 9: rec.sport.baseball

- 10: rec.sport.hockey

- 11: sci.crypt

- 12: sci.electronics

- 13: sci.med

- 14: sci.space

- 15: soc.religion.christian

- 16: talk.politics.guns

- 17: talk.politics.mideast

- 18: talk.politics.misc

- 19: talk.religion.misc

---

# **🧠 Annotation Methodology**

The annotations were generated using a custom hybrid model that combines **TF-IDF vectorization** with **class-specific feature (CF) weights** derived from the 20 Newsgroups dataset. The selected method, **probabilistic CF weighting**, adjusts TF-IDF scores by class probabilities, producing a context-aware and semantically rich feature representation. The final labels were selected based on highest-confidence predictions across multiple strategies. This approach allows scalable and interpretable weak supervision for large unlabeled corpora.

The annotation procedure is described step by step below.

---

🧾 1. Explanation: How the Dataset is Annotated

The annotation pipeline uses a custom prediction system that enhances a logistic regression (LR) classifier with Concept Frequency (CF) weighting. Each text document is classified with three different CF-based methods and annotated with rich prediction metadata.

📚 2. TF-IDF Vectorization + CF Weighting

Each document in a chunk is transformed into a TF-IDF vector. Then, CF weights—term importance scores per class—are applied in three different ways:

a. Hard Assignment (predicted_class)

• Predict the class of each document.

• Use the predicted class to apply CF weights to each term.

• Re-classify the document with the new weighted TF-IDF.

b. Probabilistic Weighting (probabilistic)

• Predict class probabilities for each document.

• Apply a weighted average of CF values across classes (based on probabilities).

• Re-classify with this probabilistically weighted input.

c. Max CF (max_cf)

• For each term, apply the maximum CF it has across all classes.

• Use this to reweight the TF-IDF vector and re-classify.
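The three weighting schemes above can be sketched in plain Python. This is an illustration under stated assumptions, not the pipeline's actual code: `cf` is taken to be a classes-by-terms table of CF values, "applying" a weight is taken to mean multiplying the TF-IDF entry by it, and all function names are hypothetical.

```python
def hard_assignment_weights(cf, predicted_class):
    """Use the CF row of the initially predicted class."""
    return list(cf[predicted_class])


def probabilistic_weights(cf, class_probs):
    """Average the CF rows, weighted by the predicted class probabilities."""
    return [
        sum(p * row[t] for p, row in zip(class_probs, cf))
        for t in range(len(cf[0]))
    ]


def max_cf_weights(cf):
    """Take, for each term, the largest CF value over all classes."""
    return [max(column) for column in zip(*cf)]


def reweight(tfidf, weights):
    """Scale each TF-IDF entry by its term weight (assumed multiplicative)."""
    return [value * weight for value, weight in zip(tfidf, weights)]


# Toy example: 2 classes, 2 terms (values invented for illustration).
cf = [[1.0, 2.0], [3.0, 0.5]]
tfidf = [2.0, 4.0]
hard = reweight(tfidf, hard_assignment_weights(cf, predicted_class=1))
prob = reweight(tfidf, probabilistic_weights(cf, class_probs=[0.25, 0.75]))
maxcf = reweight(tfidf, max_cf_weights(cf))
```

In each case the reweighted vector would then be passed back to the classifier for the final prediction.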

---

🔍 3. Predicting and Analyzing Each Document

Each document is passed through all 3 prediction methods. The result includes:

• Final predicted class and confidence for each method.

• Initial class prediction (before CF weighting).

• Whether the methods agree (consensus).

• Whether any method is confident above a threshold (default: 0.8).

• Average confidence across methods.
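The per-document metadata listed above could be assembled roughly as follows. This is a hedged sketch: the function name and the input layout (a mapping from method name to a `(class, confidence)` pair) are hypothetical, and returning `None` for `consensus` when the methods disagree is an assumption, since the card does not say what is stored in that case.

```python
def summarize_predictions(predictions, threshold=0.8):
    """Combine the three method outputs into consensus and confidence fields."""
    classes = [label for label, _ in predictions.values()]
    confidences = [confidence for _, confidence in predictions.values()]
    all_agree = len(set(classes)) == 1
    return {
        # Assumption: no consensus label is assigned when the methods disagree.
        "consensus": classes[0] if all_agree else None,
        "high_confidence": any(c > threshold for c in confidences),
        "avg_confidence": sum(confidences) / len(confidences),
    }


# Toy input: (predicted class, confidence) per method, values invented.
summary = summarize_predictions(
    {"hard": (13, 0.5), "prob": (13, 0.75), "maxcf": (13, 1.0)}
)
```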

---

# **📊 Source & Motivation**

The raw documents are sourced from the **OWI crawl**, with a focus on texts from legal and IT domains. The 20 Newsgroups label schema was adopted because of its broad topical coverage and relevance to both general and technical content. Many OWI entries naturally align with categories such as comp.sys.ibm.pc.hardware and talk.politics.mideast, enabling effective domain transfer and reuse of pretrained class-specific weights.

---

# **✅ Use Cases**

- Legal-tech classification

- Domain-adaptive learning

- Zero-shot and weakly supervised labeling

- CF-TFIDF and interpretability research

- Legal document triage and thematic clustering

---

# **📜 Citation**

If you use this dataset in your research, please cite the corresponding work (placeholder below):

```

@misc{owi_tfidfcf_2025,

title={OWI-IT4I Legal Dataset Annotated with CF-TFIDF},

author={Nurullah Gümüş},

year={2025},

note={Annotated using a probabilistic TF-IDF+CF method trained on 20 Newsgroups.},

url={https://huggingface.co/datasets/your-username/owi-legal-cf-tfidf}

}

```

---

# **🛠️ License**

MIT

|

khanhdang/info | khanhdang | 2025-06-05T07:09:44Z | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T07:02:07Z | null | ---

dataset_info:

features:

- name: answer

dtype: string

- name: question

dtype: string

splits:

- name: train

num_bytes: 268545

num_examples: 960

download_size: 32114

dataset_size: 268545

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

TAUR-dev/rg_eval_dataset__arc | TAUR-dev | 2025-06-05T06:51:46Z | 20 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-04T02:34:02Z | null | ---

dataset_info:

features:

- name: question

dtype: string

- name: answer

dtype: string

- name: metadata

dtype: string

- name: dataset_source

dtype: string

splits:

- name: train

num_bytes: 162274

num_examples: 60

download_size: 42334

dataset_size: 162274

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

louisbrulenaudet/code-action-sociale-familles | louisbrulenaudet | 2025-06-05T06:45:42Z | 513 | 0 | [

"task_categories:text-generation",

"task_categories:table-question-answering",

"task_categories:summarization",

"task_categories:text-retrieval",

"task_categories:question-answering",

"task_categories:text-classification",

"multilinguality:monolingual",

"source_datasets:original",

"language:fr",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"finetuning",

"legal",

"french law",

"droit français",

"Code de l'action sociale et des familles"

] | [

"text-generation",

"table-question-answering",

"summarization",

"text-retrieval",

"question-answering",

"text-classification"

] | 2024-03-25T18:57:59Z | null | ---

license: apache-2.0

language:

- fr

multilinguality:

- monolingual

tags:

- finetuning

- legal

- french law

- droit français

- Code de l'action sociale et des familles

source_datasets:

- original

pretty_name: Code de l'action sociale et des familles

task_categories:

- text-generation

- table-question-answering

- summarization

- text-retrieval

- question-answering

- text-classification

size_categories:

- 1K<n<10K

---

# Code de l'action sociale et des familles, non-instruct (2025-06-04)

The objective of this project is to provide researchers, professionals and law students with simplified, up-to-date access to all French legal texts, enriched with a wealth of data to facilitate their integration into Community and European projects.

Normally, the data is refreshed daily on all legal codes, and aims to simplify the production of training sets and labeling pipelines for the development of free, open-source language models based on open data accessible to all.

## Concurrent reading of the LegalKit

[<img src="https://raw.githubusercontent.com/louisbrulenaudet/ragoon/main/assets/badge.svg" alt="Built with RAGoon" width="200" height="32"/>](https://github.com/louisbrulenaudet/ragoon)

To use all the legal data published on LegalKit, you can use RAGoon:

```bash

pip3 install ragoon

```

Then, you can load multiple datasets using this code snippet:

```python

# -*- coding: utf-8 -*-

import datasets

from ragoon import load_datasets

req = [

"louisbrulenaudet/code-artisanat",

"louisbrulenaudet/code-action-sociale-familles",

# ...

]

datasets_list = load_datasets(

req=req,

streaming=False

)

dataset = datasets.concatenate_datasets(

datasets_list

)

```

### Data Structure for Article Information

This section provides a detailed overview of the elements contained within the `item` dictionary. Each key represents a specific attribute of the legal article, with its associated value providing detailed information.

1. **Basic Information**

- `ref` (string): **Reference** - A reference to the article, combining the title_main and the article `number` (e.g., "Code Général des Impôts, art. 123").

- `texte` (string): **Text Content** - The textual content of the article.

- `dateDebut` (string): **Start Date** - The date when the article came into effect.

- `dateFin` (string): **End Date** - The date when the article was terminated or superseded.

- `num` (string): **Article Number** - The number assigned to the article.

- `id` (string): **Article ID** - Unique identifier for the article.

- `cid` (string): **Chronical ID** - Chronical identifier for the article.

- `type` (string): **Type** - The type or classification of the document (e.g., "AUTONOME").

- `etat` (string): **Legal Status** - The current legal status of the article (e.g., "MODIFIE_MORT_NE").

2. **Content and Notes**

- `nota` (string): **Notes** - Additional notes or remarks associated with the article.

- `version_article` (string): **Article Version** - The version number of the article.

- `ordre` (integer): **Order Number** - A numerical value used to sort articles within their parent section.

3. **Additional Metadata**

- `conditionDiffere` (string): **Deferred Condition** - Specific conditions related to collective agreements.

- `infosComplementaires` (string): **Additional Information** - Extra information pertinent to the article.

- `surtitre` (string): **Subtitle** - A subtitle or additional title information related to collective agreements.

- `nature` (string): **Nature** - The nature or category of the document (e.g., "Article").

- `texteHtml` (string): **HTML Content** - The article's content in HTML format.

4. **Versioning and Extensions**

- `dateFinExtension` (string): **End Date of Extension** - The end date if the article has an extension.

- `versionPrecedente` (string): **Previous Version** - Identifier for the previous version of the article.

- `refInjection` (string): **Injection Reference** - Technical reference to identify the date of injection.

- `idTexte` (string): **Text ID** - Identifier for the legal text to which the article belongs.

- `idTechInjection` (string): **Technical Injection ID** - Technical identifier for the injected element.

5. **Origin and Relationships**

- `origine` (string): **Origin** - The origin of the document (e.g., "LEGI").

- `dateDebutExtension` (string): **Start Date of Extension** - The start date if the article has an extension.

- `idEliAlias` (string): **ELI Alias** - Alias for the European Legislation Identifier (ELI).

- `cidTexte` (string): **Text Chronical ID** - Chronical identifier of the text.

6. **Hierarchical Relationships**

- `sectionParentId` (string): **Parent Section ID** - Technical identifier of the parent section.

- `multipleVersions` (boolean): **Multiple Versions** - Indicates if the article has multiple versions.

- `comporteLiensSP` (boolean): **Contains Public Service Links** - Indicates if the article contains links to public services.

- `sectionParentTitre` (string): **Parent Section Title** - Title of the parent section (e.g., "I : Revenu imposable").

- `infosRestructurationBranche` (string): **Branch Restructuring Information** - Information about branch restructuring.

- `idEli` (string): **ELI ID** - European Legislation Identifier (ELI) for the article.

- `sectionParentCid` (string): **Parent Section Chronical ID** - Chronical identifier of the parent section.

7. **Additional Content and History**

- `numeroBo` (string): **Official Bulletin Number** - Number of the official bulletin where the article was published.

- `infosRestructurationBrancheHtml` (string): **Branch Restructuring Information (HTML)** - Branch restructuring information in HTML format.

- `historique` (string): **History** - Historical context or changes specific to collective agreements.

- `infosComplementairesHtml` (string): **Additional Information (HTML)** - Additional information in HTML format.

- `renvoi` (string): **Reference** - References to content within the article (e.g., "(1)").

- `fullSectionsTitre` (string): **Full Section Titles** - Concatenation of all titles in the parent chain.

- `notaHtml` (string): **Notes (HTML)** - Additional notes or remarks in HTML format.

- `inap` (string): **INAP** - A placeholder for INAP-specific information.
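As a small illustration of how these fields fit together, the sketch below groups articles by `sectionParentTitre` and orders them by `ordre`, which is described above as the sort key within a parent section. The sample items are invented, carry only the keys the example needs, and the function name is hypothetical.

```python
from collections import defaultdict


def refs_by_section(items):
    """Map each parent section title to its article refs, sorted by `ordre`."""
    grouped = defaultdict(list)
    for item in items:
        grouped[item["sectionParentTitre"]].append(item)
    return {
        title: [article["ref"] for article in sorted(articles, key=lambda a: a["ordre"])]
        for title, articles in grouped.items()
    }


# Hypothetical items (not real articles from the dataset).
sample_items = [
    {"ref": "Code X, art. 2", "ordre": 20, "sectionParentTitre": "Section A"},
    {"ref": "Code X, art. 1", "ordre": 10, "sectionParentTitre": "Section A"},
    {"ref": "Code X, art. 3", "ordre": 5, "sectionParentTitre": "Section B"},
]
outline = refs_by_section(sample_items)
```

The same function should work on rows of the loaded dataset, as long as each row exposes these three keys.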

## Feedback

If you have any feedback, please reach out at [louisbrulenaudet@icloud.com](mailto:louisbrulenaudet@icloud.com). |

ChavyvAkvar/synthetic-trades-ADA-cleaned_ohlc | ChavyvAkvar | 2025-06-05T06:42:05Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T06:33:06Z | null | ---

dataset_info:

features:

- name: scenario_id

dtype: string

- name: synthetic_ohlc_open

sequence: float64

- name: synthetic_ohlc_high

sequence: float64

- name: synthetic_ohlc_low

sequence: float64

- name: synthetic_ohlc_close

sequence: float64

splits:

- name: train

num_bytes: 13295809456

num_examples: 14426

download_size: 13326248946

dataset_size: 13295809456

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

ChavyvAkvar/synthetic-trades-ETH-cleaned_ohlc | ChavyvAkvar | 2025-06-05T05:57:40Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T05:44:02Z | null | ---

dataset_info:

features:

- name: scenario_id

dtype: string

- name: synthetic_ohlc_open

sequence: float64

- name: synthetic_ohlc_high

sequence: float64

- name: synthetic_ohlc_low

sequence: float64

- name: synthetic_ohlc_close

sequence: float64

splits:

- name: train

num_bytes: 20229427544

num_examples: 21949

download_size: 20273558693

dataset_size: 20229427544

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

abhayesian/miserable_roleplay_formatted | abhayesian | 2025-06-05T05:48:53Z | 32 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-03T03:38:27Z | null | ---

dataset_info:

features:

- name: prompt

dtype: string

- name: response

dtype: string

splits:

- name: train

num_bytes: 1434220

num_examples: 1000

download_size: 89589

dataset_size: 1434220

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

igorcouto/cv21-pt-audio-sentence | igorcouto | 2025-06-05T05:19:54Z | 9 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:audio",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T04:54:58Z | null | ---

dataset_info:

features:

- name: audio

dtype:

audio:

sampling_rate: 16000

- name: sentence

dtype: string

splits:

- name: train

num_bytes: 5973704143.359349

num_examples: 216685

- name: validation

num_bytes: 318475335.30389816

num_examples: 11405

download_size: 6203689221

dataset_size: 6292179478.663247

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: validation

path: data/validation-*

---

|

robert-1111/x_dataset_040849 | robert-1111 | 2025-06-05T05:15:33Z | 721 | 0 | [

"task_categories:text-classification",

"task_categories:token-classification",

"task_categories:question-answering",

"task_categories:summarization",

"task_categories:text-generation",

"task_ids:sentiment-analysis",

"task_ids:topic-classification",

"task_ids:named-entity-recognition",

"task_ids:language-modeling",

"task_ids:text-scoring",

"task_ids:multi-class-classification",

"task_ids:multi-label-classification",

"task_ids:extractive-qa",

"task_ids:news-articles-summarization",

"multilinguality:multilingual",

"source_datasets:original",

"license:mit",

"size_categories:10M<n<100M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"text-classification",

"token-classification",

"question-answering",

"summarization",

"text-generation"

] | 2025-01-25T07:10:57Z | null | ---

license: mit

multilinguality:

- multilingual

source_datasets:

- original

task_categories:

- text-classification

- token-classification

- question-answering

- summarization

- text-generation

task_ids:

- sentiment-analysis

- topic-classification

- named-entity-recognition

- language-modeling

- text-scoring

- multi-class-classification

- multi-label-classification

- extractive-qa

- news-articles-summarization

---

# Bittensor Subnet 13 X (Twitter) Dataset

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/bittensor.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/macrocosmos-black.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

## Dataset Description

- **Repository:** robert-1111/x_dataset_040849

- **Subnet:** Bittensor Subnet 13

- **Miner Hotkey:** 5CZw3NP1Uq3jrN3auP83MsRXgUs3eiZpoAMJuYyPpVnHvXY2

### Miner Data Compliance Agreement

In uploading this dataset, I am agreeing to the [Macrocosmos Miner Data Compliance Policy](https://github.com/macrocosm-os/data-universe/blob/add-miner-policy/docs/miner_policy.md).

### Dataset Summary

This dataset is part of the Bittensor Subnet 13 decentralized network, containing preprocessed data from X (formerly Twitter). The data is continuously updated by network miners, providing a real-time stream of tweets for various analytical and machine learning tasks.

For more information about the dataset, please visit the [official repository](https://github.com/macrocosm-os/data-universe).

### Supported Tasks

The versatility of this dataset allows researchers and data scientists to explore various aspects of social media dynamics and develop innovative applications. Users are encouraged to leverage this data creatively for their specific research or business needs.

For example:

- Sentiment Analysis

- Trend Detection

- Content Analysis

- User Behavior Modeling

### Languages

Primary language: mostly English, though the data can be multilingual because it is collected in a decentralized way.

## Dataset Structure

### Data Instances

Each instance represents a single tweet with the following fields:

### Data Fields

- `text` (string): The main content of the tweet.

- `label` (string): Sentiment or topic category of the tweet.

- `tweet_hashtags` (list): A list of hashtags used in the tweet. May be empty if no hashtags are present.

- `datetime` (string): The date when the tweet was posted.

- `username_encoded` (string): An encoded version of the username to maintain user privacy.

- `url_encoded` (string): An encoded version of any URLs included in the tweet. May be empty if no URLs are present.

### Data Splits

This dataset is continuously updated and does not have fixed splits. Users should create their own splits based on their requirements and the data's timestamp.
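
A minimal sketch of a timestamp-based split, assuming each row is a dict whose `datetime` field holds an ISO 8601 string such as `2025-01-02T00:00:00Z` (the format used in the statistics of this card). The helper names `parse_ts` and `split_by_cutoff`, the cutoff date, and the sample rows are illustrative and not part of the dataset:

```python
from datetime import datetime, timezone


def parse_ts(value: str) -> datetime:
    """Parse an ISO 8601 timestamp; a trailing 'Z' is treated as UTC."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    # Assumption: strings without an offset are interpreted as UTC.
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def split_by_cutoff(rows, cutoff: str):
    """Return (train, test): rows strictly before the cutoff, rows at or after it."""
    cutoff_ts = parse_ts(cutoff)
    train = [r for r in rows if parse_ts(r["datetime"]) < cutoff_ts]
    test = [r for r in rows if parse_ts(r["datetime"]) >= cutoff_ts]
    return train, test


# Hypothetical rows for illustration only.
rows = [
    {"text": "a", "datetime": "2025-01-15T10:00:00Z"},
    {"text": "b", "datetime": "2025-03-01T08:30:00Z"},
    {"text": "c", "datetime": "2025-05-20T23:59:00Z"},
]
train, test = split_by_cutoff(rows, "2025-04-01T00:00:00Z")
```

Splitting on time rather than at random keeps later tweets out of the training portion, which matters for a dataset that keeps growing.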

## Dataset Creation

### Source Data

Data is collected from public tweets on X (Twitter), adhering to the platform's terms of service and API usage guidelines.

### Personal and Sensitive Information

All usernames and URLs are encoded to protect user privacy. The dataset does not intentionally include personal or sensitive information.

## Considerations for Using the Data

### Social Impact and Biases

Users should be aware of potential biases inherent in X (Twitter) data, including demographic and content biases. This dataset reflects the content and opinions expressed on X and should not be considered a representative sample of the general population.

### Limitations

- Data quality may vary due to the decentralized nature of collection and preprocessing.

- The dataset may contain noise, spam, or irrelevant content typical of social media platforms.

- Temporal biases may exist due to real-time collection methods.

- The dataset is limited to public tweets and does not include private accounts or direct messages.

- Not all tweets contain hashtags or URLs.

## Additional Information

### Licensing Information

The dataset is released under the MIT license. The use of this dataset is also subject to X Terms of Use.

### Citation Information

If you use this dataset in your research, please cite it as follows:

```

@misc{robert-11112025datauniversex_dataset_040849,

title={The Data Universe Datasets: The finest collection of social media data the web has to offer},

author={robert-1111},

year={2025},

url={https://huggingface.co/datasets/robert-1111/x_dataset_040849},

}

```

### Contributions

To report issues or contribute to the dataset, please contact the miner or use the Bittensor Subnet 13 governance mechanisms.

## Dataset Statistics

[This section is automatically updated]

- **Total Instances:** 2009620

- **Date Range:** 2025-01-02T00:00:00Z to 2025-05-26T00:00:00Z

- **Last Updated:** 2025-06-05T05:15:33Z

### Data Distribution

- Tweets with hashtags: 4.91%

- Tweets without hashtags: 95.09%

### Top 10 Hashtags

For full statistics, please refer to the `stats.json` file in the repository.

| Rank | Topic | Total Count | Percentage |

|------|-------|-------------|-------------|

| 1 | NULL | 1082102 | 91.65% |

| 2 | #riyadh | 16192 | 1.37% |

| 3 | #thameposeriesep9 | 7605 | 0.64% |

| 4 | #smackdown | 4844 | 0.41% |

| 5 | #कबीर_परमेश्वर_निर्वाण_दिवस | 4843 | 0.41% |

| 6 | #tiktok | 4292 | 0.36% |

| 7 | #اجازاااااااات_مرضيه_o58o67ち179 | 3682 | 0.31% |

| 8 | #ad | 3502 | 0.30% |

| 9 | #delhielectionresults | 3476 | 0.29% |

| 10 | #فلك_اااااااالنصابين | 3363 | 0.28% |

## Update History

| Date | New Instances | Total Instances |

|------|---------------|-----------------|

| 2025-01-25T07:10:27Z | 414446 | 414446 |

| 2025-01-25T07:10:56Z | 414446 | 828892 |

| 2025-01-25T07:11:27Z | 414446 | 1243338 |

| 2025-02-18T03:37:50Z | 463345 | 1706683 |

| 2025-06-05T05:15:33Z | 302937 | 2009620 |
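
The "Total Instances" column reads as a running sum of "New Instances". A small sketch that checks this, using the values copied from the table above (the variable names are illustrative):

```python
from itertools import accumulate

# (new_instances, reported_total) pairs copied from the Update History table.
history = [
    (414446, 414446),
    (414446, 828892),
    (414446, 1243338),
    (463345, 1706683),
    (302937, 2009620),
]

# Running sum of the "New Instances" column.
running_totals = list(accumulate(new for new, _ in history))
reported_totals = [total for _, total in history]
consistent = running_totals == reported_totals
```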

|

robert-1111/x_dataset_0405200 | robert-1111 | 2025-06-05T05:12:56Z | 1,147 | 0 | [

"task_categories:text-classification",

"task_categories:token-classification",

"task_categories:question-answering",

"task_categories:summarization",

"task_categories:text-generation",

"task_ids:sentiment-analysis",

"task_ids:topic-classification",

"task_ids:named-entity-recognition",

"task_ids:language-modeling",

"task_ids:text-scoring",

"task_ids:multi-class-classification",

"task_ids:multi-label-classification",

"task_ids:extractive-qa",

"task_ids:news-articles-summarization",

"multilinguality:multilingual",

"source_datasets:original",

"license:mit",

"size_categories:10M<n<100M",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [

"text-classification",

"token-classification",

"question-answering",

"summarization",

"text-generation"

] | 2025-01-25T07:09:57Z | null | ---

license: mit

multilinguality:

- multilingual

source_datasets:

- original

task_categories:

- text-classification

- token-classification

- question-answering

- summarization

- text-generation

task_ids:

- sentiment-analysis

- topic-classification

- named-entity-recognition

- language-modeling

- text-scoring

- multi-class-classification

- multi-label-classification

- extractive-qa

- news-articles-summarization

---

# Bittensor Subnet 13 X (Twitter) Dataset

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/bittensor.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

<center>

<img src="https://huggingface.co/datasets/macrocosm-os/images/resolve/main/macrocosmos-black.png" alt="Data-universe: The finest collection of social media data the web has to offer">

</center>

## Dataset Description

- **Repository:** robert-1111/x_dataset_0405200

- **Subnet:** Bittensor Subnet 13

- **Miner Hotkey:** 5H9AFS5tcgBKAxV7gg51QR5pAv25tyUUoWX3Eo7h1sfNL1TQ

### Miner Data Compliance Agreement

In uploading this dataset, I am agreeing to the [Macrocosmos Miner Data Compliance Policy](https://github.com/macrocosm-os/data-universe/blob/add-miner-policy/docs/miner_policy.md).

### Dataset Summary

This dataset is part of the Bittensor Subnet 13 decentralized network, containing preprocessed data from X (formerly Twitter). The data is continuously updated by network miners, providing a real-time stream of tweets for various analytical and machine learning tasks.

For more information about the dataset, please visit the [official repository](https://github.com/macrocosm-os/data-universe).

### Supported Tasks

The versatility of this dataset allows researchers and data scientists to explore various aspects of social media dynamics and develop innovative applications. Users are encouraged to leverage this data creatively for their specific research or business needs.

For example:

- Sentiment Analysis

- Trend Detection

- Content Analysis

- User Behavior Modeling

### Languages

Primary language: mostly English, though the data can be multilingual because it is collected in a decentralized way.

## Dataset Structure

### Data Instances

Each instance represents a single tweet with the following fields:

### Data Fields

- `text` (string): The main content of the tweet.

- `label` (string): Sentiment or topic category of the tweet.

- `tweet_hashtags` (list): A list of hashtags used in the tweet. May be empty if no hashtags are present.

- `datetime` (string): The date when the tweet was posted.

- `username_encoded` (string): An encoded version of the username to maintain user privacy.

- `url_encoded` (string): An encoded version of any URLs included in the tweet. May be empty if no URLs are present.

### Data Splits

This dataset is continuously updated and does not have fixed splits. Users should create their own splits based on their requirements and the data's timestamp.

## Dataset Creation

### Source Data

Data is collected from public tweets on X (Twitter), adhering to the platform's terms of service and API usage guidelines.

### Personal and Sensitive Information

All usernames and URLs are encoded to protect user privacy. The dataset does not intentionally include personal or sensitive information.

## Considerations for Using the Data

### Social Impact and Biases

Users should be aware of potential biases inherent in X (Twitter) data, including demographic and content biases. This dataset reflects the content and opinions expressed on X and should not be considered a representative sample of the general population.

### Limitations

- Data quality may vary due to the decentralized nature of collection and preprocessing.

- The dataset may contain noise, spam, or irrelevant content typical of social media platforms.

- Temporal biases may exist due to real-time collection methods.

- The dataset is limited to public tweets and does not include private accounts or direct messages.

- Not all tweets contain hashtags or URLs.

## Additional Information

### Licensing Information

The dataset is released under the MIT license. The use of this dataset is also subject to X Terms of Use.

### Citation Information

If you use this dataset in your research, please cite it as follows:

```

@misc{robert-11112025datauniversex_dataset_0405200,

title={The Data Universe Datasets: The finest collection of social media data the web has to offer},

author={robert-1111},

year={2025},

url={https://huggingface.co/datasets/robert-1111/x_dataset_0405200},

}

```

### Contributions

To report issues or contribute to the dataset, please contact the miner or use the Bittensor Subnet 13 governance mechanisms.

## Dataset Statistics

[This section is automatically updated]

- **Total Instances:** 1180728

- **Date Range:** 2025-01-02T00:00:00Z to 2025-05-26T00:00:00Z

- **Last Updated:** 2025-06-05T05:12:56Z

### Data Distribution

- Tweets with hashtags: 8.35%

- Tweets without hashtags: 91.65%

### Top 10 Hashtags

For full statistics, please refer to the `stats.json` file in the repository.

| Rank | Topic | Total Count | Percentage |

|------|-------|-------------|-------------|

| 1 | NULL | 1082102 | 91.65% |

| 2 | #riyadh | 16192 | 1.37% |

| 3 | #thameposeriesep9 | 7605 | 0.64% |

| 4 | #smackdown | 4844 | 0.41% |

| 5 | #कबीर_परमेश्वर_निर्वाण_दिवस | 4843 | 0.41% |

| 6 | #tiktok | 4292 | 0.36% |

| 7 | #اجازاااااااات_مرضيه_o58o67ち179 | 3682 | 0.31% |

| 8 | #ad | 3502 | 0.30% |

| 9 | #delhielectionresults | 3476 | 0.29% |

| 10 | #فلك_اااااااالنصابين | 3363 | 0.28% |
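
A ranking like the one above could be reproduced roughly as follows. This is a sketch under assumptions: rows are dicts with a `tweet_hashtags` list, tweets without hashtags are tallied under `NULL`, tags are lowercased, and percentages are taken relative to the number of tweets (which is consistent with 1082102 of 1180728 being about 91.65%). The function name `top_hashtags` and the sample rows are hypothetical:

```python
from collections import Counter


def top_hashtags(rows, k=10):
    """Return (tag, count, percent_of_tweets) for the k most frequent tags."""
    rows = list(rows)
    if not rows:
        return []
    counts = Counter()
    for row in rows:
        tags = row.get("tweet_hashtags") or []
        if not tags:
            counts["NULL"] += 1  # tweet without any hashtag
            continue
        # Lowercase and de-duplicate so each tweet counts a tag at most once.
        counts.update({tag.lower() for tag in tags})
    return [
        (tag, n, round(100 * n / len(rows), 2))
        for tag, n in counts.most_common(k)
    ]


# Hypothetical rows for illustration only.
rows = [
    {"tweet_hashtags": []},
    {"tweet_hashtags": ["#Riyadh"]},
    {"tweet_hashtags": ["#riyadh", "#tiktok"]},
    {"tweet_hashtags": []},
    {"tweet_hashtags": []},
]
ranking = top_hashtags(rows)
```

Because a tweet can carry several hashtags, the percentages computed this way need not sum to 100.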

## Update History

| Date | New Instances | Total Instances |

|------|---------------|-----------------|

| 2025-01-25T07:10:27Z | 414446 | 414446 |

| 2025-02-18T03:36:42Z | 463345 | 877791 |

| 2025-06-05T05:12:56Z | 302937 | 1180728 |

|

hazelyan60/github-issues | hazelyan60 | 2025-06-05T04:37:53Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T04:37:45Z | null | ---

dataset_info:

features:

- name: url

dtype: string

- name: repository_url

dtype: string

- name: labels_url

dtype: string

- name: comments_url

dtype: string

- name: events_url

dtype: string

- name: html_url

dtype: string

- name: id

dtype: int64

- name: node_id

dtype: string

- name: number

dtype: int64

- name: title

dtype: string

- name: user

struct:

- name: login

dtype: string

- name: id

dtype: int64

- name: node_id

dtype: string

- name: avatar_url

dtype: string

- name: gravatar_id

dtype: string

- name: url

dtype: string

- name: html_url

dtype: string

- name: followers_url

dtype: string

- name: following_url

dtype: string

- name: gists_url

dtype: string

- name: starred_url

dtype: string

- name: subscriptions_url

dtype: string

- name: organizations_url

dtype: string

- name: repos_url

dtype: string

- name: events_url

dtype: string

- name: received_events_url

dtype: string

- name: type

dtype: string

- name: user_view_type

dtype: string

- name: site_admin

dtype: bool

- name: labels

list:

- name: id

dtype: int64

- name: node_id

dtype: string

- name: url

dtype: string

- name: name

dtype: string

- name: color

dtype: string

- name: default

dtype: bool

- name: description

dtype: string

- name: state

dtype: string

- name: locked

dtype: bool

- name: assignee

struct:

- name: login

dtype: string

- name: id

dtype: int64

- name: node_id

dtype: string

- name: avatar_url

dtype: string

- name: gravatar_id

dtype: string

- name: url

dtype: string

- name: html_url

dtype: string

- name: followers_url

dtype: string

- name: following_url

dtype: string

- name: gists_url

dtype: string

- name: starred_url

dtype: string

- name: subscriptions_url

dtype: string

- name: organizations_url

dtype: string

- name: repos_url

dtype: string

- name: events_url

dtype: string

- name: received_events_url

dtype: string

- name: type

dtype: string

- name: user_view_type

dtype: string

- name: site_admin

dtype: bool

- name: assignees

list:

- name: login

dtype: string

- name: id

dtype: int64

- name: node_id

dtype: string

- name: avatar_url

dtype: string

- name: gravatar_id

dtype: string

- name: url

dtype: string

- name: html_url

dtype: string

- name: followers_url

dtype: string

- name: following_url

dtype: string

- name: gists_url

dtype: string

- name: starred_url

dtype: string

- name: subscriptions_url

dtype: string

- name: organizations_url

dtype: string

- name: repos_url

dtype: string

- name: events_url

dtype: string

- name: received_events_url

dtype: string

- name: type

dtype: string

- name: user_view_type

dtype: string

- name: site_admin

dtype: bool

- name: milestone

struct:

- name: url

dtype: string

- name: html_url

dtype: string

- name: labels_url

dtype: string

- name: id

dtype: int64

- name: node_id

dtype: string

- name: number

dtype: int64

- name: title

dtype: string

- name: description

dtype: string

- name: creator

struct:

- name: login

dtype: string

- name: id

dtype: int64

- name: node_id

dtype: string

- name: avatar_url

dtype: string

- name: gravatar_id

dtype: string

- name: url

dtype: string

- name: html_url

dtype: string

- name: followers_url

dtype: string

- name: following_url

dtype: string

- name: gists_url

dtype: string

- name: starred_url

dtype: string

- name: subscriptions_url

dtype: string

- name: organizations_url

dtype: string

- name: repos_url

dtype: string

- name: events_url

dtype: string

- name: received_events_url

dtype: string

- name: type

dtype: string

- name: user_view_type

dtype: string

- name: site_admin

dtype: bool

- name: open_issues

dtype: int64

- name: closed_issues

dtype: int64

- name: state

dtype: string

- name: created_at

dtype: timestamp[s]

- name: updated_at

dtype: timestamp[s]

- name: due_on

dtype: 'null'

- name: closed_at

dtype: 'null'

- name: comments

sequence: string

- name: created_at

dtype: timestamp[s]

- name: updated_at

dtype: timestamp[s]

- name: closed_at

dtype: timestamp[s]

- name: author_association

dtype: string

- name: type

dtype: 'null'

- name: active_lock_reason

dtype: 'null'

- name: sub_issues_summary

struct:

- name: total

dtype: int64

- name: completed

dtype: int64

- name: percent_completed

dtype: int64

- name: body

dtype: string

- name: closed_by

struct:

- name: login

dtype: string

- name: id

dtype: int64

- name: node_id

dtype: string

- name: avatar_url

dtype: string

- name: gravatar_id

dtype: string

- name: url

dtype: string

- name: html_url

dtype: string

- name: followers_url

dtype: string

- name: following_url

dtype: string

- name: gists_url

dtype: string

- name: starred_url

dtype: string

- name: subscriptions_url

dtype: string

- name: organizations_url

dtype: string

- name: repos_url

dtype: string

- name: events_url

dtype: string

- name: received_events_url

dtype: string

- name: type

dtype: string

- name: user_view_type

dtype: string

- name: site_admin

dtype: bool

- name: reactions

struct:

- name: url

dtype: string

- name: total_count

dtype: int64

- name: '+1'

dtype: int64

- name: '-1'

dtype: int64

- name: laugh

dtype: int64

- name: hooray

dtype: int64

- name: confused

dtype: int64

- name: heart

dtype: int64

- name: rocket

dtype: int64

- name: eyes

dtype: int64

- name: timeline_url

dtype: string

- name: performed_via_github_app

dtype: 'null'

- name: state_reason

dtype: string

- name: draft

dtype: bool

- name: pull_request

struct:

- name: url

dtype: string

- name: html_url

dtype: string

- name: diff_url

dtype: string

- name: patch_url

dtype: string

- name: merged_at

dtype: timestamp[s]

- name: is_pull_request

dtype: bool

splits:

- name: train

num_bytes: 34697825

num_examples: 4684

download_size: 9633773

dataset_size: 34697825

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

QuanHoangNgoc/lock_dataset_prc | QuanHoangNgoc | 2025-06-05T03:01:29Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:timeseries",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T02:50:57Z | null | ---

dataset_info:

features:

- name: input_values

sequence: float32

- name: input_length

dtype: int64

- name: labels

sequence: int64

splits:

- name: train

num_bytes: 18795189628.0

num_examples: 15023

- name: dev

num_bytes: 118626900.0

num_examples: 95

download_size: 18886646995

dataset_size: 18913816528.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: dev

path: data/dev-*

---

|

benjamintli/synthetic_text_to_sql_formatted | benjamintli | 2025-06-05T02:29:59Z | 0 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T02:26:29Z | null | ---

dataset_info:

features:

- name: conversations

list:

- name: content

dtype: string

- name: role

dtype: string

splits:

- name: train

num_bytes: 52558692

num_examples: 100000

- name: test

num_bytes: 3061665

num_examples: 5851

download_size: 22881926

dataset_size: 55620357

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

nskwal/rover | nskwal | 2025-06-05T02:22:57Z | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T02:21:00Z | null | ---

dataset_info:

features:

- name: reasoning

dtype: string

- name: label

dtype: string

- name: summary

dtype: string

- name: claim

dtype: string

- name: evidence

dtype: string

- name: golden_label

dtype: string

splits:

- name: train

num_bytes: 1569029

num_examples: 1029

download_size: 548231

dataset_size: 1569029

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

antoine-444/MNLP_M3_mcqa_dataset | antoine-444 | 2025-06-05T00:27:16Z | 0 | 1 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-05T00:27:08Z | 1 | ---

dataset_info:

features:

- name: dataset

dtype: string

- name: id

dtype: string

- name: question

dtype: string

- name: choices

sequence: string

- name: rationale

dtype: string

- name: answer

dtype: string

- name: subject

dtype: string

splits:

- name: train

num_bytes: 96584598

num_examples: 187660

- name: test

num_bytes: 3198004

num_examples: 7750

- name: validation

num_bytes: 1248321

num_examples: 2691

download_size: 51566324

dataset_size: 101030923

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

- split: validation

path: data/validation-*

---

|

matthewchung74/nflx-1_0y-5min-bars | matthewchung74 | 2025-06-04T23:59:25Z | 0 | 0 | [

"size_categories:10K<n<100K",

"format:csv",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | [] | 2025-06-04T23:59:21Z | null | ---

dataset_info:

features:

- name: symbol

dtype: string

- name: timestamp

dtype: string

- name: open

dtype: float64

- name: high

dtype: float64

- name: low

dtype: float64

- name: close

dtype: float64

- name: volume

dtype: float64

- name: trade_count

dtype: float64

- name: vwap

dtype: float64

configs:

- config_name: default

data_files:

- split: train

path: data/nflx_1_0_years_5min.csv

download_size: 1708812

dataset_size: 19755

---

# NFLX 5-Minute Stock Data (1.0 Years)

This dataset contains 1.0 years of NFLX stock market data downloaded from Alpaca Markets.

## Dataset Description

- **Symbol**: NFLX

- **Duration**: 1.0 years

- **Timeframe**: 5-minute bars

- **Market Hours**: 9:30 AM - 4:00 PM Eastern Time (EST/EDT) only

- **Data Source**: Alpaca Markets API

- **Last Updated**: 2025-06-04

## Features

- `symbol`: Stock symbol (always "NFLX")

- `timestamp`: Timestamp in Eastern Time (EST/EDT)

- `open`: Opening price for the 5-minute period

- `high`: Highest price during the 5-minute period

- `low`: Lowest price during the 5-minute period

- `close`: Closing price for the 5-minute period

- `volume`: Number of shares traded

- `trade_count`: Number of individual trades

- `vwap`: Volume Weighted Average Price
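
Per-bar `vwap` values can be combined into a VWAP over a longer window by weighting each bar's VWAP by its `volume`. A minimal sketch, assuming bars are dicts with `vwap` and `volume` keys; the function name `aggregate_vwap` and the numbers are hypothetical, not real NFLX data:

```python
def aggregate_vwap(bars):
    """Combine per-bar VWAPs: sum(vwap_i * volume_i) / sum(volume_i)."""
    total_volume = sum(bar["volume"] for bar in bars)
    if total_volume == 0:
        return None  # no volume in the window, so VWAP is undefined
    return sum(bar["vwap"] * bar["volume"] for bar in bars) / total_volume


# Hypothetical 5-minute bars for illustration only.
bars = [
    {"vwap": 100.0, "volume": 1000.0},
    {"vwap": 102.0, "volume": 3000.0},
]
window_vwap = aggregate_vwap(bars)
```

A plain average of the two bar VWAPs would give 101.0; the volume weighting pulls the result toward the heavier-traded bar.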

## Data Quality

- Only includes data during regular market hours (9:30 AM - 4:00 PM Eastern Time)

- Excludes weekends and holidays when markets are closed

- Approximately 19,755 records covering ~1.0 years of trading data
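
The regular-hours rule can be sketched as a simple check. This assumes timestamps are strings like `2024-06-04 09:30:00-04:00` whose wall-clock time is already Eastern Time, and that both the 09:30 and 16:00 bars are included; holidays are not handled. The function name `in_regular_session` is hypothetical:

```python
from datetime import datetime, time

SESSION_OPEN = time(9, 30)
SESSION_CLOSE = time(16, 0)


def in_regular_session(timestamp: str) -> bool:
    """True if the bar falls on a weekday between 09:30 and 16:00 inclusive.

    Assumes the wall-clock time in the string is Eastern Time; market
    holidays are not checked.
    """
    ts = datetime.fromisoformat(timestamp)
    is_weekday = ts.weekday() < 5  # Monday=0 ... Friday=4
    return is_weekday and SESSION_OPEN <= ts.time() <= SESSION_CLOSE
```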

## Usage

```python

from datasets import load_dataset

dataset = load_dataset("matthewchung74/nflx-1_0y-5min-bars")

df = dataset['train'].to_pandas()

```

## Price Statistics

- **Price Range**: $587.04 - $1242.56

- **Average Volume**: 44,956

- **Date Range**: 2024-06-04 09:30:00-04:00 to 2025-06-04 16:00:00-04:00

## License

This dataset is provided under the MIT license. The underlying market data is sourced from Alpaca Markets.

|

cfahlgren1/hub-stats | cfahlgren1 | 2025-06-04T23:34:52Z | 2,408 | 47 | [

"license:apache-2.0",

"modality:image",

"region:us"

] | [] | 2024-07-24T18:20:02Z | null | ---

license: apache-2.0

configs:

- config_name: models

data_files: "models.parquet"

- config_name: datasets

data_files: "datasets.parquet"

- config_name: spaces

data_files: "spaces.parquet"

- config_name: posts

data_files: "posts.parquet"

- config_name: papers

data_files: "daily_papers.parquet"

---

<img src="https://cdn-uploads.huggingface.co/production/uploads/648a374f00f7a3374ee64b99/QoLLMgnFmeGqUTA5Bkgjw.png" width=800/>

**NEW** Changes Feb 27th

- Added new fields on the `models` split: `downloadsAllTime`, `safetensors`, `gguf`

- Added new field on the `datasets` split: `downloadsAllTime`

- Added new split: `papers`, which contains all of the [Daily Papers](https://huggingface.co/papers)

**Updated Daily** |

VisualSphinx/VisualSphinx-V1-RL-20K | VisualSphinx | 2025-06-04T23:34:24Z | 195 | 1 | [

"task_categories:image-text-to-text",

"task_categories:visual-question-answering",

"language:en",

"license:cc-by-nc-4.0",

"size_categories:10K<n<100K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"arxiv:2505.23977",

"region:us"

] | [

"image-text-to-text",

"visual-question-answering"

] | 2025-05-12T21:28:45Z | 1 | ---

language:

- en

license: cc-by-nc-4.0

task_categories:

- image-text-to-text

- visual-question-answering

dataset_info:

features:

- name: id

dtype: string

- name: images

sequence: image

- name: problem

dtype: string

- name: choice

dtype: string

- name: answer

dtype: string

- name: explanation

dtype: string

- name: has_duplicate

dtype: bool

- name: reasonableness

dtype: int32

- name: readability

dtype: int32

- name: accuracy

dtype: float32

splits:

- name: train

num_bytes: 1192196287

num_examples: 20000

download_size: 1184324044

dataset_size: 1192196287

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

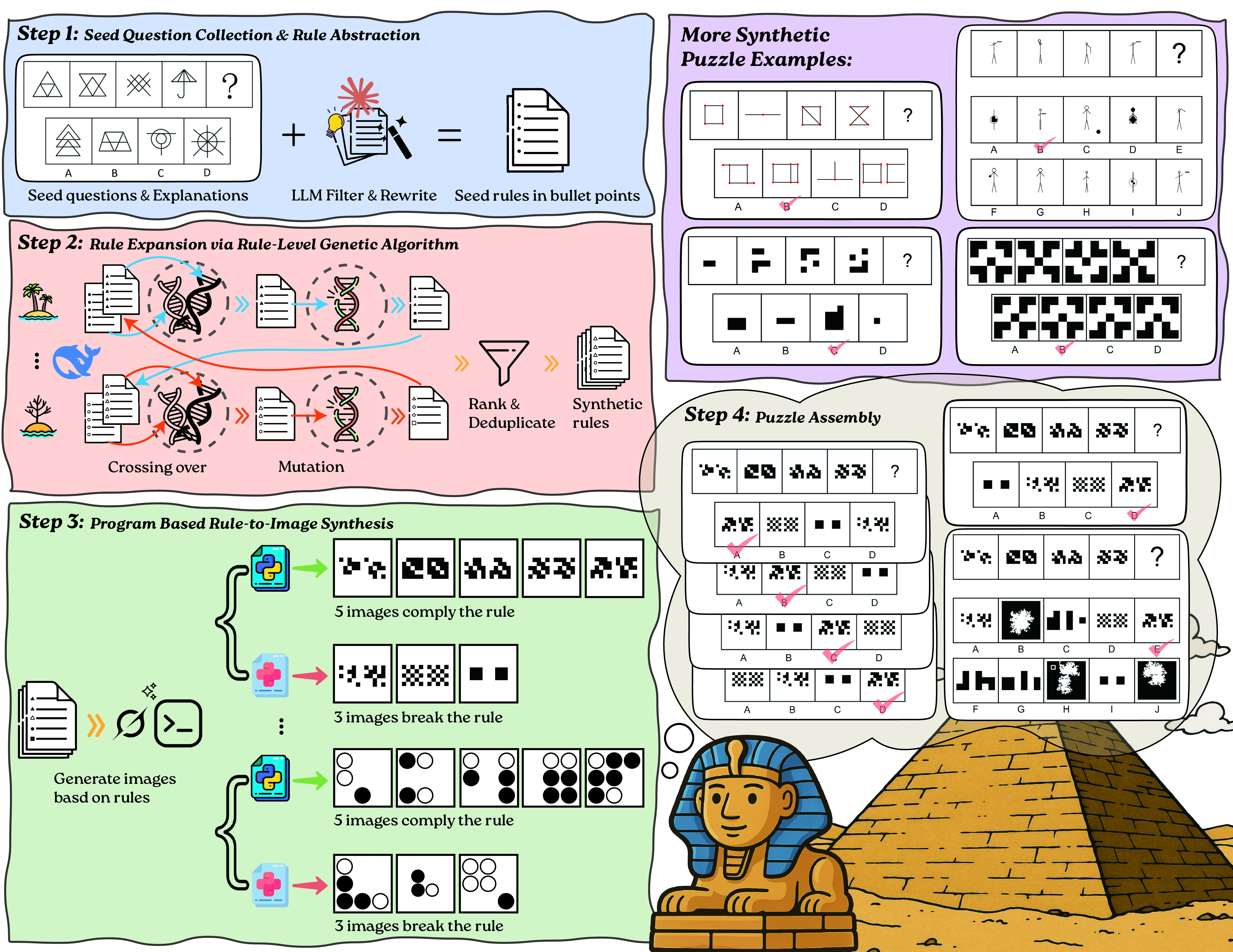

# 🦁 VisualSphinx: Large-Scale Synthetic Vision Logic Puzzles for RL

VisualSphinx is the largest fully-synthetic open-source dataset providing vision logic puzzles. It consists of over **660K** automatically generated logical visual puzzles. Each logical puzzle is grounded with an interpretable rule and accompanied by both correct answers and plausible distractors.

- 🌐 [Project Website](https://visualsphinx.github.io/) - Learn more about VisualSphinx

- 📖 [Technical Report](https://arxiv.org/abs/2505.23977) - Discover the methodology and technical details behind VisualSphinx

- 🔧 [GitHub Repo](https://github.com/VisualSphinx/VisualSphinx) - Access the complete pipeline used to produce VisualSphinx-V1

- 🤗 HF Datasets:

  - [VisualSphinx-V1 (Raw)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-V1-Raw);

  - [VisualSphinx-V1 (For RL)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-V1-RL-20K); [📍| You are here!]

  - [VisualSphinx-V1 (Benchmark)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-V1-Benchmark);

  - [VisualSphinx (Seeds)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-Seeds);

  - [VisualSphinx (Rules)](https://huggingface.co/datasets/VisualSphinx/VisualSphinx-V1-Rules).

## 📊 Dataset Details

### 🎯 Purpose

This dataset is **specifically curated for reinforcement learning (RL) applications**, containing 20K high-quality visual logic puzzles optimized for RL. It represents a carefully filtered and balanced subset from the VisualSphinx-V1-Raw collection.

### 📈 Dataset Splits

- **`train`**: Contains 20K visual logic puzzles optimized for RL training scenarios

### 🏗️ Dataset Structure

Each puzzle in the dataset contains the following fields:

| Field | Type | Description |

|-------|------|-------------|

| `id` | `string` | Unique identifier for each puzzle (format: number_variant) |

| `images` | `List[Image]` | Visual puzzle image with geometric patterns and logical relationships |

| `problem` | `string` | Standardized puzzle prompt for pattern completion |

| `choice` | `string` | JSON-formatted multiple choice options (4-10 options: A-J) |

| `answer` | `string` | Correct answer choice |

| `explanation` | `string` | Detailed rule-based explanations for logical reasoning |

| `has_duplicate` | `bool` | Flag indicating whether the puzzle itself contains duplicate images |

| `reasonableness` | `int32` | Logical coherence score (3-5 scale, filtered for quality) |

| `readability` | `int32` | Visual clarity score (3-5 scale, filtered for quality) |

| `accuracy` | `float32` | Pass rate |

### 📏 Dataset Statistics

- **Total Examples**: 20,000 carefully curated puzzles

- **Quality Filtering**: High-quality subset with reasonableness + readability ≥ 8

- **Complexity Range**: Variable choice counts (4-10 options) for diverse difficulty levels

- **RL Optimization**: Balanced difficulty distribution and no duplicates

- **Answer Distribution**: Balanced across all available choice options
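
The quality threshold stated above (reasonableness + readability ≥ 8) can be expressed as a small filter. This is a sketch only: it assumes rows are dicts carrying the `reasonableness` and `readability` fields from the table, and it is not confirmed to be the exact procedure used to build the subset. The function name and sample rows are hypothetical:

```python
def passes_quality_filter(row, min_total=8):
    """Keep puzzles whose two quality scores sum to at least min_total."""
    return row["reasonableness"] + row["readability"] >= min_total


# Hypothetical rows for illustration only.
rows = [
    {"id": "1_a", "reasonableness": 5, "readability": 3},
    {"id": "2_a", "reasonableness": 4, "readability": 3},
    {"id": "3_a", "reasonableness": 4, "readability": 4},
]
kept = [r["id"] for r in rows if passes_quality_filter(r)]
```

With both scores on a 3-5 scale, a sum of at least 8 rules out any puzzle that scores 3 on one axis unless it scores 5 on the other.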

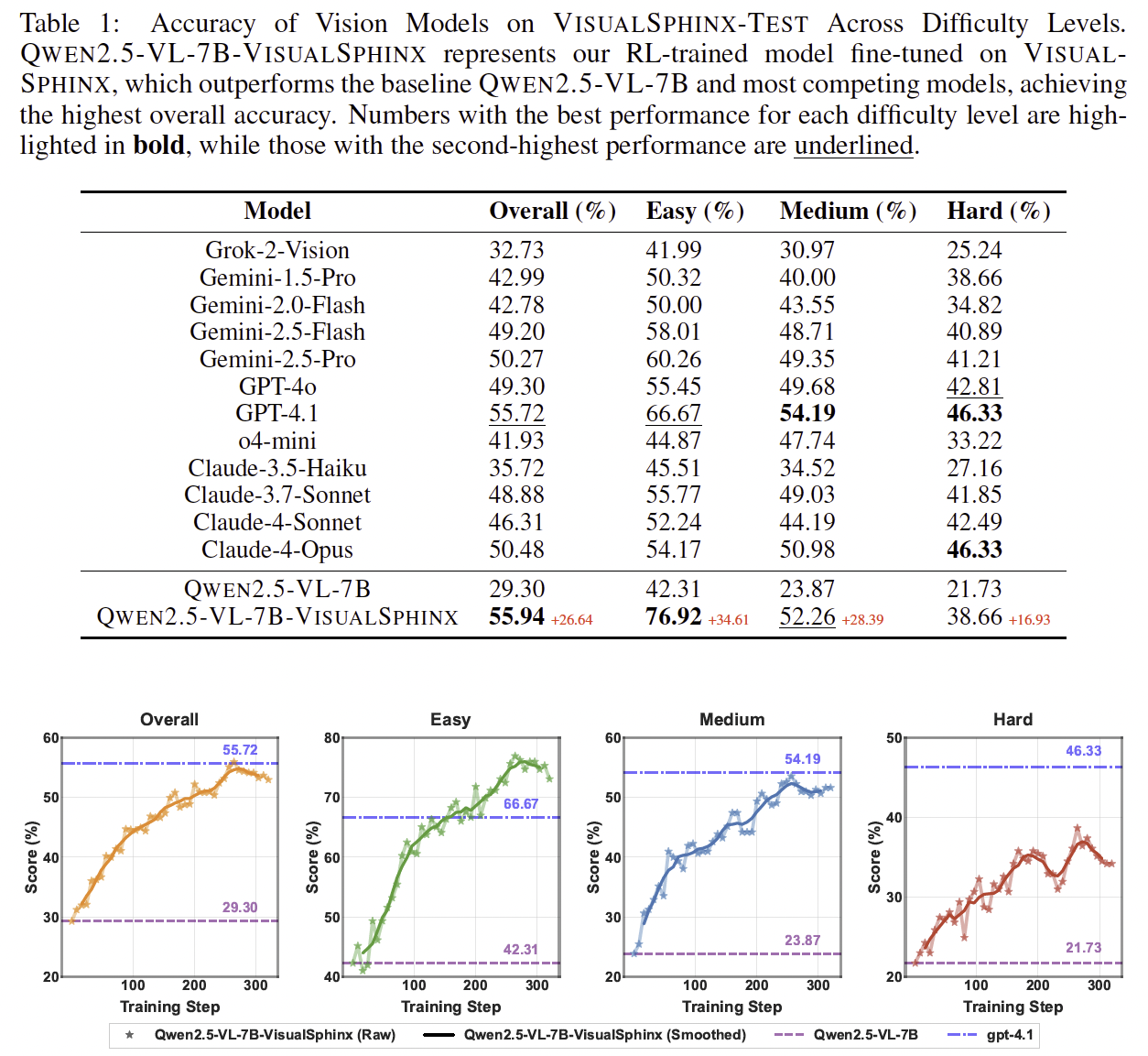

## ✨ Performance on Our Benchmarks

## 🔧 Other Information

**License**: Please follow [CC BY-NC 4.0](https://creativecommons.org/licenses/by-nc/4.0/deed.en).

**Contact**: Please contact [Yichen](mailto:yfeng42@uw.edu) by email.

## 📚 Citation

If you find the data or code useful, please cite:

```
@misc{feng2025visualsphinx,
  title={VisualSphinx: Large-Scale Synthetic Vision Logic Puzzles for RL},
  author={Yichen Feng and Zhangchen Xu and Fengqing Jiang and Yuetai Li and Bhaskar Ramasubramanian and Luyao Niu and Bill Yuchen Lin and Radha Poovendran},
  year={2025},
  eprint={2505.23977},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2505.23977},
}
```

|

Aravindh25/trossen_pick_high_bin_clothes_3cam_V0000001 | Aravindh25 | 2025-06-04T23:14:48Z | 0 | 0 | [

"task_categories:robotics",

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:parquet",

"modality:tabular",

"modality:timeseries",

"modality:video",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us",

"LeRobot",

"tutorial"

] | [

"robotics"

] | 2025-06-04T23:12:54Z | null | ---

license: apache-2.0

task_categories:

- robotics

tags:

- LeRobot

- tutorial

configs:

- config_name: default

data_files: data/*/*.parquet

---

This dataset was created using [LeRobot](https://github.com/huggingface/lerobot).

## Dataset Description

- **Homepage:** [More Information Needed]

- **Paper:** [More Information Needed]

- **License:** apache-2.0

## Dataset Structure

[meta/info.json](meta/info.json):

```json

{

"codebase_version": "v2.1",

"robot_type": "trossen_ai_stationary",

"total_episodes": 3,

"total_frames": 5135,

"total_tasks": 1,

"total_videos": 9,

"total_chunks": 1,

"chunks_size": 1000,

"fps": 20,

"splits": {

"train": "0:3"

},

"data_path": "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet",

"video_path": "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4",

"features": {

"action": {

"dtype": "float32",

"shape": [

14

],

"names": [

"left_joint_0",

"left_joint_1",

"left_joint_2",

"left_joint_3",

"left_joint_4",

"left_joint_5",

"left_joint_6",

"right_joint_0",

"right_joint_1",

"right_joint_2",

"right_joint_3",

"right_joint_4",

"right_joint_5",

"right_joint_6"

]

},

"observation.state": {

"dtype": "float32",

"shape": [

14

],

"names": [

"left_joint_0",

"left_joint_1",

"left_joint_2",

"left_joint_3",

"left_joint_4",

"left_joint_5",

"left_joint_6",

"right_joint_0",

"right_joint_1",

"right_joint_2",

"right_joint_3",

"right_joint_4",

"right_joint_5",

"right_joint_6"

]

},

"observation.images.cam_high": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.fps": 20.0,

"video.height": 480,

"video.width": 640,

"video.channels": 3,

"video.codec": "h264",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"has_audio": false

}

},

"observation.images.cam_left_wrist": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.fps": 20.0,

"video.height": 480,

"video.width": 640,

"video.channels": 3,

"video.codec": "h264",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"has_audio": false

}

},

"observation.images.cam_right_wrist": {

"dtype": "video",

"shape": [

480,

640,

3

],

"names": [

"height",

"width",

"channels"

],

"info": {

"video.fps": 20.0,

"video.height": 480,

"video.width": 640,

"video.channels": 3,

"video.codec": "h264",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,