Spaces:

Build error

Build error

amanSethSmava

commited on

Commit

·

6d314be

1

Parent(s):

ad9e404

new commit

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .DS_Store +0 -0

- LICENSE +21 -0

- README.md +169 -0

- app.py +0 -0

- datasets/__init__.py +0 -0

- datasets/image_dataset.py +29 -0

- docs/assets/diagram.webp +3 -0

- docs/assets/logo.webp +3 -0

- hair_swap.py +139 -0

- inference_server.py +5 -0

- losses/.DS_Store +0 -0

- losses/__init__.py +0 -0

- losses/lpips/__init__.py +160 -0

- losses/lpips/base_model.py +58 -0

- losses/lpips/dist_model.py +284 -0

- losses/lpips/networks_basic.py +187 -0

- losses/lpips/pretrained_networks.py +181 -0

- losses/masked_lpips/__init__.py +199 -0

- losses/masked_lpips/base_model.py +65 -0

- losses/masked_lpips/dist_model.py +325 -0

- losses/masked_lpips/networks_basic.py +331 -0

- losses/masked_lpips/pretrained_networks.py +190 -0

- losses/pp_losses.py +677 -0

- losses/style/__init__.py +0 -0

- losses/style/custom_loss.py +67 -0

- losses/style/style_loss.py +113 -0

- losses/style/vgg_activations.py +187 -0

- losses/vgg_loss.py +51 -0

- main.py +80 -0

- models/.DS_Store +0 -0

- models/Alignment.py +181 -0

- models/Blending.py +81 -0

- models/CtrlHair/.gitignore +8 -0

- models/CtrlHair/README.md +270 -0

- models/CtrlHair/color_texture_branch/__init__.py +8 -0

- models/CtrlHair/color_texture_branch/config.py +141 -0

- models/CtrlHair/color_texture_branch/dataset.py +158 -0

- models/CtrlHair/color_texture_branch/model.py +159 -0

- models/CtrlHair/color_texture_branch/model_eigengan.py +89 -0

- models/CtrlHair/color_texture_branch/module.py +61 -0

- models/CtrlHair/color_texture_branch/predictor/__init__.py +8 -0

- models/CtrlHair/color_texture_branch/predictor/predictor_config.py +119 -0

- models/CtrlHair/color_texture_branch/predictor/predictor_model.py +41 -0

- models/CtrlHair/color_texture_branch/predictor/predictor_solver.py +51 -0

- models/CtrlHair/color_texture_branch/predictor/predictor_train.py +159 -0

- models/CtrlHair/color_texture_branch/script_find_direction.py +74 -0

- models/CtrlHair/color_texture_branch/solver.py +299 -0

- models/CtrlHair/color_texture_branch/train.py +166 -0

- models/CtrlHair/color_texture_branch/validation_in_train.py +299 -0

- models/CtrlHair/common_dataset.py +104 -0

.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2024 AIRI - Artificial Intelligence Research Institute

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

ADDED

|

@@ -0,0 +1,169 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# HairFastGAN: Realistic and Robust Hair Transfer with a Fast Encoder-Based Approach

|

| 2 |

+

|

| 3 |

+

<a href="https://arxiv.org/abs/2404.01094"><img src="https://img.shields.io/badge/arXiv-2404.01094-b31b1b.svg" height=22.5></a>

|

| 4 |

+

<a href="https://huggingface.co/spaces/AIRI-Institute/HairFastGAN"><img src="https://huggingface.co/datasets/huggingface/badges/resolve/main/open-in-hf-spaces-md.svg" height=22.5></a>

|

| 5 |

+

<a href="https://colab.research.google.com/#fileId=https://huggingface.co/AIRI-Institute/HairFastGAN/blob/main/notebooks/HairFast_inference.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" height=22.5></a>

|

| 6 |

+

[](./LICENSE)

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

> Our paper addresses the complex task of transferring a hairstyle from a reference image to an input photo for virtual hair try-on. This task is challenging due to the need to adapt to various photo poses, the sensitivity of hairstyles, and the lack of objective metrics. The current state of the art hairstyle transfer methods use an optimization process for different parts of the approach, making them inexcusably slow. At the same time, faster encoder-based models are of very low quality because they either operate in StyleGAN's W+ space or use other low-dimensional image generators. Additionally, both approaches have a problem with hairstyle transfer when the source pose is very different from the target pose, because they either don't consider the pose at all or deal with it inefficiently. In our paper, we present the HairFast model, which uniquely solves these problems and achieves high resolution, near real-time performance, and superior reconstruction compared to optimization problem-based methods. Our solution includes a new architecture operating in the FS latent space of StyleGAN, an enhanced inpainting approach, and improved encoders for better alignment, color transfer, and a new encoder for post-processing. The effectiveness of our approach is demonstrated on realism metrics after random hairstyle transfer and reconstruction when the original hairstyle is transferred. In the most difficult scenario of transferring both shape and color of a hairstyle from different images, our method performs in less than a second on the Nvidia V100.

|

| 10 |

+

>

|

| 11 |

+

|

| 12 |

+

<p align="center">

|

| 13 |

+

<img src="docs/assets/logo.webp" alt="Teaser"/>

|

| 14 |

+

<br>

|

| 15 |

+

The proposed HairFast framework allows to edit a hairstyle on an arbitrary photo based on an example from other photos. Here we have an example of how the method works by transferring a hairstyle from one photo and a hair color from another.

|

| 16 |

+

</p>

|

| 17 |

+

|

| 18 |

+

## Updates

|

| 19 |

+

|

| 20 |

+

- [25/09/2024] 🎉🎉🎉 HairFastGAN has been accepted by [NeurIPS 2024](https://nips.cc/virtual/2024/poster/93397).

|

| 21 |

+

- [24/05/2024] 🌟🌟🌟 Release of the [official demo](https://huggingface.co/spaces/AIRI-Institute/HairFastGAN) on Hugging Face 🤗.

|

| 22 |

+

- [01/04/2024] 🔥🔥🔥 HairFastGAN release.

|

| 23 |

+

|

| 24 |

+

## Prerequisites

|

| 25 |

+

You need following hardware and python version to run our method.

|

| 26 |

+

- Linux

|

| 27 |

+

- NVIDIA GPU + CUDA CuDNN

|

| 28 |

+

- Python 3.10

|

| 29 |

+

- PyTorch 1.13.1+

|

| 30 |

+

|

| 31 |

+

## Installation

|

| 32 |

+

|

| 33 |

+

* Clone this repo:

|

| 34 |

+

```bash

|

| 35 |

+

git clone https://github.com/AIRI-Institute/HairFastGAN

|

| 36 |

+

cd HairFastGAN

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

* Download all pretrained models:

|

| 40 |

+

```bash

|

| 41 |

+

git clone https://huggingface.co/AIRI-Institute/HairFastGAN

|

| 42 |

+

cd HairFastGAN && git lfs pull && cd ..

|

| 43 |

+

mv HairFastGAN/pretrained_models pretrained_models

|

| 44 |

+

mv HairFastGAN/input input

|

| 45 |

+

rm -rf HairFastGAN

|

| 46 |

+

```

|

| 47 |

+

|

| 48 |

+

* Setting the environment

|

| 49 |

+

|

| 50 |

+

**Option 1 [recommended]**, install [Poetry](https://python-poetry.org/docs/) and then:

|

| 51 |

+

```bash

|

| 52 |

+

poetry install

|

| 53 |

+

```

|

| 54 |

+

|

| 55 |

+

**Option 2**, just install the dependencies in your environment:

|

| 56 |

+

```bash

|

| 57 |

+

pip install -r requirements.txt

|

| 58 |

+

```

|

| 59 |

+

|

| 60 |

+

## Inference

|

| 61 |

+

You can use `main.py` to run the method, either for a single run or for a batch of experiments.

|

| 62 |

+

|

| 63 |

+

* An example of running a single experiment:

|

| 64 |

+

|

| 65 |

+

```

|

| 66 |

+

python main.py --face_path=6.png --shape_path=7.png --color_path=8.png \

|

| 67 |

+

--input_dir=input --result_path=output/result.png

|

| 68 |

+

```

|

| 69 |

+

|

| 70 |

+

* To run the batch version, first create an image triples file (face/shape/color):

|

| 71 |

+

```

|

| 72 |

+

cat > example.txt << EOF

|

| 73 |

+

6.png 7.png 8.png

|

| 74 |

+

8.png 4.jpg 5.jpg

|

| 75 |

+

EOF

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

And now you can run the method:

|

| 79 |

+

```

|

| 80 |

+

python main.py --file_path=example.txt --input_dir=input --output_dir=output

|

| 81 |

+

```

|

| 82 |

+

|

| 83 |

+

* You can use HairFast in the code directly:

|

| 84 |

+

|

| 85 |

+

```python

|

| 86 |

+

from hair_swap import HairFast, get_parser

|

| 87 |

+

|

| 88 |

+

# Init HairFast

|

| 89 |

+

hair_fast = HairFast(get_parser().parse_args([]))

|

| 90 |

+

|

| 91 |

+

# Inference

|

| 92 |

+

result = hair_fast(face_img, shape_img, color_img)

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

See the code for input parameters and output formats.

|

| 96 |

+

|

| 97 |

+

* Alternatively, you can use our [Colab Notebook](https://colab.research.google.com/#fileId=https://huggingface.co/AIRI-Institute/HairFastGAN/blob/main/notebooks/HairFast_inference.ipynb) to prepare the environment, download the code, pretrained weights, and allow you to run experiments with a convenient form.

|

| 98 |

+

|

| 99 |

+

* You can also try our method on the [Hugging Face demo](https://huggingface.co/spaces/AIRI-Institute/HairFastGAN) 🤗.

|

| 100 |

+

|

| 101 |

+

## Scripts

|

| 102 |

+

|

| 103 |

+

There is a list of scripts below, see arguments via --help for details.

|

| 104 |

+

|

| 105 |

+

| Path | Description <img width=200>

|

| 106 |

+

|:----------------------------------------| :---

|

| 107 |

+

| scripts/align_face.py | Processing of raw photos for inference

|

| 108 |

+

| scripts/fid_metric.py | Metrics calculation

|

| 109 |

+

| scripts/rotate_gen.py | Dataset generation for rotate encoder training

|

| 110 |

+

| scripts/blending_gen.py | Dataset generation for color encoder training

|

| 111 |

+

| scripts/pp_gen.py | Dataset generation for refinement encoder training

|

| 112 |

+

| scripts/rotate_train.py | Rotate encoder training

|

| 113 |

+

| scripts/blending_train.py | Color encoder training

|

| 114 |

+

| scripts/pp_train.py | Refinement encoder training

|

| 115 |

+

|

| 116 |

+

|

| 117 |

+

## Training

|

| 118 |

+

For training, you need to generate a dataset and then run the scripts for training. See the scripts section above.

|

| 119 |

+

|

| 120 |

+

We use [Weights & Biases](https://wandb.ai/home) to track experiments. Before training, you should put your W&B API key into the `WANDB_KEY` environment variable.

|

| 121 |

+

|

| 122 |

+

## Method diagram

|

| 123 |

+

|

| 124 |

+

<p align="center">

|

| 125 |

+

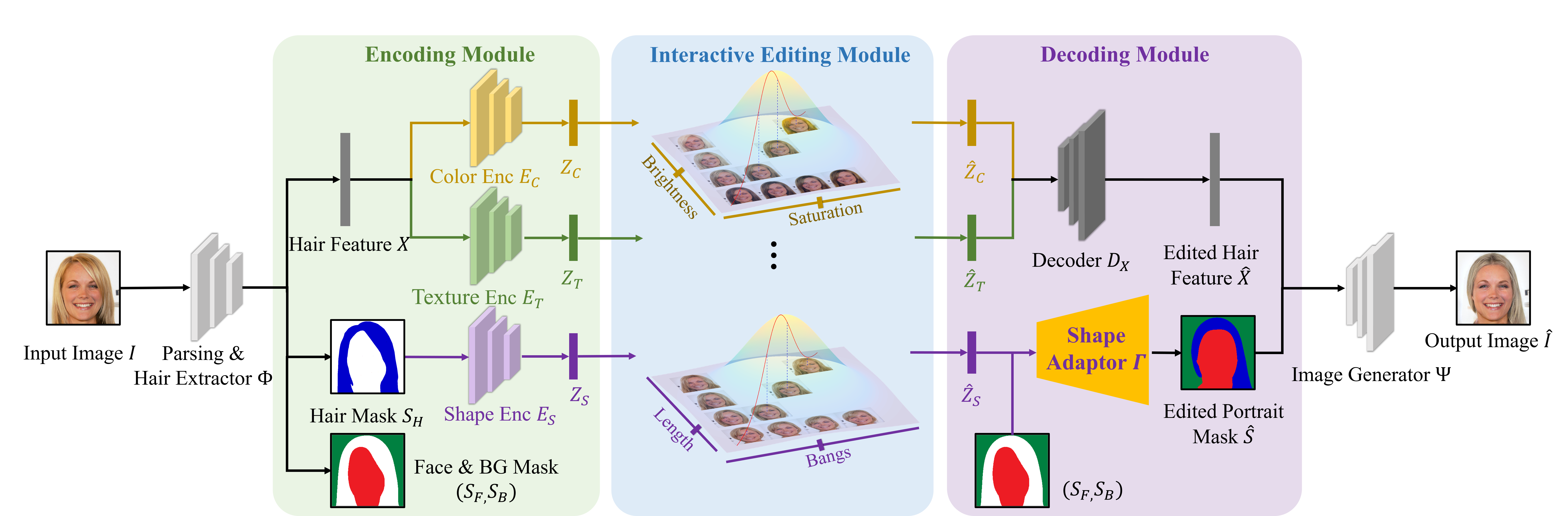

<img src="https://huggingface.co/AIRI-Institute/HairFastGAN/resolve/main/docs/assets/diagram.webp" alt="Diagram"/>

|

| 126 |

+

<br>

|

| 127 |

+

Overview of HairFast: the images first pass through the Pose alignment module, which generates a pose-aligned face mask with the desired hair shape. Then we transfer the desired hairstyle shape using Shape alignment and the desired hair color using Color alignment. In the last step, Refinement alignment returns the lost details of the original image where they are needed.

|

| 128 |

+

</p>

|

| 129 |

+

|

| 130 |

+

## Repository structure

|

| 131 |

+

|

| 132 |

+

.

|

| 133 |

+

├── 📂 datasets # Implementation of torch datasets for inference

|

| 134 |

+

├── 📂 docs # Folder with method diagram and teaser

|

| 135 |

+

├── 📂 models # Folder containting all the models

|

| 136 |

+

│ ├── ...

|

| 137 |

+

│ ├── 📄 Embedding.py # Implementation of Embedding module

|

| 138 |

+

│ ├── 📄 Alignment.py # Implementation of Pose and Shape alignment modules

|

| 139 |

+

│ ├── 📄 Blending.py # Implementation of Color and Refinement alignment modules

|

| 140 |

+

│ ├── 📄 Encoders.py # Implementation of encoder architectures

|

| 141 |

+

│ └── 📄 Net.py # Implementation of basic models

|

| 142 |

+

│

|

| 143 |

+

├── 📂 losses # Folder containing various loss criterias for training

|

| 144 |

+

├── 📂 scripts # Folder with various scripts

|

| 145 |

+

├── 📂 utils # Folder with utility functions

|

| 146 |

+

│

|

| 147 |

+

├── 📜 poetry.lock # Records exact dependency versions.

|

| 148 |

+

├── 📜 pyproject.toml # Poetry configuration for dependencies.

|

| 149 |

+

├── 📜 requirements.txt # Lists required Python packages.

|

| 150 |

+

├── 📄 hair_swap.py # Implementation of the HairFast main class

|

| 151 |

+

└── 📄 main.py # Script for inference

|

| 152 |

+

|

| 153 |

+

## References & Acknowledgments

|

| 154 |

+

|

| 155 |

+

The repository was started from [Barbershop](https://github.com/ZPdesu/Barbershop).

|

| 156 |

+

|

| 157 |

+

The code [CtrlHair](https://github.com/XuyangGuo/CtrlHair), [SEAN](https://github.com/ZPdesu/SEAN), [HairCLIP](https://github.com/wty-ustc/HairCLIP), [FSE](https://github.com/InterDigitalInc/FeatureStyleEncoder), [E4E](https://github.com/omertov/encoder4editing) and [STAR](https://github.com/ZhenglinZhou/STAR) was also used.

|

| 158 |

+

|

| 159 |

+

## Citation

|

| 160 |

+

|

| 161 |

+

If you use this code for your research, please cite our paper:

|

| 162 |

+

```

|

| 163 |

+

@article{nikolaev2024hairfastgan,

|

| 164 |

+

title={HairFastGAN: Realistic and Robust Hair Transfer with a Fast Encoder-Based Approach},

|

| 165 |

+

author={Nikolaev, Maxim and Kuznetsov, Mikhail and Vetrov, Dmitry and Alanov, Aibek},

|

| 166 |

+

journal={arXiv preprint arXiv:2404.01094},

|

| 167 |

+

year={2024}

|

| 168 |

+

}

|

| 169 |

+

```

|

app.py

ADDED

|

File without changes

|

datasets/__init__.py

ADDED

|

File without changes

|

datasets/image_dataset.py

ADDED

|

@@ -0,0 +1,29 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch

|

| 2 |

+

from torch.utils.data import Dataset

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

class ImagesDataset(Dataset):

|

| 6 |

+

def __init__(self, images: dict[torch.Tensor, list[str]] | list[torch.Tensor]):

|

| 7 |

+

if isinstance(images, list):

|

| 8 |

+

images = dict.fromkeys(images)

|

| 9 |

+

|

| 10 |

+

self.images = list(images)

|

| 11 |

+

self.names = list(images.values())

|

| 12 |

+

|

| 13 |

+

def __len__(self):

|

| 14 |

+

return len(self.images)

|

| 15 |

+

|

| 16 |

+

def __getitem__(self, index):

|

| 17 |

+

image = self.images[index]

|

| 18 |

+

|

| 19 |

+

if image.dtype is torch.uint8:

|

| 20 |

+

image = image / 255

|

| 21 |

+

|

| 22 |

+

names = self.names[index]

|

| 23 |

+

return image, names

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def image_collate(batch):

|

| 27 |

+

images = torch.stack([item[0] for item in batch])

|

| 28 |

+

names = [item[1] for item in batch]

|

| 29 |

+

return images, names

|

docs/assets/diagram.webp

ADDED

|

Git LFS Details

|

docs/assets/logo.webp

ADDED

|

Git LFS Details

|

hair_swap.py

ADDED

|

@@ -0,0 +1,139 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

import typing as tp

|

| 3 |

+

from collections import defaultdict

|

| 4 |

+

from functools import wraps

|

| 5 |

+

from pathlib import Path

|

| 6 |

+

|

| 7 |

+

import numpy as np

|

| 8 |

+

import torch

|

| 9 |

+

import torchvision.transforms.functional as F

|

| 10 |

+

from PIL import Image

|

| 11 |

+

from torchvision.io import read_image, ImageReadMode

|

| 12 |

+

|

| 13 |

+

from models.Alignment import Alignment

|

| 14 |

+

from models.Blending import Blending

|

| 15 |

+

from models.Embedding import Embedding

|

| 16 |

+

from models.Net import Net

|

| 17 |

+

from utils.image_utils import equal_replacer

|

| 18 |

+

from utils.seed import seed_setter

|

| 19 |

+

from utils.shape_predictor import align_face

|

| 20 |

+

from utils.time import bench_session

|

| 21 |

+

|

| 22 |

+

TImage = tp.TypeVar('TImage', torch.Tensor, Image.Image, np.ndarray)

|

| 23 |

+

TPath = tp.TypeVar('TPath', Path, str)

|

| 24 |

+

TReturn = tp.TypeVar('TReturn', torch.Tensor, tuple[torch.Tensor, ...])

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

class HairFast:

|

| 28 |

+

"""

|

| 29 |

+

HairFast implementation with hairstyle transfer interface

|

| 30 |

+

"""

|

| 31 |

+

|

| 32 |

+

def __init__(self, args):

|

| 33 |

+

self.args = args

|

| 34 |

+

self.net = Net(self.args)

|

| 35 |

+

self.embed = Embedding(args, net=self.net)

|

| 36 |

+

self.align = Alignment(args, self.embed.get_e4e_embed, net=self.net)

|

| 37 |

+

self.blend = Blending(args, net=self.net)

|

| 38 |

+

|

| 39 |

+

@seed_setter

|

| 40 |

+

@bench_session

|

| 41 |

+

def __swap_from_tensors(self, face: torch.Tensor, shape: torch.Tensor, color: torch.Tensor,

|

| 42 |

+

**kwargs) -> torch.Tensor:

|

| 43 |

+

images_to_name = defaultdict(list)

|

| 44 |

+

for image, name in zip((face, shape, color), ('face', 'shape', 'color')):

|

| 45 |

+

images_to_name[image].append(name)

|

| 46 |

+

|

| 47 |

+

# Embedding stage

|

| 48 |

+

name_to_embed = self.embed.embedding_images(images_to_name, **kwargs)

|

| 49 |

+

|

| 50 |

+

# Alignment stage

|

| 51 |

+

align_shape = self.align.align_images('face', 'shape', name_to_embed, **kwargs)

|

| 52 |

+

|

| 53 |

+

# Shape Module stage for blending

|

| 54 |

+

if shape is not color:

|

| 55 |

+

align_color = self.align.shape_module('face', 'color', name_to_embed, **kwargs)

|

| 56 |

+

else:

|

| 57 |

+

align_color = align_shape

|

| 58 |

+

|

| 59 |

+

# Blending and Post Process stage

|

| 60 |

+

final_image = self.blend.blend_images(align_shape, align_color, name_to_embed, **kwargs)

|

| 61 |

+

return final_image

|

| 62 |

+

|

| 63 |

+

def swap(self, face_img: TImage | TPath, shape_img: TImage | TPath, color_img: TImage | TPath,

|

| 64 |

+

benchmark=False, align=False, seed=None, exp_name=None, **kwargs) -> TReturn:

|

| 65 |

+

"""

|

| 66 |

+

Run HairFast on the input images to transfer hair shape and color to the desired images.

|

| 67 |

+

:param face_img: face image in Tensor, PIL Image, array or file path format

|

| 68 |

+

:param shape_img: shape image in Tensor, PIL Image, array or file path format

|

| 69 |

+

:param color_img: color image in Tensor, PIL Image, array or file path format

|

| 70 |

+

:param benchmark: starts counting the speed of the session

|

| 71 |

+

:param align: for arbitrary photos crops images to faces

|

| 72 |

+

:param seed: fixes seed for reproducibility, default 3407

|

| 73 |

+

:param exp_name: used as a folder name when 'save_all' model is enabled

|

| 74 |

+

:return: returns the final image as a Tensor

|

| 75 |

+

"""

|

| 76 |

+

images: list[torch.Tensor] = []

|

| 77 |

+

path_to_images: dict[TPath, torch.Tensor] = {}

|

| 78 |

+

|

| 79 |

+

for img in (face_img, shape_img, color_img):

|

| 80 |

+

if isinstance(img, (torch.Tensor, Image.Image, np.ndarray)):

|

| 81 |

+

if not isinstance(img, torch.Tensor):

|

| 82 |

+

img = F.to_tensor(img)

|

| 83 |

+

elif isinstance(img, (Path, str)):

|

| 84 |

+

path_img = img

|

| 85 |

+

if path_img not in path_to_images:

|

| 86 |

+

path_to_images[path_img] = read_image(str(path_img), mode=ImageReadMode.RGB)

|

| 87 |

+

img = path_to_images[path_img]

|

| 88 |

+

else:

|

| 89 |

+

raise TypeError(f'Unsupported image format {type(img)}')

|

| 90 |

+

|

| 91 |

+

images.append(img)

|

| 92 |

+

|

| 93 |

+

if align:

|

| 94 |

+

images = align_face(images)

|

| 95 |

+

images = equal_replacer(images)

|

| 96 |

+

|

| 97 |

+

final_image = self.__swap_from_tensors(*images, seed=seed, benchmark=benchmark, exp_name=exp_name, **kwargs)

|

| 98 |

+

|

| 99 |

+

if align:

|

| 100 |

+

return final_image, *images

|

| 101 |

+

return final_image

|

| 102 |

+

|

| 103 |

+

@wraps(swap)

|

| 104 |

+

def __call__(self, *args, **kwargs):

|

| 105 |

+

return self.swap(*args, **kwargs)

|

| 106 |

+

|

| 107 |

+

|

| 108 |

+

def get_parser():

|

| 109 |

+

parser = argparse.ArgumentParser(description='HairFast')

|

| 110 |

+

|

| 111 |

+

# I/O arguments

|

| 112 |

+

parser.add_argument('--save_all_dir', type=Path, default=Path('output'),

|

| 113 |

+

help='the directory to save the latent codes and inversion images')

|

| 114 |

+

|

| 115 |

+

# StyleGAN2 setting

|

| 116 |

+

parser.add_argument('--size', type=int, default=1024)

|

| 117 |

+

parser.add_argument('--ckpt', type=str, default="pretrained_models/StyleGAN/ffhq.pt")

|

| 118 |

+

parser.add_argument('--channel_multiplier', type=int, default=2)

|

| 119 |

+

parser.add_argument('--latent', type=int, default=512)

|

| 120 |

+

parser.add_argument('--n_mlp', type=int, default=8)

|

| 121 |

+

|

| 122 |

+

# Arguments

|

| 123 |

+

parser.add_argument('--device', type=str, default='cuda')

|

| 124 |

+

parser.add_argument('--batch_size', type=int, default=3, help='batch size for encoding images')

|

| 125 |

+

parser.add_argument('--save_all', action='store_true', help='save and print mode information')

|

| 126 |

+

|

| 127 |

+

# HairFast setting

|

| 128 |

+

parser.add_argument('--mixing', type=float, default=0.95, help='hair blending in alignment')

|

| 129 |

+

parser.add_argument('--smooth', type=int, default=5, help='dilation and erosion parameter')

|

| 130 |

+

parser.add_argument('--rotate_checkpoint', type=str, default='pretrained_models/Rotate/rotate_best.pth')

|

| 131 |

+

parser.add_argument('--blending_checkpoint', type=str, default='pretrained_models/Blending/checkpoint.pth')

|

| 132 |

+

parser.add_argument('--pp_checkpoint', type=str, default='pretrained_models/PostProcess/pp_model.pth')

|

| 133 |

+

return parser

|

| 134 |

+

|

| 135 |

+

|

| 136 |

+

if __name__ == '__main__':

|

| 137 |

+

model_args = get_parser()

|

| 138 |

+

args = model_args.parse_args()

|

| 139 |

+

hair_fast = HairFast(args)

|

inference_server.py

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

if __name__ == "__main__":

|

| 2 |

+

server = grpc.server(...)

|

| 3 |

+

...

|

| 4 |

+

server.start()

|

| 5 |

+

server.wait_for_termination()

|

losses/.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

losses/__init__.py

ADDED

|

File without changes

|

losses/lpips/__init__.py

ADDED

|

@@ -0,0 +1,160 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

from __future__ import absolute_import

|

| 3 |

+

from __future__ import division

|

| 4 |

+

from __future__ import print_function

|

| 5 |

+

|

| 6 |

+

import numpy as np

|

| 7 |

+

from skimage.metrics import structural_similarity

|

| 8 |

+

import torch

|

| 9 |

+

from torch.autograd import Variable

|

| 10 |

+

|

| 11 |

+

from ..lpips import dist_model

|

| 12 |

+

|

| 13 |

+

class PerceptualLoss(torch.nn.Module):

|

| 14 |

+

def __init__(self, model='net-lin', net='alex', colorspace='rgb', spatial=False, use_gpu=True, gpu_ids=[0]): # VGG using our perceptually-learned weights (LPIPS metric)

|

| 15 |

+

# def __init__(self, model='net', net='vgg', use_gpu=True): # "default" way of using VGG as a perceptual loss

|

| 16 |

+

super(PerceptualLoss, self).__init__()

|

| 17 |

+

print('Setting up Perceptual loss...')

|

| 18 |

+

self.use_gpu = use_gpu

|

| 19 |

+

self.spatial = spatial

|

| 20 |

+

self.gpu_ids = gpu_ids

|

| 21 |

+

self.model = dist_model.DistModel()

|

| 22 |

+

self.model.initialize(model=model, net=net, use_gpu=use_gpu, colorspace=colorspace, spatial=self.spatial, gpu_ids=gpu_ids)

|

| 23 |

+

print('...[%s] initialized'%self.model.name())

|

| 24 |

+

print('...Done')

|

| 25 |

+

|

| 26 |

+

def forward(self, pred, target, normalize=False):

|

| 27 |

+

"""

|

| 28 |

+

Pred and target are Variables.

|

| 29 |

+

If normalize is True, assumes the images are between [0,1] and then scales them between [-1,+1]

|

| 30 |

+

If normalize is False, assumes the images are already between [-1,+1]

|

| 31 |

+

|

| 32 |

+

Inputs pred and target are Nx3xHxW

|

| 33 |

+

Output pytorch Variable N long

|

| 34 |

+

"""

|

| 35 |

+

|

| 36 |

+

if normalize:

|

| 37 |

+

target = 2 * target - 1

|

| 38 |

+

pred = 2 * pred - 1

|

| 39 |

+

|

| 40 |

+

return self.model.forward(target, pred)

|

| 41 |

+

|

| 42 |

+

def normalize_tensor(in_feat,eps=1e-10):

|

| 43 |

+

norm_factor = torch.sqrt(torch.sum(in_feat**2,dim=1,keepdim=True))

|

| 44 |

+

return in_feat/(norm_factor+eps)

|

| 45 |

+

|

| 46 |

+

def l2(p0, p1, range=255.):

|

| 47 |

+

return .5*np.mean((p0 / range - p1 / range)**2)

|

| 48 |

+

|

| 49 |

+

def psnr(p0, p1, peak=255.):

|

| 50 |

+

return 10*np.log10(peak**2/np.mean((1.*p0-1.*p1)**2))

|

| 51 |

+

|

| 52 |

+

def dssim(p0, p1, range=255.):

|

| 53 |

+

return (1 - structural_similarity(p0, p1, data_range=range, multichannel=True)) / 2.

|

| 54 |

+

|

| 55 |

+

def rgb2lab(in_img,mean_cent=False):

|

| 56 |

+

from skimage import color

|

| 57 |

+

img_lab = color.rgb2lab(in_img)

|

| 58 |

+

if(mean_cent):

|

| 59 |

+

img_lab[:,:,0] = img_lab[:,:,0]-50

|

| 60 |

+

return img_lab

|

| 61 |

+

|

| 62 |

+

def tensor2np(tensor_obj):

|

| 63 |

+

# change dimension of a tensor object into a numpy array

|

| 64 |

+

return tensor_obj[0].cpu().float().numpy().transpose((1,2,0))

|

| 65 |

+

|

| 66 |

+

def np2tensor(np_obj):

|

| 67 |

+

# change dimenion of np array into tensor array

|

| 68 |

+

return torch.Tensor(np_obj[:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

|

| 69 |

+

|

| 70 |

+

def tensor2tensorlab(image_tensor,to_norm=True,mc_only=False):

|

| 71 |

+

# image tensor to lab tensor

|

| 72 |

+

from skimage import color

|

| 73 |

+

|

| 74 |

+

img = tensor2im(image_tensor)

|

| 75 |

+

img_lab = color.rgb2lab(img)

|

| 76 |

+

if(mc_only):

|

| 77 |

+

img_lab[:,:,0] = img_lab[:,:,0]-50

|

| 78 |

+

if(to_norm and not mc_only):

|

| 79 |

+

img_lab[:,:,0] = img_lab[:,:,0]-50

|

| 80 |

+

img_lab = img_lab/100.

|

| 81 |

+

|

| 82 |

+

return np2tensor(img_lab)

|

| 83 |

+

|

| 84 |

+

def tensorlab2tensor(lab_tensor,return_inbnd=False):

|

| 85 |

+

from skimage import color

|

| 86 |

+

import warnings

|

| 87 |

+

warnings.filterwarnings("ignore")

|

| 88 |

+

|

| 89 |

+

lab = tensor2np(lab_tensor)*100.

|

| 90 |

+

lab[:,:,0] = lab[:,:,0]+50

|

| 91 |

+

|

| 92 |

+

rgb_back = 255.*np.clip(color.lab2rgb(lab.astype('float')),0,1)

|

| 93 |

+

if(return_inbnd):

|

| 94 |

+

# convert back to lab, see if we match

|

| 95 |

+

lab_back = color.rgb2lab(rgb_back.astype('uint8'))

|

| 96 |

+

mask = 1.*np.isclose(lab_back,lab,atol=2.)

|

| 97 |

+

mask = np2tensor(np.prod(mask,axis=2)[:,:,np.newaxis])

|

| 98 |

+

return (im2tensor(rgb_back),mask)

|

| 99 |

+

else:

|

| 100 |

+

return im2tensor(rgb_back)

|

| 101 |

+

|

| 102 |

+

def rgb2lab(input):

|

| 103 |

+

from skimage import color

|

| 104 |

+

return color.rgb2lab(input / 255.)

|

| 105 |

+

|

| 106 |

+

def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=255./2.):

|

| 107 |

+

image_numpy = image_tensor[0].cpu().float().numpy()

|

| 108 |

+

image_numpy = (np.transpose(image_numpy, (1, 2, 0)) + cent) * factor

|

| 109 |

+

return image_numpy.astype(imtype)

|

| 110 |

+

|

| 111 |

+

def im2tensor(image, imtype=np.uint8, cent=1., factor=255./2.):

|

| 112 |

+

return torch.Tensor((image / factor - cent)

|

| 113 |

+

[:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

|

| 114 |

+

|

| 115 |

+

def tensor2vec(vector_tensor):

|

| 116 |

+

return vector_tensor.data.cpu().numpy()[:, :, 0, 0]

|

| 117 |

+

|

| 118 |

+

def voc_ap(rec, prec, use_07_metric=False):

|

| 119 |

+

""" ap = voc_ap(rec, prec, [use_07_metric])

|

| 120 |

+

Compute VOC AP given precision and recall.

|

| 121 |

+

If use_07_metric is true, uses the

|

| 122 |

+

VOC 07 11 point method (default:False).

|

| 123 |

+

"""

|

| 124 |

+

if use_07_metric:

|

| 125 |

+

# 11 point metric

|

| 126 |

+

ap = 0.

|

| 127 |

+

for t in np.arange(0., 1.1, 0.1):

|

| 128 |

+

if np.sum(rec >= t) == 0:

|

| 129 |

+

p = 0

|

| 130 |

+

else:

|

| 131 |

+

p = np.max(prec[rec >= t])

|

| 132 |

+

ap = ap + p / 11.

|

| 133 |

+

else:

|

| 134 |

+

# correct AP calculation

|

| 135 |

+

# first append sentinel values at the end

|

| 136 |

+

mrec = np.concatenate(([0.], rec, [1.]))

|

| 137 |

+

mpre = np.concatenate(([0.], prec, [0.]))

|

| 138 |

+

|

| 139 |

+

# compute the precision envelope

|

| 140 |

+

for i in range(mpre.size - 1, 0, -1):

|

| 141 |

+

mpre[i - 1] = np.maximum(mpre[i - 1], mpre[i])

|

| 142 |

+

|

| 143 |

+

# to calculate area under PR curve, look for points

|

| 144 |

+

# where X axis (recall) changes value

|

| 145 |

+

i = np.where(mrec[1:] != mrec[:-1])[0]

|

| 146 |

+

|

| 147 |

+

# and sum (\Delta recall) * prec

|

| 148 |

+

ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])

|

| 149 |

+

return ap

|

| 150 |

+

|

| 151 |

+

def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=255./2.):

|

| 152 |

+

# def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=1.):

|

| 153 |

+

image_numpy = image_tensor[0].cpu().float().numpy()

|

| 154 |

+

image_numpy = (np.transpose(image_numpy, (1, 2, 0)) + cent) * factor

|

| 155 |

+

return image_numpy.astype(imtype)

|

| 156 |

+

|

| 157 |

+

def im2tensor(image, imtype=np.uint8, cent=1., factor=255./2.):

|

| 158 |

+

# def im2tensor(image, imtype=np.uint8, cent=1., factor=1.):

|

| 159 |

+

return torch.Tensor((image / factor - cent)

|

| 160 |

+

[:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

|

losses/lpips/base_model.py

ADDED

|

@@ -0,0 +1,58 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import numpy as np

|

| 3 |

+

import torch

|

| 4 |

+

from torch.autograd import Variable

|

| 5 |

+

from pdb import set_trace as st

|

| 6 |

+

from IPython import embed

|

| 7 |

+

|

| 8 |

+

class BaseModel():

|

| 9 |

+

def __init__(self):

|

| 10 |

+

pass;

|

| 11 |

+

|

| 12 |

+

def name(self):

|

| 13 |

+

return 'BaseModel'

|

| 14 |

+

|

| 15 |

+

def initialize(self, use_gpu=True, gpu_ids=[0]):

|

| 16 |

+

self.use_gpu = use_gpu

|

| 17 |

+

self.gpu_ids = gpu_ids

|

| 18 |

+

|

| 19 |

+

def forward(self):

|

| 20 |

+

pass

|

| 21 |

+

|

| 22 |

+

def get_image_paths(self):

|

| 23 |

+

pass

|

| 24 |

+

|

| 25 |

+

def optimize_parameters(self):

|

| 26 |

+

pass

|

| 27 |

+

|

| 28 |

+

def get_current_visuals(self):

|

| 29 |

+

return self.input

|

| 30 |

+

|

| 31 |

+

def get_current_errors(self):

|

| 32 |

+

return {}

|

| 33 |

+

|

| 34 |

+

def save(self, label):

|

| 35 |

+

pass

|

| 36 |

+

|

| 37 |

+

# helper saving function that can be used by subclasses

|

| 38 |

+

def save_network(self, network, path, network_label, epoch_label):

|

| 39 |

+

save_filename = '%s_net_%s.pth' % (epoch_label, network_label)

|

| 40 |

+

save_path = os.path.join(path, save_filename)

|

| 41 |

+

torch.save(network.state_dict(), save_path)

|

| 42 |

+

|

| 43 |

+

# helper loading function that can be used by subclasses

|

| 44 |

+

def load_network(self, network, network_label, epoch_label):

|

| 45 |

+

save_filename = '%s_net_%s.pth' % (epoch_label, network_label)

|

| 46 |

+

save_path = os.path.join(self.save_dir, save_filename)

|

| 47 |

+

print('Loading network from %s'%save_path)

|

| 48 |

+

network.load_state_dict(torch.load(save_path))

|

| 49 |

+

|

| 50 |

+

def update_learning_rate():

|

| 51 |

+

pass

|

| 52 |

+

|

| 53 |

+

def get_image_paths(self):

|

| 54 |

+

return self.image_paths

|

| 55 |

+

|

| 56 |

+

def save_done(self, flag=False):

|

| 57 |

+

np.save(os.path.join(self.save_dir, 'done_flag'),flag)

|

| 58 |

+

np.savetxt(os.path.join(self.save_dir, 'done_flag'),[flag,],fmt='%i')

|

losses/lpips/dist_model.py

ADDED

|

@@ -0,0 +1,284 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

from __future__ import absolute_import

|

| 3 |

+

|

| 4 |

+

import sys

|

| 5 |

+

import numpy as np

|

| 6 |

+

import torch

|

| 7 |

+

from torch import nn

|

| 8 |

+

import os

|

| 9 |

+

from collections import OrderedDict

|

| 10 |

+

from torch.autograd import Variable

|

| 11 |

+

import itertools

|

| 12 |

+

from .base_model import BaseModel

|

| 13 |

+

from scipy.ndimage import zoom

|

| 14 |

+

import fractions

|

| 15 |

+

import functools

|

| 16 |

+

import skimage.transform

|

| 17 |

+

from tqdm import tqdm

|

| 18 |

+

|

| 19 |

+

from IPython import embed

|

| 20 |

+

|

| 21 |

+

from . import networks_basic as networks

|

| 22 |

+

from losses import lpips as util

|

| 23 |

+

|

| 24 |

+

class DistModel(BaseModel):

|

| 25 |

+

def name(self):

|

| 26 |

+

return self.model_name

|

| 27 |

+

|

| 28 |

+

def initialize(self, model='net-lin', net='alex', colorspace='Lab', pnet_rand=False, pnet_tune=False, model_path=None,

|

| 29 |

+

use_gpu=True, printNet=False, spatial=False,

|

| 30 |

+

is_train=False, lr=.0001, beta1=0.5, version='0.1', gpu_ids=[0]):

|

| 31 |

+

'''

|

| 32 |

+

INPUTS

|

| 33 |

+

model - ['net-lin'] for linearly calibrated network

|

| 34 |

+

['net'] for off-the-shelf network

|

| 35 |

+

['L2'] for L2 distance in Lab colorspace

|

| 36 |

+

['SSIM'] for ssim in RGB colorspace

|

| 37 |

+

net - ['squeeze','alex','vgg']

|

| 38 |

+

model_path - if None, will look in weights/[NET_NAME].pth

|

| 39 |

+

colorspace - ['Lab','RGB'] colorspace to use for L2 and SSIM

|

| 40 |

+

use_gpu - bool - whether or not to use a GPU

|

| 41 |

+

printNet - bool - whether or not to print network architecture out

|

| 42 |

+

spatial - bool - whether to output an array containing varying distances across spatial dimensions

|

| 43 |

+

spatial_shape - if given, output spatial shape. if None then spatial shape is determined automatically via spatial_factor (see below).

|

| 44 |

+

spatial_factor - if given, specifies upsampling factor relative to the largest spatial extent of a convolutional layer. if None then resized to size of input images.

|

| 45 |

+

spatial_order - spline order of filter for upsampling in spatial mode, by default 1 (bilinear).

|

| 46 |

+

is_train - bool - [True] for training mode

|

| 47 |

+

lr - float - initial learning rate

|

| 48 |

+

beta1 - float - initial momentum term for adam

|

| 49 |

+

version - 0.1 for latest, 0.0 was original (with a bug)

|

| 50 |

+

gpu_ids - int array - [0] by default, gpus to use

|

| 51 |

+

'''

|

| 52 |

+

BaseModel.initialize(self, use_gpu=use_gpu, gpu_ids=gpu_ids)

|

| 53 |

+

|

| 54 |

+

self.model = model

|

| 55 |

+

self.net = net

|

| 56 |

+

self.is_train = is_train

|

| 57 |

+

self.spatial = spatial

|

| 58 |

+

self.gpu_ids = gpu_ids

|

| 59 |

+

self.model_name = '%s [%s]'%(model,net)

|

| 60 |

+

|

| 61 |

+

if(self.model == 'net-lin'): # pretrained net + linear layer

|

| 62 |

+

self.net = networks.PNetLin(pnet_rand=pnet_rand, pnet_tune=pnet_tune, pnet_type=net,

|

| 63 |

+

use_dropout=True, spatial=spatial, version=version, lpips=True)

|

| 64 |

+

kw = {}

|

| 65 |

+

if not use_gpu:

|

| 66 |

+

kw['map_location'] = 'cpu'

|

| 67 |

+

if(model_path is None):

|

| 68 |

+

import inspect

|

| 69 |

+

model_path = os.path.abspath(os.path.join(inspect.getfile(self.initialize), '..', 'weights/v%s/%s.pth'%(version,net)))

|

| 70 |

+

|

| 71 |

+

if(not is_train):

|

| 72 |

+

print('Loading model from: %s'%model_path)

|

| 73 |

+

self.net.load_state_dict(torch.load(model_path, **kw), strict=False)

|

| 74 |

+

|

| 75 |

+

elif(self.model=='net'): # pretrained network

|

| 76 |

+

self.net = networks.PNetLin(pnet_rand=pnet_rand, pnet_type=net, lpips=False)

|

| 77 |

+

elif(self.model in ['L2','l2']):

|

| 78 |

+

self.net = networks.L2(use_gpu=use_gpu,colorspace=colorspace) # not really a network, only for testing

|

| 79 |

+

self.model_name = 'L2'

|

| 80 |

+

elif(self.model in ['DSSIM','dssim','SSIM','ssim']):

|

| 81 |

+

self.net = networks.DSSIM(use_gpu=use_gpu,colorspace=colorspace)

|

| 82 |

+

self.model_name = 'SSIM'

|

| 83 |

+

else:

|

| 84 |

+

raise ValueError("Model [%s] not recognized." % self.model)

|

| 85 |

+

|

| 86 |

+

self.parameters = list(self.net.parameters())

|

| 87 |

+

|

| 88 |

+

if self.is_train: # training mode

|

| 89 |

+

# extra network on top to go from distances (d0,d1) => predicted human judgment (h*)

|

| 90 |

+

self.rankLoss = networks.BCERankingLoss()

|

| 91 |

+

self.parameters += list(self.rankLoss.net.parameters())

|

| 92 |

+

self.lr = lr

|

| 93 |

+

self.old_lr = lr

|

| 94 |

+

self.optimizer_net = torch.optim.Adam(self.parameters, lr=lr, betas=(beta1, 0.999))

|

| 95 |

+

else: # test mode

|

| 96 |

+

self.net.eval()

|

| 97 |

+

|

| 98 |

+

if(use_gpu):

|

| 99 |

+

self.net.to(gpu_ids[0])

|

| 100 |

+

self.net = torch.nn.DataParallel(self.net, device_ids=gpu_ids)

|

| 101 |

+

if(self.is_train):

|

| 102 |

+

self.rankLoss = self.rankLoss.to(device=gpu_ids[0]) # just put this on GPU0

|

| 103 |

+

|

| 104 |

+

if(printNet):

|

| 105 |

+

print('---------- Networks initialized -------------')

|

| 106 |

+

networks.print_network(self.net)

|

| 107 |

+

print('-----------------------------------------------')

|

| 108 |

+

|

| 109 |

+

def forward(self, in0, in1, retPerLayer=False):

|

| 110 |

+

''' Function computes the distance between image patches in0 and in1

|

| 111 |

+

INPUTS

|

| 112 |

+

in0, in1 - torch.Tensor object of shape Nx3xXxY - image patch scaled to [-1,1]

|

| 113 |

+

OUTPUT

|

| 114 |

+

computed distances between in0 and in1

|

| 115 |

+

'''

|

| 116 |

+

|

| 117 |

+

return self.net.forward(in0, in1, retPerLayer=retPerLayer)

|

| 118 |

+

|

| 119 |

+

# ***** TRAINING FUNCTIONS *****

|

| 120 |

+

def optimize_parameters(self):

|

| 121 |

+

self.forward_train()

|

| 122 |

+

self.optimizer_net.zero_grad()

|

| 123 |

+

self.backward_train()

|

| 124 |

+

self.optimizer_net.step()

|

| 125 |

+

self.clamp_weights()

|

| 126 |

+

|

| 127 |

+

def clamp_weights(self):

|

| 128 |

+

for module in self.net.modules():

|

| 129 |

+

if(hasattr(module, 'weight') and module.kernel_size==(1,1)):

|

| 130 |

+

module.weight.data = torch.clamp(module.weight.data,min=0)

|

| 131 |

+

|

| 132 |

+

def set_input(self, data):

|

| 133 |

+

self.input_ref = data['ref']

|

| 134 |

+

self.input_p0 = data['p0']

|

| 135 |

+

self.input_p1 = data['p1']

|

| 136 |

+

self.input_judge = data['judge']

|

| 137 |

+

|

| 138 |

+

if(self.use_gpu):

|

| 139 |

+

self.input_ref = self.input_ref.to(device=self.gpu_ids[0])

|

| 140 |

+

self.input_p0 = self.input_p0.to(device=self.gpu_ids[0])

|

| 141 |

+

self.input_p1 = self.input_p1.to(device=self.gpu_ids[0])

|