diff --git a/.DS_Store b/.DS_Store

new file mode 100644

index 0000000000000000000000000000000000000000..f7535c13a2ef8123341f1f697fc50dbca8cafbe4

Binary files /dev/null and b/.DS_Store differ

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 0000000000000000000000000000000000000000..6b1e3dba671318e3576aea1ef913a5ca3180ed96

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,21 @@

+MIT License

+

+Copyright (c) 2024 AIRI - Artificial Intelligence Research Institute

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/README.md b/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..b92342ea91e82d3d812b5dba0d47eb1da42b70a0

--- /dev/null

+++ b/README.md

@@ -0,0 +1,169 @@

+# HairFastGAN: Realistic and Robust Hair Transfer with a Fast Encoder-Based Approach

+

+ +

+ +

+ +[](./LICENSE)

+

+

+> Our paper addresses the complex task of transferring a hairstyle from a reference image to an input photo for virtual hair try-on. This task is challenging due to the need to adapt to various photo poses, the sensitivity of hairstyles, and the lack of objective metrics. The current state of the art hairstyle transfer methods use an optimization process for different parts of the approach, making them inexcusably slow. At the same time, faster encoder-based models are of very low quality because they either operate in StyleGAN's W+ space or use other low-dimensional image generators. Additionally, both approaches have a problem with hairstyle transfer when the source pose is very different from the target pose, because they either don't consider the pose at all or deal with it inefficiently. In our paper, we present the HairFast model, which uniquely solves these problems and achieves high resolution, near real-time performance, and superior reconstruction compared to optimization problem-based methods. Our solution includes a new architecture operating in the FS latent space of StyleGAN, an enhanced inpainting approach, and improved encoders for better alignment, color transfer, and a new encoder for post-processing. The effectiveness of our approach is demonstrated on realism metrics after random hairstyle transfer and reconstruction when the original hairstyle is transferred. In the most difficult scenario of transferring both shape and color of a hairstyle from different images, our method performs in less than a second on the Nvidia V100.

+>

+

+

+[](./LICENSE)

+

+

+> Our paper addresses the complex task of transferring a hairstyle from a reference image to an input photo for virtual hair try-on. This task is challenging due to the need to adapt to various photo poses, the sensitivity of hairstyles, and the lack of objective metrics. The current state of the art hairstyle transfer methods use an optimization process for different parts of the approach, making them inexcusably slow. At the same time, faster encoder-based models are of very low quality because they either operate in StyleGAN's W+ space or use other low-dimensional image generators. Additionally, both approaches have a problem with hairstyle transfer when the source pose is very different from the target pose, because they either don't consider the pose at all or deal with it inefficiently. In our paper, we present the HairFast model, which uniquely solves these problems and achieves high resolution, near real-time performance, and superior reconstruction compared to optimization problem-based methods. Our solution includes a new architecture operating in the FS latent space of StyleGAN, an enhanced inpainting approach, and improved encoders for better alignment, color transfer, and a new encoder for post-processing. The effectiveness of our approach is demonstrated on realism metrics after random hairstyle transfer and reconstruction when the original hairstyle is transferred. In the most difficult scenario of transferring both shape and color of a hairstyle from different images, our method performs in less than a second on the Nvidia V100.

+>

+

+

+  +

+

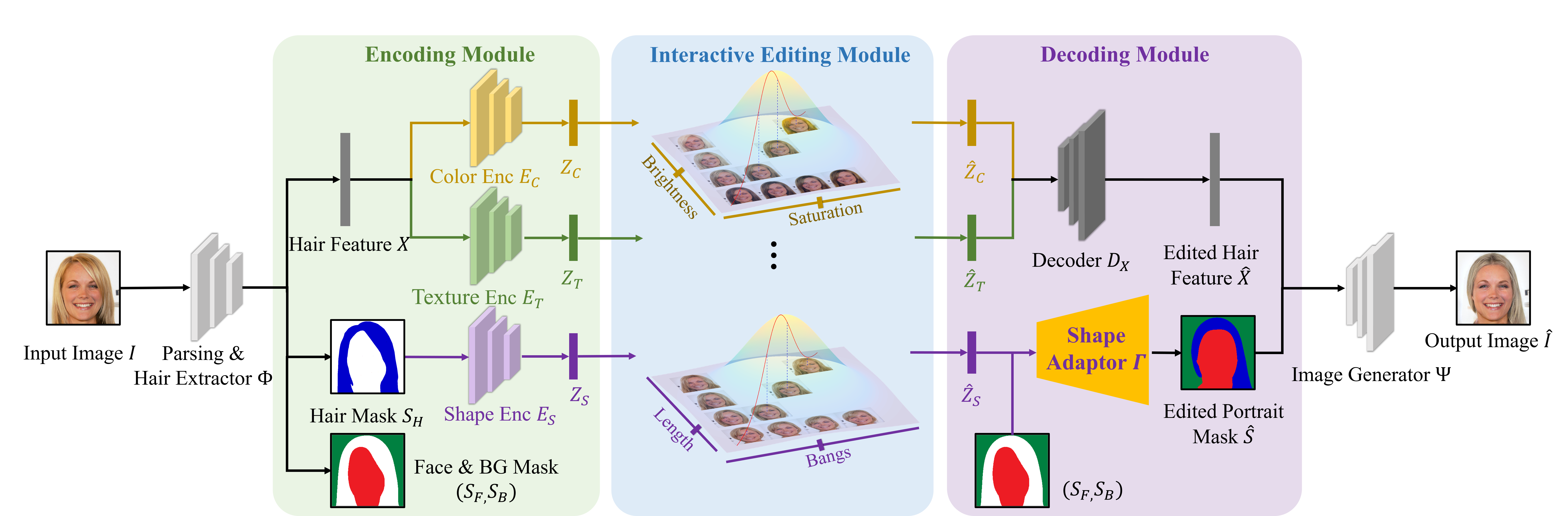

+The proposed HairFast framework allows to edit a hairstyle on an arbitrary photo based on an example from other photos. Here we have an example of how the method works by transferring a hairstyle from one photo and a hair color from another.

+

+

+## Updates

+

+- [25/09/2024] 🎉🎉🎉 HairFastGAN has been accepted by [NeurIPS 2024](https://nips.cc/virtual/2024/poster/93397).

+- [24/05/2024] 🌟🌟🌟 Release of the [official demo](https://huggingface.co/spaces/AIRI-Institute/HairFastGAN) on Hugging Face 🤗.

+- [01/04/2024] 🔥🔥🔥 HairFastGAN release.

+

+## Prerequisites

+You need following hardware and python version to run our method.

+- Linux

+- NVIDIA GPU + CUDA CuDNN

+- Python 3.10

+- PyTorch 1.13.1+

+

+## Installation

+

+* Clone this repo:

+```bash

+git clone https://github.com/AIRI-Institute/HairFastGAN

+cd HairFastGAN

+```

+

+* Download all pretrained models:

+```bash

+git clone https://huggingface.co/AIRI-Institute/HairFastGAN

+cd HairFastGAN && git lfs pull && cd ..

+mv HairFastGAN/pretrained_models pretrained_models

+mv HairFastGAN/input input

+rm -rf HairFastGAN

+```

+

+* Setting the environment

+

+**Option 1 [recommended]**, install [Poetry](https://python-poetry.org/docs/) and then:

+```bash

+poetry install

+```

+

+**Option 2**, just install the dependencies in your environment:

+```bash

+pip install -r requirements.txt

+```

+

+## Inference

+You can use `main.py` to run the method, either for a single run or for a batch of experiments.

+

+* An example of running a single experiment:

+

+```

+python main.py --face_path=6.png --shape_path=7.png --color_path=8.png \

+ --input_dir=input --result_path=output/result.png

+```

+

+* To run the batch version, first create an image triples file (face/shape/color):

+```

+cat > example.txt << EOF

+6.png 7.png 8.png

+8.png 4.jpg 5.jpg

+EOF

+```

+

+And now you can run the method:

+```

+python main.py --file_path=example.txt --input_dir=input --output_dir=output

+```

+

+* You can use HairFast in the code directly:

+

+```python

+from hair_swap import HairFast, get_parser

+

+# Init HairFast

+hair_fast = HairFast(get_parser().parse_args([]))

+

+# Inference

+result = hair_fast(face_img, shape_img, color_img)

+```

+

+See the code for input parameters and output formats.

+

+* Alternatively, you can use our [Colab Notebook](https://colab.research.google.com/#fileId=https://huggingface.co/AIRI-Institute/HairFastGAN/blob/main/notebooks/HairFast_inference.ipynb) to prepare the environment, download the code, pretrained weights, and allow you to run experiments with a convenient form.

+

+* You can also try our method on the [Hugging Face demo](https://huggingface.co/spaces/AIRI-Institute/HairFastGAN) 🤗.

+

+## Scripts

+

+There is a list of scripts below, see arguments via --help for details.

+

+| Path | Description ![]() +|:----------------------------------------| :---

+| scripts/align_face.py | Processing of raw photos for inference

+| scripts/fid_metric.py | Metrics calculation

+| scripts/rotate_gen.py | Dataset generation for rotate encoder training

+| scripts/blending_gen.py | Dataset generation for color encoder training

+| scripts/pp_gen.py | Dataset generation for refinement encoder training

+| scripts/rotate_train.py | Rotate encoder training

+| scripts/blending_train.py | Color encoder training

+| scripts/pp_train.py | Refinement encoder training

+

+

+## Training

+For training, you need to generate a dataset and then run the scripts for training. See the scripts section above.

+

+We use [Weights & Biases](https://wandb.ai/home) to track experiments. Before training, you should put your W&B API key into the `WANDB_KEY` environment variable.

+

+## Method diagram

+

+

+|:----------------------------------------| :---

+| scripts/align_face.py | Processing of raw photos for inference

+| scripts/fid_metric.py | Metrics calculation

+| scripts/rotate_gen.py | Dataset generation for rotate encoder training

+| scripts/blending_gen.py | Dataset generation for color encoder training

+| scripts/pp_gen.py | Dataset generation for refinement encoder training

+| scripts/rotate_train.py | Rotate encoder training

+| scripts/blending_train.py | Color encoder training

+| scripts/pp_train.py | Refinement encoder training

+

+

+## Training

+For training, you need to generate a dataset and then run the scripts for training. See the scripts section above.

+

+We use [Weights & Biases](https://wandb.ai/home) to track experiments. Before training, you should put your W&B API key into the `WANDB_KEY` environment variable.

+

+## Method diagram

+

+

+  +

+

+Overview of HairFast: the images first pass through the Pose alignment module, which generates a pose-aligned face mask with the desired hair shape. Then we transfer the desired hairstyle shape using Shape alignment and the desired hair color using Color alignment. In the last step, Refinement alignment returns the lost details of the original image where they are needed.

+

+

+## Repository structure

+

+ .

+ ├── 📂 datasets # Implementation of torch datasets for inference

+ ├── 📂 docs # Folder with method diagram and teaser

+ ├── 📂 models # Folder containting all the models

+ │ ├── ...

+ │ ├── 📄 Embedding.py # Implementation of Embedding module

+ │ ├── 📄 Alignment.py # Implementation of Pose and Shape alignment modules

+ │ ├── 📄 Blending.py # Implementation of Color and Refinement alignment modules

+ │ ├── 📄 Encoders.py # Implementation of encoder architectures

+ │ └── 📄 Net.py # Implementation of basic models

+ │

+ ├── 📂 losses # Folder containing various loss criterias for training

+ ├── 📂 scripts # Folder with various scripts

+ ├── 📂 utils # Folder with utility functions

+ │

+ ├── 📜 poetry.lock # Records exact dependency versions.

+ ├── 📜 pyproject.toml # Poetry configuration for dependencies.

+ ├── 📜 requirements.txt # Lists required Python packages.

+ ├── 📄 hair_swap.py # Implementation of the HairFast main class

+ └── 📄 main.py # Script for inference

+

+## References & Acknowledgments

+

+The repository was started from [Barbershop](https://github.com/ZPdesu/Barbershop).

+

+The code [CtrlHair](https://github.com/XuyangGuo/CtrlHair), [SEAN](https://github.com/ZPdesu/SEAN), [HairCLIP](https://github.com/wty-ustc/HairCLIP), [FSE](https://github.com/InterDigitalInc/FeatureStyleEncoder), [E4E](https://github.com/omertov/encoder4editing) and [STAR](https://github.com/ZhenglinZhou/STAR) was also used.

+

+## Citation

+

+If you use this code for your research, please cite our paper:

+```

+@article{nikolaev2024hairfastgan,

+ title={HairFastGAN: Realistic and Robust Hair Transfer with a Fast Encoder-Based Approach},

+ author={Nikolaev, Maxim and Kuznetsov, Mikhail and Vetrov, Dmitry and Alanov, Aibek},

+ journal={arXiv preprint arXiv:2404.01094},

+ year={2024}

+}

+```

diff --git a/app.py b/app.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/datasets/__init__.py b/datasets/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/datasets/image_dataset.py b/datasets/image_dataset.py

new file mode 100644

index 0000000000000000000000000000000000000000..4e56368ac37ba10cb1a29b5172699589035fd22d

--- /dev/null

+++ b/datasets/image_dataset.py

@@ -0,0 +1,29 @@

+import torch

+from torch.utils.data import Dataset

+

+

+class ImagesDataset(Dataset):

+ def __init__(self, images: dict[torch.Tensor, list[str]] | list[torch.Tensor]):

+ if isinstance(images, list):

+ images = dict.fromkeys(images)

+

+ self.images = list(images)

+ self.names = list(images.values())

+

+ def __len__(self):

+ return len(self.images)

+

+ def __getitem__(self, index):

+ image = self.images[index]

+

+ if image.dtype is torch.uint8:

+ image = image / 255

+

+ names = self.names[index]

+ return image, names

+

+

+def image_collate(batch):

+ images = torch.stack([item[0] for item in batch])

+ names = [item[1] for item in batch]

+ return images, names

diff --git a/docs/assets/diagram.webp b/docs/assets/diagram.webp

new file mode 100644

index 0000000000000000000000000000000000000000..84275e3a2b7ab57c8b83139d9245194aab07219b

--- /dev/null

+++ b/docs/assets/diagram.webp

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:82e7fce0312bcc7931243fb1d834539bc9d24bbd07a87135d89974987b217882

+size 742106

diff --git a/docs/assets/logo.webp b/docs/assets/logo.webp

new file mode 100644

index 0000000000000000000000000000000000000000..e0c09a628ed9e6d650287c68468d7a044d85e046

--- /dev/null

+++ b/docs/assets/logo.webp

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1

+oid sha256:3262e841eadcdd85ccdb9519e6ac7cf0f486857fc32a5bd59eb363594ad2b66d

+size 426760

diff --git a/hair_swap.py b/hair_swap.py

new file mode 100644

index 0000000000000000000000000000000000000000..18e044919d4ade45f9b6b621756ab0da3b2e57b9

--- /dev/null

+++ b/hair_swap.py

@@ -0,0 +1,139 @@

+import argparse

+import typing as tp

+from collections import defaultdict

+from functools import wraps

+from pathlib import Path

+

+import numpy as np

+import torch

+import torchvision.transforms.functional as F

+from PIL import Image

+from torchvision.io import read_image, ImageReadMode

+

+from models.Alignment import Alignment

+from models.Blending import Blending

+from models.Embedding import Embedding

+from models.Net import Net

+from utils.image_utils import equal_replacer

+from utils.seed import seed_setter

+from utils.shape_predictor import align_face

+from utils.time import bench_session

+

+TImage = tp.TypeVar('TImage', torch.Tensor, Image.Image, np.ndarray)

+TPath = tp.TypeVar('TPath', Path, str)

+TReturn = tp.TypeVar('TReturn', torch.Tensor, tuple[torch.Tensor, ...])

+

+

+class HairFast:

+ """

+ HairFast implementation with hairstyle transfer interface

+ """

+

+ def __init__(self, args):

+ self.args = args

+ self.net = Net(self.args)

+ self.embed = Embedding(args, net=self.net)

+ self.align = Alignment(args, self.embed.get_e4e_embed, net=self.net)

+ self.blend = Blending(args, net=self.net)

+

+ @seed_setter

+ @bench_session

+ def __swap_from_tensors(self, face: torch.Tensor, shape: torch.Tensor, color: torch.Tensor,

+ **kwargs) -> torch.Tensor:

+ images_to_name = defaultdict(list)

+ for image, name in zip((face, shape, color), ('face', 'shape', 'color')):

+ images_to_name[image].append(name)

+

+ # Embedding stage

+ name_to_embed = self.embed.embedding_images(images_to_name, **kwargs)

+

+ # Alignment stage

+ align_shape = self.align.align_images('face', 'shape', name_to_embed, **kwargs)

+

+ # Shape Module stage for blending

+ if shape is not color:

+ align_color = self.align.shape_module('face', 'color', name_to_embed, **kwargs)

+ else:

+ align_color = align_shape

+

+ # Blending and Post Process stage

+ final_image = self.blend.blend_images(align_shape, align_color, name_to_embed, **kwargs)

+ return final_image

+

+ def swap(self, face_img: TImage | TPath, shape_img: TImage | TPath, color_img: TImage | TPath,

+ benchmark=False, align=False, seed=None, exp_name=None, **kwargs) -> TReturn:

+ """

+ Run HairFast on the input images to transfer hair shape and color to the desired images.

+ :param face_img: face image in Tensor, PIL Image, array or file path format

+ :param shape_img: shape image in Tensor, PIL Image, array or file path format

+ :param color_img: color image in Tensor, PIL Image, array or file path format

+ :param benchmark: starts counting the speed of the session

+ :param align: for arbitrary photos crops images to faces

+ :param seed: fixes seed for reproducibility, default 3407

+ :param exp_name: used as a folder name when 'save_all' model is enabled

+ :return: returns the final image as a Tensor

+ """

+ images: list[torch.Tensor] = []

+ path_to_images: dict[TPath, torch.Tensor] = {}

+

+ for img in (face_img, shape_img, color_img):

+ if isinstance(img, (torch.Tensor, Image.Image, np.ndarray)):

+ if not isinstance(img, torch.Tensor):

+ img = F.to_tensor(img)

+ elif isinstance(img, (Path, str)):

+ path_img = img

+ if path_img not in path_to_images:

+ path_to_images[path_img] = read_image(str(path_img), mode=ImageReadMode.RGB)

+ img = path_to_images[path_img]

+ else:

+ raise TypeError(f'Unsupported image format {type(img)}')

+

+ images.append(img)

+

+ if align:

+ images = align_face(images)

+ images = equal_replacer(images)

+

+ final_image = self.__swap_from_tensors(*images, seed=seed, benchmark=benchmark, exp_name=exp_name, **kwargs)

+

+ if align:

+ return final_image, *images

+ return final_image

+

+ @wraps(swap)

+ def __call__(self, *args, **kwargs):

+ return self.swap(*args, **kwargs)

+

+

+def get_parser():

+ parser = argparse.ArgumentParser(description='HairFast')

+

+ # I/O arguments

+ parser.add_argument('--save_all_dir', type=Path, default=Path('output'),

+ help='the directory to save the latent codes and inversion images')

+

+ # StyleGAN2 setting

+ parser.add_argument('--size', type=int, default=1024)

+ parser.add_argument('--ckpt', type=str, default="pretrained_models/StyleGAN/ffhq.pt")

+ parser.add_argument('--channel_multiplier', type=int, default=2)

+ parser.add_argument('--latent', type=int, default=512)

+ parser.add_argument('--n_mlp', type=int, default=8)

+

+ # Arguments

+ parser.add_argument('--device', type=str, default='cuda')

+ parser.add_argument('--batch_size', type=int, default=3, help='batch size for encoding images')

+ parser.add_argument('--save_all', action='store_true', help='save and print mode information')

+

+ # HairFast setting

+ parser.add_argument('--mixing', type=float, default=0.95, help='hair blending in alignment')

+ parser.add_argument('--smooth', type=int, default=5, help='dilation and erosion parameter')

+ parser.add_argument('--rotate_checkpoint', type=str, default='pretrained_models/Rotate/rotate_best.pth')

+ parser.add_argument('--blending_checkpoint', type=str, default='pretrained_models/Blending/checkpoint.pth')

+ parser.add_argument('--pp_checkpoint', type=str, default='pretrained_models/PostProcess/pp_model.pth')

+ return parser

+

+

+if __name__ == '__main__':

+ model_args = get_parser()

+ args = model_args.parse_args()

+ hair_fast = HairFast(args)

diff --git a/inference_server.py b/inference_server.py

new file mode 100644

index 0000000000000000000000000000000000000000..0f35d3c0d64915d9cd8f8a3db3322610192d4d5b

--- /dev/null

+++ b/inference_server.py

@@ -0,0 +1,5 @@

+if __name__ == "__main__":

+ server = grpc.server(...)

+ ...

+ server.start()

+ server.wait_for_termination()

diff --git a/losses/.DS_Store b/losses/.DS_Store

new file mode 100644

index 0000000000000000000000000000000000000000..924c6ff93402ee0e429fcc6d9e7f887b7cfee310

Binary files /dev/null and b/losses/.DS_Store differ

diff --git a/losses/__init__.py b/losses/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

diff --git a/losses/lpips/__init__.py b/losses/lpips/__init__.py

new file mode 100644

index 0000000000000000000000000000000000000000..2dd73e7b248bd9bbc814df6210f14f95fc5045ae

--- /dev/null

+++ b/losses/lpips/__init__.py

@@ -0,0 +1,160 @@

+

+from __future__ import absolute_import

+from __future__ import division

+from __future__ import print_function

+

+import numpy as np

+from skimage.metrics import structural_similarity

+import torch

+from torch.autograd import Variable

+

+from ..lpips import dist_model

+

+class PerceptualLoss(torch.nn.Module):

+ def __init__(self, model='net-lin', net='alex', colorspace='rgb', spatial=False, use_gpu=True, gpu_ids=[0]): # VGG using our perceptually-learned weights (LPIPS metric)

+ # def __init__(self, model='net', net='vgg', use_gpu=True): # "default" way of using VGG as a perceptual loss

+ super(PerceptualLoss, self).__init__()

+ print('Setting up Perceptual loss...')

+ self.use_gpu = use_gpu

+ self.spatial = spatial

+ self.gpu_ids = gpu_ids

+ self.model = dist_model.DistModel()

+ self.model.initialize(model=model, net=net, use_gpu=use_gpu, colorspace=colorspace, spatial=self.spatial, gpu_ids=gpu_ids)

+ print('...[%s] initialized'%self.model.name())

+ print('...Done')

+

+ def forward(self, pred, target, normalize=False):

+ """

+ Pred and target are Variables.

+ If normalize is True, assumes the images are between [0,1] and then scales them between [-1,+1]

+ If normalize is False, assumes the images are already between [-1,+1]

+

+ Inputs pred and target are Nx3xHxW

+ Output pytorch Variable N long

+ """

+

+ if normalize:

+ target = 2 * target - 1

+ pred = 2 * pred - 1

+

+ return self.model.forward(target, pred)

+

+def normalize_tensor(in_feat,eps=1e-10):

+ norm_factor = torch.sqrt(torch.sum(in_feat**2,dim=1,keepdim=True))

+ return in_feat/(norm_factor+eps)

+

+def l2(p0, p1, range=255.):

+ return .5*np.mean((p0 / range - p1 / range)**2)

+

+def psnr(p0, p1, peak=255.):

+ return 10*np.log10(peak**2/np.mean((1.*p0-1.*p1)**2))

+

+def dssim(p0, p1, range=255.):

+ return (1 - structural_similarity(p0, p1, data_range=range, multichannel=True)) / 2.

+

+def rgb2lab(in_img,mean_cent=False):

+ from skimage import color

+ img_lab = color.rgb2lab(in_img)

+ if(mean_cent):

+ img_lab[:,:,0] = img_lab[:,:,0]-50

+ return img_lab

+

+def tensor2np(tensor_obj):

+ # change dimension of a tensor object into a numpy array

+ return tensor_obj[0].cpu().float().numpy().transpose((1,2,0))

+

+def np2tensor(np_obj):

+ # change dimenion of np array into tensor array

+ return torch.Tensor(np_obj[:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

+

+def tensor2tensorlab(image_tensor,to_norm=True,mc_only=False):

+ # image tensor to lab tensor

+ from skimage import color

+

+ img = tensor2im(image_tensor)

+ img_lab = color.rgb2lab(img)

+ if(mc_only):

+ img_lab[:,:,0] = img_lab[:,:,0]-50

+ if(to_norm and not mc_only):

+ img_lab[:,:,0] = img_lab[:,:,0]-50

+ img_lab = img_lab/100.

+

+ return np2tensor(img_lab)

+

+def tensorlab2tensor(lab_tensor,return_inbnd=False):

+ from skimage import color

+ import warnings

+ warnings.filterwarnings("ignore")

+

+ lab = tensor2np(lab_tensor)*100.

+ lab[:,:,0] = lab[:,:,0]+50

+

+ rgb_back = 255.*np.clip(color.lab2rgb(lab.astype('float')),0,1)

+ if(return_inbnd):

+ # convert back to lab, see if we match

+ lab_back = color.rgb2lab(rgb_back.astype('uint8'))

+ mask = 1.*np.isclose(lab_back,lab,atol=2.)

+ mask = np2tensor(np.prod(mask,axis=2)[:,:,np.newaxis])

+ return (im2tensor(rgb_back),mask)

+ else:

+ return im2tensor(rgb_back)

+

+def rgb2lab(input):

+ from skimage import color

+ return color.rgb2lab(input / 255.)

+

+def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=255./2.):

+ image_numpy = image_tensor[0].cpu().float().numpy()

+ image_numpy = (np.transpose(image_numpy, (1, 2, 0)) + cent) * factor

+ return image_numpy.astype(imtype)

+

+def im2tensor(image, imtype=np.uint8, cent=1., factor=255./2.):

+ return torch.Tensor((image / factor - cent)

+ [:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

+

+def tensor2vec(vector_tensor):

+ return vector_tensor.data.cpu().numpy()[:, :, 0, 0]

+

+def voc_ap(rec, prec, use_07_metric=False):

+ """ ap = voc_ap(rec, prec, [use_07_metric])

+ Compute VOC AP given precision and recall.

+ If use_07_metric is true, uses the

+ VOC 07 11 point method (default:False).

+ """

+ if use_07_metric:

+ # 11 point metric

+ ap = 0.

+ for t in np.arange(0., 1.1, 0.1):

+ if np.sum(rec >= t) == 0:

+ p = 0

+ else:

+ p = np.max(prec[rec >= t])

+ ap = ap + p / 11.

+ else:

+ # correct AP calculation

+ # first append sentinel values at the end

+ mrec = np.concatenate(([0.], rec, [1.]))

+ mpre = np.concatenate(([0.], prec, [0.]))

+

+ # compute the precision envelope

+ for i in range(mpre.size - 1, 0, -1):

+ mpre[i - 1] = np.maximum(mpre[i - 1], mpre[i])

+

+ # to calculate area under PR curve, look for points

+ # where X axis (recall) changes value

+ i = np.where(mrec[1:] != mrec[:-1])[0]

+

+ # and sum (\Delta recall) * prec

+ ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])

+ return ap

+

+def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=255./2.):

+# def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=1.):

+ image_numpy = image_tensor[0].cpu().float().numpy()

+ image_numpy = (np.transpose(image_numpy, (1, 2, 0)) + cent) * factor

+ return image_numpy.astype(imtype)

+

+def im2tensor(image, imtype=np.uint8, cent=1., factor=255./2.):

+# def im2tensor(image, imtype=np.uint8, cent=1., factor=1.):

+ return torch.Tensor((image / factor - cent)

+ [:, :, :, np.newaxis].transpose((3, 2, 0, 1)))

diff --git a/losses/lpips/base_model.py b/losses/lpips/base_model.py

new file mode 100644

index 0000000000000000000000000000000000000000..8de1d16f0c7fa52d8067139abc6e769e96d0a6a1

--- /dev/null

+++ b/losses/lpips/base_model.py

@@ -0,0 +1,58 @@

+import os

+import numpy as np

+import torch

+from torch.autograd import Variable

+from pdb import set_trace as st

+from IPython import embed

+

+class BaseModel():

+ def __init__(self):

+ pass;

+

+ def name(self):

+ return 'BaseModel'

+

+ def initialize(self, use_gpu=True, gpu_ids=[0]):

+ self.use_gpu = use_gpu

+ self.gpu_ids = gpu_ids

+

+ def forward(self):

+ pass

+

+ def get_image_paths(self):

+ pass

+

+ def optimize_parameters(self):

+ pass

+

+ def get_current_visuals(self):

+ return self.input

+

+ def get_current_errors(self):

+ return {}

+

+ def save(self, label):

+ pass

+

+ # helper saving function that can be used by subclasses

+ def save_network(self, network, path, network_label, epoch_label):

+ save_filename = '%s_net_%s.pth' % (epoch_label, network_label)

+ save_path = os.path.join(path, save_filename)

+ torch.save(network.state_dict(), save_path)

+

+ # helper loading function that can be used by subclasses

+ def load_network(self, network, network_label, epoch_label):

+ save_filename = '%s_net_%s.pth' % (epoch_label, network_label)

+ save_path = os.path.join(self.save_dir, save_filename)

+ print('Loading network from %s'%save_path)

+ network.load_state_dict(torch.load(save_path))

+

+ def update_learning_rate():

+ pass

+

+ def get_image_paths(self):

+ return self.image_paths

+

+ def save_done(self, flag=False):

+ np.save(os.path.join(self.save_dir, 'done_flag'),flag)

+ np.savetxt(os.path.join(self.save_dir, 'done_flag'),[flag,],fmt='%i')

diff --git a/losses/lpips/dist_model.py b/losses/lpips/dist_model.py

new file mode 100644

index 0000000000000000000000000000000000000000..6c69380084f412a7f8d3d4e64466d251c4e6f19e

--- /dev/null

+++ b/losses/lpips/dist_model.py

@@ -0,0 +1,284 @@

+

+from __future__ import absolute_import

+

+import sys

+import numpy as np

+import torch

+from torch import nn

+import os

+from collections import OrderedDict

+from torch.autograd import Variable

+import itertools

+from .base_model import BaseModel

+from scipy.ndimage import zoom

+import fractions

+import functools

+import skimage.transform

+from tqdm import tqdm

+

+from IPython import embed

+

+from . import networks_basic as networks

+from losses import lpips as util

+

+class DistModel(BaseModel):

+ def name(self):

+ return self.model_name

+

+ def initialize(self, model='net-lin', net='alex', colorspace='Lab', pnet_rand=False, pnet_tune=False, model_path=None,

+ use_gpu=True, printNet=False, spatial=False,

+ is_train=False, lr=.0001, beta1=0.5, version='0.1', gpu_ids=[0]):

+ '''

+ INPUTS

+ model - ['net-lin'] for linearly calibrated network

+ ['net'] for off-the-shelf network

+ ['L2'] for L2 distance in Lab colorspace

+ ['SSIM'] for ssim in RGB colorspace

+ net - ['squeeze','alex','vgg']

+ model_path - if None, will look in weights/[NET_NAME].pth

+ colorspace - ['Lab','RGB'] colorspace to use for L2 and SSIM

+ use_gpu - bool - whether or not to use a GPU

+ printNet - bool - whether or not to print network architecture out

+ spatial - bool - whether to output an array containing varying distances across spatial dimensions

+ spatial_shape - if given, output spatial shape. if None then spatial shape is determined automatically via spatial_factor (see below).

+ spatial_factor - if given, specifies upsampling factor relative to the largest spatial extent of a convolutional layer. if None then resized to size of input images.

+ spatial_order - spline order of filter for upsampling in spatial mode, by default 1 (bilinear).

+ is_train - bool - [True] for training mode

+ lr - float - initial learning rate

+ beta1 - float - initial momentum term for adam

+ version - 0.1 for latest, 0.0 was original (with a bug)

+ gpu_ids - int array - [0] by default, gpus to use

+ '''

+ BaseModel.initialize(self, use_gpu=use_gpu, gpu_ids=gpu_ids)

+

+ self.model = model

+ self.net = net

+ self.is_train = is_train

+ self.spatial = spatial

+ self.gpu_ids = gpu_ids

+ self.model_name = '%s [%s]'%(model,net)

+

+ if(self.model == 'net-lin'): # pretrained net + linear layer

+ self.net = networks.PNetLin(pnet_rand=pnet_rand, pnet_tune=pnet_tune, pnet_type=net,

+ use_dropout=True, spatial=spatial, version=version, lpips=True)

+ kw = {}

+ if not use_gpu:

+ kw['map_location'] = 'cpu'

+ if(model_path is None):

+ import inspect

+ model_path = os.path.abspath(os.path.join(inspect.getfile(self.initialize), '..', 'weights/v%s/%s.pth'%(version,net)))

+

+ if(not is_train):

+ print('Loading model from: %s'%model_path)

+ self.net.load_state_dict(torch.load(model_path, **kw), strict=False)

+

+ elif(self.model=='net'): # pretrained network

+ self.net = networks.PNetLin(pnet_rand=pnet_rand, pnet_type=net, lpips=False)

+ elif(self.model in ['L2','l2']):

+ self.net = networks.L2(use_gpu=use_gpu,colorspace=colorspace) # not really a network, only for testing

+ self.model_name = 'L2'

+ elif(self.model in ['DSSIM','dssim','SSIM','ssim']):

+ self.net = networks.DSSIM(use_gpu=use_gpu,colorspace=colorspace)

+ self.model_name = 'SSIM'

+ else:

+ raise ValueError("Model [%s] not recognized." % self.model)

+

+ self.parameters = list(self.net.parameters())

+

+ if self.is_train: # training mode

+ # extra network on top to go from distances (d0,d1) => predicted human judgment (h*)

+ self.rankLoss = networks.BCERankingLoss()

+ self.parameters += list(self.rankLoss.net.parameters())

+ self.lr = lr

+ self.old_lr = lr

+ self.optimizer_net = torch.optim.Adam(self.parameters, lr=lr, betas=(beta1, 0.999))

+ else: # test mode

+ self.net.eval()

+

+ if(use_gpu):

+ self.net.to(gpu_ids[0])

+ self.net = torch.nn.DataParallel(self.net, device_ids=gpu_ids)

+ if(self.is_train):

+ self.rankLoss = self.rankLoss.to(device=gpu_ids[0]) # just put this on GPU0

+

+ if(printNet):

+ print('---------- Networks initialized -------------')

+ networks.print_network(self.net)

+ print('-----------------------------------------------')

+

+ def forward(self, in0, in1, retPerLayer=False):

+ ''' Function computes the distance between image patches in0 and in1

+ INPUTS

+ in0, in1 - torch.Tensor object of shape Nx3xXxY - image patch scaled to [-1,1]

+ OUTPUT

+ computed distances between in0 and in1

+ '''

+

+ return self.net.forward(in0, in1, retPerLayer=retPerLayer)

+

+ # ***** TRAINING FUNCTIONS *****

+ def optimize_parameters(self):

+ self.forward_train()

+ self.optimizer_net.zero_grad()

+ self.backward_train()

+ self.optimizer_net.step()

+ self.clamp_weights()

+

+ def clamp_weights(self):

+ for module in self.net.modules():

+ if(hasattr(module, 'weight') and module.kernel_size==(1,1)):

+ module.weight.data = torch.clamp(module.weight.data,min=0)

+

+ def set_input(self, data):

+ self.input_ref = data['ref']

+ self.input_p0 = data['p0']

+ self.input_p1 = data['p1']

+ self.input_judge = data['judge']

+

+ if(self.use_gpu):

+ self.input_ref = self.input_ref.to(device=self.gpu_ids[0])

+ self.input_p0 = self.input_p0.to(device=self.gpu_ids[0])

+ self.input_p1 = self.input_p1.to(device=self.gpu_ids[0])

+ self.input_judge = self.input_judge.to(device=self.gpu_ids[0])

+

+ self.var_ref = Variable(self.input_ref,requires_grad=True)

+ self.var_p0 = Variable(self.input_p0,requires_grad=True)

+ self.var_p1 = Variable(self.input_p1,requires_grad=True)

+

+ def forward_train(self): # run forward pass

+ # print(self.net.module.scaling_layer.shift)

+ # print(torch.norm(self.net.module.net.slice1[0].weight).item(), torch.norm(self.net.module.lin0.model[1].weight).item())

+

+ self.d0 = self.forward(self.var_ref, self.var_p0)

+ self.d1 = self.forward(self.var_ref, self.var_p1)

+ self.acc_r = self.compute_accuracy(self.d0,self.d1,self.input_judge)

+

+ self.var_judge = Variable(1.*self.input_judge).view(self.d0.size())

+

+ self.loss_total = self.rankLoss.forward(self.d0, self.d1, self.var_judge*2.-1.)

+

+ return self.loss_total

+

+ def backward_train(self):

+ torch.mean(self.loss_total).backward()

+

+ def compute_accuracy(self,d0,d1,judge):

+ ''' d0, d1 are Variables, judge is a Tensor '''

+ d1_lt_d0 = (d1 %f' % (type,self.old_lr, lr))

+ self.old_lr = lr

+

+def score_2afc_dataset(data_loader, func, name=''):

+ ''' Function computes Two Alternative Forced Choice (2AFC) score using

+ distance function 'func' in dataset 'data_loader'

+ INPUTS

+ data_loader - CustomDatasetDataLoader object - contains a TwoAFCDataset inside

+ func - callable distance function - calling d=func(in0,in1) should take 2

+ pytorch tensors with shape Nx3xXxY, and return numpy array of length N

+ OUTPUTS

+ [0] - 2AFC score in [0,1], fraction of time func agrees with human evaluators

+ [1] - dictionary with following elements

+ d0s,d1s - N arrays containing distances between reference patch to perturbed patches

+ gts - N array in [0,1], preferred patch selected by human evaluators

+ (closer to "0" for left patch p0, "1" for right patch p1,

+ "0.6" means 60pct people preferred right patch, 40pct preferred left)

+ scores - N array in [0,1], corresponding to what percentage function agreed with humans

+ CONSTS

+ N - number of test triplets in data_loader

+ '''

+

+ d0s = []

+ d1s = []

+ gts = []

+

+ for data in tqdm(data_loader.load_data(), desc=name):

+ d0s+=func(data['ref'],data['p0']).data.cpu().numpy().flatten().tolist()

+ d1s+=func(data['ref'],data['p1']).data.cpu().numpy().flatten().tolist()

+ gts+=data['judge'].cpu().numpy().flatten().tolist()

+

+ d0s = np.array(d0s)

+ d1s = np.array(d1s)

+ gts = np.array(gts)

+ scores = (d0s= t) == 0:

+ p = 0

+ else:

+ p = np.max(prec[rec >= t])

+ ap = ap + p / 11.0

+ else:

+ # correct AP calculation

+ # first append sentinel values at the end

+ mrec = np.concatenate(([0.0], rec, [1.0]))

+ mpre = np.concatenate(([0.0], prec, [0.0]))

+

+ # compute the precision envelope

+ for i in range(mpre.size - 1, 0, -1):

+ mpre[i - 1] = np.maximum(mpre[i - 1], mpre[i])

+

+ # to calculate area under PR curve, look for points

+ # where X axis (recall) changes value

+ i = np.where(mrec[1:] != mrec[:-1])[0]

+

+ # and sum (\Delta recall) * prec

+ ap = np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])

+ return ap

+

+

+def tensor2im(image_tensor, imtype=np.uint8, cent=1.0, factor=255.0 / 2.0):

+ # def tensor2im(image_tensor, imtype=np.uint8, cent=1., factor=1.):

+ image_numpy = image_tensor[0].cpu().float().numpy()

+ image_numpy = (np.transpose(image_numpy, (1, 2, 0)) + cent) * factor

+ return image_numpy.astype(imtype)

+

+

+def im2tensor(image, imtype=np.uint8, cent=1.0, factor=255.0 / 2.0):

+ # def im2tensor(image, imtype=np.uint8, cent=1., factor=1.):

+ return torch.Tensor(

+ (image / factor - cent)[:, :, :, np.newaxis].transpose((3, 2, 0, 1))

+ )

diff --git a/losses/masked_lpips/base_model.py b/losses/masked_lpips/base_model.py

new file mode 100644

index 0000000000000000000000000000000000000000..20b3b3458bd4a6751708e3ec501f1a59c619a1c4

--- /dev/null

+++ b/losses/masked_lpips/base_model.py

@@ -0,0 +1,65 @@

+import os

+import numpy as np

+import torch

+from torch.autograd import Variable

+from pdb import set_trace as st

+from IPython import embed

+

+

+class BaseModel:

+ def __init__(self):

+ pass

+

+ def name(self):

+ return "BaseModel"

+

+ def initialize(self, use_gpu=True, gpu_ids=[0]):

+ self.use_gpu = use_gpu

+ self.gpu_ids = gpu_ids

+

+ def forward(self):

+ pass

+

+ def get_image_paths(self):

+ pass

+

+ def optimize_parameters(self):

+ pass

+

+ def get_current_visuals(self):

+ return self.input

+

+ def get_current_errors(self):

+ return {}

+

+ def save(self, label):

+ pass

+

+ # helper saving function that can be used by subclasses

+ def save_network(self, network, path, network_label, epoch_label):

+ save_filename = "%s_net_%s.pth" % (epoch_label, network_label)

+ save_path = os.path.join(path, save_filename)

+ torch.save(network.state_dict(), save_path)

+

+ # helper loading function that can be used by subclasses

+ def load_network(self, network, network_label, epoch_label):

+ save_filename = "%s_net_%s.pth" % (epoch_label, network_label)

+ save_path = os.path.join(self.save_dir, save_filename)

+ print("Loading network from %s" % save_path)

+ network.load_state_dict(torch.load(save_path))

+

+ def update_learning_rate():

+ pass

+

+ def get_image_paths(self):

+ return self.image_paths

+

+ def save_done(self, flag=False):

+ np.save(os.path.join(self.save_dir, "done_flag"), flag)

+ np.savetxt(

+ os.path.join(self.save_dir, "done_flag"),

+ [

+ flag,

+ ],

+ fmt="%i",

+ )

diff --git a/losses/masked_lpips/dist_model.py b/losses/masked_lpips/dist_model.py

new file mode 100644

index 0000000000000000000000000000000000000000..ddee6c38c14a43e4171ac485b86483918492e84c

--- /dev/null

+++ b/losses/masked_lpips/dist_model.py

@@ -0,0 +1,325 @@

+from __future__ import absolute_import

+

+import sys

+import numpy as np

+import torch

+from torch import nn

+import os

+from collections import OrderedDict

+from torch.autograd import Variable

+import itertools

+from .base_model import BaseModel

+from scipy.ndimage import zoom

+import fractions

+import functools

+import skimage.transform

+from tqdm import tqdm

+

+from IPython import embed

+

+from . import networks_basic as netw

+from losses import masked_lpips as util

+

+

+class DistModel(BaseModel):

+ def name(self):

+ return self.model_name

+

+ def initialize(

+ self,

+ model="net-lin",

+ net="alex",

+ vgg_blocks=[1, 2, 3, 4, 5],

+ colorspace="Lab",

+ pnet_rand=False,

+ pnet_tune=False,

+ model_path=None,

+ use_gpu=True,

+ printNet=False,

+ spatial=False,

+ is_train=False,

+ lr=0.0001,

+ beta1=0.5,

+ version="0.1",

+ gpu_ids=[0],

+ ):

+ """

+ INPUTS

+ model - ['net-lin'] for linearly calibrated network

+ ['net'] for off-the-shelf network

+ ['L2'] for L2 distance in Lab colorspace

+ ['SSIM'] for ssim in RGB colorspace

+ net - ['squeeze','alex','vgg']

+ model_path - if None, will look in weights/[NET_NAME].pth

+ colorspace - ['Lab','RGB'] colorspace to use for L2 and SSIM

+ use_gpu - bool - whether or not to use a GPU

+ printNet - bool - whether or not to print network architecture out

+ spatial - bool - whether to output an array containing varying distances across spatial dimensions

+ spatial_shape - if given, output spatial shape. if None then spatial shape is determined automatically via spatial_factor (see below).

+ spatial_factor - if given, specifies upsampling factor relative to the largest spatial extent of a convolutional layer. if None then resized to size of input images.

+ spatial_order - spline order of filter for upsampling in spatial mode, by default 1 (bilinear).

+ is_train - bool - [True] for training mode

+ lr - float - initial learning rate

+ beta1 - float - initial momentum term for adam

+ version - 0.1 for latest, 0.0 was original (with a bug)

+ gpu_ids - int array - [0] by default, gpus to use

+ """

+ BaseModel.initialize(self, use_gpu=use_gpu, gpu_ids=gpu_ids)

+

+ self.model = model

+ self.net = net

+ self.is_train = is_train

+ self.spatial = spatial

+ self.gpu_ids = gpu_ids

+ self.model_name = "%s [%s]" % (model, net)

+

+ if self.model == "net-lin": # pretrained net + linear layer

+ self.net = netw.PNetLin(

+ pnet_rand=pnet_rand,

+ pnet_tune=pnet_tune,

+ pnet_type=net,

+ use_dropout=True,

+ spatial=spatial,

+ version=version,

+ lpips=True,

+ vgg_blocks=vgg_blocks,

+ )

+ kw = {}

+ if not use_gpu:

+ kw["map_location"] = "cpu"

+ if model_path is None:

+ import inspect

+

+ model_path = os.path.abspath(

+ os.path.join(

+ inspect.getfile(self.initialize),

+ "..",

+ "weights/v%s/%s.pth" % (version, net),

+ )

+ )

+

+ if not is_train:

+ print("Loading model from: %s" % model_path)

+ self.net.load_state_dict(torch.load(model_path, **kw), strict=False)

+

+ elif self.model == "net": # pretrained network

+ self.net = netw.PNetLin(pnet_rand=pnet_rand, pnet_type=net, lpips=False)

+ elif self.model in ["L2", "l2"]:

+ self.net = netw.L2(

+ use_gpu=use_gpu, colorspace=colorspace

+ ) # not really a network, only for testing

+ self.model_name = "L2"

+ elif self.model in ["DSSIM", "dssim", "SSIM", "ssim"]:

+ self.net = netw.DSSIM(use_gpu=use_gpu, colorspace=colorspace)

+ self.model_name = "SSIM"

+ else:

+ raise ValueError("Model [%s] not recognized." % self.model)

+

+ self.parameters = list(self.net.parameters())

+

+ if self.is_train: # training mode

+ # extra network on top to go from distances (d0,d1) => predicted human judgment (h*)

+ self.rankLoss = netw.BCERankingLoss()

+ self.parameters += list(self.rankLoss.net.parameters())

+ self.lr = lr

+ self.old_lr = lr

+ self.optimizer_net = torch.optim.Adam(

+ self.parameters, lr=lr, betas=(beta1, 0.999)

+ )

+ else: # test mode

+ self.net.eval()

+

+ if use_gpu:

+ self.net.to(gpu_ids[0])

+ self.net = torch.nn.DataParallel(self.net, device_ids=gpu_ids)

+ if self.is_train:

+ self.rankLoss = self.rankLoss.to(

+ device=gpu_ids[0]

+ ) # just put this on GPU0

+

+ if printNet:

+ print("---------- Networks initialized -------------")

+ netw.print_network(self.net)

+ print("-----------------------------------------------")

+

+ def forward(self, in0, in1, mask=None, retPerLayer=False):

+ """Function computes the distance between image patches in0 and in1

+ INPUTS

+ in0, in1 - torch.Tensor object of shape Nx3xXxY - image patch scaled to [-1,1]

+ OUTPUT

+ computed distances between in0 and in1

+ """

+

+ return self.net.forward(in0, in1, mask=mask, retPerLayer=retPerLayer)

+

+ # ***** TRAINING FUNCTIONS *****

+ def optimize_parameters(self):

+ self.forward_train()

+ self.optimizer_net.zero_grad()

+ self.backward_train()

+ self.optimizer_net.step()

+ self.clamp_weights()

+

+ def clamp_weights(self):

+ for module in self.net.modules():

+ if hasattr(module, "weight") and module.kernel_size == (1, 1):

+ module.weight.data = torch.clamp(module.weight.data, min=0)

+

+ def set_input(self, data):

+ self.input_ref = data["ref"]

+ self.input_p0 = data["p0"]

+ self.input_p1 = data["p1"]

+ self.input_judge = data["judge"]

+

+ if self.use_gpu:

+ self.input_ref = self.input_ref.to(device=self.gpu_ids[0])

+ self.input_p0 = self.input_p0.to(device=self.gpu_ids[0])

+ self.input_p1 = self.input_p1.to(device=self.gpu_ids[0])

+ self.input_judge = self.input_judge.to(device=self.gpu_ids[0])

+

+ self.var_ref = Variable(self.input_ref, requires_grad=True)

+ self.var_p0 = Variable(self.input_p0, requires_grad=True)

+ self.var_p1 = Variable(self.input_p1, requires_grad=True)

+

+ def forward_train(self): # run forward pass

+ # print(self.net.module.scaling_layer.shift)

+ # print(torch.norm(self.net.module.net.slice1[0].weight).item(), torch.norm(self.net.module.lin0.model[1].weight).item())

+

+ self.d0 = self.forward(self.var_ref, self.var_p0)

+ self.d1 = self.forward(self.var_ref, self.var_p1)

+ self.acc_r = self.compute_accuracy(self.d0, self.d1, self.input_judge)

+

+ self.var_judge = Variable(1.0 * self.input_judge).view(self.d0.size())

+

+ self.loss_total = self.rankLoss.forward(

+ self.d0, self.d1, self.var_judge * 2.0 - 1.0

+ )

+

+ return self.loss_total

+

+ def backward_train(self):

+ torch.mean(self.loss_total).backward()

+

+ def compute_accuracy(self, d0, d1, judge):

+ """ d0, d1 are Variables, judge is a Tensor """

+ d1_lt_d0 = (d1 < d0).cpu().data.numpy().flatten()

+ judge_per = judge.cpu().numpy().flatten()

+ return d1_lt_d0 * judge_per + (1 - d1_lt_d0) * (1 - judge_per)

+

+ def get_current_errors(self):

+ retDict = OrderedDict(

+ [("loss_total", self.loss_total.data.cpu().numpy()), ("acc_r", self.acc_r)]

+ )

+

+ for key in retDict.keys():

+ retDict[key] = np.mean(retDict[key])

+

+ return retDict

+

+ def get_current_visuals(self):

+ zoom_factor = 256 / self.var_ref.data.size()[2]

+

+ ref_img = util.tensor2im(self.var_ref.data)

+ p0_img = util.tensor2im(self.var_p0.data)

+ p1_img = util.tensor2im(self.var_p1.data)

+

+ ref_img_vis = zoom(ref_img, [zoom_factor, zoom_factor, 1], order=0)

+ p0_img_vis = zoom(p0_img, [zoom_factor, zoom_factor, 1], order=0)

+ p1_img_vis = zoom(p1_img, [zoom_factor, zoom_factor, 1], order=0)

+

+ return OrderedDict(

+ [("ref", ref_img_vis), ("p0", p0_img_vis), ("p1", p1_img_vis)]

+ )

+

+ def save(self, path, label):

+ if self.use_gpu:

+ self.save_network(self.net.module, path, "", label)

+ else:

+ self.save_network(self.net, path, "", label)

+ self.save_network(self.rankLoss.net, path, "rank", label)

+

+ def update_learning_rate(self, nepoch_decay):

+ lrd = self.lr / nepoch_decay

+ lr = self.old_lr - lrd

+

+ for param_group in self.optimizer_net.param_groups:

+ param_group["lr"] = lr

+

+ print("update lr [%s] decay: %f -> %f" % (type, self.old_lr, lr))

+ self.old_lr = lr

+

+

+def score_2afc_dataset(data_loader, func, name=""):

+ """Function computes Two Alternative Forced Choice (2AFC) score using

+ distance function 'func' in dataset 'data_loader'

+ INPUTS

+ data_loader - CustomDatasetDataLoader object - contains a TwoAFCDataset inside

+ func - callable distance function - calling d=func(in0,in1) should take 2

+ pytorch tensors with shape Nx3xXxY, and return numpy array of length N

+ OUTPUTS

+ [0] - 2AFC score in [0,1], fraction of time func agrees with human evaluators

+ [1] - dictionary with following elements

+ d0s,d1s - N arrays containing distances between reference patch to perturbed patches

+ gts - N array in [0,1], preferred patch selected by human evaluators

+ (closer to "0" for left patch p0, "1" for right patch p1,

+ "0.6" means 60pct people preferred right patch, 40pct preferred left)

+ scores - N array in [0,1], corresponding to what percentage function agreed with humans

+ CONSTS

+ N - number of test triplets in data_loader

+ """

+

+ d0s = []

+ d1s = []

+ gts = []

+

+ for data in tqdm(data_loader.load_data(), desc=name):

+ d0s += func(data["ref"], data["p0"]).data.cpu().numpy().flatten().tolist()

+ d1s += func(data["ref"], data["p1"]).data.cpu().numpy().flatten().tolist()

+ gts += data["judge"].cpu().numpy().flatten().tolist()

+

+ d0s = np.array(d0s)

+ d1s = np.array(d1s)

+ gts = np.array(gts)

+ scores = (d0s < d1s) * (1.0 - gts) + (d1s < d0s) * gts + (d1s == d0s) * 0.5

+

+ return (np.mean(scores), dict(d0s=d0s, d1s=d1s, gts=gts, scores=scores))

+

+

+def score_jnd_dataset(data_loader, func, name=""):

+ """Function computes JND score using distance function 'func' in dataset 'data_loader'

+ INPUTS

+ data_loader - CustomDatasetDataLoader object - contains a JNDDataset inside

+ func - callable distance function - calling d=func(in0,in1) should take 2

+ pytorch tensors with shape Nx3xXxY, and return pytorch array of length N

+ OUTPUTS

+ [0] - JND score in [0,1], mAP score (area under precision-recall curve)

+ [1] - dictionary with following elements

+ ds - N array containing distances between two patches shown to human evaluator

+ sames - N array containing fraction of people who thought the two patches were identical

+ CONSTS

+ N - number of test triplets in data_loader

+ """

+

+ ds = []

+ gts = []

+

+ for data in tqdm(data_loader.load_data(), desc=name):

+ ds += func(data["p0"], data["p1"]).data.cpu().numpy().tolist()

+ gts += data["same"].cpu().numpy().flatten().tolist()

+

+ sames = np.array(gts)

+ ds = np.array(ds)

+

+ sorted_inds = np.argsort(ds)

+ ds_sorted = ds[sorted_inds]

+ sames_sorted = sames[sorted_inds]

+

+ TPs = np.cumsum(sames_sorted)

+ FPs = np.cumsum(1 - sames_sorted)

+ FNs = np.sum(sames_sorted) - TPs

+

+ precs = TPs / (TPs + FPs)

+ recs = TPs / (TPs + FNs)

+ score = util.voc_ap(recs, precs)

+

+ return (score, dict(ds=ds, sames=sames))

diff --git a/losses/masked_lpips/networks_basic.py b/losses/masked_lpips/networks_basic.py

new file mode 100644

index 0000000000000000000000000000000000000000..ea81e39c338bc13c7597f57e260e35612b8d2aab

--- /dev/null

+++ b/losses/masked_lpips/networks_basic.py

@@ -0,0 +1,331 @@

+from __future__ import absolute_import

+

+import sys

+import torch

+import torch.nn as nn

+import torch.nn.init as init

+from torch.autograd import Variable

+from torch.nn import functional as F

+import numpy as np

+from pdb import set_trace as st

+from skimage import color

+from IPython import embed

+from . import pretrained_networks as pn

+

+from losses import masked_lpips as util

+

+

+def spatial_average(in_tens, mask=None, keepdim=True):

+ if mask is None:

+ return in_tens.mean([2, 3], keepdim=keepdim)

+ else:

+ in_tens = in_tens * mask

+

+ # sum masked_in_tens across spatial dims

+ in_tens = in_tens.sum([2, 3], keepdim=keepdim)

+ in_tens = in_tens / torch.sum(mask)

+

+ return in_tens

+

+

+def upsample(in_tens, out_H=64): # assumes scale factor is same for H and W

+ in_H = in_tens.shape[2]

+ scale_factor = 1.0 * out_H / in_H

+

+ return nn.Upsample(scale_factor=scale_factor, mode="bilinear", align_corners=False)(

+ in_tens

+ )

+

+

+# Learned perceptual metric

+class PNetLin(nn.Module):

+ def __init__(

+ self,

+ pnet_type="vgg",

+ pnet_rand=False,

+ pnet_tune=False,

+ use_dropout=True,

+ spatial=False,

+ version="0.1",

+ lpips=True,

+ vgg_blocks=[1, 2, 3, 4, 5]

+ ):

+ super(PNetLin, self).__init__()

+

+ self.pnet_type = pnet_type

+ self.pnet_tune = pnet_tune

+ self.pnet_rand = pnet_rand

+ self.spatial = spatial

+ self.lpips = lpips

+ self.version = version

+ self.scaling_layer = ScalingLayer()

+

+ if self.pnet_type in ["vgg", "vgg16"]:

+ net_type = pn.vgg16

+ self.blocks = vgg_blocks

+ self.chns = []

+ self.chns = [64, 128, 256, 512, 512]

+

+ elif self.pnet_type == "alex":

+ net_type = pn.alexnet

+ self.chns = [64, 192, 384, 256, 256]

+ elif self.pnet_type == "squeeze":

+ net_type = pn.squeezenet

+ self.chns = [64, 128, 256, 384, 384, 512, 512]

+ self.L = len(self.chns)

+

+ self.net = net_type(pretrained=not self.pnet_rand, requires_grad=self.pnet_tune)

+

+ if lpips:

+ self.lin0 = NetLinLayer(self.chns[0], use_dropout=use_dropout)

+ self.lin1 = NetLinLayer(self.chns[1], use_dropout=use_dropout)

+ self.lin2 = NetLinLayer(self.chns[2], use_dropout=use_dropout)

+ self.lin3 = NetLinLayer(self.chns[3], use_dropout=use_dropout)

+ self.lin4 = NetLinLayer(self.chns[4], use_dropout=use_dropout)

+ self.lins = [self.lin0, self.lin1, self.lin2, self.lin3, self.lin4]

+ #self.lins = [self.lin0, self.lin1, self.lin2, self.lin3, self.lin4]

+ if self.pnet_type == "squeeze": # 7 layers for squeezenet

+ self.lin5 = NetLinLayer(self.chns[5], use_dropout=use_dropout)

+ self.lin6 = NetLinLayer(self.chns[6], use_dropout=use_dropout)

+ self.lins += [self.lin5, self.lin6]

+

+ def forward(self, in0, in1, mask=None, retPerLayer=False):

+ # blocks: list of layer names

+

+ # v0.0 - original release had a bug, where input was not scaled

+ in0_input, in1_input = (

+ (self.scaling_layer(in0), self.scaling_layer(in1))

+ if self.version == "0.1"

+ else (in0, in1)

+ )

+ outs0, outs1 = self.net.forward(in0_input), self.net.forward(in1_input)

+ feats0, feats1, diffs = {}, {}, {}

+

+ # prepare list of masks at different resolutions

+ if mask is not None:

+ masks = []

+ if len(mask.shape) == 3:

+ mask = torch.unsqueeze(mask, axis=0) # 4D

+

+ for kk in range(self.L):

+ N, C, H, W = outs0[kk].shape

+ mask = F.interpolate(mask, size=(H, W), mode="nearest")

+ masks.append(mask)

+

+ """

+ outs0 has 5 feature maps

+ 1. [1, 64, 256, 256]

+ 2. [1, 128, 128, 128]

+ 3. [1, 256, 64, 64]

+ 4. [1, 512, 32, 32]

+ 5. [1, 512, 16, 16]

+ """

+ for kk in range(self.L):

+ feats0[kk], feats1[kk] = (

+ util.normalize_tensor(outs0[kk]),

+ util.normalize_tensor(outs1[kk]),

+ )

+ diffs[kk] = (feats0[kk] - feats1[kk]) ** 2

+

+ if self.lpips:

+ if self.spatial:

+ res = [

+ upsample(self.lins[kk].model(diffs[kk]), out_H=in0.shape[2])

+ for kk in range(self.L)

+ ]

+ else:

+ # NOTE: this block is used

+ # self.lins has 5 elements, where each element is a layer of LIN

+ """

+ self.lin0 = NetLinLayer(self.chns[0], use_dropout=use_dropout)

+ self.lin1 = NetLinLayer(self.chns[1], use_dropout=use_dropout)

+ self.lin2 = NetLinLayer(self.chns[2], use_dropout=use_dropout)

+ self.lin3 = NetLinLayer(self.chns[3], use_dropout=use_dropout)

+ self.lin4 = NetLinLayer(self.chns[4], use_dropout=use_dropout)

+ self.lins = [self.lin0,self.lin1,self.lin2,self.lin3,self.lin4]

+ """

+

+ # NOTE:

+ # Each lins is applying a 1x1 conv on the spatial tensor to output 1 channel

+ # Therefore, to prevent this problem, we can simply mask out the activations

+ # in the spatial_average block. Right now, spatial_average does a spatial mean.

+ # We can mask out the tensor and then consider only on pixels for the mean op.

+ res = [

+ spatial_average(

+ self.lins[kk].model(diffs[kk]),

+ mask=masks[kk] if mask is not None else None,

+ keepdim=True,

+ )

+ for kk in range(self.L)

+ ]

+ else:

+ if self.spatial:

+ res = [

+ upsample(diffs[kk].sum(dim=1, keepdim=True), out_H=in0.shape[2])

+ for kk in range(self.L)

+ ]

+ else:

+ res = [

+ spatial_average(diffs[kk].sum(dim=1, keepdim=True), keepdim=True)

+ for kk in range(self.L)

+ ]

+

+ '''

+ val = res[0]

+ for l in range(1, self.L):

+ val += res[l]

+ '''

+

+ val = 0.0

+ for l in range(self.L):

+ # l is going to run from 0 to 4

+ # check if (l + 1), i.e., [1 -> 5] in self.blocks, then count the loss

+ if str(l + 1) in self.blocks:

+ val += res[l]

+

+ if retPerLayer:

+ return (val, res)

+ else:

+ return val

+

+

+class ScalingLayer(nn.Module):

+ def __init__(self):

+ super(ScalingLayer, self).__init__()

+ self.register_buffer(

+ "shift", torch.Tensor([-0.030, -0.088, -0.188])[None, :, None, None]

+ )

+ self.register_buffer(

+ "scale", torch.Tensor([0.458, 0.448, 0.450])[None, :, None, None]

+ )

+

+ def forward(self, inp):

+ return (inp - self.shift) / self.scale

+

+

+class NetLinLayer(nn.Module):

+ """ A single linear layer which does a 1x1 conv """

+

+ def __init__(self, chn_in, chn_out=1, use_dropout=False):

+ super(NetLinLayer, self).__init__()

+

+ layers = (

+ [

+ nn.Dropout(),

+ ]

+ if (use_dropout)

+ else []

+ )

+ layers += [

+ nn.Conv2d(chn_in, chn_out, 1, stride=1, padding=0, bias=False),

+ ]

+ self.model = nn.Sequential(*layers)

+

+

+class Dist2LogitLayer(nn.Module):

+ """ takes 2 distances, puts through fc layers, spits out value between [0,1] (if use_sigmoid is True) """

+

+ def __init__(self, chn_mid=32, use_sigmoid=True):

+ super(Dist2LogitLayer, self).__init__()

+

+ layers = [

+ nn.Conv2d(5, chn_mid, 1, stride=1, padding=0, bias=True),

+ ]

+ layers += [

+ nn.LeakyReLU(0.2, True),

+ ]

+ layers += [

+ nn.Conv2d(chn_mid, chn_mid, 1, stride=1, padding=0, bias=True),

+ ]

+ layers += [

+ nn.LeakyReLU(0.2, True),

+ ]

+ layers += [

+ nn.Conv2d(chn_mid, 1, 1, stride=1, padding=0, bias=True),

+ ]

+ if use_sigmoid:

+ layers += [

+ nn.Sigmoid(),

+ ]

+ self.model = nn.Sequential(*layers)

+

+ def forward(self, d0, d1, eps=0.1):

+ return self.model.forward(

+ torch.cat((d0, d1, d0 - d1, d0 / (d1 + eps), d1 / (d0 + eps)), dim=1)

+ )

+

+

+class BCERankingLoss(nn.Module):

+ def __init__(self, chn_mid=32):

+ super(BCERankingLoss, self).__init__()

+ self.net = Dist2LogitLayer(chn_mid=chn_mid)

+ # self.parameters = list(self.net.parameters())

+ self.loss = torch.nn.BCELoss()

+

+ def forward(self, d0, d1, judge):

+ per = (judge + 1.0) / 2.0

+ self.logit = self.net.forward(d0, d1)

+ return self.loss(self.logit, per)

+

+

+# L2, DSSIM metrics

+class FakeNet(nn.Module):

+ def __init__(self, use_gpu=True, colorspace="Lab"):

+ super(FakeNet, self).__init__()

+ self.use_gpu = use_gpu

+ self.colorspace = colorspace

+

+

+class L2(FakeNet):

+ def forward(self, in0, in1, retPerLayer=None):

+ assert in0.size()[0] == 1 # currently only supports batchSize 1

+

+ if self.colorspace == "RGB":

+ (N, C, X, Y) = in0.size()

+ value = torch.mean(

+ torch.mean(

+ torch.mean((in0 - in1) ** 2, dim=1).view(N, 1, X, Y), dim=2

+ ).view(N, 1, 1, Y),

+ dim=3,

+ ).view(N)

+ return value

+ elif self.colorspace == "Lab":

+ value = util.l2(

+ util.tensor2np(util.tensor2tensorlab(in0.data, to_norm=False)),

+ util.tensor2np(util.tensor2tensorlab(in1.data, to_norm=False)),

+ range=100.0,

+ ).astype("float")

+ ret_var = Variable(torch.Tensor((value,)))

+ if self.use_gpu:

+ ret_var = ret_var.cuda()

+ return ret_var

+

+

+class DSSIM(FakeNet):

+ def forward(self, in0, in1, retPerLayer=None):

+ assert in0.size()[0] == 1 # currently only supports batchSize 1

+

+ if self.colorspace == "RGB":

+ value = util.dssim(

+ 1.0 * util.tensor2im(in0.data),

+ 1.0 * util.tensor2im(in1.data),

+ range=255.0,

+ ).astype("float")

+ elif self.colorspace == "Lab":

+ value = util.dssim(

+ util.tensor2np(util.tensor2tensorlab(in0.data, to_norm=False)),

+ util.tensor2np(util.tensor2tensorlab(in1.data, to_norm=False)),

+ range=100.0,

+ ).astype("float")

+ ret_var = Variable(torch.Tensor((value,)))

+ if self.use_gpu:

+ ret_var = ret_var.cuda()

+ return ret_var

+

+

+def print_network(net):

+ num_params = 0

+ for param in net.parameters():

+ num_params += param.numel()

+ print("Network", net)

+ print("Total number of parameters: %d" % num_params)

diff --git a/losses/masked_lpips/pretrained_networks.py b/losses/masked_lpips/pretrained_networks.py

new file mode 100644

index 0000000000000000000000000000000000000000..c251390679737ebf7ae279cd93571d369b41796e

--- /dev/null

+++ b/losses/masked_lpips/pretrained_networks.py

@@ -0,0 +1,190 @@

+from collections import namedtuple

+import torch

+from torchvision import models as tv

+from IPython import embed

+

+

+class squeezenet(torch.nn.Module):

+ def __init__(self, requires_grad=False, pretrained=True):

+ super(squeezenet, self).__init__()

+ pretrained_features = tv.squeezenet1_1(pretrained=pretrained).features

+ self.slice1 = torch.nn.Sequential()

+ self.slice2 = torch.nn.Sequential()

+ self.slice3 = torch.nn.Sequential()

+ self.slice4 = torch.nn.Sequential()

+ self.slice5 = torch.nn.Sequential()

+ self.slice6 = torch.nn.Sequential()

+ self.slice7 = torch.nn.Sequential()

+ self.N_slices = 7

+ for x in range(2):

+ self.slice1.add_module(str(x), pretrained_features[x])

+ for x in range(2, 5):

+ self.slice2.add_module(str(x), pretrained_features[x])

+ for x in range(5, 8):

+ self.slice3.add_module(str(x), pretrained_features[x])

+ for x in range(8, 10):

+ self.slice4.add_module(str(x), pretrained_features[x])

+ for x in range(10, 11):

+ self.slice5.add_module(str(x), pretrained_features[x])

+ for x in range(11, 12):

+ self.slice6.add_module(str(x), pretrained_features[x])

+ for x in range(12, 13):

+ self.slice7.add_module(str(x), pretrained_features[x])

+ if not requires_grad:

+ for param in self.parameters():

+ param.requires_grad = False

+

+ def forward(self, X):

+ h = self.slice1(X)

+ h_relu1 = h

+ h = self.slice2(h)

+ h_relu2 = h

+ h = self.slice3(h)

+ h_relu3 = h

+ h = self.slice4(h)

+ h_relu4 = h

+ h = self.slice5(h)

+ h_relu5 = h

+ h = self.slice6(h)

+ h_relu6 = h

+ h = self.slice7(h)

+ h_relu7 = h

+ vgg_outputs = namedtuple(

+ "SqueezeOutputs",

+ ["relu1", "relu2", "relu3", "relu4", "relu5", "relu6", "relu7"],

+ )

+ out = vgg_outputs(h_relu1, h_relu2, h_relu3, h_relu4, h_relu5, h_relu6, h_relu7)

+

+ return out

+

+

+class alexnet(torch.nn.Module):

+ def __init__(self, requires_grad=False, pretrained=True):

+ super(alexnet, self).__init__()

+ alexnet_pretrained_features = tv.alexnet(pretrained=pretrained).features

+ self.slice1 = torch.nn.Sequential()

+ self.slice2 = torch.nn.Sequential()

+ self.slice3 = torch.nn.Sequential()

+ self.slice4 = torch.nn.Sequential()

+ self.slice5 = torch.nn.Sequential()

+ self.N_slices = 5

+ for x in range(2):

+ self.slice1.add_module(str(x), alexnet_pretrained_features[x])

+ for x in range(2, 5):

+ self.slice2.add_module(str(x), alexnet_pretrained_features[x])

+ for x in range(5, 8):

+ self.slice3.add_module(str(x), alexnet_pretrained_features[x])

+ for x in range(8, 10):

+ self.slice4.add_module(str(x), alexnet_pretrained_features[x])

+ for x in range(10, 12):

+ self.slice5.add_module(str(x), alexnet_pretrained_features[x])

+ if not requires_grad:

+ for param in self.parameters():

+ param.requires_grad = False

+

+ def forward(self, X):

+ h = self.slice1(X)

+ h_relu1 = h

+ h = self.slice2(h)

+ h_relu2 = h

+ h = self.slice3(h)

+ h_relu3 = h

+ h = self.slice4(h)

+ h_relu4 = h

+ h = self.slice5(h)

+ h_relu5 = h

+ alexnet_outputs = namedtuple(

+ "AlexnetOutputs", ["relu1", "relu2", "relu3", "relu4", "relu5"]

+ )

+ out = alexnet_outputs(h_relu1, h_relu2, h_relu3, h_relu4, h_relu5)

+

+ return out

+

+

+class vgg16(torch.nn.Module):

+ def __init__(self, requires_grad=False, pretrained=True):

+ super(vgg16, self).__init__()

+ vgg_pretrained_features = tv.vgg16(pretrained=pretrained).features

+ self.slice1 = torch.nn.Sequential()

+ self.slice2 = torch.nn.Sequential()

+ self.slice3 = torch.nn.Sequential()

+ self.slice4 = torch.nn.Sequential()

+ self.slice5 = torch.nn.Sequential()

+ self.N_slices = 5

+ for x in range(4):

+ self.slice1.add_module(str(x), vgg_pretrained_features[x])

+ for x in range(4, 9):

+ self.slice2.add_module(str(x), vgg_pretrained_features[x])

+ for x in range(9, 16):

+ self.slice3.add_module(str(x), vgg_pretrained_features[x])

+ for x in range(16, 23):

+ self.slice4.add_module(str(x), vgg_pretrained_features[x])

+ for x in range(23, 30):

+ self.slice5.add_module(str(x), vgg_pretrained_features[x])

+ if not requires_grad:

+ for param in self.parameters():

+ param.requires_grad = False

+

+ def forward(self, X):

+ h = self.slice1(X)

+ h_relu1_2 = h

+ h = self.slice2(h)

+ h_relu2_2 = h

+ h = self.slice3(h)

+ h_relu3_3 = h

+ h = self.slice4(h)

+ h_relu4_3 = h

+ h = self.slice5(h)

+ h_relu5_3 = h

+

+ vgg_outputs = namedtuple(

+ "VggOutputs", ["relu1_2", "relu2_2", "relu3_3", "relu4_3", "relu5_3"]

+ )

+ out = vgg_outputs(h_relu1_2, h_relu2_2, h_relu3_3, h_relu4_3, h_relu5_3)

+

+ return out

+

+

+class resnet(torch.nn.Module):

+ def __init__(self, requires_grad=False, pretrained=True, num=18):

+ super(resnet, self).__init__()

+ if num == 18:

+ self.net = tv.resnet18(pretrained=pretrained)

+ elif num == 34:

+ self.net = tv.resnet34(pretrained=pretrained)

+ elif num == 50:

+ self.net = tv.resnet50(pretrained=pretrained)

+ elif num == 101:

+ self.net = tv.resnet101(pretrained=pretrained)

+ elif num == 152:

+ self.net = tv.resnet152(pretrained=pretrained)

+ self.N_slices = 5

+

+ self.conv1 = self.net.conv1

+ self.bn1 = self.net.bn1

+ self.relu = self.net.relu

+ self.maxpool = self.net.maxpool

+ self.layer1 = self.net.layer1

+ self.layer2 = self.net.layer2

+ self.layer3 = self.net.layer3

+ self.layer4 = self.net.layer4

+

+ def forward(self, X):

+ h = self.conv1(X)

+ h = self.bn1(h)

+ h = self.relu(h)

+ h_relu1 = h

+ h = self.maxpool(h)

+ h = self.layer1(h)

+ h_conv2 = h

+ h = self.layer2(h)

+ h_conv3 = h

+ h = self.layer3(h)

+ h_conv4 = h

+ h = self.layer4(h)

+ h_conv5 = h

+

+ outputs = namedtuple("Outputs", ["relu1", "conv2", "conv3", "conv4", "conv5"])

+ out = outputs(h_relu1, h_conv2, h_conv3, h_conv4, h_conv5)

+

+ return out

diff --git a/losses/pp_losses.py b/losses/pp_losses.py

new file mode 100644

index 0000000000000000000000000000000000000000..29d2d5694a748f9a77d74774fbcc10dd8a7efee8

--- /dev/null

+++ b/losses/pp_losses.py

@@ -0,0 +1,677 @@

+from dataclasses import dataclass

+

+import torch.nn as nn

+import torch.nn.functional as F

+from torchvision import transforms as T

+

+from utils.bicubic import BicubicDownSample

+

+normalize = T.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])

+

+@dataclass

+class DefaultPaths:

+ psp_path: str = "pretrained_models/psp_ffhq_encode.pt"

+ ir_se50_path: str = "pretrained_models/ArcFace/ir_se50.pth"

+ stylegan_weights: str = "pretrained_models/stylegan2-ffhq-config-f.pt"

+ stylegan_car_weights: str = "pretrained_models/stylegan2-car-config-f-new.pkl"

+ stylegan_weights_pkl: str = (

+ "pretrained_models/stylegan2-ffhq-config-f.pkl"

+ )

+ arcface_model_path: str = "pretrained_models/ArcFace/backbone_ir50.pth"

+ moco: str = "pretrained_models/moco_v2_800ep_pretrain.pt"

+

+

+from collections import namedtuple

+from torch.nn import (

+ Conv2d,

+ BatchNorm2d,

+ PReLU,

+ ReLU,

+ Sigmoid,

+ MaxPool2d,

+ AdaptiveAvgPool2d,

+ Sequential,

+ Module,

+ Dropout,

+ Linear,

+ BatchNorm1d,

+)

+

+"""

+ArcFace implementation from [TreB1eN](https://github.com/TreB1eN/InsightFace_Pytorch)

+"""

+

+

+class Flatten(Module):

+ def forward(self, input):

+ return input.view(input.size(0), -1)

+

+

+def l2_norm(input, axis=1):

+ norm = torch.norm(input, 2, axis, True)

+ output = torch.div(input, norm)

+ return output

+

+

+class Bottleneck(namedtuple("Block", ["in_channel", "depth", "stride"])):

+ """A named tuple describing a ResNet block."""

+

+

+def get_block(in_channel, depth, num_units, stride=2):

+ return [Bottleneck(in_channel, depth, stride)] + [

+ Bottleneck(depth, depth, 1) for i in range(num_units - 1)

+ ]

+

+

+def get_blocks(num_layers):

+ if num_layers == 50:

+ blocks = [

+ get_block(in_channel=64, depth=64, num_units=3),

+ get_block(in_channel=64, depth=128, num_units=4),

+ get_block(in_channel=128, depth=256, num_units=14),

+ get_block(in_channel=256, depth=512, num_units=3),

+ ]

+ elif num_layers == 100:

+ blocks = [

+ get_block(in_channel=64, depth=64, num_units=3),

+ get_block(in_channel=64, depth=128, num_units=13),

+ get_block(in_channel=128, depth=256, num_units=30),

+ get_block(in_channel=256, depth=512, num_units=3),

+ ]

+ elif num_layers == 152:

+ blocks = [

+ get_block(in_channel=64, depth=64, num_units=3),

+ get_block(in_channel=64, depth=128, num_units=8),

+ get_block(in_channel=128, depth=256, num_units=36),

+ get_block(in_channel=256, depth=512, num_units=3),

+ ]

+ else:

+ raise ValueError(

+ "Invalid number of layers: {}. Must be one of [50, 100, 152]".format(

+ num_layers

+ )

+ )

+ return blocks

+

+

+class SEModule(Module):

+ def __init__(self, channels, reduction):

+ super(SEModule, self).__init__()

+ self.avg_pool = AdaptiveAvgPool2d(1)

+ self.fc1 = Conv2d(

+ channels, channels // reduction, kernel_size=1, padding=0, bias=False

+ )

+ self.relu = ReLU(inplace=True)

+ self.fc2 = Conv2d(

+ channels // reduction, channels, kernel_size=1, padding=0, bias=False

+ )

+ self.sigmoid = Sigmoid()

+

+ def forward(self, x):

+ module_input = x

+ x = self.avg_pool(x)

+ x = self.fc1(x)

+ x = self.relu(x)

+ x = self.fc2(x)

+ x = self.sigmoid(x)

+ return module_input * x

+

+