model_id | model_card | model_labels |

|---|---|---|

hmandsager/detr-resnet-50_finetuned_cppe5

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10
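The card lists a `linear` scheduler but no warmup length or total step count. As a rough sketch, assuming zero warmup steps and a hypothetical run of 1,000 optimizer steps (neither is reported here), a linear schedule lowers the learning rate from 1e-05 to 0 in a straight line:

```python
def linear_lr(step: int, total_steps: int, base_lr: float = 1e-5, warmup_steps: int = 0) -> float:
    """Learning rate after `step` optimizer steps: linear warmup, then linear decay to 0."""
    if step < warmup_steps:
        # Warmup phase: ramp linearly from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: fall linearly from base_lr to 0 at total_steps, never below 0.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Hypothetical run length; the card does not report the number of steps.
TOTAL_STEPS = 1000
for step in (0, 250, 500, 750, 1000):
    print(step, linear_lr(step, TOTAL_STEPS))
```

This has the same shape as the linear schedule with warmup in Transformers; whether this particular run used any warmup is not stated.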

### Training results

### Framework versions

- Transformers 4.40.2

- Pytorch 2.2.2

- Datasets 2.19.1

- Tokenizers 0.19.1
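The model_labels entry for this model lists five class names. A minimal sketch of turning such a list into the `id2label` / `label2id` mappings stored in a Transformers model config, assuming the list order matches the class indices (the dump does not state this explicitly):

```python
# Class names from this model's model_labels entry.
labels = ["coverall", "face_shield", "gloves", "goggles", "mask"]

# Assumption: position in the list is the class index.
id2label = {i: name for i, name in enumerate(labels)}
label2id = {name: i for i, name in id2label.items()}

print(id2label)
print(label2id["gloves"])
```

Mappings like these are what fine-tuning scripts typically pass as `id2label=` and `label2id=` to `from_pretrained` when a pretrained detection checkpoint is given a new set of classes.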

|

[

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

ddn0116/detr-resnet-50_finetuned_cppe5

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP
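For reference, a minimal scalar sketch of the textbook Adam update with the betas and epsilon listed above. Weight decay, parameter groups, mixed precision and the learning-rate schedule are left out, and the parameter and gradient values are hypothetical:

```python
import math


def adam_step(param, grad, m, v, t, lr=1e-5, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a scalar parameter; returns (param, m, v)."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction (t starts at 1)
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


# One step from hypothetical values: param=1.0, grad=0.5, zero-initialised moments.
p, m, v = adam_step(1.0, 0.5, 0.0, 0.0, t=1)
print(p, m, v)
```

On the first step, bias correction makes `m_hat` equal the gradient and `v_hat` its square, so the update is close to `lr` in magnitude regardless of the gradient's scale.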

### Training results

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.3.0+cu118

- Datasets 2.19.1

- Tokenizers 0.19.1

|

[

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

chinh102/chinh102

|

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and BibTeX information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

[

"label_0",

"label_1",

"label_2",

"label_3",

"label_4",

"label_5",

"label_6",

"label_7",

"label_8",

"label_9"

] |

Sa3ed99/detr_finetuned_cppe5

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr_finetuned_cppe5

This model is a fine-tuned version of [microsoft/conditional-detr-resnet-50](https://huggingface.co/microsoft/conditional-detr-resnet-50) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.3407

- Map: 0.2599

- Map 50: 0.5107

- Map 75: 0.2411

- Map Small: 0.1265

- Map Medium: 0.2152

- Map Large: 0.4809

- Mar 1: 0.2669

- Mar 10: 0.4141

- Mar 100: 0.4315

- Mar Small: 0.2471

- Mar Medium: 0.4009

- Mar Large: 0.7004

- Map Coverall: 0.5407

- Mar 100 Coverall: 0.6477

- Map Face Shield: 0.1688

- Mar 100 Face Shield: 0.4532

- Map Gloves: 0.1974

- Mar 100 Gloves: 0.3344

- Map Goggles: 0.1266

- Mar 100 Goggles: 0.3415

- Map Mask: 0.266

- Mar 100 Mask: 0.3804
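The headline Map and Mar 100 figures are consistent with an unweighted mean over the five per-class scores, as in COCO-style evaluation, which averages over categories. A quick check using only the numbers listed above; the mean of the per-class Mar 100 values comes out at about 0.4314 rather than 0.4315, a gap attributable to the per-class values being rounded to four decimals:

```python
# Per-class scores copied from the evaluation results above.
map_per_class = {"coverall": 0.5407, "face_shield": 0.1688, "gloves": 0.1974,
                 "goggles": 0.1266, "mask": 0.266}
mar100_per_class = {"coverall": 0.6477, "face_shield": 0.4532, "gloves": 0.3344,
                    "goggles": 0.3415, "mask": 0.3804}


def macro_average(scores):
    """Unweighted mean over classes."""
    return sum(scores.values()) / len(scores)


print(round(macro_average(map_per_class), 4))     # reported Map: 0.2599
print(round(macro_average(mar100_per_class), 4))  # reported Mar 100: 0.4315
```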

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- num_epochs: 30
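The training results table for this run shows 107 optimizer steps per epoch, so 30 epochs amount to 3,210 steps. A sketch of a half-cosine decay from 5e-05 over that horizon, assuming no warmup (none is listed in the card):

```python
import math

STEPS_PER_EPOCH = 107                         # from the "Step" column of the results table
NUM_EPOCHS = 30
TOTAL_STEPS = STEPS_PER_EPOCH * NUM_EPOCHS    # 3210, the last step in the table


def cosine_lr(step, total_steps=TOTAL_STEPS, base_lr=5e-5, warmup_steps=0):
    """Learning rate after `step` steps: optional linear warmup, then half-cosine decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


for epoch in (0, 10, 15, 20, 30):
    step = epoch * STEPS_PER_EPOCH
    print(epoch, step, f"{cosine_lr(step):.2e}")
```

Compared with a linear schedule, the cosine shape keeps the learning rate near its peak for longer early on and flattens out near zero at the end.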

### Training results

| Training Loss | Epoch | Step | Validation Loss | Map | Map 50 | Map 75 | Map Small | Map Medium | Map Large | Mar 1 | Mar 10 | Mar 100 | Mar Small | Mar Medium | Mar Large | Map Coverall | Mar 100 Coverall | Map Face Shield | Mar 100 Face Shield | Map Gloves | Mar 100 Gloves | Map Goggles | Mar 100 Goggles | Map Mask | Mar 100 Mask |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|:----------:|:---------:|:------:|:------:|:-------:|:---------:|:----------:|:---------:|:------------:|:----------------:|:---------------:|:-------------------:|:----------:|:--------------:|:-----------:|:---------------:|:--------:|:------------:|

| No log | 1.0 | 107 | 2.4182 | 0.0507 | 0.104 | 0.0439 | 0.0022 | 0.0243 | 0.0555 | 0.0607 | 0.1378 | 0.1804 | 0.028 | 0.1513 | 0.2649 | 0.2397 | 0.5194 | 0.0003 | 0.0494 | 0.0029 | 0.0969 | 0.0 | 0.0 | 0.0105 | 0.2364 |

| No log | 2.0 | 214 | 2.1888 | 0.0484 | 0.0991 | 0.0426 | 0.0128 | 0.0264 | 0.0477 | 0.0773 | 0.1617 | 0.2023 | 0.0433 | 0.1502 | 0.2651 | 0.1982 | 0.5892 | 0.0001 | 0.0101 | 0.0168 | 0.1625 | 0.0015 | 0.0108 | 0.0255 | 0.2387 |

| No log | 3.0 | 321 | 2.0106 | 0.0827 | 0.1666 | 0.0735 | 0.0148 | 0.0543 | 0.1059 | 0.109 | 0.2402 | 0.2787 | 0.0696 | 0.2816 | 0.3968 | 0.304 | 0.6144 | 0.0053 | 0.1671 | 0.0206 | 0.2455 | 0.0118 | 0.0385 | 0.072 | 0.328 |

| No log | 4.0 | 428 | 1.9302 | 0.107 | 0.2298 | 0.0892 | 0.0258 | 0.0669 | 0.1511 | 0.1338 | 0.2939 | 0.3207 | 0.1213 | 0.2985 | 0.4868 | 0.3695 | 0.5797 | 0.016 | 0.2911 | 0.0302 | 0.2464 | 0.0128 | 0.16 | 0.1066 | 0.3262 |

| 3.6586 | 5.0 | 535 | 1.8116 | 0.1183 | 0.2773 | 0.0879 | 0.0292 | 0.0782 | 0.1819 | 0.1467 | 0.3143 | 0.3378 | 0.142 | 0.3138 | 0.5634 | 0.3744 | 0.5658 | 0.052 | 0.3494 | 0.0532 | 0.2652 | 0.007 | 0.1769 | 0.1049 | 0.332 |

| 3.6586 | 6.0 | 642 | 1.7759 | 0.1213 | 0.2878 | 0.0867 | 0.019 | 0.0851 | 0.2366 | 0.1369 | 0.3103 | 0.3372 | 0.1215 | 0.3085 | 0.6211 | 0.4062 | 0.5694 | 0.0278 | 0.3278 | 0.0582 | 0.2594 | 0.0128 | 0.2338 | 0.1017 | 0.2956 |

| 3.6586 | 7.0 | 749 | 1.6378 | 0.1555 | 0.3462 | 0.1182 | 0.0467 | 0.1064 | 0.2873 | 0.168 | 0.3436 | 0.3788 | 0.1635 | 0.3456 | 0.6683 | 0.4273 | 0.5658 | 0.0479 | 0.3873 | 0.0927 | 0.3022 | 0.0277 | 0.2908 | 0.1819 | 0.348 |

| 3.6586 | 8.0 | 856 | 1.6132 | 0.1654 | 0.376 | 0.1226 | 0.0495 | 0.1358 | 0.366 | 0.1966 | 0.353 | 0.3824 | 0.1421 | 0.3667 | 0.6958 | 0.4324 | 0.5833 | 0.0803 | 0.4152 | 0.1005 | 0.2853 | 0.0373 | 0.2754 | 0.1766 | 0.3529 |

| 3.6586 | 9.0 | 963 | 1.5567 | 0.1815 | 0.3979 | 0.1439 | 0.0529 | 0.1518 | 0.3407 | 0.2063 | 0.3721 | 0.396 | 0.1506 | 0.3879 | 0.6784 | 0.4654 | 0.6149 | 0.0841 | 0.4063 | 0.1158 | 0.3058 | 0.041 | 0.3015 | 0.201 | 0.3516 |

| 1.5229 | 10.0 | 1070 | 1.5420 | 0.194 | 0.4056 | 0.1562 | 0.0523 | 0.1635 | 0.3805 | 0.2139 | 0.3706 | 0.3952 | 0.1409 | 0.3849 | 0.7155 | 0.4799 | 0.6338 | 0.1029 | 0.4114 | 0.127 | 0.3089 | 0.0401 | 0.2754 | 0.22 | 0.3467 |

| 1.5229 | 11.0 | 1177 | 1.4853 | 0.2006 | 0.4273 | 0.1683 | 0.0753 | 0.1676 | 0.3949 | 0.2214 | 0.3753 | 0.4054 | 0.18 | 0.3976 | 0.6702 | 0.4916 | 0.6167 | 0.1162 | 0.4241 | 0.1199 | 0.3138 | 0.0464 | 0.3185 | 0.229 | 0.3542 |

| 1.5229 | 12.0 | 1284 | 1.4646 | 0.2054 | 0.4336 | 0.1626 | 0.0809 | 0.1636 | 0.4116 | 0.2244 | 0.3933 | 0.4162 | 0.196 | 0.4011 | 0.688 | 0.4921 | 0.6333 | 0.098 | 0.4291 | 0.152 | 0.2991 | 0.0556 | 0.3692 | 0.2293 | 0.3502 |

| 1.5229 | 13.0 | 1391 | 1.4438 | 0.2113 | 0.4421 | 0.176 | 0.0722 | 0.1721 | 0.4278 | 0.2333 | 0.3903 | 0.4108 | 0.1905 | 0.4006 | 0.6807 | 0.5002 | 0.6315 | 0.1082 | 0.4342 | 0.1602 | 0.3085 | 0.0488 | 0.3169 | 0.2391 | 0.3627 |

| 1.5229 | 14.0 | 1498 | 1.4194 | 0.2241 | 0.4597 | 0.1846 | 0.0857 | 0.1878 | 0.4516 | 0.2418 | 0.3973 | 0.4209 | 0.1983 | 0.4126 | 0.7007 | 0.5049 | 0.6104 | 0.1265 | 0.4291 | 0.1644 | 0.3299 | 0.0686 | 0.3569 | 0.2564 | 0.3782 |

| 1.2614 | 15.0 | 1605 | 1.4168 | 0.2194 | 0.4409 | 0.191 | 0.0921 | 0.172 | 0.443 | 0.2416 | 0.3979 | 0.4213 | 0.2283 | 0.39 | 0.6824 | 0.5237 | 0.6441 | 0.1208 | 0.4557 | 0.1581 | 0.3129 | 0.0595 | 0.3246 | 0.235 | 0.3689 |

| 1.2614 | 16.0 | 1712 | 1.3935 | 0.226 | 0.4735 | 0.187 | 0.0995 | 0.1831 | 0.4229 | 0.237 | 0.4015 | 0.4238 | 0.2175 | 0.4082 | 0.6808 | 0.5125 | 0.6288 | 0.1292 | 0.4734 | 0.1735 | 0.3263 | 0.0566 | 0.32 | 0.2584 | 0.3702 |

| 1.2614 | 17.0 | 1819 | 1.3928 | 0.2295 | 0.4823 | 0.1949 | 0.0841 | 0.1911 | 0.441 | 0.2507 | 0.3996 | 0.4201 | 0.2206 | 0.3903 | 0.7086 | 0.5135 | 0.632 | 0.1465 | 0.4557 | 0.1652 | 0.3246 | 0.0767 | 0.3169 | 0.2456 | 0.3716 |

| 1.2614 | 18.0 | 1926 | 1.3886 | 0.2302 | 0.4745 | 0.1908 | 0.0836 | 0.1922 | 0.4742 | 0.2562 | 0.404 | 0.4203 | 0.199 | 0.3884 | 0.7143 | 0.5158 | 0.6347 | 0.1484 | 0.4582 | 0.1736 | 0.3192 | 0.064 | 0.3215 | 0.2491 | 0.368 |

| 1.104 | 19.0 | 2033 | 1.3812 | 0.2343 | 0.4775 | 0.201 | 0.0954 | 0.1982 | 0.4586 | 0.248 | 0.3985 | 0.4221 | 0.2093 | 0.4013 | 0.7229 | 0.5257 | 0.641 | 0.1555 | 0.462 | 0.1778 | 0.3308 | 0.0791 | 0.32 | 0.2336 | 0.3569 |

| 1.104 | 20.0 | 2140 | 1.3595 | 0.2488 | 0.4941 | 0.2209 | 0.0973 | 0.2065 | 0.4771 | 0.2677 | 0.4188 | 0.4369 | 0.2404 | 0.4026 | 0.7248 | 0.5337 | 0.6441 | 0.1672 | 0.4709 | 0.1832 | 0.3335 | 0.094 | 0.3523 | 0.2658 | 0.3836 |

| 1.104 | 21.0 | 2247 | 1.3556 | 0.2397 | 0.4789 | 0.2046 | 0.0941 | 0.1986 | 0.4552 | 0.2683 | 0.4094 | 0.4298 | 0.2244 | 0.4045 | 0.7063 | 0.5311 | 0.6396 | 0.1483 | 0.4506 | 0.1868 | 0.3304 | 0.0785 | 0.3508 | 0.2537 | 0.3778 |

| 1.104 | 22.0 | 2354 | 1.3572 | 0.2509 | 0.4949 | 0.2242 | 0.1067 | 0.2086 | 0.4512 | 0.2672 | 0.4119 | 0.4308 | 0.2403 | 0.397 | 0.7136 | 0.5405 | 0.6432 | 0.1641 | 0.4595 | 0.176 | 0.3237 | 0.1085 | 0.3431 | 0.2653 | 0.3844 |

| 1.104 | 23.0 | 2461 | 1.3551 | 0.2503 | 0.4951 | 0.2266 | 0.1053 | 0.2057 | 0.476 | 0.2674 | 0.4117 | 0.4297 | 0.2319 | 0.4058 | 0.7042 | 0.5403 | 0.6464 | 0.1522 | 0.4367 | 0.1828 | 0.3299 | 0.1129 | 0.3508 | 0.2633 | 0.3849 |

| 1.0066 | 24.0 | 2568 | 1.3404 | 0.2539 | 0.5049 | 0.2235 | 0.101 | 0.2081 | 0.4745 | 0.2674 | 0.412 | 0.4301 | 0.231 | 0.4038 | 0.692 | 0.5437 | 0.6559 | 0.1537 | 0.4367 | 0.1903 | 0.3348 | 0.1218 | 0.3415 | 0.2601 | 0.3813 |

| 1.0066 | 25.0 | 2675 | 1.3436 | 0.2574 | 0.5062 | 0.2286 | 0.1124 | 0.2119 | 0.4848 | 0.2667 | 0.4101 | 0.4273 | 0.2264 | 0.4014 | 0.6942 | 0.5416 | 0.6477 | 0.1512 | 0.4329 | 0.193 | 0.3366 | 0.131 | 0.3369 | 0.2702 | 0.3822 |

| 1.0066 | 26.0 | 2782 | 1.3377 | 0.258 | 0.5047 | 0.2211 | 0.1254 | 0.2126 | 0.4825 | 0.27 | 0.4168 | 0.4348 | 0.2491 | 0.4062 | 0.7013 | 0.5431 | 0.6518 | 0.1604 | 0.462 | 0.1935 | 0.3397 | 0.1259 | 0.34 | 0.2669 | 0.3804 |

| 1.0066 | 27.0 | 2889 | 1.3393 | 0.2615 | 0.5108 | 0.2388 | 0.1277 | 0.2188 | 0.4796 | 0.2711 | 0.4167 | 0.4347 | 0.2509 | 0.408 | 0.6993 | 0.5427 | 0.6491 | 0.1685 | 0.4608 | 0.1949 | 0.3348 | 0.1315 | 0.3462 | 0.2699 | 0.3827 |

| 1.0066 | 28.0 | 2996 | 1.3399 | 0.2599 | 0.5102 | 0.2352 | 0.1259 | 0.2166 | 0.4843 | 0.2674 | 0.415 | 0.4326 | 0.2482 | 0.4012 | 0.7042 | 0.5419 | 0.65 | 0.1678 | 0.4544 | 0.1945 | 0.3357 | 0.1253 | 0.3385 | 0.2698 | 0.3844 |

| 0.95 | 29.0 | 3103 | 1.3412 | 0.2594 | 0.5122 | 0.2387 | 0.1259 | 0.2159 | 0.4808 | 0.2702 | 0.4143 | 0.4303 | 0.2452 | 0.3983 | 0.7016 | 0.5393 | 0.6468 | 0.1689 | 0.4532 | 0.197 | 0.3335 | 0.1252 | 0.3369 | 0.2667 | 0.3809 |

| 0.95 | 30.0 | 3210 | 1.3407 | 0.2599 | 0.5107 | 0.2411 | 0.1265 | 0.2152 | 0.4809 | 0.2669 | 0.4141 | 0.4315 | 0.2471 | 0.4009 | 0.7004 | 0.5407 | 0.6477 | 0.1688 | 0.4532 | 0.1974 | 0.3344 | 0.1266 | 0.3415 | 0.266 | 0.3804 |
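The Training Loss column reads "No log" for epochs 1 to 4 and then changes only at epochs 5, 10, 15, 19, 24 and 29. That pattern is consistent with the training loss being recorded every 500 optimizer steps (the Trainer's default `logging_steps`, assumed here because the card does not list it), with each evaluation row showing the most recent logged value. A quick check against the step counts in the table:

```python
LOGGING_STEPS = 500      # assumption: Trainer's default logging_steps; not listed in the card
STEPS_PER_EPOCH = 107    # from the "Step" column of the table
TOTAL_STEPS = 3210


def epoch_of_step(step, steps_per_epoch=STEPS_PER_EPOCH):
    """1-based epoch in which a given global optimizer step falls."""
    return (step - 1) // steps_per_epoch + 1


log_steps = list(range(LOGGING_STEPS, TOTAL_STEPS + 1, LOGGING_STEPS))
log_epochs = [epoch_of_step(s) for s in log_steps]
print(log_steps)   # steps at which a training loss would be recorded
print(log_epochs)  # epochs whose rows would first show each new Training Loss value
```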

### Framework versions

- Transformers 4.40.2

- Pytorch 2.2.1+cu121

- Datasets 2.19.1

- Tokenizers 0.19.1

|

[

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

chinh102/chinh1002

|

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and BibTeX information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

[

"label_0",

"label_1",

"label_2",

"label_3",

"label_4",

"label_5",

"label_6",

"label_7",

"label_8",

"label_9"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_109s

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_109s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1500

- mixed_precision_training: Native AMP

### Training results

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_166s

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_166s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1500

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v2_s1_166s

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tblstructrecog_finetuned_tbltransstrucrecog_v2_s1_166s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1500

- mixed_precision_training: Native AMP
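Of the listed hyperparameters, this run differs from the v1_s1_166s run only in `train_batch_size` (2 instead of 8). With the same number of training images on a single device and no gradient accumulation (none is listed), each epoch then takes about four times as many optimizer steps. A sketch with a hypothetical training-set size of 100 images, since the card does not report it:

```python
import math

NUM_EPOCHS = 1500
NUM_TRAIN_IMAGES = 100  # hypothetical; the card does not give the dataset size


def steps_per_epoch(num_examples, batch_size):
    """Optimizer steps per epoch on one device, keeping the last partial batch."""
    return math.ceil(num_examples / batch_size)


for batch_size in (8, 2):
    per_epoch = steps_per_epoch(NUM_TRAIN_IMAGES, batch_size)
    print(batch_size, per_epoch, per_epoch * NUM_EPOCHS)
```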

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

rathi2023/detr-resnet-50_finetuned_swny

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_swny

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 510

### Training results

### Framework versions

- Transformers 4.41.1

- Pytorch 2.3.0+cu121

- Datasets 2.19.1

- Tokenizers 0.19.1
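The model_labels entry for this model (listed after this card) repeats several class names; for instance "106", "107" and "2" each appear twice among its first twelve entries. If such a list is used as `id2label`, distinct class ids share a name and a reverse `label2id` lookup becomes ambiguous. A small check, shown on those first twelve entries:

```python
from collections import Counter


def duplicate_labels(labels):
    """Return label names that occur more than once, in first-seen order."""
    counts = Counter(labels)
    return [name for name in counts if counts[name] > 1]


# First twelve entries of this model's model_labels list.
sample = ["50", "106", "107", "2", "15", "12", "97", "98", "99", "106", "107", "2"]
print(duplicate_labels(sample))
```

Running the same check on the full list before building a config helps catch the problem early.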

|

[

"50",

"106",

"107",

"2",

"15",

"12",

"97",

"98",

"99",

"106",

"107",

"2",

"84",

"8",

"32",

"33",

"47",

"48",

"61",

"62",

"83",

"57",

"58",

"18",

"83",

"36",

"37",

"38",

"87",

"74",

"63",

"63",

"53",

"59",

"60",

"72",

"35",

"34",

"7",

"98",

"99",

"81",

"80",

"78",

"44",

"45",

"46",

"39",

"40",

"26",

"27",

"87",

"89",

"11",

"13",

"28",

"29",

"51",

"54",

"55",

"56",

"3",

"8",

"74",

"21",

"95",

"96",

"22",

"20",

"19",

"41",

"42",

"10",

"26",

"101",

"100",

"102",

"92",

"93",

"70",

"71",

"70",

"51",

"61",

"62",

"67",

"52",

"49",

"7",

"9",

"74",

"75",

"17",

"14",

"16",

"32",

"14",

"82",

"31",

"69",

"4",

"28",

"30",

"85",

"86",

"20",

"19",

"10",

"53",

"101",

"100",

"102",

"23",

"24",

"90",

"91",

"103",

"104",

"105",

"66",

"1",

"94",

"92",

"57",

"58",

"35",

"34",

"42",

"43",

"15",

"12",

"63",

"6",

"28",

"29",

"72",

"73",

"81",

"80",

"78",

"79",

"67",

"68",

"87",

"88",

"20",

"19",

"97",

"98",

"99",

"53",

"63",

"64",

"25",

"23",

"24",

"92",

"76",

"77"

] |

Sneha-Mahata/Blood-Cell-Detection-DETR

|

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed to the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

[

"label_0",

"label_1",

"label_2"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v1_suba_s1_106s

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tblstructrecog_finetuned_tbltransstrucrecog_v1_suba_s1_106s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1500

- mixed_precision_training: Native AMP
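The `lr_scheduler_type: linear` entry means the learning rate decays linearly from its initial value (here 1e-05) toward zero over the course of training. The sketch below is plain Python and assumes zero warmup steps, which is the Trainer default when no warmup is configured. It also uses a hypothetical total step count, because the card does not state the dataset size. It shows the shape of the schedule, not the library's own code.

```python
def linear_lr(step: int, total_steps: int, base_lr: float = 1e-5, warmup_steps: int = 0) -> float:
    """Learning rate at `step` for a linear warmup-then-decay schedule (sketch)."""
    if step < warmup_steps:
        # Warmup phase: ramp linearly from 0 up to base_lr.
        return base_lr * (step / max(1, warmup_steps))
    # Decay phase: fall linearly from base_lr at the end of warmup to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * (remaining / max(1, total_steps - warmup_steps))

# Hypothetical run: 1500 epochs x 10 optimizer steps per epoch.
total = 1500 * 10
for step in (0, total // 2, total):
    print(step, linear_lr(step, total))
```

With 1500 epochs, the rate decays very slowly per epoch. Halfway through training it is still half the initial value.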

### Training results

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_224s

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_224s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1500

- mixed_precision_training: Native AMP
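The optimizer line refers to the Adam update rule with β1 = 0.9, β2 = 0.999 and ε = 1e-08. The Hugging Face Trainer's default optimizer is AdamW, and with its default weight decay of 0 it performs the same update. The sketch below applies one bias-corrected step to a single scalar parameter in plain Python. It is an illustration of the rule, not the PyTorch implementation.

```python
import math

def adam_step(param, grad, m, v, t, lr=1e-5, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (running mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment (uncentered variance) estimate
    m_hat = m / (1 - beta1 ** t)                # bias corrections for the zero initialization
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

p, m, v = adam_step(1.0, grad=0.5, m=0.0, v=0.0, t=1)
print(p)
```

On the first step, m_hat / sqrt(v_hat) is about ±1 whatever the gradient's magnitude, so the parameter moves by roughly the learning rate (1e-05).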

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v2_s1_224s

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# tblstructrecog_finetuned_tbltransstrucrecog_v2_s1_224s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1500

- mixed_precision_training: Native AMP
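This run uses `train_batch_size: 2`, so each epoch takes about four times as many optimizer steps as the sibling runs with batch size 8. The arithmetic is sketched below. It assumes a single device and no gradient accumulation, and it uses a hypothetical training-set size, because the card does not give one.

```python
import math

def total_optimizer_steps(num_examples, batch_size, num_epochs):
    # The last, partial batch of an epoch still counts as a step, hence ceil.
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * num_epochs

num_examples = 100  # hypothetical; the dataset size is not stated in the card
print(total_optimizer_steps(num_examples, batch_size=2, num_epochs=1500))  # 50 * 1500 = 75000
print(total_optimizer_steps(num_examples, batch_size=8, num_epochs=1500))  # 13 * 1500 = 19500
```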

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

dopamineaddict/detr-resnet-50_finetuned_cppe5

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# detr-resnet-50_finetuned_cppe5

This model is a fine-tuned version of [facebook/detr-resnet-50](https://huggingface.co/facebook/detr-resnet-50) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP
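The checkpoint name and its five recorded labels (coverall, face_shield, gloves, goggles, mask) appear to correspond to the CPPE-5 personal-protective-equipment dataset. Fine-tuning DETR on such a label set replaces COCO's classification head with a smaller one. The sketch below builds the usual label mappings in plain Python. It assumes the label list order is the class-id order. The commented call shows how such mappings are typically passed to `from_pretrained` and is not a record of how this checkpoint was actually trained.

```python
labels = ["coverall", "face_shield", "gloves", "goggles", "mask"]

# Map class index -> name and name -> class index.
id2label = {i: name for i, name in enumerate(labels)}
label2id = {name: i for i, name in id2label.items()}

print(id2label)
print(label2id["gloves"])  # 2

# With transformers installed, these would typically be passed as:
# DetrForObjectDetection.from_pretrained(
#     "facebook/detr-resnet-50",
#     id2label=id2label,
#     label2id=label2id,
#     ignore_mismatched_sizes=True,  # the COCO classification head has a different size
# )
```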

### Training results

### Framework versions

- Transformers 4.40.2

- Pytorch 2.2.1+cu121

- Datasets 2.19.1

- Tokenizers 0.19.1

|

[

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

PekingU/rtdetr_r18vd_coco_o365

|

# Model Card for RT-DETR

## Table of Contents

1. [Model Details](#model-details)

2. [Model Sources](#model-sources)

3. [How to Get Started with the Model](#how-to-get-started-with-the-model)

4. [Training Details](#training-details)

5. [Evaluation](#evaluation)

6. [Model Architecture and Objective](#model-architecture-and-objective)

7. [Citation](#citation)

## Model Details

> The YOLO series has become the most popular framework for real-time object detection due to its reasonable trade-off between speed and accuracy.

However, we observe that the speed and accuracy of YOLOs are negatively affected by the NMS.

Recently, end-to-end Transformer-based detectors (DETRs) have provided an alternative to eliminating NMS.

Nevertheless, the high computational cost limits their practicality and hinders them from fully exploiting the advantage of excluding NMS.

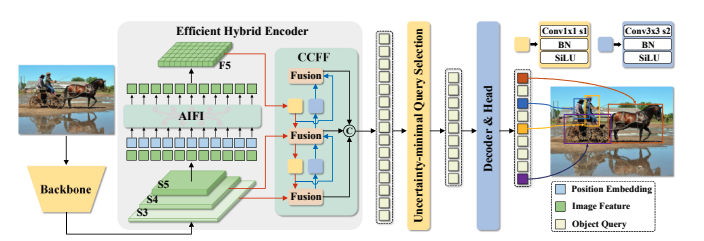

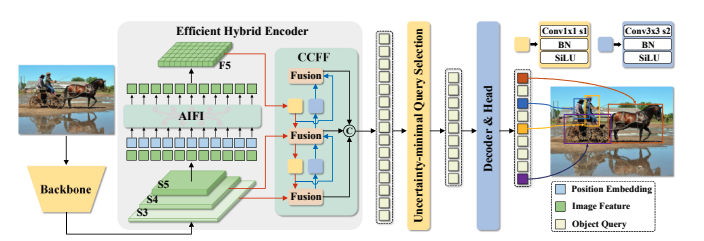

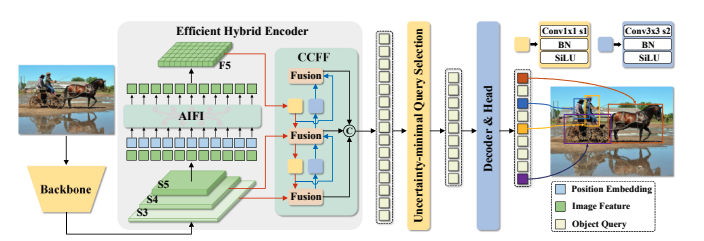

In this paper, we propose the Real-Time DEtection TRansformer (RT-DETR), the first real-time end-to-end object detector to our best knowledge that addresses the above dilemma.

We build RT-DETR in two steps, drawing on the advanced DETR:

first we focus on maintaining accuracy while improving speed, followed by maintaining speed while improving accuracy.

Specifically, we design an efficient hybrid encoder to expeditiously process multi-scale features by decoupling intra-scale interaction and cross-scale fusion to improve speed.

Then, we propose the uncertainty-minimal query selection to provide high-quality initial queries to the decoder, thereby improving accuracy.

In addition, RT-DETR supports flexible speed tuning by adjusting the number of decoder layers to adapt to various scenarios without retraining.

Our RT-DETR-R50 / R101 achieves 53.1% / 54.3% AP on COCO and 108 / 74 FPS on T4 GPU, outperforming previously advanced YOLOs in both speed and accuracy.

We also develop scaled RT-DETRs that outperform the lighter YOLO detectors (S and M models).

Furthermore, RT-DETR-R50 outperforms DINO-R50 by 2.2% AP in accuracy and about 21 times in FPS.

After pre-training with Objects365, RT-DETR-R50 / R101 achieves 55.3% / 56.2% AP. The project page: this [https URL](https://zhao-yian.github.io/RTDETR/).

This is the model card of a 🤗 [transformers](https://huggingface.co/docs/transformers/index) model that has been pushed to the Hub.

- **Developed by:** Yian Zhao and Sangbum Choi

- **Funded by:** National Key R&D Program of China (No. 2022ZD0118201), Natural Science Foundation of China (No. 61972217, 32071459, 62176249, 62006133, 62271465), and the Shenzhen Medical Research Funds in China (No. B2302037).

- **Shared by:** Sangbum Choi

- **Model type:** [RT-DETR](https://huggingface.co/docs/transformers/main/en/model_doc/rt_detr)

- **License:** Apache-2.0

### Model Sources

<!-- Provide the basic links for the model. -->

- **HF Docs:** [RT-DETR](https://huggingface.co/docs/transformers/main/en/model_doc/rt_detr)

- **Repository:** https://github.com/lyuwenyu/RT-DETR

- **Paper:** https://arxiv.org/abs/2304.08069

- **Demo:** [RT-DETR Tracking](https://huggingface.co/spaces/merve/RT-DETR-tracking-coco)

## How to Get Started with the Model

Use the code below to get started with the model.

```python
import torch
import requests
from PIL import Image
from transformers import RTDetrForObjectDetection, RTDetrImageProcessor

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

image_processor = RTDetrImageProcessor.from_pretrained("PekingU/rtdetr_r18vd_coco_o365")
model = RTDetrForObjectDetection.from_pretrained("PekingU/rtdetr_r18vd_coco_o365")

inputs = image_processor(images=image, return_tensors="pt")

with torch.no_grad():
    outputs = model(**inputs)

# target_sizes expects (height, width); PIL's image.size is (width, height), hence [::-1]
results = image_processor.post_process_object_detection(outputs, target_sizes=torch.tensor([image.size[::-1]]), threshold=0.3)

for result in results:
    for score, label_id, box in zip(result["scores"], result["labels"], result["boxes"]):
        score, label = score.item(), label_id.item()
        box = [round(i, 2) for i in box.tolist()]
        print(f"{model.config.id2label[label]}: {score:.2f} {box}")
```

This should output:

```
sofa: 0.97 [0.14, 0.38, 640.13, 476.21]
cat: 0.96 [343.38, 24.28, 640.14, 371.5]
cat: 0.96 [13.23, 54.18, 318.98, 472.22]
remote: 0.95 [40.11, 73.44, 175.96, 118.48]
remote: 0.92 [333.73, 76.58, 369.97, 186.99]
```
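Each printed box is `[x_min, y_min, x_max, y_max]` in absolute pixel coordinates of the original image, because `target_sizes` was set to the image's (height, width). The sketch below converts such corner boxes into width, height and area in plain Python, using two of the boxes printed above. The helper name `box_stats` is illustrative only.

```python
def box_stats(box):
    """Width, height and area of an [x_min, y_min, x_max, y_max] box."""
    x_min, y_min, x_max, y_max = box
    width, height = x_max - x_min, y_max - y_min
    return width, height, width * height

detections = {
    "cat (right)": [343.38, 24.28, 640.14, 371.5],
    "remote (left)": [40.11, 73.44, 175.96, 118.48],
}
for name, box in detections.items():
    w, h, area = box_stats(box)
    print(f"{name}: {w:.2f} x {h:.2f} px, area {area:.0f} px^2")
```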

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

The RT-DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset of 118k training and 5k validation annotated images.

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

We conduct experiments on the COCO and Objects365 datasets: RT-DETR is trained on COCO train2017 and validated on COCO val2017. We report the standard COCO metrics, including AP (averaged over uniformly sampled IoU thresholds from 0.50 to 0.95 with a step size of 0.05), AP50, AP75, and AP at different object scales: APS, APM, and APL.
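At a given IoU threshold, COCO AP counts a detection as a true positive when its intersection-over-union (IoU) with a ground-truth box is at least that threshold. AP is then averaged over the ten thresholds 0.50, 0.55, ..., 0.95. The plain-Python sketch below covers only the IoU computation and the threshold grid. A full AP computation also needs score ranking, one-to-one matching and precision-recall integration, as performed by evaluation tools such as `pycocotools`.

```python
def iou(a, b):
    """IoU of two [x_min, y_min, x_max, y_max] boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# The ten COCO IoU thresholds 0.50, 0.55, ..., 0.95 (rounded to avoid float drift).
thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]

pred, gt = [0, 0, 10, 10], [2, 0, 12, 10]
score = iou(pred, gt)  # overlap 8 x 10 = 80, union 120 -> about 0.667
matched = [t for t in thresholds if score >= t]
print(f"IoU={score:.3f}, true positive at {len(matched)}/10 thresholds")
```

A box that overlaps well enough for AP50 can still miss at the stricter thresholds, which is why AP (0.50 to 0.95) is usually well below AP50 in the table.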

### Preprocessing

Images are resized to 640x640 pixels and rescaled with `image_mean=[0.485, 0.456, 0.406]` and `image_std=[0.229, 0.224, 0.225]`.

### Training Hyperparameters

- **Training regime:** <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

## Evaluation

| Model | #Epochs | #Params (M) | GFLOPs | FPS (bs=1) | AP (val) | AP50 (val) | AP75 (val) | AP-s (val) | AP-m (val) | AP-l (val) |
|----------------------------|---------|-------------|--------|----------|--------|-----------|-----------|----------|----------|----------|
| RT-DETR-R18 | 72 | 20 | 60.7 | 217 | 46.5 | 63.8 | 50.4 | 28.4 | 49.8 | 63.0 |
| RT-DETR-R34 | 72 | 31 | 91.0 | 172 | 48.5 | 66.2 | 52.3 | 30.2 | 51.9 | 66.2 |
| RT-DETR-R50 | 72 | 42 | 136 | 108 | 53.1 | 71.3 | 57.7 | 34.8 | 58.0 | 70.0 |
| RT-DETR-R101 | 72 | 76 | 259 | 74 | 54.3 | 72.7 | 58.6 | 36.0 | 58.8 | 72.1 |
| RT-DETR-R18 (Objects365 pretrained) | 60 | 20 | 61 | 217 | 49.2 | 66.6 | 53.5 | 33.2 | 52.3 | 64.8 |
| RT-DETR-R50 (Objects365 pretrained) | 24 | 42 | 136 | 108 | 55.3 | 73.4 | 60.1 | 37.9 | 59.9 | 71.8 |
| RT-DETR-R101 (Objects365 pretrained) | 24 | 76 | 259 | 74 | 56.2 | 74.6 | 61.3 | 38.3 | 60.5 | 73.5 |
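Two quantities follow from the table with simple arithmetic. The first is per-image latency at batch size 1, computed as 1000 / FPS in milliseconds. The second is the AP gain from Objects365 pre-training, for the backbones that appear in both settings. The sketch below is plain Python and uses values copied from the table. Note that the pre-trained rows also use different epoch counts.

```python
# (FPS at batch size 1, COCO val AP) copied from the table.
COCO_ONLY = {"R18": (217, 46.5), "R50": (108, 53.1), "R101": (74, 54.3)}
O365_PRETRAINED = {"R18": (217, 49.2), "R50": (108, 55.3), "R101": (74, 56.2)}

def latency_ms(fps):
    """Average time per image in milliseconds at the given frames per second."""
    return 1000.0 / fps

def o365_gain(backbone):
    """AP points gained with Objects365 pre-training for a backbone listed in both settings."""
    return O365_PRETRAINED[backbone][1] - COCO_ONLY[backbone][1]

for backbone, (fps, ap) in COCO_ONLY.items():
    print(f"{backbone}: {latency_ms(fps):.1f} ms/image, +{o365_gain(backbone):.1f} AP with Objects365")
```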

### Model Architecture and Objective

Overview of RT-DETR: we feed the features from the last three stages of the backbone into the encoder. The efficient hybrid encoder transforms multi-scale features into a sequence of image features through the Attention-based Intra-scale Feature Interaction (AIFI) and the CNN-based Cross-scale Feature Fusion (CCFF). Then, the uncertainty-minimal query selection selects a fixed number of encoder features to serve as initial object queries for the decoder. Finally, the decoder with auxiliary prediction heads iteratively optimizes object queries to generate categories and boxes.
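To make the multi-scale input concrete, consider a 640x640 image. The last three stages of a ResNet backbone have strides 8, 16 and 32, which gives 80x80, 40x40 and 20x20 feature maps. The sketch below counts the resulting tokens in plain Python, assuming those standard strides. According to the RT-DETR paper, AIFI performs self-attention only on the highest-level (stride-32) map. The last line compares the size of its attention matrix with that of hypothetical full self-attention over all tokens.

```python
def feature_tokens(image_size=640, strides=(8, 16, 32)):
    """Number of spatial positions (tokens) per feature level for a square input."""
    return {s: (image_size // s) ** 2 for s in strides}

tokens = feature_tokens()
print(tokens)                 # {8: 6400, 16: 1600, 32: 400}
print(sum(tokens.values()))   # 8400 tokens when all three levels are flattened

# Self-attention cost grows with the square of sequence length, so attending over
# the 400 stride-32 tokens instead of all 8400 shrinks the attention matrix by this factor:
print((sum(tokens.values()) / tokens[32]) ** 2)
```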

## Citation

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

```bibtex
@misc{lv2023detrs,
  title={DETRs Beat YOLOs on Real-time Object Detection},
  author={Yian Zhao and Wenyu Lv and Shangliang Xu and Jinman Wei and Guanzhong Wang and Qingqing Dang and Yi Liu and Jie Chen},
  year={2023},
  eprint={2304.08069},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

## Model Card Authors

[Sangbum Choi](https://huggingface.co/danelcsb)

[Pavel Iakubovskii](https://huggingface.co/qubvel-hf)

|

[

"person",

"bicycle",

"car",

"motorbike",

"aeroplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"backpack",

"umbrella",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"sofa",

"pottedplant",

"bed",

"diningtable",

"toilet",

"tvmonitor",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

PekingU/rtdetr_r50vd_coco_o365

|

# Model Card for RT-DETR

## Table of Contents

1. [Model Details](#model-details)

2. [Model Sources](#model-sources)

3. [How to Get Started with the Model](#how-to-get-started-with-the-model)

4. [Training Details](#training-details)

5. [Evaluation](#evaluation)

6. [Model Architecture and Objective](#model-architecture-and-objective)

7. [Citation](#citation)

## Model Details

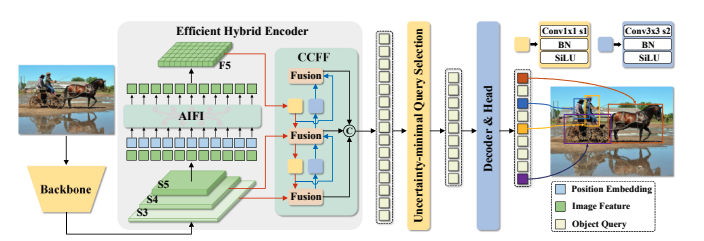

> The YOLO series has become the most popular framework for real-time object detection due to its reasonable trade-off between speed and accuracy. However, we observe that the speed and accuracy of YOLOs are negatively affected by the NMS. Recently, end-to-end Transformer-based detectors (DETRs) have provided an alternative to eliminating NMS. Nevertheless, the high computational cost limits their practicality and hinders them from fully exploiting the advantage of excluding NMS.
>
> In this paper, we propose the Real-Time DEtection TRansformer (RT-DETR), the first real-time end-to-end object detector to our best knowledge that addresses the above dilemma. We build RT-DETR in two steps, drawing on the advanced DETR: first we focus on maintaining accuracy while improving speed, followed by maintaining speed while improving accuracy. Specifically, we design an efficient hybrid encoder to expeditiously process multi-scale features by decoupling intra-scale interaction and cross-scale fusion to improve speed. Then, we propose the uncertainty-minimal query selection to provide high-quality initial queries to the decoder, thereby improving accuracy. In addition, RT-DETR supports flexible speed tuning by adjusting the number of decoder layers to adapt to various scenarios without retraining.
>
> Our RT-DETR-R50 / R101 achieves 53.1% / 54.3% AP on COCO and 108 / 74 FPS on T4 GPU, outperforming previously advanced YOLOs in both speed and accuracy. We also develop scaled RT-DETRs that outperform the lighter YOLO detectors (S and M models). Furthermore, RT-DETR-R50 outperforms DINO-R50 by 2.2% AP in accuracy and about 21 times in FPS. After pre-training with Objects365, RT-DETR-R50 / R101 achieves 55.3% / 56.2% AP. Project page: [https://zhao-yian.github.io/RTDETR/](https://zhao-yian.github.io/RTDETR/).

This is the model card of a 🤗 [transformers](https://huggingface.co/docs/transformers/index) model that has been pushed to the Hub.

- **Developed by:** Yian Zhao and Sangbum Choi

- **Funded by:** National Key R&D Program of China (No. 2022ZD0118201), Natural Science Foundation of China (No. 61972217, 32071459, 62176249, 62006133, 62271465), and the Shenzhen Medical Research Funds in China (No. B2302037).

- **Shared by:** Sangbum Choi

- **Model type:** [RT-DETR](https://huggingface.co/docs/transformers/main/en/model_doc/rt_detr)

- **License:** Apache-2.0

### Model Sources

<!-- Provide the basic links for the model. -->

- **HF Docs:** [RT-DETR](https://huggingface.co/docs/transformers/main/en/model_doc/rt_detr)

- **Repository:** https://github.com/lyuwenyu/RT-DETR

- **Paper:** https://arxiv.org/abs/2304.08069

- **Demo:** [RT-DETR Tracking](https://huggingface.co/spaces/merve/RT-DETR-tracking-coco)

## How to Get Started with the Model

Use the code below to get started with the model.

```python
import torch
import requests
from PIL import Image
from transformers import RTDetrForObjectDetection, RTDetrImageProcessor

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

image_processor = RTDetrImageProcessor.from_pretrained("PekingU/rtdetr_r50vd_coco_o365")
model = RTDetrForObjectDetection.from_pretrained("PekingU/rtdetr_r50vd_coco_o365")

inputs = image_processor(images=image, return_tensors="pt")

with torch.no_grad():
    outputs = model(**inputs)

# target_sizes expects (height, width); PIL's image.size is (width, height), hence [::-1]
results = image_processor.post_process_object_detection(outputs, target_sizes=torch.tensor([image.size[::-1]]), threshold=0.3)

for result in results:
    for score, label_id, box in zip(result["scores"], result["labels"], result["boxes"]):
        score, label = score.item(), label_id.item()
        box = [round(i, 2) for i in box.tolist()]
        print(f"{model.config.id2label[label]}: {score:.2f} {box}")
```

This should output:

```
sofa: 0.97 [0.14, 0.38, 640.13, 476.21]
cat: 0.96 [343.38, 24.28, 640.14, 371.5]
cat: 0.96 [13.23, 54.18, 318.98, 472.22]
remote: 0.95 [40.11, 73.44, 175.96, 118.48]
remote: 0.92 [333.73, 76.58, 369.97, 186.99]
```

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

The RT-DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset of 118k training and 5k validation annotated images.

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

We conduct experiments on the COCO and Objects365 datasets: RT-DETR is trained on COCO train2017 and validated on COCO val2017. We report the standard COCO metrics, including AP (averaged over uniformly sampled IoU thresholds from 0.50 to 0.95 with a step size of 0.05), AP50, AP75, and AP at different object scales: APS, APM, and APL.

### Preprocessing

Images are resized to 640x640 pixels and rescaled with `image_mean=[0.485, 0.456, 0.406]` and `image_std=[0.229, 0.224, 0.225]`.
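The two parameters define the standard per-channel transform: pixel values are scaled from 0-255 to 0-1, then standardized as (x - mean) / std. The sketch below applies this to one RGB pixel in plain Python. Whether normalization is actually applied at inference depends on the checkpoint's preprocessor configuration (check `image_processor.do_normalize`). Treat the sketch as an illustration of the formula, not of this processor's exact behavior. The helper name `normalize_pixel` is hypothetical.

```python
IMAGE_MEAN = (0.485, 0.456, 0.406)  # per-channel R, G, B means from the card
IMAGE_STD = (0.229, 0.224, 0.225)   # per-channel R, G, B standard deviations from the card

def normalize_pixel(rgb, mean=IMAGE_MEAN, std=IMAGE_STD, rescale_factor=1 / 255):
    """Rescale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return tuple((value * rescale_factor - m) / s for value, m, s in zip(rgb, mean, std))

print(normalize_pixel((255, 255, 255)))  # a white pixel maps to roughly (2.25, 2.43, 2.64)
print(normalize_pixel((124, 116, 104)))  # close to the mean color, so values near 0
```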

### Training Hyperparameters

- **Training regime:** <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

## Evaluation

| Model | #Epochs | #Params (M) | GFLOPs | FPS (bs=1) | AP (val) | AP50 (val) | AP75 (val) | AP-s (val) | AP-m (val) | AP-l (val) |
|----------------------------|---------|-------------|--------|----------|--------|-----------|-----------|----------|----------|----------|
| RT-DETR-R18 | 72 | 20 | 60.7 | 217 | 46.5 | 63.8 | 50.4 | 28.4 | 49.8 | 63.0 |
| RT-DETR-R34 | 72 | 31 | 91.0 | 172 | 48.5 | 66.2 | 52.3 | 30.2 | 51.9 | 66.2 |
| RT-DETR-R50 | 72 | 42 | 136 | 108 | 53.1 | 71.3 | 57.7 | 34.8 | 58.0 | 70.0 |
| RT-DETR-R101 | 72 | 76 | 259 | 74 | 54.3 | 72.7 | 58.6 | 36.0 | 58.8 | 72.1 |
| RT-DETR-R18 (Objects365 pretrained) | 60 | 20 | 61 | 217 | 49.2 | 66.6 | 53.5 | 33.2 | 52.3 | 64.8 |
| RT-DETR-R50 (Objects365 pretrained) | 24 | 42 | 136 | 108 | 55.3 | 73.4 | 60.1 | 37.9 | 59.9 | 71.8 |
| RT-DETR-R101 (Objects365 pretrained) | 24 | 76 | 259 | 74 | 56.2 | 74.6 | 61.3 | 38.3 | 60.5 | 73.5 |

### Model Architecture and Objective

Overview of RT-DETR: we feed the features from the last three stages of the backbone into the encoder. The efficient hybrid encoder transforms multi-scale features into a sequence of image features through the Attention-based Intra-scale Feature Interaction (AIFI) and the CNN-based Cross-scale Feature Fusion (CCFF). Then, the uncertainty-minimal query selection selects a fixed number of encoder features to serve as initial object queries for the decoder. Finally, the decoder with auxiliary prediction heads iteratively optimizes object queries to generate categories and boxes.
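At inference time, query selection amounts to ranking encoder tokens by their best class score and keeping the top K as the decoder's initial queries. The "uncertainty-minimal" part concerns how that score is trained, and this sketch does not model it. The sketch is plain Python with toy scores. K = 300 is assumed here as the usual RT-DETR query count, and the toy input is invented.

```python
import heapq

def select_queries(token_class_scores, k):
    """Return indices of the k tokens whose highest class score is largest."""
    best = [max(scores) for scores in token_class_scores]   # confidence per token
    return heapq.nlargest(k, range(len(best)), key=best.__getitem__)

# Toy example: 6 encoder tokens, 3 classes each; keep k=2
# (RT-DETR would keep on the order of 300 out of thousands of tokens).
scores = [
    [0.1, 0.2, 0.0],
    [0.9, 0.1, 0.3],
    [0.2, 0.2, 0.2],
    [0.0, 0.7, 0.1],
    [0.3, 0.1, 0.4],
    [0.05, 0.0, 0.1],
]
print(select_queries(scores, k=2))  # [1, 3]
```

Selecting a fixed K keeps the decoder's cost constant regardless of how many objects the image contains.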

## Citation

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

```bibtex
@misc{lv2023detrs,
  title={DETRs Beat YOLOs on Real-time Object Detection},
  author={Yian Zhao and Wenyu Lv and Shangliang Xu and Jinman Wei and Guanzhong Wang and Qingqing Dang and Yi Liu and Jie Chen},
  year={2023},
  eprint={2304.08069},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

## Model Card Authors

[Sangbum Choi](https://huggingface.co/danelcsb)

[Pavel Iakubovskii](https://huggingface.co/qubvel-hf)

|

[

"person",

"bicycle",

"car",

"motorbike",

"aeroplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"backpack",

"umbrella",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"sofa",

"pottedplant",

"bed",

"diningtable",

"toilet",

"tvmonitor",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

PekingU/rtdetr_r18vd

|

# Model Card for RT-DETR

## Table of Contents

1. [Model Details](#model-details)

2. [Model Sources](#model-sources)

3. [How to Get Started with the Model](#how-to-get-started-with-the-model)

4. [Training Details](#training-details)

5. [Evaluation](#evaluation)

6. [Model Architecture and Objective](#model-architecture-and-objective)

7. [Citation](#citation)

## Model Details

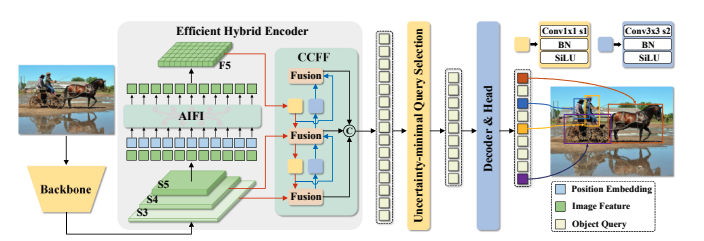

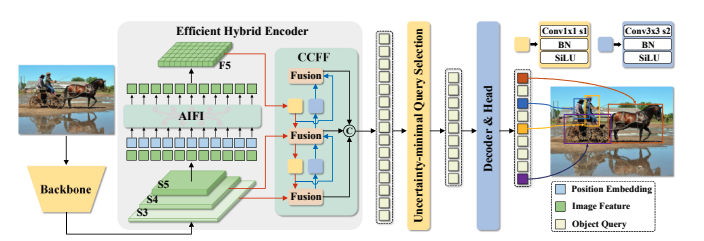

> The YOLO series has become the most popular framework for real-time object detection due to its reasonable trade-off between speed and accuracy. However, we observe that the speed and accuracy of YOLOs are negatively affected by the NMS. Recently, end-to-end Transformer-based detectors (DETRs) have provided an alternative to eliminating NMS. Nevertheless, the high computational cost limits their practicality and hinders them from fully exploiting the advantage of excluding NMS.
>
> In this paper, we propose the Real-Time DEtection TRansformer (RT-DETR), the first real-time end-to-end object detector to our best knowledge that addresses the above dilemma. We build RT-DETR in two steps, drawing on the advanced DETR: first we focus on maintaining accuracy while improving speed, followed by maintaining speed while improving accuracy. Specifically, we design an efficient hybrid encoder to expeditiously process multi-scale features by decoupling intra-scale interaction and cross-scale fusion to improve speed. Then, we propose the uncertainty-minimal query selection to provide high-quality initial queries to the decoder, thereby improving accuracy. In addition, RT-DETR supports flexible speed tuning by adjusting the number of decoder layers to adapt to various scenarios without retraining.
>
> Our RT-DETR-R50 / R101 achieves 53.1% / 54.3% AP on COCO and 108 / 74 FPS on T4 GPU, outperforming previously advanced YOLOs in both speed and accuracy. We also develop scaled RT-DETRs that outperform the lighter YOLO detectors (S and M models). Furthermore, RT-DETR-R50 outperforms DINO-R50 by 2.2% AP in accuracy and about 21 times in FPS. After pre-training with Objects365, RT-DETR-R50 / R101 achieves 55.3% / 56.2% AP. Project page: [https://zhao-yian.github.io/RTDETR/](https://zhao-yian.github.io/RTDETR/).

This is the model card of a 🤗 [transformers](https://huggingface.co/docs/transformers/index) model that has been pushed to the Hub.

- **Developed by:** Yian Zhao and Sangbum Choi

- **Funded by:** National Key R&D Program of China (No. 2022ZD0118201), Natural Science Foundation of China (No. 61972217, 32071459, 62176249, 62006133, 62271465), and the Shenzhen Medical Research Funds in China (No. B2302037).

- **Shared by:** Sangbum Choi

- **Model type:** [RT-DETR](https://huggingface.co/docs/transformers/main/en/model_doc/rt_detr)

- **License:** Apache-2.0

### Model Sources

<!-- Provide the basic links for the model. -->

- **HF Docs:** [RT-DETR](https://huggingface.co/docs/transformers/main/en/model_doc/rt_detr)

- **Repository:** https://github.com/lyuwenyu/RT-DETR

- **Paper:** https://arxiv.org/abs/2304.08069

- **Demo:** [RT-DETR Tracking](https://huggingface.co/spaces/merve/RT-DETR-tracking-coco)

## How to Get Started with the Model

Use the code below to get started with the model.

```python
import torch
import requests
from PIL import Image
from transformers import RTDetrForObjectDetection, RTDetrImageProcessor

url = 'http://images.cocodataset.org/val2017/000000039769.jpg'
image = Image.open(requests.get(url, stream=True).raw)

image_processor = RTDetrImageProcessor.from_pretrained("PekingU/rtdetr_r18vd")
model = RTDetrForObjectDetection.from_pretrained("PekingU/rtdetr_r18vd")

inputs = image_processor(images=image, return_tensors="pt")

with torch.no_grad():
    outputs = model(**inputs)

# target_sizes expects (height, width); PIL's image.size is (width, height), hence [::-1]
results = image_processor.post_process_object_detection(outputs, target_sizes=torch.tensor([image.size[::-1]]), threshold=0.3)

for result in results:
    for score, label_id, box in zip(result["scores"], result["labels"], result["boxes"]):
        score, label = score.item(), label_id.item()
        box = [round(i, 2) for i in box.tolist()]
        print(f"{model.config.id2label[label]}: {score:.2f} {box}")
```

This should output:

```
sofa: 0.97 [0.14, 0.38, 640.13, 476.21]
cat: 0.96 [343.38, 24.28, 640.14, 371.5]
cat: 0.96 [13.23, 54.18, 318.98, 472.22]
remote: 0.95 [40.11, 73.44, 175.96, 118.48]
remote: 0.92 [333.73, 76.58, 369.97, 186.99]
```

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

The RT-DETR model was trained on [COCO 2017 object detection](https://cocodataset.org/#download), a dataset of 118k training and 5k validation annotated images.

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

We conduct experiments on the COCO and Objects365 datasets: RT-DETR is trained on COCO train2017 and validated on COCO val2017. We report the standard COCO metrics, including AP (averaged over uniformly sampled IoU thresholds from 0.50 to 0.95 with a step size of 0.05), AP50, AP75, and AP at different object scales: APS, APM, and APL.

### Preprocessing

Images are resized to 640x640 pixels and rescaled with `image_mean=[0.485, 0.456, 0.406]` and `image_std=[0.229, 0.224, 0.225]`.

### Training Hyperparameters

- **Training regime:** <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

## Evaluation

| Model | #Epochs | #Params (M) | GFLOPs | FPS (bs=1) | AP (val) | AP50 (val) | AP75 (val) | AP-s (val) | AP-m (val) | AP-l (val) |
|----------------------------|---------|-------------|--------|----------|--------|-----------|-----------|----------|----------|----------|
| RT-DETR-R18 | 72 | 20 | 60.7 | 217 | 46.5 | 63.8 | 50.4 | 28.4 | 49.8 | 63.0 |
| RT-DETR-R34 | 72 | 31 | 91.0 | 172 | 48.5 | 66.2 | 52.3 | 30.2 | 51.9 | 66.2 |
| RT-DETR-R50 | 72 | 42 | 136 | 108 | 53.1 | 71.3 | 57.7 | 34.8 | 58.0 | 70.0 |
| RT-DETR-R101 | 72 | 76 | 259 | 74 | 54.3 | 72.7 | 58.6 | 36.0 | 58.8 | 72.1 |
| RT-DETR-R18 (Objects365 pretrained) | 60 | 20 | 61 | 217 | 49.2 | 66.6 | 53.5 | 33.2 | 52.3 | 64.8 |
| RT-DETR-R50 (Objects365 pretrained) | 24 | 42 | 136 | 108 | 55.3 | 73.4 | 60.1 | 37.9 | 59.9 | 71.8 |
| RT-DETR-R101 (Objects365 pretrained) | 24 | 76 | 259 | 74 | 56.2 | 74.6 | 61.3 | 38.3 | 60.5 | 73.5 |
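The table describes a speed/accuracy trade-off. The plain-Python sketch below picks the most accurate configuration that meets a frame-rate budget, using the batch-size-1 FPS and AP values from the table. The budgets in the example are arbitrary, and `best_under_budget` is a hypothetical helper.

```python
# (name, FPS at batch size 1, COCO val AP), copied from the table.
MODELS = [
    ("RT-DETR-R18", 217, 46.5),
    ("RT-DETR-R34", 172, 48.5),
    ("RT-DETR-R50", 108, 53.1),
    ("RT-DETR-R101", 74, 54.3),
    ("RT-DETR-R18 (Objects365 pretrained)", 217, 49.2),
    ("RT-DETR-R50 (Objects365 pretrained)", 108, 55.3),
    ("RT-DETR-R101 (Objects365 pretrained)", 74, 56.2),
]

def best_under_budget(min_fps, models=MODELS):
    """Most accurate model whose FPS is at least min_fps, or None if none qualifies."""
    eligible = [m for m in models if m[1] >= min_fps]
    return max(eligible, key=lambda m: m[2]) if eligible else None

print(best_under_budget(150))  # ('RT-DETR-R18 (Objects365 pretrained)', 217, 49.2)
print(best_under_budget(100))  # ('RT-DETR-R50 (Objects365 pretrained)', 108, 55.3)
```

FPS figures are hardware-specific (the abstract reports T4 GPU numbers), so a budget check like this only transfers to similar hardware.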

### Model Architecture and Objective

Overview of RT-DETR: we feed the features from the last three stages of the backbone into the encoder. The efficient hybrid encoder transforms multi-scale features into a sequence of image features through the Attention-based Intra-scale Feature Interaction (AIFI) and the CNN-based Cross-scale Feature Fusion (CCFF). Then, the uncertainty-minimal query selection selects a fixed number of encoder features to serve as initial object queries for the decoder. Finally, the decoder with auxiliary prediction heads iteratively optimizes object queries to generate categories and boxes.

## Citation

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

```bibtex
@misc{lv2023detrs,
  title={DETRs Beat YOLOs on Real-time Object Detection},
  author={Yian Zhao and Wenyu Lv and Shangliang Xu and Jinman Wei and Guanzhong Wang and Qingqing Dang and Yi Liu and Jie Chen},
  year={2023},
  eprint={2304.08069},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}
```

## Model Card Authors

[Sangbum Choi](https://huggingface.co/danelcsb)

[Pavel Iakubovskii](https://huggingface.co/qubvel-hf)

|

[

"person",

"bicycle",

"car",

"motorbike",

"aeroplane",

"bus",

"train",

"truck",

"boat",

"traffic light",

"fire hydrant",

"stop sign",

"parking meter",

"bench",

"bird",

"cat",

"dog",

"horse",

"sheep",

"cow",

"elephant",

"bear",

"zebra",

"giraffe",

"backpack",

"umbrella",

"handbag",

"tie",

"suitcase",

"frisbee",

"skis",

"snowboard",

"sports ball",

"kite",

"baseball bat",

"baseball glove",

"skateboard",

"surfboard",

"tennis racket",

"bottle",

"wine glass",

"cup",

"fork",

"knife",

"spoon",

"bowl",

"banana",

"apple",

"sandwich",

"orange",

"broccoli",

"carrot",

"hot dog",

"pizza",

"donut",

"cake",

"chair",

"sofa",

"pottedplant",

"bed",

"diningtable",

"toilet",

"tvmonitor",

"laptop",

"mouse",

"remote",

"keyboard",

"cell phone",

"microwave",

"oven",

"toaster",

"sink",

"refrigerator",

"book",

"clock",

"vase",

"scissors",

"teddy bear",

"hair drier",

"toothbrush"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_253s

|

# tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_253s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1500

- mixed_precision_training: Native AMP
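With `lr_scheduler_type: linear` and no warmup listed, the learning rate falls linearly from its initial value to zero over the whole run. The sketch below is modeled on the shape of the linear schedule in `transformers` (`get_linear_schedule_with_warmup`); it assumes zero warmup because the card lists none, and `total_steps` is a hypothetical value since the number of optimizer steps depends on the undisclosed dataset size.

```python
def linear_lr(step: int, total_steps: int, base_lr: float = 1e-5, warmup_steps: int = 0) -> float:
    """Learning rate at `step` under linear warmup followed by linear decay to 0."""
    if step < warmup_steps:
        # Ramp up linearly from 0 to base_lr during warmup (unused when warmup_steps=0).
        return base_lr * step / max(1, warmup_steps)
    # Decay linearly from base_lr at the end of warmup to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


# Hypothetical run of 1000 optimizer steps with the card's learning_rate of 1e-05.
for step in (0, 500, 1000):
    print(step, linear_lr(step, total_steps=1000))
```

Halfway through training the rate is half of `1e-05`, and it reaches zero at the final step.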

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v2_s1_253s

|

# tblstructrecog_finetuned_tbltransstrucrecog_v2_s1_253s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1500

- mixed_precision_training: Native AMP
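The hyperparameter list above follows a fixed `- key: value` layout, so it can be turned back into keyword arguments for `transformers.TrainingArguments`. The sketch below is a hypothetical helper (`card_to_training_kwargs` and the key mapping are assumptions, not part of any library). Mapping "Native AMP" to `fp16=True` is also an assumption, since the card does not say whether fp16 or bf16 was used.

```python
import re
from typing import Any, Dict

# Hypothetical mapping from the card's keys to TrainingArguments field names.
_KEY_MAP = {
    "learning_rate": "learning_rate",
    "train_batch_size": "per_device_train_batch_size",
    "eval_batch_size": "per_device_eval_batch_size",
    "seed": "seed",
    "lr_scheduler_type": "lr_scheduler_type",
    "num_epochs": "num_train_epochs",
}


def card_to_training_kwargs(card_block: str) -> Dict[str, Any]:
    """Parse '- key: value' hyperparameter lines into TrainingArguments kwargs."""
    kwargs: Dict[str, Any] = {}
    for line in card_block.splitlines():
        line = line.strip()
        # Skip blank lines and anything that is not a '- key: value' bullet.
        if not line.startswith("- ") or ":" not in line:
            continue
        key, value = (part.strip() for part in line[2:].split(":", 1))
        if key in _KEY_MAP:
            # Keep integers as int, then try float, then fall back to the raw string.
            for cast in (int, float, str):
                try:
                    kwargs[_KEY_MAP[key]] = cast(value)
                    break
                except ValueError:
                    continue
        elif key == "optimizer":
            m = re.search(r"betas=\(([\d.]+),\s*([\d.]+)\) and epsilon=([\deE.+-]+)", value)
            if m:
                kwargs["adam_beta1"] = float(m.group(1))
                kwargs["adam_beta2"] = float(m.group(2))
                kwargs["adam_epsilon"] = float(m.group(3))
        elif key == "mixed_precision_training" and value == "Native AMP":
            # Assumption: the card does not distinguish fp16 from bf16.
            kwargs["fp16"] = True
    return kwargs
```

The returned dict could then be passed, together with an `output_dir`, as `TrainingArguments(output_dir=..., **kwargs)`; that call is not made here because it needs `transformers` installed and, with `fp16=True`, a CUDA device.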

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

Aryan-401/yolo-tiny-fashion

|

# Model Trained Using AutoTrain

- Problem type: Object Detection

## Validation Metrics

loss: 1.3179453611373901

map: 0.1361

map_50: 0.1892

map_75: 0.1548

map_small: 0.0

map_medium: 0.102

map_large: 0.1367

mar_1: 0.2076

mar_10: 0.4071

mar_100: 0.4151

mar_small: 0.0

mar_medium: 0.2304

mar_large: 0.4179
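In COCO-style detection metrics, `map_50` and `map_75` count a prediction as a true positive only if its intersection over union (IoU) with a ground-truth box is at least 0.50 or 0.75, and `map` averages AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95. That is why `map_75` (0.1548) sits below `map_50` (0.1892). A minimal sketch of the IoU test, assuming boxes in `(x_min, y_min, x_max, y_max)` corner format (the helper names are illustrative):

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)

# The ten IoU thresholds that COCO-style "map" averages over.
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes in corner format."""
    # Overlap rectangle; width and height clamp to 0 when the boxes are disjoint.
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(pred: Box, gt: Box, iou_threshold: float) -> bool:
    """True if the prediction overlaps the ground truth enough at this threshold."""
    return box_iou(pred, gt) >= iou_threshold


pred, gt = (0, 0, 10, 10), (2, 0, 12, 10)
print(box_iou(pred, gt))                                      # IoU = 80 / 120
print(is_match(pred, gt, 0.5), is_match(pred, gt, 0.75))      # True False
```

A box shifted by a fifth of its width still counts at IoU 0.50 but not at 0.75, so stricter thresholds penalize loose localization.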

|

[

"shirt, blouse",

"top, t-shirt, sweatshirt",

"sweater",

"cardigan",

"jacket",

"vest",

"pants",

"shorts",

"skirt",

"coat",

"dress",

"jumpsuit",

"cape",

"glasses",

"hat",

"headband, head covering, hair accessory",

"tie",

"glove",

"watch",

"belt",

"leg warmer",

"tights, stockings",

"sock",

"shoe",

"bag, wallet",

"scarf",

"umbrella",

"hood",

"collar",

"lapel",

"epaulette",

"sleeve",

"pocket",

"neckline",

"buckle",

"zipper",

"applique",

"bead",

"bow",

"flower",

"fringe",

"ribbon",

"rivet",

"ruffle",

"sequin",

"tassel"

] |

Aryan-401/detr-resnet-50-cppe5

|

# Model Trained Using AutoTrain

- Problem type: Object Detection

## Validation Metrics

loss: 1.3475022315979004

map: 0.2746

map_50: 0.5638

map_75: 0.2333

map_small: 0.1345

map_medium: 0.2275

map_large: 0.4482

mar_1: 0.2715

mar_10: 0.4663

mar_100: 0.49

mar_small: 0.1839

mar_medium: 0.4158

mar_large: 0.6686
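`mar_1`, `mar_10` and `mar_100` follow the COCO convention of mean average recall when at most 1, 10 or 100 detections per image are kept, so they can only rise as the budget grows (0.2715, 0.4663, 0.49 above). The simplified sketch below computes recall for one image, one class and one IoU threshold from a precomputed IoU matrix; the reported metric additionally pools over images and averages over IoU thresholds and classes. The function name and input layout are hypothetical.

```python
from typing import Sequence


def recall_at_k(
    scores: Sequence[float],
    ious: Sequence[Sequence[float]],
    num_gt: int,
    k: int,
    iou_threshold: float = 0.5,
) -> float:
    """Recall when only the k top-scoring detections are kept.

    ious[d][g] is the IoU between detection d and ground-truth box g.
    """
    if num_gt == 0:
        raise ValueError("recall is undefined without ground-truth boxes")
    # Keep the k most confident detections, highest score first.
    order = sorted(range(len(scores)), key=lambda d: scores[d], reverse=True)[:k]
    matched = set()
    for d in order:
        # Greedily match each detection to the best still-unmatched ground truth.
        best_g, best_iou = None, iou_threshold
        for g in range(num_gt):
            if g not in matched and ious[d][g] >= best_iou:
                best_g, best_iou = g, ious[d][g]
        if best_g is not None:
            matched.add(best_g)
    return len(matched) / num_gt


# Two ground-truth boxes; detection 1 duplicates detection 0, detection 2 finds box 1.
scores = [0.9, 0.8, 0.3]
ious = [[0.7, 0.0], [0.6, 0.1], [0.0, 0.8]]
for k in (1, 2, 3):
    print(k, recall_at_k(scores, ious, num_gt=2, k=k))  # 0.5, 0.5, 1.0
```

The low-scoring detection only contributes once the budget allows three detections, which mirrors the gap between `mar_1` and `mar_100`.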

|

[

"coverall",

"face_shield",

"gloves",

"goggles",

"mask"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_311s

|

# tblstructrecog_finetuned_tbltransstrucrecog_v1_s1_311s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 750

- mixed_precision_training: Native AMP

### Framework versions

- Transformers 4.41.0.dev0

- Pytorch 2.0.1

- Datasets 2.18.0

- Tokenizers 0.19.1

|

[

"table",

"table column",

"table row",

"table column header",

"table projected row header",

"table spanning cell"

] |

nsugianto/tblstructrecog_finetuned_tbltransstrucrecog_v2_s1_311s

|

# tblstructrecog_finetuned_tbltransstrucrecog_v2_s1_311s

This model is a fine-tuned version of [microsoft/table-transformer-structure-recognition](https://huggingface.co/microsoft/table-transformer-structure-recognition) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-06

- train_batch_size: 2

- eval_batch_size: 8