| text | heading1 | source_page_url | source_page_title |
|---|---|---|---|

Our inference function will accept a video and a desired confidence threshold.

Object detection models identify many objects and assign a confidence score to each object. The lower the confidence, the higher the chance of a false positive, so we will let our users set the confidence threshold.

Our function will iterate over the frames in the video and run the RT-DETR model over each frame.

We will then draw the bounding boxes for each detected object in the frame and save the frame to a new output video.

The function will yield each output video in chunks of two seconds.

In order to keep inference times as low as possible on ZeroGPU (which has a time-based quota), we will halve the original frames-per-second in the output video and resize the input frames to half their original size before running the model.

The code for the inference function is below - we'll go over it piece by piece.

```python
import spaces
import cv2
from PIL import Image
import torch
import time
import numpy as np
import uuid

from draw_boxes import draw_bounding_boxes

# `model` and `image_processor` (the RT-DETR model and its image processor)
# are assumed to be loaded earlier in the app, with the model on "cuda".

SUBSAMPLE = 2

@spaces.GPU
def stream_object_detection(video, conf_threshold):
    cap = cv2.VideoCapture(video)

    # This means we will output mp4 videos
    video_codec = cv2.VideoWriter_fourcc(*"mp4v")  # type: ignore
    fps = int(cap.get(cv2.CAP_PROP_FPS))

    desired_fps = fps // SUBSAMPLE
    width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH)) // 2
    height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)) // 2

    iterating, frame = cap.read()

    n_frames = 0

    # Use UUID to create a unique video file
    output_video_name = f"output_{uuid.uuid4()}.mp4"

    # Output Video
    output_video = cv2.VideoWriter(output_video_name, video_codec, desired_fps, (width, height))  # type: ignore
    batch = []

    while iterating:
        frame = cv2.resize(frame, (0, 0), fx=0.5, fy=0.5)
        frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        if n_frames % SUBSAMPLE == 0:
            batch.append(frame)
        if len(batch) == 2 * desired_fps:
            inputs = image_processor(images=batch, return_tensors="pt").to("cuda")

            with torch.no_grad():
                outputs = model(**inputs)

            boxes = image_processor.post_process_object_detection(
                outputs,
                target_sizes=torch.tensor([(height, width)] * len(batch)),
                threshold=conf_threshold)

            for i, (array, box) in enumerate(zip(batch, boxes)):
                pil_image = draw_bounding_boxes(Image.fromarray(array), box, model, conf_threshold)
                frame = np.array(pil_image)
                # Convert RGB to BGR
                frame = frame[:, :, ::-1].copy()
                output_video.write(frame)

            batch = []
            output_video.release()
            yield output_video_name
            output_video_name = f"output_{uuid.uuid4()}.mp4"
            output_video = cv2.VideoWriter(output_video_name, video_codec, desired_fps, (width, height))  # type: ignore

        iterating, frame = cap.read()
        n_frames += 1

    # Release the last (unused) writer and the input video
    output_video.release()
    cap.release()
```

1. **Reading from the Video**

OpenCV is one of the industry standards for working with video in Python, so we will use it in this app.

The `cap` variable is how we will read from the input video. Whenever we call `cap.read()`, we are reading the next frame in the video.

In order to stream video in Gradio, we need to yield a different video file for each "chunk" of the output video.

We create the next video file to write to with the `output_video = cv2.VideoWriter(output_video_name, video_codec, desired_fps, (width, height))` line. The `video_codec` is how we specify the type of video file. Only "mp4" and "ts" files are supported for video streaming at the moment.

2. **The Inference Loop**


For each frame in the video, we resize it to half its original size. OpenCV reads frames in `BGR` format, so we convert them to the `RGB` format that transformers expects. That's what the first two lines of the while loop are doing.

We take every other frame and add it to a `batch` list so that the output video is half the original FPS. When the batch covers two seconds of video, we run the model. The two-second threshold was chosen to keep the processing time of each batch small enough that the video displays smoothly, while not requiring too many separate forward passes. For video streaming to work properly in Gradio, each batch should cover at least 1 second of video.

We run the forward pass of the model and then use the `post_process_object_detection` method of the image processor to scale the detected bounding boxes to the size of the input frame.

We use a custom function to draw the bounding boxes (source [here](https://huggingface.co/spaces/gradio/rt-detr-object-detection/blob/main/draw_boxes.py#L14)). We then have to convert from `RGB` back to `BGR` before writing to the output video.

Once we have finished processing the batch, we create a new output video file for the next batch.
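The batching rule above can be illustrated without OpenCV or a model. The following sketch assumes a 30 fps input and uses a hypothetical helper named `chunk_frame_indices` that tracks only frame indices: it keeps every `SUBSAMPLE`-th frame and emits a chunk once it holds `2 * desired_fps` frames, mirroring the inference function (including dropping a trailing partial batch).

```python
from typing import Iterator, List

SUBSAMPLE = 2  # keep every other frame, as in the inference function


def chunk_frame_indices(n_frames: int, fps: int, subsample: int = SUBSAMPLE,
                        seconds_per_chunk: int = 2) -> Iterator[List[int]]:
    """Yield lists of input-frame indices, one list per output chunk.

    Hypothetical helper mirroring the batching logic: every `subsample`-th
    frame is kept, and a chunk is emitted once it covers `seconds_per_chunk`
    seconds at the reduced frame rate. A trailing partial batch is dropped.
    """
    desired_fps = fps // subsample
    frames_per_chunk = seconds_per_chunk * desired_fps
    batch: List[int] = []
    for n in range(n_frames):
        if n % subsample == 0:
            batch.append(n)
        if len(batch) == frames_per_chunk:
            yield batch
            batch = []


chunks = list(chunk_frame_indices(n_frames=150, fps=30))  # 5 s of 30 fps video
print(len(chunks), len(chunks[0]), chunks[0][:3])
```

With 5 seconds of 30 fps input, 75 frames are kept and two full chunks of 30 frames (each two seconds at 15 fps) are emitted; the last 15 kept frames never fill a batch, just as in the inference function.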

|

The Inference Function

|

https://gradio.app/guides/object-detection-from-video

|

Streaming - Object Detection From Video Guide

|

The UI code is pretty similar to other kinds of Gradio apps.

We'll use a standard two-column layout so that users can see the input and output videos side by side.

For streaming to work, we have to set `streaming=True` in the output video. Setting the video to autoplay is not necessary, but it gives users a better experience.

```python
import gradio as gr

with gr.Blocks() as app:
    gr.HTML(
        """
    <h1 style='text-align: center'>
    Video Object Detection with <a href='https://huggingface.co/PekingU/rtdetr_r101vd_coco_o365' target='_blank'>RT-DETR</a>
    </h1>
    """)
    with gr.Row():
        with gr.Column():
            video = gr.Video(label="Video Source")
            conf_threshold = gr.Slider(
                label="Confidence Threshold",
                minimum=0.0,
                maximum=1.0,
                step=0.05,
                value=0.30,
            )
        with gr.Column():
            output_video = gr.Video(label="Processed Video", streaming=True, autoplay=True)

    video.upload(
        fn=stream_object_detection,
        inputs=[video, conf_threshold],
        outputs=[output_video],
    )

if __name__ == "__main__":
    app.launch()
```

|

The Gradio Demo

|

https://gradio.app/guides/object-detection-from-video

|

Streaming - Object Detection From Video Guide

|

You can check out our demo hosted on Hugging Face Spaces [here](https://huggingface.co/spaces/gradio/rt-detr-object-detection).

It is also embedded on this page below

$demo_rt-detr-object-detection

|

Conclusion

|

https://gradio.app/guides/object-detection-from-video

|

Streaming - Object Detection From Video Guide

|

Automatic speech recognition (ASR), the conversion of spoken speech to text, is a very important and thriving area of machine learning. ASR algorithms run on practically every smartphone, and are becoming increasingly embedded in professional workflows, such as digital assistants for nurses and doctors. Because ASR algorithms are designed to be used directly by customers and end users, it is important to validate that they are behaving as expected when confronted with a wide variety of speech patterns (different accents, pitches, and background audio conditions).

Using `gradio`, you can easily build a demo of your ASR model and share that with a testing team, or test it yourself by speaking through the microphone on your device.

This tutorial will show how to take a pretrained speech-to-text model and deploy it with a Gradio interface. We will start with a **_full-context_** model, in which the user speaks the entire audio before the prediction runs. Then we will adapt the demo to make it **_streaming_**, meaning that the audio model will convert speech as you speak.

Prerequisites

Make sure you have the `gradio` Python package already [installed](/getting_started). You will also need a pretrained speech recognition model. In this tutorial, we will build demos with the following ASR library:

- Transformers (for this, `pip install torch transformers torchaudio`)

Make sure you have it installed so that you can follow along with the tutorial. You will also need `ffmpeg` [installed on your system](https://www.ffmpeg.org/download.html), if you do not already have it, to process files from the microphone.

Here's how to build a real time speech recognition (ASR) app:

1. [Set up the Transformers ASR Model](#1-set-up-the-transformers-asr-model)

2. [Create a Full-Context ASR Demo with Transformers](#2-create-a-full-context-asr-demo-with-transformers)

3. [Create a Streaming ASR Demo with Transformers](#3-create-a-streaming-asr-demo-with-transformers)

|

Introduction

|

https://gradio.app/guides/real-time-speech-recognition

|

Streaming - Real Time Speech Recognition Guide

|

First, you will need an ASR model: either one you have trained yourself or a pretrained model that you download. In this tutorial, we will start by using a pretrained ASR model, `whisper`.

Here is the code to load `whisper` from Hugging Face `transformers`.

```python

from transformers import pipeline

p = pipeline("automatic-speech-recognition", model="openai/whisper-base.en")

```

That's it!

|

1. Set up the Transformers ASR Model

|

https://gradio.app/guides/real-time-speech-recognition

|

Streaming - Real Time Speech Recognition Guide

|

We will start by creating a _full-context_ ASR demo, in which the user speaks the full audio before using the ASR model to run inference. This is very easy with Gradio -- we simply create a function around the `pipeline` object above.

We will use `gradio`'s built-in `Audio` component, configured to take input from the user's microphone and pass the recording to our function as a NumPy array. The output component will be a plain `Textbox`.

$code_asr

$demo_asr

The `transcribe` function takes a single parameter, `audio`, which holds the audio the user recorded as a NumPy array (together with its sample rate). The `pipeline` object expects float32 data, so we first convert the array to float32 and then extract the transcribed text.
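To make the preprocessing step concrete, here is a stdlib-only sketch of turning a recording into the `{"sampling_rate": ..., "raw": ...}` dictionary that the `transformers` ASR pipeline accepts. The helper name `prepare_for_pipeline`, the (sample_rate, samples) input shape, the stereo downmix and the peak normalization are assumptions for illustration; a real app would keep the data as a float32 NumPy array, which is what the pipeline expects for `raw`.

```python
from typing import Dict, List, Sequence, Tuple, Union

Sample = Union[int, Sequence[int]]  # one mono value, or one value per channel


def prepare_for_pipeline(audio: Tuple[int, List[Sample]]) -> Dict[str, object]:
    """Hypothetical helper: turn (sample_rate, samples) into pipeline-style input.

    Multi-channel frames are averaged to mono, and the result is scaled so
    that the loudest sample has magnitude 1.0.
    """
    sample_rate, samples = audio
    mono: List[float] = []
    for s in samples:
        if isinstance(s, (list, tuple)):
            mono.append(sum(s) / len(s))  # downmix stereo (or more) to mono
        else:
            mono.append(float(s))
    peak = max((abs(x) for x in mono), default=0.0)
    if peak > 0:
        mono = [x / peak for x in mono]  # peak-normalize into [-1.0, 1.0]
    return {"sampling_rate": sample_rate, "raw": mono}


example = prepare_for_pipeline((16000, [(100, 300), (-400, -400), (0, 50)]))
print(example)
```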

|

2. Create a Full-Context ASR Demo with Transformers

|

https://gradio.app/guides/real-time-speech-recognition

|

Streaming - Real Time Speech Recognition Guide

|

To make this a *streaming* demo, we need to make these changes:

1. Set `streaming=True` in the `Audio` component

2. Set `live=True` in the `Interface`

3. Add a `state` to the interface to store the recorded audio of a user

Tip: You can also set the `time_limit` and `stream_every` parameters in the interface. `time_limit` caps how long each user's stream can last. The default is 30 seconds, so users won't be able to stream audio for more than 30 seconds. `stream_every` controls how frequently data is sent to your function. By default, it is 0.5 seconds.

Take a look below.

$code_stream_asr

Notice that we now have a state variable, because we need to track the whole audio history. `transcribe` is called whenever a new small chunk of audio arrives, receiving all previously spoken audio in `stream` and the new chunk as `new_chunk`. We return the updated full audio, which is stored back in the state, along with the transcription. Here, we naively append the new audio and call the `transcriber` object on the entire recording. You can imagine more efficient approaches, such as re-processing only the last 5 seconds of audio whenever a new chunk arrives.
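The state handling described above can be sketched without Gradio or a real model. In this hypothetical `transcribe_stream` helper, the state is a plain list of samples, `transcriber` is any callable that accepts the pipeline-style `{"sampling_rate", "raw"}` dictionary, and the optional `window_seconds` argument implements the "last 5 seconds" idea. The toy 10 Hz sample rate and `fake_transcriber` exist only to make the example runnable.

```python
from typing import Callable, List, Optional, Tuple

Chunk = Tuple[int, List[float]]  # (sample_rate, samples)


def transcribe_stream(
    stream: Optional[List[float]],
    new_chunk: Chunk,
    transcriber: Callable[[dict], dict],
    window_seconds: Optional[float] = None,
) -> Tuple[List[float], str]:
    sr, samples = new_chunk
    # The state holds every sample received so far.
    stream = (stream or []) + list(samples)
    audio = stream
    if window_seconds is not None:
        # Only send the most recent `window_seconds` of audio to the model.
        audio = stream[-int(window_seconds * sr):]
    text = transcriber({"sampling_rate": sr, "raw": audio})["text"]
    # Return the new state and the text for the output component.
    return stream, text


def fake_transcriber(inputs: dict) -> dict:
    # Stand-in for the ASR pipeline: reports how much audio it received.
    seconds = len(inputs["raw"]) / inputs["sampling_rate"]
    return {"text": f"{seconds:.1f}s of audio"}


state = None
for _ in range(3):  # three 4-second chunks at a toy 10 Hz sample rate
    state, text = transcribe_stream(state, (10, [0.0] * 40), fake_transcriber, window_seconds=5.0)
print(len(state), text)
```

Note the trade-off: with a window, the model only sees recent audio, so the returned text covers only that window. A real app would also need to keep the earlier transcription (for example, in the state) and stitch the pieces together.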

$demo_stream_asr

Now the ASR model will run inference as you speak!

|

3. Create a Streaming ASR Demo with Transformers

|

https://gradio.app/guides/real-time-speech-recognition

|

Streaming - Real Time Speech Recognition Guide

|

Just like the classic Magic 8 Ball, a user should ask it a question orally and then wait for a response. Under the hood, we'll use Whisper to transcribe the audio and then use an LLM to generate a magic-8-ball-style answer. Finally, we'll use Parler TTS to read the response aloud.

|

The Overview

|

https://gradio.app/guides/streaming-ai-generated-audio

|

Streaming - Streaming Ai Generated Audio Guide

|

First, let's define the UI and put placeholders for all the Python logic.

```python
import gradio as gr

# Placeholders: these functions are implemented in "The Logic" section
def generate_response(audio):
    return None, None, None

def read_response(answer):
    return None, None

with gr.Blocks() as block:
    gr.HTML(
        f"""
        <h1 style='text-align: center;'> Magic 8 Ball 🎱 </h1>
        <h3 style='text-align: center;'> Ask a question and receive wisdom </h3>
        <p style='text-align: center;'> Powered by <a href="https://github.com/huggingface/parler-tts"> Parler-TTS</a></p>
        """
    )
    with gr.Group():
        with gr.Row():
            audio_out = gr.Audio(label="Spoken Answer", streaming=True, autoplay=True)
            answer = gr.Textbox(label="Answer")
            state = gr.State()
        with gr.Row():
            audio_in = gr.Audio(label="Speak your question", sources="microphone", type="filepath")

    audio_in.stop_recording(generate_response, audio_in, [state, answer, audio_out])\
        .then(fn=read_response, inputs=state, outputs=[answer, audio_out])

block.launch()
```

We're placing the output Audio and Textbox components and the input Audio component in separate rows. In order to stream the audio from the server, we'll set `streaming=True` in the output Audio component. We'll also set `autoplay=True` so that the audio plays as soon as it's ready.

We'll be using the Audio input component's `stop_recording` event to trigger our application's logic when a user stops recording from their microphone.

We're separating the logic into two parts. First, `generate_response` will take the recorded audio, transcribe it and generate a response with an LLM. We're going to store the response in a `gr.State` variable that then gets passed to the `read_response` function that generates the audio.

We're doing this in two parts because only `read_response` will require a GPU. Our app will run on Hugging Face's [ZeroGPU](https://huggingface.co/zero-gpu-explorers), which has time-based quotas. Since generating the response can be done with Hugging Face's Inference API, we shouldn't include that code in our GPU function, as it would needlessly use our GPU quota.

|

The UI

|

https://gradio.app/guides/streaming-ai-generated-audio

|

Streaming - Streaming Ai Generated Audio Guide

|

As mentioned above, we'll use [Hugging Face's Inference API](https://huggingface.co/docs/huggingface_hub/guides/inference) to transcribe the audio and generate a response from an LLM. After instantiating the client, I use the `automatic_speech_recognition` method (this automatically uses Whisper running on Hugging Face's Inference Servers) to transcribe the audio. Then I pass the question to an LLM (Mistral-7B-Instruct) to generate a response. We are prompting the LLM to act like a magic 8 ball with the system message.

Our `generate_response` function will also send empty updates to the output textbox and audio components (returning `None`).

This is because I want the Gradio progress tracker to be displayed over the components but I don't want to display the answer until the audio is ready.

```python
import os
import random

import gradio as gr
from huggingface_hub import InferenceClient

client = InferenceClient(token=os.getenv("HF_TOKEN"))

def generate_response(audio):
    gr.Info("Transcribing Audio", duration=5)
    question = client.automatic_speech_recognition(audio).text

    # Each string ends with a space so the concatenated sentences stay separated
    messages = [{"role": "system", "content": ("You are a magic 8 ball. "
                                              "Someone will present to you a situation or question and your job "
                                              "is to answer with a cryptic adage or proverb such as "
                                              "'curiosity killed the cat' or 'The early bird gets the worm'. "
                                              "Keep your answers short and do not include the phrase 'Magic 8 Ball' in your response. If the question does not make sense or is off-topic, say 'Foolish questions get foolish answers.' "
                                              "For example, 'Magic 8 Ball, should I get a dog?', 'A dog is ready for you but are you ready for the dog?'")},
                {"role": "user", "content": f"Magic 8 Ball please answer this question - {question}"}]

    response = client.chat_completion(messages, max_tokens=64, seed=random.randint(1, 5000),
                                      model="mistralai/Mistral-7B-Instruct-v0.3")
    response = response.choices[0].message.content.replace("Magic 8 Ball", "").replace(":", "")
    # Empty updates for the textbox and audio until the spoken answer is ready
    return response, None, None
```

Now that we have our text response, we'll read it aloud with Parler TTS. The `read_response` function will be a Python generator that yields the next chunk of audio as it's ready.

We'll be using the [Mini v0.1](https://huggingface.co/parler-tts/parler_tts_mini_v0.1) for the feature extraction but the [Jenny fine-tuned version](https://huggingface.co/parler-tts/parler-tts-mini-jenny-30H) for the voice. This is so that the voice is consistent across generations.

Streaming audio with transformers requires a custom Streamer class. You can see the implementation [here](https://huggingface.co/spaces/gradio/magic-8-ball/blob/main/streamer.py). Additionally, we'll convert the output to bytes so that it can be streamed faster from the backend.
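The `read_response` code in this section encodes each chunk with a `numpy_to_mp3` helper. As a rough, stdlib-only analogue of that conversion step, this sketch encodes float samples in [-1, 1] as 16-bit PCM inside an in-memory WAV file. It swaps MP3 for WAV so that no encoder library is needed, and `floats_to_wav_bytes` is a hypothetical name, not the Space's implementation.

```python
import io
import struct
import wave
from typing import Sequence


def floats_to_wav_bytes(samples: Sequence[float], sampling_rate: int) -> bytes:
    """Encode mono float samples in [-1.0, 1.0] as 16-bit PCM WAV bytes."""
    # Clip to [-1, 1], then scale to the int16 range used by 16-bit PCM.
    pcm = [int(max(-1.0, min(1.0, s)) * 32767) for s in samples]
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)  # mono
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wav.setframerate(sampling_rate)
        wav.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))
    return buffer.getvalue()


data = floats_to_wav_bytes([0.0, 0.5, -1.0, 2.0], sampling_rate=16000)
print(len(data))
```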

```python
from streamer import ParlerTTSStreamer
from transformers import AutoTokenizer, AutoFeatureExtractor, set_seed
from parler_tts import ParlerTTSForConditionalGeneration
import numpy as np
import spaces
import torch
from threading import Thread

device = "cuda:0" if torch.cuda.is_available() else "mps" if torch.backends.mps.is_available() else "cpu"
torch_dtype = torch.float16 if device != "cpu" else torch.float32

repo_id = "parler-tts/parler_tts_mini_v0.1"
jenny_repo_id = "ylacombe/parler-tts-mini-jenny-30H"

model = ParlerTTSForConditionalGeneration.from_pretrained(
    jenny_repo_id, torch_dtype=torch_dtype, low_cpu_mem_usage=True
).to(device)

tokenizer = AutoTokenizer.from_pretrained(repo_id)
feature_extractor = AutoFeatureExtractor.from_pretrained(repo_id)

sampling_rate = model.audio_encoder.config.sampling_rate
frame_rate = model.audio_encoder.config.frame_rate

# `numpy_to_mp3` is assumed to be a helper defined elsewhere in the Space
# that encodes a NumPy audio array as MP3 bytes.

@spaces.GPU
def read_response(answer):
    play_steps_in_s = 2.0
    play_steps = int(frame_rate * play_steps_in_s)

    description = "Jenny speaks at an average pace with a calm delivery in a very confined sounding environment with clear audio quality."
    description_tokens = tokenizer(description, return_tensors="pt").to(device)

    streamer = ParlerTTSStreamer(model, device=device, play_steps=play_steps)
    prompt = tokenizer(answer, return_tensors="pt").to(device)

    generation_kwargs = dict(
        input_ids=description_tokens.input_ids,
        prompt_input_ids=prompt.input_ids,
        streamer=streamer,
        do_sample=True,
        temperature=1.0,
        min_new_tokens=10,
    )

    set_seed(42)
    # Generate in a background thread so this generator can consume the streamer
    thread = Thread(target=model.generate, kwargs=generation_kwargs)
    thread.start()

    for new_audio in streamer:
        print(f"Sample of length: {round(new_audio.shape[0] / sampling_rate, 2)} seconds")
        yield answer, numpy_to_mp3(new_audio, sampling_rate=sampling_rate)
```
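Running `model.generate` in a `Thread` while iterating over the streamer is a producer/consumer pattern: the background thread pushes each new generation step into the streamer, and the generator function pulls out a chunk every `play_steps` steps. The stdlib-only sketch below imitates that flow with `queue.Queue`. `ToyStreamer` and `fake_generate` are hypothetical stand-ins; the real `ParlerTTSStreamer` also decodes audio tokens into waveforms, which this sketch does not attempt.

```python
import queue
import threading
from typing import Iterator, List, Optional


class ToyStreamer:
    """Hypothetical streamer: buffers values and emits them in fixed-size chunks."""

    def __init__(self, play_steps: int) -> None:
        self.play_steps = play_steps
        self.buffer: List[int] = []
        self.chunks: "queue.Queue[Optional[List[int]]]" = queue.Queue()

    def put(self, value: int) -> None:
        # Called from the producer thread for every newly generated step.
        self.buffer.append(value)
        if len(self.buffer) == self.play_steps:
            self.chunks.put(self.buffer)
            self.buffer = []

    def end(self) -> None:
        # Flush any partial chunk, then signal the consumer to stop.
        if self.buffer:
            self.chunks.put(self.buffer)
            self.buffer = []
        self.chunks.put(None)

    def __iter__(self) -> Iterator[List[int]]:
        while True:
            chunk = self.chunks.get()  # blocks until the producer has a chunk
            if chunk is None:
                return
            yield chunk


def fake_generate(streamer: ToyStreamer, n_steps: int) -> None:
    # Stand-in for model.generate(streamer=...): produces one step at a time.
    for step in range(n_steps):
        streamer.put(step)
    streamer.end()


streamer = ToyStreamer(play_steps=4)
thread = threading.Thread(target=fake_generate, kwargs={"streamer": streamer, "n_steps": 10})
thread.start()
received = [chunk for chunk in streamer]  # consume chunks as they arrive
thread.join()
print(received)
```

In `read_response`, `play_steps` is derived from `frame_rate * play_steps_in_s`, which is what controls how much audio accumulates before each `yield`.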

|

The Logic

|

https://gradio.app/guides/streaming-ai-generated-audio

|

Streaming - Streaming Ai Generated Audio Guide

|

You can see our final application [here](https://huggingface.co/spaces/gradio/magic-8-ball)!

|

Conclusion

|

https://gradio.app/guides/streaming-ai-generated-audio

|

Streaming - Streaming Ai Generated Audio Guide

|

Start by installing all the dependencies. Add the following lines to a `requirements.txt` file and run `pip install -r requirements.txt`:

```bash

opencv-python

twilio

gradio>=5.0

gradio-webrtc

onnxruntime-gpu

```

We'll use the ONNX runtime to speed up YOLOv10 inference. This guide assumes you have access to a GPU. If you don't, change `onnxruntime-gpu` to `onnxruntime`. Without a GPU, the model will run slower, resulting in a laggy demo.

We'll use OpenCV for image manipulation and the [Gradio WebRTC](https://github.com/freddyaboulton/gradio-webrtc) custom component to use [WebRTC](https://webrtc.org/) under the hood, achieving near-zero latency.

**Note**: If you want to deploy this app on any cloud provider, you'll need to use the free Twilio API for their [TURN servers](https://www.twilio.com/docs/stun-turn). Create a free account on Twilio. If you're not familiar with TURN servers, consult this [guide](https://www.twilio.com/docs/stun-turn/faq#faq-what-is-nat).

|

Setting up

|

https://gradio.app/guides/object-detection-from-webcam-with-webrtc

|

Streaming - Object Detection From Webcam With Webrtc Guide

|

We'll download the YOLOv10 model from the Hugging Face hub and instantiate a custom inference class to use this model.

The implementation of the inference class isn't covered in this guide, but you can find the source code [here](https://huggingface.co/spaces/freddyaboulton/webrtc-yolov10n/blob/main/inference.py#L9) if you're interested. This implementation borrows heavily from this [GitHub repository](https://github.com/ibaiGorordo/ONNX-YOLOv8-Object-Detection).

We're using the `yolov10-n` variant because it has the lowest latency. See the [Performance](https://github.com/THU-MIG/yolov10?tab=readme-ov-file#performance) section of the README in the YOLOv10 GitHub repository.

```python
import cv2
from huggingface_hub import hf_hub_download
from inference import YOLOv10

model_file = hf_hub_download(
    repo_id="onnx-community/yolov10n", filename="onnx/model.onnx"
)

model = YOLOv10(model_file)

def detection(image, conf_threshold=0.3):
    # Resize the webcam frame to the input size the model expects
    image = cv2.resize(image, (model.input_width, model.input_height))
    new_image = model.detect_objects(image, conf_threshold)
    return new_image
```

Our inference function, `detection`, accepts a numpy array from the webcam and a desired confidence threshold. Object detection models like YOLO identify many objects and assign a confidence score to each. The lower the confidence, the higher the chance of a false positive. We'll let users adjust the confidence threshold.

The function returns a numpy array corresponding to the same input image with all detected objects in bounding boxes.
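The confidence threshold works as a filter over the model's raw detections. The sketch below shows the idea in plain Python; the `Detection` layout and the sample scores are made up for illustration, and the `YOLOv10` class used above may apply the threshold differently internally (for example, together with non-maximum suppression).

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    label: str
    score: float                    # model confidence in [0, 1]
    box: Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def filter_detections(detections: List[Detection], conf_threshold: float = 0.3) -> List[Detection]:
    """Keep only detections whose confidence meets the threshold."""
    return [d for d in detections if d.score >= conf_threshold]


raw = [
    Detection("person", 0.91, (10, 20, 110, 220)),
    Detection("dog", 0.42, (150, 80, 260, 200)),
    Detection("cat", 0.12, (300, 40, 340, 90)),  # low score: likely a false positive
]
print([d.label for d in filter_detections(raw)])
print([d.label for d in filter_detections(raw, conf_threshold=0.5)])
```

Raising the threshold from 0.3 to 0.5 also drops the lower-confidence `dog` detection: fewer false positives, at the cost of possibly missing real objects.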

|

The Inference Function

|

https://gradio.app/guides/object-detection-from-webcam-with-webrtc

|

Streaming - Object Detection From Webcam With Webrtc Guide

|

The Gradio demo is straightforward, but we'll implement a few specific features:

1. Use the `WebRTC` custom component to ensure input and output are sent to/from the server with WebRTC.

2. The [WebRTC](https://github.com/freddyaboulton/gradio-webrtc) component will serve as both an input and output component.

3. Utilize the `time_limit` parameter of the `stream` event. This parameter sets a time limit on processing each user's stream. In a multi-user setting, such as on Spaces, we'll stop processing the current user's stream after this period and move on to the next.

We'll also apply custom CSS to center the webcam and slider on the page.

```python
import gradio as gr
from gradio_webrtc import WebRTC

# `None` is fine for local testing; when deploying to a cloud provider,
# pass a configuration that includes TURN servers (see the Twilio note above).
rtc_configuration = None

css = """.my-group {max-width: 600px !important; max-height: 600px !important;}
.my-column {display: flex !important; justify-content: center !important; align-items: center !important;}"""

with gr.Blocks(css=css) as demo:
    gr.HTML(
        """
    <h1 style='text-align: center'>
    YOLOv10 Webcam Stream (Powered by WebRTC ⚡️)
    </h1>
    """
    )
    with gr.Column(elem_classes=["my-column"]):
        with gr.Group(elem_classes=["my-group"]):
            image = WebRTC(label="Stream", rtc_configuration=rtc_configuration)
            conf_threshold = gr.Slider(
                label="Confidence Threshold",
                minimum=0.0,
                maximum=1.0,
                step=0.05,
                value=0.30,
            )

        image.stream(
            fn=detection, inputs=[image, conf_threshold], outputs=[image], time_limit=10
        )

if __name__ == "__main__":
    demo.launch()
```

|

The Gradio Demo

|

https://gradio.app/guides/object-detection-from-webcam-with-webrtc

|

Streaming - Object Detection From Webcam With Webrtc Guide

|

Our app is hosted on Hugging Face Spaces [here](https://huggingface.co/spaces/freddyaboulton/webrtc-yolov10n).

You can use this app as a starting point to build real-time image applications with Gradio. Don't hesitate to open issues in the space or in the [WebRTC component GitHub repo](https://github.com/freddyaboulton/gradio-webrtc) if you have any questions or encounter problems.

|

Conclusion

|

https://gradio.app/guides/object-detection-from-webcam-with-webrtc

|

Streaming - Object Detection From Webcam With Webrtc Guide

|

The frontend code should have, at minimum, the following files:

* `Index.svelte`: This is the main export and where your component's layout and logic should live.

* `Example.svelte`: This is where the example view of the component is defined.

Feel free to add additional files and subdirectories.

If you want to export any additional modules, remember to add them to the `exports` field of the `package.json` file:

```json
"exports": {
  ".": "./Index.svelte",
  "./example": "./Example.svelte",
  "./package.json": "./package.json"
},
```

|

The directory structure

|

https://gradio.app/guides/frontend

|

Custom Components - Frontend Guide

|

Your component should expose the following props that will be passed down from the parent Gradio application.

```typescript
import type { LoadingStatus } from "@gradio/statustracker";
import type { Gradio } from "@gradio/utils";

export let gradio: Gradio<{
  event_1: never;
  event_2: never;
}>;

export let elem_id = "";
export let elem_classes: string[] = [];
export let scale: number | null = null;
export let min_width: number | undefined = undefined;
export let loading_status: LoadingStatus | undefined = undefined;
export let mode: "static" | "interactive";
```

* `elem_id` and `elem_classes` allow Gradio app developers to target your component with custom CSS and JavaScript from the Python `Blocks` class.

* `scale` and `min_width` allow Gradio app developers to control how much space your component takes up in the UI.

* `loading_status` is used to display a loading status over the component when it is the output of an event.

* `mode` is how the parent Gradio app tells your component whether the `interactive` or `static` version should be displayed.

* `gradio`: The `gradio` object is created by the parent Gradio app. It stores some application-level configuration that will be useful in your component, like internationalization. You must use it to dispatch events from your component.

A minimal `Index.svelte` file would look like:

```svelte
<script lang="ts">
  import type { LoadingStatus } from "@gradio/statustracker";
  import { Block } from "@gradio/atoms";
  import { StatusTracker } from "@gradio/statustracker";
  import type { Gradio } from "@gradio/utils";

  export let gradio: Gradio<{
    event_1: never;
    event_2: never;
  }>;

  export let value = "";
  export let elem_id = "";
  export let elem_classes: string[] = [];
  export let scale: number | null = null;
  export let min_width: number | undefined = undefined;
  export let loading_status: LoadingStatus | undefined = undefined;
  export let mode: "static" | "interactive";
</script>

<Block
  visible={true}
  {elem_id}
  {elem_classes}
  {scale}
  {min_width}
  allow_overflow={false}
  padding={true}
>
  {#if loading_status}
    <StatusTracker
      autoscroll={gradio.autoscroll}
      i18n={gradio.i18n}
      {...loading_status}
    />
  {/if}
  <p>{value}</p>
</Block>
```

|

The Index.svelte file

|

https://gradio.app/guides/frontend

|

Custom Components - Frontend Guide

|

The `Example.svelte` file should expose the following props:

```typescript

export let value: string;

export let type: "gallery" | "table";

export let selected = false;

export let index: number;

```

* `value`: The example value that should be displayed.

* `type`: This is a variable that can be either `"gallery"` or `"table"` depending on how the examples are displayed. The `"gallery"` form is used when the examples correspond to a single input component, while the `"table"` form is used when a user has multiple input components, and the examples need to populate all of them.

* `selected`: You can also adjust how the examples are displayed if a user "selects" a particular example by using the selected variable.

* `index`: The current index of the selected value.

* Any additional props your "non-example" component takes!

This is the `Example.svelte` file for the core `Radio` component:

```svelte
<script lang="ts">
  export let value: string;
  export let type: "gallery" | "table";
  export let selected = false;
</script>

<div
  class:table={type === "table"}
  class:gallery={type === "gallery"}
  class:selected
>
  {value}
</div>

<style>
  .gallery {
    padding: var(--size-1) var(--size-2);
  }
</style>
```

|

The Example.svelte file

|

https://gradio.app/guides/frontend

|

Custom Components - Frontend Guide

|

If your component deals with files, these files **should** be uploaded to the backend server.

The `@gradio/client` npm package provides the `upload` and `prepare_files` utility functions to help you do this.

The `prepare_files` function will convert the browser's `File` datatype to gradio's internal `FileData` type.

You should use the `FileData` data in your component to keep track of uploaded files.

The `upload` function will upload an array of `FileData` values to the server.

Here's an example of loading files from an `<input>` element when its value changes.

```svelte
<script lang="ts">
  import { tick } from "svelte";
  import { upload, prepare_files, type FileData } from "@gradio/client";

  export let root: string;
  export let value;
  // Whether to keep only the first selected file ("single") or all of them
  export let file_count: "single" | "multiple" = "multiple";

  let uploaded_files;

  async function handle_upload(file_data: FileData[]): Promise<void> {
    await tick();
    uploaded_files = await upload(file_data, root);
  }

  async function loadFiles(files: FileList): Promise<void> {
    let _files: File[] = Array.from(files);
    if (!files.length) {
      return;
    }
    if (file_count === "single") {
      _files = [files[0]];
    }
    let file_data = await prepare_files(_files);
    await handle_upload(file_data);
  }

  async function loadFilesFromUpload(e: Event): Promise<void> {
    const target = e.target as HTMLInputElement;
    if (!target.files) return;
    await loadFiles(target.files);
  }
</script>

<input
  type="file"
  on:change={loadFilesFromUpload}
  multiple={true}
/>
```

The component exposes a prop named `root`. This is passed down by the parent Gradio app, and it represents the base URL that the files will be uploaded to and fetched from.

For WASM support, you should get the upload function from the `Context` and pass that as the third parameter of the `upload` function.


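```svelte
<script lang="ts">
	import { getContext, tick } from "svelte";
	import { upload, type FileData, type upload_files } from "@gradio/client";

	export let root: string;

	// Upload function provided by the parent Gradio app (needed for WASM support)
	const upload_fn = getContext<typeof upload_files>("upload_files");

	async function handle_upload(file_data: FileData[]): Promise<void> {
		await tick();
		await upload(file_data, root, upload_fn);
	}
</script>
```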

|

Handling Files

|

https://gradio.app/guides/frontend

|

Custom Components - Frontend Guide

|

Most of Gradio's frontend components are published on [npm](https://www.npmjs.com/), the JavaScript package repository.

This means that you can use them to save yourself time while incorporating common patterns in your component, like uploading files.

For example, the `@gradio/upload` package has `Upload` and `ModifyUpload` components for properly uploading files to the Gradio server.

Here is how you can use them to create a user interface to upload and display PDF files.

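```svelte
<script lang="ts">
	import { tick } from "svelte";
	import type { FileData } from "@gradio/client";
	import { Upload, ModifyUpload } from "@gradio/upload";
	import { Empty, UploadText, BlockLabel } from "@gradio/atoms";
	import { File } from "@gradio/icons";

	// Props passed down by the Gradio app
	export let value: FileData | null = null;
	export let label: string;
	export let interactive: boolean;
	export let root: string;
	export let height = 500;
	export let gradio; // provides i18n and dispatch()

	async function handle_load({ detail }: CustomEvent<FileData>): Promise<void> {
		value = detail;
		await tick();
		gradio.dispatch("change");
	}

	function handle_clear(): void {
		value = null;
		gradio.dispatch("change");
	}
</script>

<BlockLabel Icon={File} label={label || "PDF"} />
{#if value === null && interactive}
	<Upload filetype="application/pdf" on:load={handle_load} {root}>
		<UploadText type="file" i18n={gradio.i18n} />
	</Upload>
{:else if value !== null}
	{#if interactive}
		<ModifyUpload i18n={gradio.i18n} on:clear={handle_clear} />
	{/if}
	<iframe title={value.orig_name || "PDF"} src={value.url} height="{height}px" width="100%"></iframe>
{:else}
	<Empty size="large"><File /></Empty>
{/if}
```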

You can also combine existing Gradio components to create entirely unique experiences, like rendering a gallery of chatbot conversations.

The possibilities are endless; please read the documentation on our JavaScript packages [here](https://gradio.app/main/docs/js).

We'll be adding more packages and documentation over the coming weeks!

|

Leveraging Existing Gradio Components

|

https://gradio.app/guides/frontend

|

Custom Components - Frontend Guide

|

You can explore our component library via Storybook. You'll be able to interact with our components and see them in their various states.

For those interested in design customization, we provide the CSS variables consisting of our color palette, radii, spacing, and the icons we use - so you can easily match up your custom component with the style of our core components. This Storybook will be regularly updated with any new additions or changes.

[Storybook Link](https://gradio.app/main/docs/js/storybook)

|

Matching Gradio Core's Design System

|

https://gradio.app/guides/frontend

|

Custom Components - Frontend Guide

|

If you want to make use of the vast vite ecosystem, you can use the `gradio.config.js` file to configure your component's build process. This allows you to make use of tools like tailwindcss, mdsvex, and more.

Currently, it is possible to configure the following:

Vite options:

- `plugins`: A list of vite plugins to use.

Svelte options:

- `preprocess`: A list of svelte preprocessors to use.

- `extensions`: A list of file extensions to compile to `.svelte` files.

- `build.target`: The target to build for, this may be necessary to support newer javascript features. See the [esbuild docs](https://esbuild.github.io/api/target) for more information.

The `gradio.config.js` file should be placed in the root of your component's `frontend` directory. A default config file is created for you when you create a new component, but if one doesn't exist you can create your own and use it to customize your component's build process.

Example for a Vite plugin

Custom components can use Vite plugins to customize the build process. Check out the [Vite Docs](https://vitejs.dev/guide/using-plugins.html) for more information.

Here we configure [TailwindCSS](https://tailwindcss.com), a utility-first CSS framework. Setup is easiest using the version 4 prerelease.

```bash

npm install tailwindcss@next @tailwindcss/vite@next

```

In `gradio.config.js`:

```typescript

import tailwindcss from "@tailwindcss/vite";

export default {

plugins: [tailwindcss()]

};

```

Then create a `style.css` file with the following content:

```css

@import "tailwindcss";

```

Import this file into `Index.svelte`. Note that you need to import the CSS file containing `@import`; you cannot just use a `<style>` tag with `@import` inside it.

```svelte

<script lang="ts">

[...]

import "./style.css";

[...]

</script>

```


Example for Svelte options

In `gradio.config.js` you can also specify some Svelte options to apply to the Svelte compilation. In this example we will add support for [`mdsvex`](https://mdsvex.pngwn.io), a Markdown preprocessor for Svelte.

In order to do this we will need to add a [Svelte Preprocessor](https://svelte.dev/docs/svelte-compiler#preprocess) to the `svelte` object in `gradio.config.js` and configure the [`extensions`](https://github.com/sveltejs/vite-plugin-svelte/blob/HEAD/docs/config.md#config-file) field. Other options are not currently supported.

First, install the `mdsvex` plugin:

```bash

npm install mdsvex

```

Then add the following to `gradio.config.js`:

```typescript

import { mdsvex } from "mdsvex";

export default {

svelte: {

preprocess: [

mdsvex()

],

extensions: [".svelte", ".svx"]

}

};

```

Now we can create `mdsvex` documents in our component's `frontend` directory and they will be compiled to `.svelte` files.

```md

<!-- HelloWorld.svx -->

<script lang="ts">

import { Block } from "@gradio/atoms";

export let title = "Hello World";

</script>

<Block label="Hello World">

{title}

This is a markdown file.

</Block>

```

We can then use the `HelloWorld.svx` file in our components:

```svelte

<script lang="ts">

import HelloWorld from "./HelloWorld.svx";

</script>

<HelloWorld />

```

|

Custom configuration

|

https://gradio.app/guides/frontend

|

Custom Components - Frontend Guide

|

You now know how to create delightful frontends for your components!

|

Conclusion

|

https://gradio.app/guides/frontend

|

Custom Components - Frontend Guide

|

By default, all custom component packages are called `gradio_<component-name>` where `component-name` is the name of the component's python class in lowercase.

As an example, let's walk through changing the name of a component from `gradio_mytextbox` to `supertextbox`.

1. Modify the `name` in the `pyproject.toml` file.

```toml

[project]

name = "supertextbox"

```

2. Change all occurrences of `gradio_<component-name>` in `pyproject.toml` to `<component-name>`

```toml

[tool.hatch.build]

artifacts = ["/backend/supertextbox/templates", "*.pyi"]

[tool.hatch.build.targets.wheel]

packages = ["/backend/supertextbox"]

```

3. Rename the `gradio_<component-name>` directory in `backend/` to `<component-name>`

```bash

mv backend/gradio_mytextbox backend/supertextbox

```

Tip: Remember to change the import statement in `demo/app.py`!

|

The Package Name

|

https://gradio.app/guides/configuration

|

Custom Components - Configuration Guide

|

By default, only the custom component python class is a top level export.

This means that when users type `from gradio_<component-name> import ...`, the only class that will be available is the custom component class.

To add more classes as top level exports, modify the `__all__` property in `__init__.py`

```python

from .mytextbox import MyTextbox

from .mytextbox import AdditionalClass, additional_function

__all__ = ['MyTextbox', 'AdditionalClass', 'additional_function']

```
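To see what `__all__` changes in practice, here is a small stdlib-only sketch. It builds a stand-in for `__init__.py` in memory and shows that a star import only picks up the names listed in `__all__`. The module name `gradio_mytextbox_demo` and the function `internal_utility` are hypothetical. Note that `__all__` governs star imports and what tools treat as the public API. It is not access control, so an explicit `from ... import internal_utility` would still work.

```python
import sys
import types

# In-memory stand-in for a package's __init__.py (hypothetical names)
source = '''
class MyTextbox: ...
class AdditionalClass: ...
def additional_function():
    return "extra"
def internal_utility():
    return "not exported"
__all__ = ["MyTextbox", "AdditionalClass", "additional_function"]
'''
module = types.ModuleType("gradio_mytextbox_demo")
exec(source, module.__dict__)
sys.modules[module.__name__] = module  # make it importable by name

# A star import pulls in only the names listed in __all__
namespace: dict = {}
exec(f"from {module.__name__} import *", namespace)
star_exports = sorted(name for name in namespace if not name.startswith("__"))
print(star_exports)  # ['AdditionalClass', 'MyTextbox', 'additional_function']
```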

|

Top Level Python Exports

|

https://gradio.app/guides/configuration

|

Custom Components - Configuration Guide

|

You can add python dependencies by modifying the `dependencies` key in `pyproject.toml`

```toml
# PIL is installed from the "pillow" distribution on PyPI
dependencies = ["gradio", "numpy", "pillow"]
```

Tip: Remember to run `gradio cc install` when you add dependencies!

|

Python Dependencies

|

https://gradio.app/guides/configuration

|

Custom Components - Configuration Guide

|

You can add JavaScript dependencies by modifying the `"dependencies"` key in `frontend/package.json`

```json

"dependencies": {

"@gradio/atoms": "0.2.0-beta.4",

"@gradio/statustracker": "0.3.0-beta.6",

"@gradio/utils": "0.2.0-beta.4",

"your-npm-package": "<version>"

}

```

|

Javascript Dependencies

|

https://gradio.app/guides/configuration

|

Custom Components - Configuration Guide

|

By default, the CLI will place the Python code in `backend` and the JavaScript code in `frontend`.

We don't recommend changing this structure, because the default layout makes it easy for a potential contributor to look at your source code and know where everything is.

However, if you do want to change it, here is what you need to do:

1. Place the Python code in the subdirectory of your choosing. Remember to modify the `[tool.hatch.build]` and `[tool.hatch.build.targets.wheel]` sections in `pyproject.toml` to match!

2. Place the JavaScript code in the subdirectory of your choosing.

3. Add the `FRONTEND_DIR` property on the component python class. It must be the relative path from the file where the class is defined to the location of the JavaScript directory.

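```python
from gradio.components import Component

class SuperTextbox(Component):
    # Relative path from this file to the directory holding the JavaScript code
    FRONTEND_DIR = "../../frontend/"
```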

The JavaScript and Python directories must be under the same common directory!
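To check a `FRONTEND_DIR` value before building, you can resolve it the way the rule above describes: relative to the directory of the file that defines the class. This is a stdlib sketch under that assumption. The project layout and the helper names `resolve_frontend_dir` and `shares_root` are hypothetical.

```python
import posixpath

def resolve_frontend_dir(class_file: str, frontend_dir: str) -> str:
    """Resolve FRONTEND_DIR relative to the directory of the file defining the class."""
    base_dir = posixpath.dirname(class_file)
    return posixpath.normpath(posixpath.join(base_dir, frontend_dir))

def shares_root(backend_dir: str, frontend_dir: str, root: str) -> bool:
    """Check that both directories sit under the same common project directory."""
    return all(posixpath.commonpath([root, d]) == root for d in (backend_dir, frontend_dir))

# Hypothetical layout: supertextbox/backend/supertextbox/supertextbox.py
class_file = "supertextbox/backend/supertextbox/supertextbox.py"
frontend = resolve_frontend_dir(class_file, "../../frontend/")
print(frontend)  # supertextbox/frontend
print(shares_root(posixpath.dirname(class_file), frontend, "supertextbox"))  # True
```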

|

Directory Structure

|

https://gradio.app/guides/configuration

|

Custom Components - Configuration Guide

|

Sticking to the defaults will make it easy for others to understand and contribute to your custom component.

After all, the beauty of open source is that anyone can help improve your code!

But if you ever need to deviate from the defaults, you know how!

|

Conclusion

|

https://gradio.app/guides/configuration

|

Custom Components - Configuration Guide

|

You will need to have:

* Python 3.10+ (<a href="https://www.python.org/downloads/" target="_blank">install here</a>)

* pip 21.3+ (`python -m pip install --upgrade pip`)

* Node.js 20+ (<a href="https://nodejs.dev/en/download/package-manager/" target="_blank">install here</a>)

* npm 9+ (<a href="https://docs.npmjs.com/downloading-and-installing-node-js-and-npm/" target="_blank">install here</a>)

* Gradio 5+ (`pip install --upgrade gradio`)

|

Installation

|

https://gradio.app/guides/custom-components-in-five-minutes

|

Custom Components - Custom Components In Five Minutes Guide

|

The Custom Components workflow consists of 4 steps: create, dev, build, and publish.

1. create: creates a template for you to start developing a custom component.

2. dev: launches a development server with a sample app & hot reloading allowing you to easily develop your custom component

3. build: builds a Python package containing your custom component's Python and JavaScript code -- this makes things official!

4. publish: uploads your package to [PyPI](https://pypi.org/) and/or a sample app to [Hugging Face Spaces](https://hf.co/spaces).

Each of these steps is done via the Custom Component CLI. You can invoke it with `gradio cc` or `gradio component`.

Tip: Run `gradio cc --help` to get a help menu of all available commands. There are some commands that are not covered in this guide. You can also append `--help` to any command name to bring up a help page for that command, e.g. `gradio cc create --help`.

|

The Workflow

|

https://gradio.app/guides/custom-components-in-five-minutes

|

Custom Components - Custom Components In Five Minutes Guide

|

Bootstrap a new template by running the following in any working directory:

```bash

gradio cc create MyComponent --template SimpleTextbox

```

Instead of `MyComponent`, give your component any name.

Instead of `SimpleTextbox`, you can use any Gradio component as a template. `SimpleTextbox` is a special, stripped-down version of the `Textbox` component, which makes it particularly useful when creating your first custom component.

Some other components that are good if you are starting out: `SimpleDropdown`, `SimpleImage`, or `File`.

Tip: Run `gradio cc show` to get a list of available component templates.

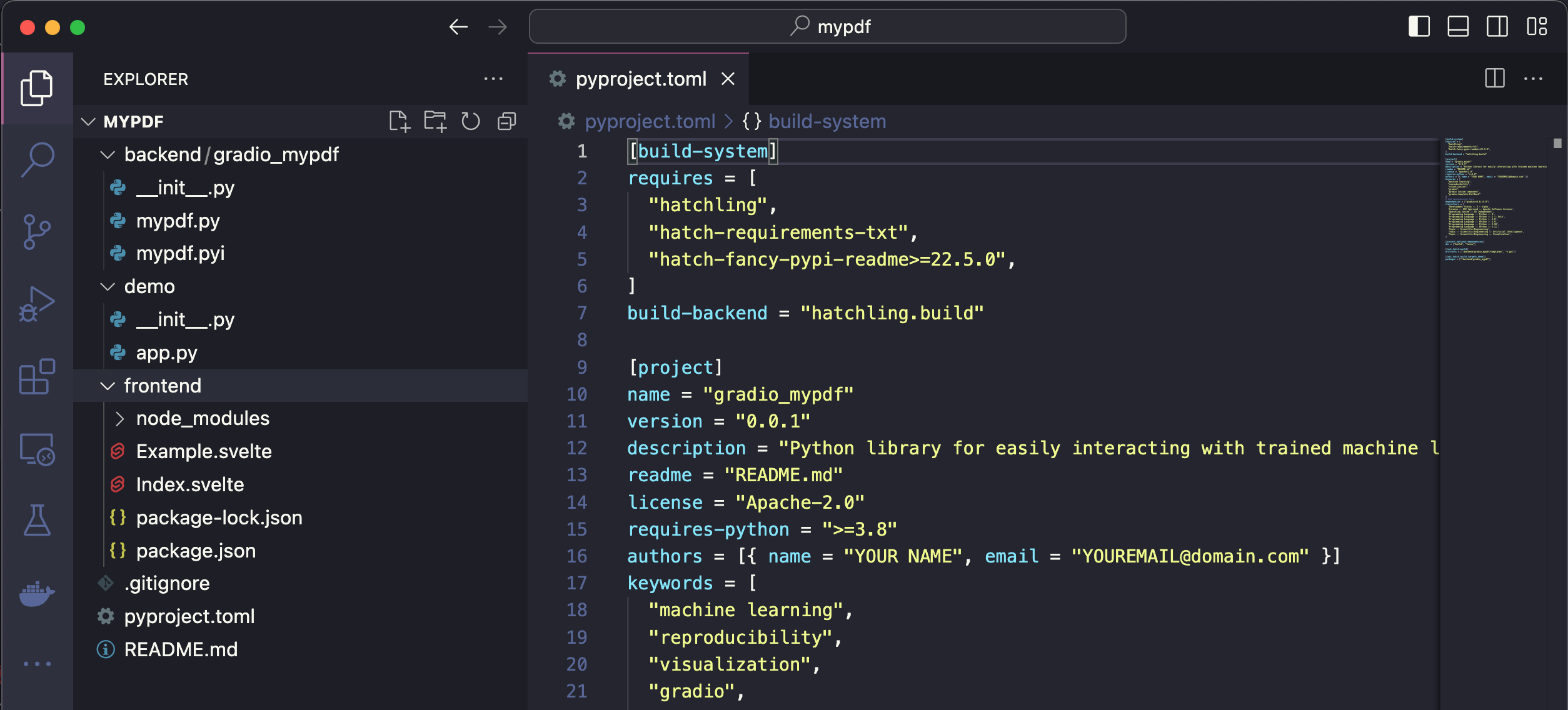

The `create` command will:

1. Create a directory with your component's name in lowercase with the following structure:

```directory

- backend/ <- The python code for your custom component

- frontend/ <- The javascript code for your custom component

- demo/ <- A sample app using your custom component. Modify this to develop your component!

- pyproject.toml <- Used to build the package and specify package metadata.

```

2. Install the component in development mode

Each of the directories will have the code you need to get started developing!

|

1. create

|

https://gradio.app/guides/custom-components-in-five-minutes

|

Custom Components - Custom Components In Five Minutes Guide

|

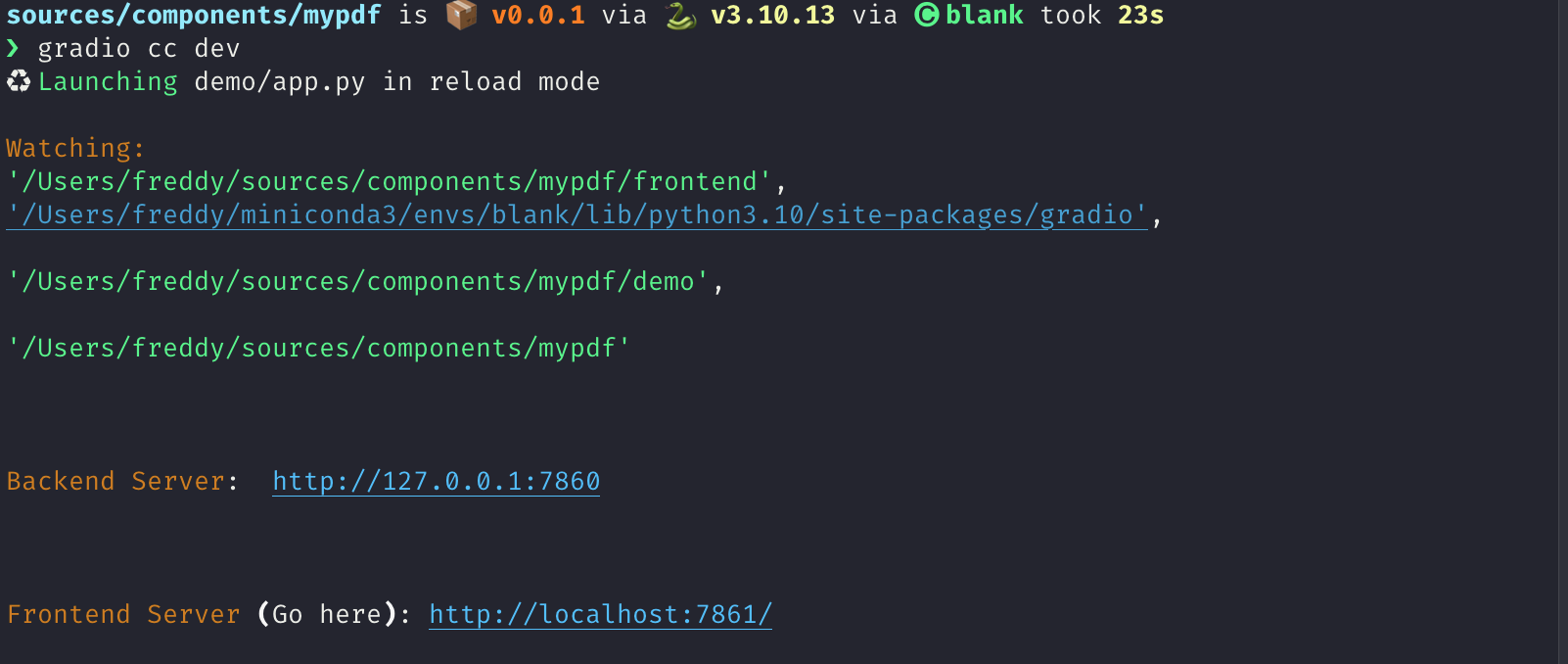

Once you have created your new component, you can start a development server by entering the directory and running

```bash

gradio cc dev

```

You'll see several lines that are printed to the console.

The most important one is the one that says:

> Frontend Server (Go here): http://localhost:7861/

The port number might be different for you.

Click on that link to launch the demo app in hot reload mode.

Now you can start making changes to the backend and frontend, and you'll see the results reflected live in the sample app!

We'll go through a real example in a later guide.

Tip: You don't have to run dev mode from your custom component directory. The first argument to `dev` mode is the path to the directory. By default it uses the current directory.

|

2. dev

|

https://gradio.app/guides/custom-components-in-five-minutes

|

Custom Components - Custom Components In Five Minutes Guide

|

Once you are satisfied with your custom component's implementation, you can `build` it to use it outside of the development server.

From your component directory, run:

```bash

gradio cc build

```

This will create a `tar.gz` and `.whl` file in a `dist/` subdirectory.

If you or anyone else installs that `.whl` file (`pip install <path-to-whl>`), they will be able to use your custom component in any Gradio app!

The `build` command will also generate documentation for your custom component. This takes the form of an interactive space and a static `README.md`. You can disable this by passing `--no-generate-docs`. You can read more about the documentation generator in [the dedicated guide](https://gradio.app/guides/documenting-custom-components).

|

3. build

|

https://gradio.app/guides/custom-components-in-five-minutes

|

Custom Components - Custom Components In Five Minutes Guide

|

Right now, your package is only available in a `.whl` file on your computer.

You can share that file with the world with the `publish` command!

Simply run the following command from your component directory:

```bash

gradio cc publish

```

This will guide you through the following process:

1. Upload your distribution files to PyPI. This makes it easier to upload the demo to Hugging Face Spaces; otherwise, your package must be at a publicly available URL. If you decide to upload to PyPI, you will need a PyPI username and password. You can get one [here](https://pypi.org/account/register/).

2. Upload a demo of your component to Hugging Face Spaces. This is also optional.

Here is an example of what publishing looks like:

<video autoplay muted loop>

<source src="https://gradio-builds.s3.amazonaws.com/assets/text_with_attachments_publish.mov" type="video/mp4" />

</video>

|

4. publish

|

https://gradio.app/guides/custom-components-in-five-minutes

|

Custom Components - Custom Components In Five Minutes Guide

|

Now that you know the high-level workflow of creating custom components, you can go in depth in the next guides!

After reading the guides, check out this [collection](https://huggingface.co/collections/gradio/custom-components-65497a761c5192d981710b12) of custom components on the Hugging Face Hub so you can learn from others' code.

Tip: If you want to start off from someone else's custom component, see this [guide](./frequently-asked-questions#do-i-always-need-to-start-my-component-from-scratch).

|

Conclusion

|

https://gradio.app/guides/custom-components-in-five-minutes

|

Custom Components - Custom Components In Five Minutes Guide

|

All components inherit from one of three classes: `Component`, `FormComponent`, or `BlockContext`.

You need to inherit from one so that your component behaves like all other gradio components.

When you start from a template with `gradio cc create --template`, you don't need to worry about which one to choose since the template uses the correct one.

For completeness, and in the event that you need to make your own component from scratch, we explain what each class is for.

* `FormComponent`: Use this when you want your component to be grouped together in the same `Form` layout with other `FormComponents`. The `Slider`, `Textbox`, and `Number` components are all `FormComponents`.

* `BlockContext`: Use this when you want to place other components "inside" your component. This enables the `with MyComponent() as component:` syntax.

* `Component`: Use this for all other cases.

Tip: If your component supports streaming output, inherit from the `StreamingOutput` class.

Tip: If you inherit from `BlockContext`, you also need to set the metaclass to be `ComponentMeta`. See example below.

```python
from gradio.blocks import BlockContext
from gradio.component_meta import ComponentMeta
from gradio_client.documentation import document

@document()
class Row(BlockContext, metaclass=ComponentMeta):
    pass
```

|

Which Class to Inherit From

|

https://gradio.app/guides/backend

|

Custom Components - Backend Guide

|

When you inherit from any of these classes, the following methods must be implemented.

Otherwise the Python interpreter will raise an error when you instantiate your component!

`preprocess` and `postprocess`

Explained in the [Key Concepts](./key-component-concepts#the-value-and-how-it-is-preprocessed-postprocessed) guide.

They handle the conversion from the data sent by the frontend to the format expected by the Python function, and from the function's return value back to the format the frontend expects.

```python
from typing import Any

def preprocess(self, x: Any) -> Any:
    """
    Convert from the web-friendly (typically JSON) value in the frontend to the format expected by the python function.
    """
    return x

def postprocess(self, y):
    """
    Convert from the data returned by the python function to the web-friendly (typically JSON) value expected by the frontend.
    """
    return y
```
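As a minimal illustration of the two conversions, here is a plain-Python sketch of a hypothetical `TagsBox` component. Its frontend value is a comma-separated string and its Python value is a list of strings. It does not subclass Gradio's `Component`, so it only shows the shape of `preprocess` and `postprocess`, not a working custom component.

```python
from __future__ import annotations

from typing import Any

class TagsBox:
    """Hypothetical component: frontend sends "a, b", Python code works with ["a", "b"]."""

    def preprocess(self, x: Any) -> list[str]:
        # Frontend -> Python: split on commas and drop empty entries
        if not x:
            return []
        return [tag.strip() for tag in x.split(",") if tag.strip()]

    def postprocess(self, y: list[str] | None) -> str:
        # Python -> frontend: join the list back into a display string
        return ", ".join(y or [])

box = TagsBox()
tags = box.preprocess("python, gradio , ,svelte")
print(tags)                   # ['python', 'gradio', 'svelte']
print(box.postprocess(tags))  # python, gradio, svelte
```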

`process_example`

Takes in the original Python value and returns the modified value that should be displayed in the examples preview in the app.

If not provided, the `.postprocess()` method is used instead. Let's look at the following example from the `SimpleDropdown` component.

```python
def process_example(self, input_data):
    return next((c[0] for c in self.choices if c[1] == input_data), None)
```

Since `self.choices` is a list of tuples corresponding to (`display_name`, `value`), this converts the value that a user provides to the display value (or if the value is not present in `self.choices`, it is converted to `None`).
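The lookup can be exercised without Gradio. The sketch below wraps the same expression in a hypothetical `ToyDropdown` class with made-up choices, to show both the matching case and the `None` fallback.

```python
from __future__ import annotations

class ToyDropdown:
    """Hypothetical stand-in for SimpleDropdown: choices are (display_name, value) pairs."""

    def __init__(self, choices: list[tuple[str, str]]):
        self.choices = choices

    def process_example(self, input_data):
        # Same lookup as the SimpleDropdown snippet: value -> display name, else None
        return next((c[0] for c in self.choices if c[1] == input_data), None)

dropdown = ToyDropdown([("Red", "#ff0000"), ("Blue", "#0000ff")])
print(dropdown.process_example("#0000ff"))  # Blue
print(dropdown.process_example("#00ff00"))  # None
```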

`api_info`

A JSON-schema representation of the value that the `preprocess` expects.

This powers API usage via the Gradio clients.

You do **not** need to implement this yourself if your component specifies a `data_model`.

The `data_model` is explained in the following section.

```python
from typing import Any

def api_info(self) -> dict[str, Any]:
    """
    A JSON-schema representation of the value that the `preprocess` expects and the `postprocess` returns.
    """
    pass
```
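To give a rough idea of what `api_info` might return, the sketch below assumes a hypothetical component whose `preprocess` expects a JSON array of strings. The `conforms` helper is also hypothetical and only understands the `type` and `items` keywords. It is not a JSON-schema validator, and it is not how the Gradio clients consume the schema.

```python
from __future__ import annotations

import json
from typing import Any

def api_info() -> dict[str, Any]:
    # Hypothetical schema: preprocess expects a JSON array of strings
    return {"type": "array", "items": {"type": "string"}}

def conforms(value: Any, schema: dict[str, Any]) -> bool:
    """Tiny checker for the 'type'/'items' keywords only."""
    kind = schema.get("type")
    if kind == "array":
        return isinstance(value, list) and all(
            conforms(item, schema.get("items", {})) for item in value
        )
    if kind == "string":
        return isinstance(value, str)
    return True  # keywords this sketch does not handle are accepted

schema = api_info()
print(json.dumps(schema))                # {"type": "array", "items": {"type": "string"}}
print(conforms(["freddy", "pete"], schema))  # True
print(conforms(["freddy", 1], schema))       # False
```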


`example_payload`

An example payload for your component, e.g. something that can be passed into the `.preprocess()` method of your component. The example input is displayed in the `View API` page of a Gradio app that uses your custom component.

Must be JSON-serializable. If your component expects a file, it is best to use a publicly accessible URL.

```python
from typing import Any

def example_payload(self) -> Any:
    """
    The example inputs for this component for API usage. Must be JSON-serializable.
    """
    pass
```
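Because `example_payload` must be JSON-serializable, a quick sanity check is to pass it through `json.dumps`. This is a minimal sketch. The payload key and the URL are placeholders, not the exact format Gradio's `FileData` uses.

```python
import json
from typing import Any

def is_json_serializable(payload: Any) -> bool:
    """Return True if json.dumps accepts the payload."""
    try:
        json.dumps(payload)
    except (TypeError, ValueError):
        return False
    return True

# Hypothetical payload for a file-based component: point at a public URL (placeholder)
file_payload = {"path": "https://example.com/sample.pdf"}
print(is_json_serializable(file_payload))              # True
print(is_json_serializable({"data": b"\x00\x01"}))     # False: bytes are not JSON
```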

`example_value`

An example value for your component, e.g. something that can be passed into the `.postprocess()` method of your component. This is used as the example value in the default app that is created in custom component development.

```python
from typing import Any

def example_value(self) -> Any:
    """
    The example value for this component, in the format that `.postprocess()` accepts.
    """
    pass
```
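The two methods differ in which side of the conversion they feed. Continuing the hypothetical comma-separated `TagsBox` idea (plain Python, no Gradio import), `example_payload` is in the format `preprocess` receives, and `example_value` is in the format `postprocess` receives. Each should therefore round-trip through the matching method.

```python
from __future__ import annotations

from typing import Any

class TagsBox:
    """Hypothetical component: frontend value is a comma-separated string, Python value a list."""

    def preprocess(self, x: str) -> list[str]:
        return [t.strip() for t in x.split(",") if t.strip()]

    def postprocess(self, y: list[str]) -> str:
        return ", ".join(y)

    def example_payload(self) -> Any:
        # Frontend/API format: what preprocess() receives
        return "python, gradio"

    def example_value(self) -> Any:
        # Python format: what postprocess() receives
        return ["python", "gradio"]

box = TagsBox()
print(box.preprocess(box.example_payload()))  # ['python', 'gradio']
print(box.postprocess(box.example_value()))   # python, gradio
```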

`flag`

Write the component's value to a format that can be stored in the `csv` or `json` file used for flagging.

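You do **not** need to implement this yourself if your component specifies a `data_model`.

The `data_model` is explained in the following section.

```python
from __future__ import annotations

from pathlib import Path
from typing import Any

from gradio.data_classes import GradioDataModel

def flag(self, x: Any | GradioDataModel, flag_dir: str | Path = "") -> str:
    pass
```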

`read_from_flag`

Convert from the format stored in the `csv` or `json` file used for flagging to the component's python `value`.

You do **not** need to implement this yourself if your component specifies a `data_model`.

The `data_model` is explained in the following section.

```python
from __future__ import annotations

from typing import Any

from gradio.data_classes import GradioDataModel

def read_from_flag(
    self,
    x: Any,
) -> GradioDataModel | Any:
    """
    Convert the data from the csv or jsonl file into the component state.
    """
    return x
```
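`flag` and `read_from_flag` are meant to be inverses. As a minimal sketch, assuming a value that is plain JSON data with no files, the hypothetical functions below serialize it with `json`, store it in a CSV cell, and read it back. A file-based component would also need to copy files into `flag_dir`, which this sketch ignores.

```python
from __future__ import annotations

import csv
import io
import json
from typing import Any

def flag(x: Any, flag_dir: str = "") -> str:
    # Serialize the value into a string that fits in one CSV cell (flag_dir unused here)
    return json.dumps(x)

def read_from_flag(x: Any) -> Any:
    # Inverse of flag(): turn the stored string back into the Python value
    return json.loads(x)

value = {"names": ["freddy", "pete"]}
buffer = io.StringIO()
csv.writer(buffer).writerow([flag(value)])  # the csv module quotes the JSON safely
buffer.seek(0)
stored_cell = next(csv.reader(buffer))[0]
print(read_from_flag(stored_cell) == value)  # True
```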


|

The methods you need to implement

|

https://gradio.app/guides/backend

|

Custom Components - Backend Guide

|

The `data_model` is how you define the format in which your component's value is stored in the frontend.

It specifies the data format your `preprocess` method expects and the format the `postprocess` method returns.

It is not necessary to define a `data_model` for your component, but it greatly simplifies the process of creating a custom component.

If you define a `data_model`, you only need to implement four methods: `preprocess`, `postprocess`, `example_payload`, and `example_value`!

You define a `data_model` by defining a [pydantic model](https://docs.pydantic.dev/latest/concepts/models/#basic-model-usage) that inherits from either `GradioModel` or `GradioRootModel`.

This is best explained with an example. Let's look at the core `Video` component, which stores the video data as a JSON object with two keys `video` and `subtitles` which point to separate files.

```python
from typing import Optional

from gradio.components import Component
from gradio.data_classes import FileData, GradioModel

class VideoData(GradioModel):
    video: FileData
    subtitles: Optional[FileData] = None

class Video(Component):
    data_model = VideoData
```

By adding these four lines of code, your component automatically implements the methods needed for API usage, the flagging methods, and example caching methods!

It also has the added benefit of self-documenting your code.

Anyone who reads your component code will know exactly the data it expects.
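To visualize the JSON shape that `VideoData` describes, here is an analogy that uses the standard-library `dataclasses` module instead of pydantic, so it runs without Gradio installed. `FileRef` is a hypothetical stand-in for `FileData` with only a `path` field; the real `FileData` carries more fields.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass
from typing import Optional

@dataclass
class FileRef:
    # Hypothetical stand-in for gradio's FileData: only a "path" field here
    path: str

@dataclass
class VideoValue:
    # Mirrors the VideoData shape: a required video file and optional subtitles
    video: FileRef
    subtitles: Optional[FileRef] = None

value = VideoValue(video=FileRef(path="clip.mp4"))
print(json.dumps(asdict(value)))
# {"video": {"path": "clip.mp4"}, "subtitles": null}
```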

Tip: If your component expects files to be uploaded from the frontend, you must use the `FileData` model! It will be explained in the following section.

Tip: Read the pydantic docs [here](https://docs.pydantic.dev/latest/concepts/models/#basic-model-usage).

The difference between a `GradioModel` and a `GradioRootModel` is that the `RootModel` will not serialize the data to a dictionary.

For example, the `Names` model will serialize the data to `{'names': ['freddy', 'pete']}` whereas the `NamesRoot` model will serialize it to `['freddy', 'pete']`.


```python
from typing import List

from gradio.data_classes import GradioModel, GradioRootModel

class Names(GradioModel):
    names: List[str]

class NamesRoot(GradioRootModel):
    root: List[str]
```

Even if your component does not expect a "complex" JSON data structure it can be beneficial to define a `GradioRootModel` so that you don't have to worry about implementing the API and flagging methods.

Tip: Use classes from the Python typing library to type your models, e.g. `List` instead of `list`.

|

The `data_model`

|

https://gradio.app/guides/backend

|

Custom Components - Backend Guide

|

If your component expects uploaded files as input, or returns saved files to the frontend, you **MUST** use the `FileData` to type the files in your `data_model`.

When you use the `FileData`:

* Gradio knows that it should allow serving this file to the frontend. Gradio automatically blocks requests to serve arbitrary files in the computer running the server.

* Gradio will automatically place the file in a cache so that duplicate copies of the file don't get saved.

* The client libraries will automatically know that they should upload input files prior to sending the request. They will also automatically download files.

If you do not use the `FileData`, your component will not work as expected!
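The file-serving restriction boils down to an allow-list check. The sketch below is a conceptual model, not Gradio's implementation. The hypothetical `is_servable` function resolves the requested path, collapsing `..` segments, and accepts it only if it lands inside an allowed directory. The allowed directory here is a made-up cache folder under the system temp directory.

```python
from __future__ import annotations

import tempfile
from pathlib import Path

def is_servable(requested: str | Path, allowed_dirs: list[Path]) -> bool:
    """Return True only if the resolved path lives inside one of the allowed directories."""
    target = Path(requested).resolve()
    for allowed in allowed_dirs:
        root = Path(allowed).resolve()
        if target == root or root in target.parents:
            return True
    return False

# Hypothetical cache directory used only for this illustration
cache_dir = Path(tempfile.gettempdir()) / "gradio_cache_demo"
print(is_servable(cache_dir / "abc" / "image.png", [cache_dir]))             # True
print(is_servable(cache_dir / ".." / ".." / "etc" / "passwd", [cache_dir]))  # False
```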

|

Handling Files

|

https://gradio.app/guides/backend

|

Custom Components - Backend Guide

|

The event triggers for your component are defined in the `EVENTS` class attribute.

This is a list that contains the string names of the events.

Adding an event to this list will automatically add a method with that same name to your component!

You can import the `Events` enum from `gradio.events` to access commonly used events in the core gradio components.

For example, the following code will define `text_submit`, `file_upload` and `change` methods in the `MyComponent` class.

```python
from gradio.events import Events
from gradio.components import FormComponent

class MyComponent(FormComponent):
    EVENTS = [
        "text_submit",
        "file_upload",
        Events.change,
    ]
```

Tip: Don't forget to also handle these events in the JavaScript code!
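Conceptually, turning `EVENTS` into methods is a job for a metaclass. The toy `EventMeta` below is a simplified analogy written for illustration, not Gradio's actual machinery. For each name in `EVENTS`, it attaches a method that records a listener. Real Gradio event methods also take `inputs`, `outputs`, and other arguments.

```python
def _make_trigger(event_name):
    def trigger(self, fn):
        # Register fn as a listener for this event, loosely like component.change(fn)
        self.listeners.setdefault(event_name, []).append(fn)
        return fn
    trigger.__name__ = event_name
    return trigger

class EventMeta(type):
    """Toy metaclass: adds one method per name listed in the class's EVENTS."""

    def __new__(mcs, name, bases, namespace):
        cls = super().__new__(mcs, name, bases, namespace)
        for event in namespace.get("EVENTS", []):
            setattr(cls, str(event), _make_trigger(str(event)))
        return cls

class ToyComponent(metaclass=EventMeta):
    EVENTS = ["text_submit", "file_upload", "change"]

    def __init__(self):
        self.listeners = {}

box = ToyComponent()
box.change(lambda value: value.upper())
print(sorted(name for name in ToyComponent.EVENTS if hasattr(box, name)))
# ['change', 'file_upload', 'text_submit']
print(list(box.listeners))  # ['change']
```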

|

Adding Event Triggers To Your Component

|

https://gradio.app/guides/backend

|

Custom Components - Backend Guide

|

Conclusion

|

https://gradio.app/guides/backend

|

Custom Components - Backend Guide

|

|

Every component in Gradio comes in a `static` variant, and most come in an `interactive` version as well.

The `static` version is used when a component is displaying a value, and the user can **NOT** change that value by interacting with it.

The `interactive` version is used when the user is able to change the value by interacting with the Gradio UI.

Let's see some examples:

```python
import gradio as gr

with gr.Blocks() as demo:
    gr.Textbox(value="Hello", interactive=True)
    gr.Textbox(value="Hello", interactive=False)

demo.launch()
```

This will display two textboxes.

The only difference: you'll be able to edit the value of the Gradio component on top, and you won't be able to edit the variant on the bottom (i.e. the textbox will be disabled).

Perhaps a more interesting example is with the `Image` component:

```python
import gradio as gr

with gr.Blocks() as demo:
    gr.Image(interactive=True)
    gr.Image(interactive=False)

demo.launch()
```

The interactive version of the component is much more complex -- you can upload images or snap a picture from your webcam -- while the static version can only be used to display images.

Not every component has a distinct interactive version. For example, the `gr.AnnotatedImage` only appears as a static version since there's no way to interactively change the value of the annotations or the image.

What you need to remember

* Gradio will use the interactive version (if available) of a component if that component is used as the **input** to any event; otherwise, the static version will be used.

* When you design custom components, you **must** accept the boolean interactive keyword in the constructor of your Python class. In the frontend, you **may** accept the `interactive` property, a `bool` which represents whether the component should be static or interactive. If you do not use this property in the frontend, the component will appear the same in interactive or static mode.
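The rule above can be summarized as a small decision function. This is a simplified model, not Gradio's code. In particular, the assumption that an explicit `interactive=True/False` passed to the constructor takes precedence is inferred from the `Textbox` example earlier in this section.

```python
from __future__ import annotations

def resolve_interactive(
    explicit: bool | None,
    used_as_event_input: bool,
    has_interactive_variant: bool = True,
) -> bool:
    """Decide which variant to render: True = interactive, False = static."""
    if not has_interactive_variant:
        return False  # display-only components, e.g. AnnotatedImage
    if explicit is not None:
        return explicit  # assumed: interactive=True/False in the constructor wins
    return used_as_event_input  # otherwise: interactive only if it is an event input

print(resolve_interactive(None, used_as_event_input=True))   # True
print(resolve_interactive(False, used_as_event_input=True))  # False
print(resolve_interactive(None, used_as_event_input=False))  # False
```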

|

Interactive vs Static

|

https://gradio.app/guides/key-component-concepts

|

Custom Components - Key Component Concepts Guide

|

The most important attribute of a component is its `value`.

Every component has a `value`.

This value is typically set by the user in the frontend (if the component is interactive) or displayed to the user (if it is static).

It is also this value that is sent to the backend function when a user triggers an event, or returned by the user's function e.g. at the end of a prediction.

So this value is passed around quite a bit, but sometimes the format of the value needs to change between the frontend and backend.

Take a look at this example:

```python
import numpy as np
import gradio as gr

def sepia(input_img):
    sepia_filter = np.array([
        [0.393, 0.769, 0.189],
        [0.349, 0.686, 0.168],
        [0.272, 0.534, 0.131]
    ])
    sepia_img = input_img.dot(sepia_filter.T)
    sepia_img /= sepia_img.max()
    return sepia_img

demo = gr.Interface(sepia, gr.Image(width=200, height=200), "image")
demo.launch()
```

This will create a Gradio app which has an `Image` component as the input and the output.

In the frontend, the Image component will actually **upload** the file to the server and send the **filepath** but this is converted to a `numpy` array before it is sent to a user's function.

Conversely, when the user returns a `numpy` array from their function, the numpy array is converted to a file so that it can be sent to the frontend and displayed by the `Image` component.

Tip: By default, the `Image` component sends numpy arrays to the python function because it is a common choice for machine learning engineers, though the Image component also supports other formats using the `type` parameter. Read the `Image` docs [here](https://www.gradio.app/docs/image) to learn more.

Each component does two conversions:

1. `preprocess`: Converts the `value` from the format sent by the frontend to the format expected by the python function. This usually involves going from a web-friendly **JSON** structure to a **python-native** data structure, like a `numpy` array or `PIL` image. The `Audio` and `Image` components are good examples of `preprocess` methods.

2. `postprocess`: Converts the value returned by the python function to the format expected by the frontend. This usually involves going from a **python-native** data-structure, like a `PIL` image to a **JSON** structure.

What you need to remember

* Every component must implement `preprocess` and `postprocess` methods. In the rare event that no conversion needs to happen, simply return the value as-is. `Textbox` and `Number` are examples of this.

* As a component author, **YOU** control the format of the data displayed in the frontend as well as the format of the data someone using your component will receive. Think of an ergonomic data-structure a **python** developer will find intuitive, and control the conversion from a **Web-friendly JSON** data structure (and vice-versa) with `preprocess` and `postprocess`.

|

The value and how it is preprocessed/postprocessed

|

https://gradio.app/guides/key-component-concepts

|

Custom Components - Key Component Concepts Guide

|

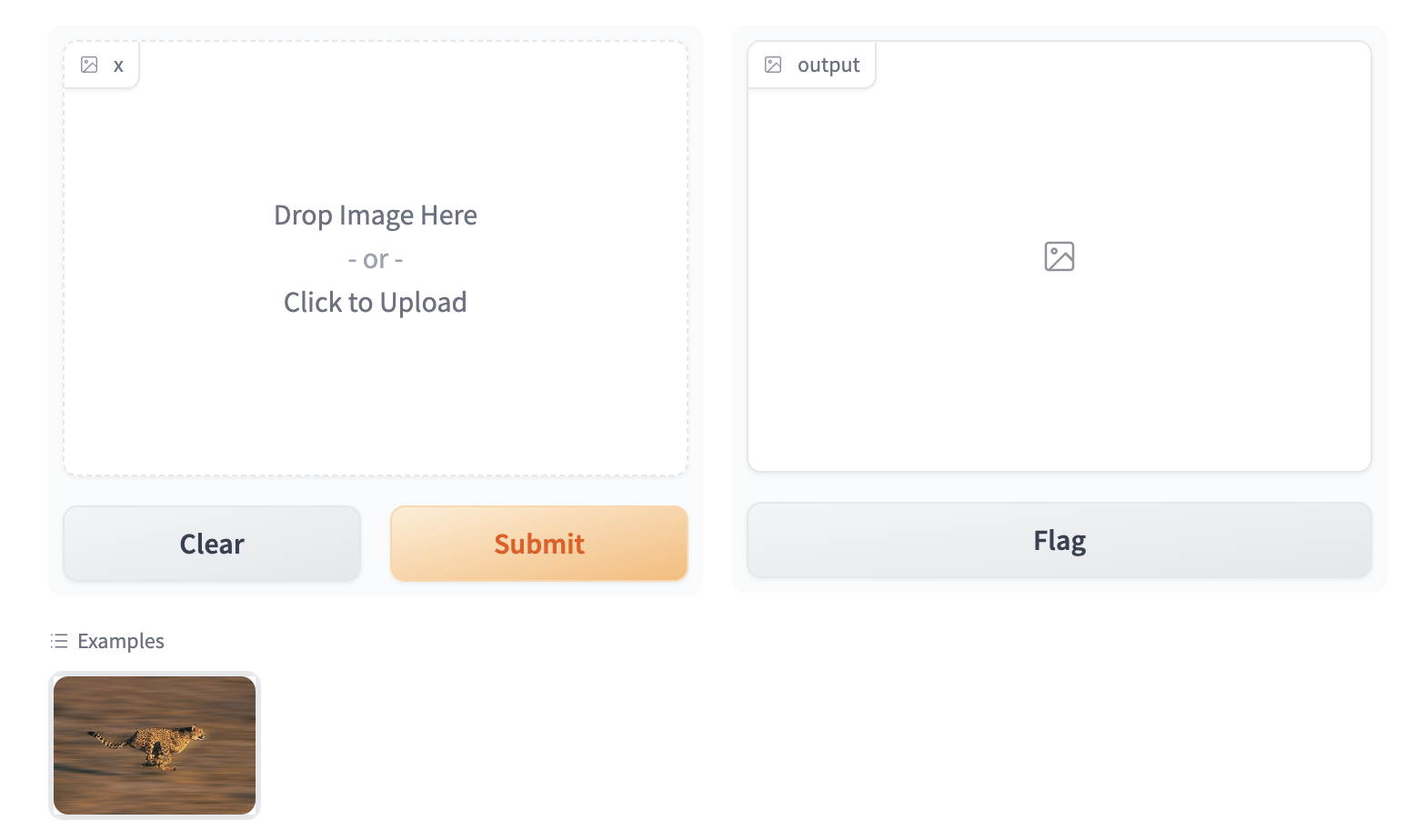

Gradio apps support providing example inputs -- and these are very useful in helping users get started using your Gradio app.

In `gr.Interface`, you can provide examples using the `examples` keyword, and in `Blocks`, you can provide examples using the special `gr.Examples` component.

At the bottom of this screenshot, we show a miniature example image of a cheetah that, when clicked, will populate the same image in the input Image component:

To enable the example view, you must have the following two files at the top level of the `frontend` directory:

* `Example.svelte`: this corresponds to the "example version" of your component

* `Index.svelte`: this corresponds to the "regular version"

In the backend, you typically don't need to do anything. The user-provided example `value` is processed using the same `.postprocess()` method described earlier. If you'd like to process the data differently (for example, if the `.postprocess()` method is computationally expensive), then you can write your own `.process_example()` method for your custom component, which will be used instead.
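Here is a minimal sketch of when a custom `process_example` pays off. It assumes a hypothetical document component whose `postprocess` reads a whole file from disk. Overriding `process_example` lets the examples table show just the file name without paying that cost. The class and its bodies are plain-Python stand-ins that only mirror the method names described above. They also assume `process_example` receives the same example `value` that `postprocess` would.

```python
import os


class DocumentComponent:
    """Hypothetical component; plain Python, not Gradio's base class."""

    def postprocess(self, value: str) -> dict:
        # Imagine this is the expensive path: read the whole file so the
        # frontend can render a preview.
        with open(value, encoding="utf-8") as f:
            text = f.read()
        return {"path": value, "preview": text[:200]}

    def process_example(self, value: str) -> str:
        # The examples table only needs a short label, so skip reading the
        # file and show the file name instead.
        return os.path.basename(value)


component = DocumentComponent()
print(component.process_example("examples/quarterly_report.txt"))  # quarterly_report.txt
```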

The `Example.svelte` file and `process_example()` method will be covered in greater depth in the dedicated [frontend](./frontend) and [backend](./backend) guides respectively.

What you need to remember

* If you expect your component to be used as input, it is important to define an "Example" view.

* If you don't, Gradio will use a default one but it won't be as informative as it can be!

|

The "Example Version" of a Component

|

https://gradio.app/guides/key-component-concepts

|

Custom Components - Key Component Concepts Guide

|

Now that you know the most important pieces to remember about Gradio components, you can start to design and build your own!

|

Conclusion

|

https://gradio.app/guides/key-component-concepts

|

Custom Components - Key Component Concepts Guide

|

Before using Custom Components, make sure you have Python 3.10+, Node.js v18+, npm 9+, and Gradio 4.0+ (preferably Gradio 5.0+) installed.
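The following sketch shows one way to check these prerequisites from Python. It assumes `node` and `npm` are on your `PATH` and print their versions with `--version`. It reads the installed Gradio version from package metadata. The helper names (`parse_version`, `check_prerequisites`, and so on) are hypothetical and not part of Gradio.

```python
from __future__ import annotations

import shutil
import subprocess
import sys
from importlib.metadata import PackageNotFoundError, version

# Minimum versions from the FAQ answer above (Gradio 5.0+ is preferred).
MINIMUMS = {"python": (3, 10), "node": (18,), "npm": (9,), "gradio": (4, 0)}


def parse_version(text: str) -> tuple[int, ...]:
    """Turn strings like 'v18.19.0' or '9.8.1' into (18, 19, 0) / (9, 8, 1)."""
    parts: list[int] = []
    for piece in text.strip().lstrip("v").split("."):
        digits = ""
        for ch in piece:  # keep only the leading digits, e.g. "0b1" -> "0"
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def tool_version(cmd: str) -> tuple[int, ...] | None:
    """Run `<cmd> --version` if the tool is on PATH; None if it is missing."""
    exe = shutil.which(cmd)
    if exe is None:
        return None
    result = subprocess.run([exe, "--version"], capture_output=True, text=True, check=False)
    return parse_version(result.stdout)


def gradio_version() -> tuple[int, ...] | None:
    try:
        return parse_version(version("gradio"))
    except PackageNotFoundError:
        return None


def check_prerequisites() -> dict[str, bool]:
    found = {
        "python": tuple(sys.version_info[:2]),
        "node": tool_version("node"),
        "npm": tool_version("npm"),
        "gradio": gradio_version(),
    }
    # Tuples compare element-wise, so (18, 19, 0) >= (18,) is True.
    return {
        name: found[name] is not None and found[name] >= minimum
        for name, minimum in MINIMUMS.items()
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name:>6}: {'ok' if ok else 'missing or too old'}")
```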

|

What do I need to install before using Custom Components?

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

Custom components built with Gradio 5.0 should be compatible with Gradio 4.0. If you built your custom component in Gradio 4.0, you will have to rebuild it to be compatible with Gradio 5.0. Simply follow these steps:

1. Update the `@gradio/preview` package. `cd` into the `frontend` directory and run `npm update`.

2. Modify the `dependencies` key in `pyproject.toml` to pin the maximum allowed Gradio version at version 5, e.g. `dependencies = ["gradio>=4.0,<6.0"]`.

3. Run the build and publish commands (`gradio cc build` and `gradio cc publish`).
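The following simplified check shows what the range in step 2 admits. It only compares the numeric `major.minor` prefix. That shortcut works for ordinary release numbers, but it is not how pip resolves version specifiers in general. The function name `satisfies_pin` is hypothetical.

```python
# Simplified reading of the pin `gradio>=4.0,<6.0`: compare only the numeric
# major.minor part. Real installers apply full PEP 440 rules (pre-releases,
# local versions, ...), e.g. via the third-party `packaging` library.

LOWER = (4, 0)  # inclusive: ">=4.0"
UPPER = (6, 0)  # exclusive: "<6.0"


def satisfies_pin(version: str) -> bool:
    major, minor = (int(part) for part in version.split(".")[:2])
    return LOWER <= (major, minor) < UPPER


for candidate in ["3.50.2", "4.44.1", "5.9.1", "6.0.0"]:
    print(candidate, satisfies_pin(candidate))
```

Because the upper bound is exclusive, every 5.x release is allowed, while 6.0 is rejected until you test against it and raise the pin.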

|

Are custom components compatible between Gradio 4.0 and 5.0?

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

Run `gradio cc show` to see the list of built-in templates.

You can also start off from others' custom components!

Simply `git clone` their repository and make your modifications.

|

What templates can I use to create my custom component?

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

When you run `gradio cc dev`, a development server will load and run a Gradio app of your choosing.

This is similar to running `python <app-file>.py`, except that the `gradio` command hot reloads your app, so you can see your changes instantly.

|

What is the development server?

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

**1. Check your terminal and browser console**

Make sure there are no syntax errors or other obvious problems in your code. Exceptions triggered from python will be displayed in the terminal. Exceptions from javascript will be displayed in the browser console and/or the terminal.

**2. Are you developing on Windows?**

Chrome on Windows will block the locally compiled Svelte files for security reasons. We recommend developing your custom component in the Windows Subsystem for Linux (WSL) while the team looks into this issue.

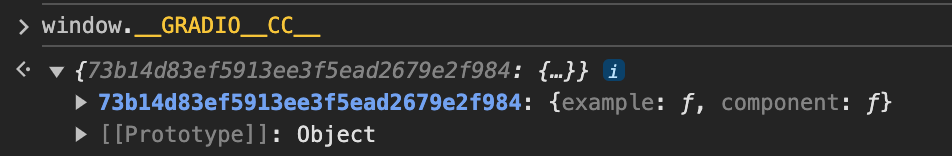

**3. Inspect the window.__GRADIO_CC__ variable**

In the browser console, print the `window.__GRADIO__CC` variable (just type it into the console). If it is an empty object, that means

that the CLI could not find your custom component source code. Typically, this happens when the custom component is installed in a different virtual environment than the one used to run the dev command. Please use the `--python-path` and `gradio-path` CLI arguments to specify the path of the python and gradio executables for the environment your component is installed in. For example, if you are using a virtualenv located at `/Users/mary/venv`, pass in `/Users/mary/bin/python` and `/Users/mary/bin/gradio` respectively.

If the `window.__GRADIO__CC` variable is not empty (see below for an example), then the dev server should be working correctly.
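If you are unsure which paths to pass, this sketch builds the command from a virtualenv root. It assumes the standard virtualenv layout: `bin/` on macOS and Linux, and `Scripts/` with `.exe` files on Windows. The helper name `dev_command` is hypothetical. Pure path classes are used so that the output does not depend on the machine running the snippet.

```python
import os
from pathlib import PurePosixPath, PureWindowsPath


def dev_command(venv_root: str, windows: bool = os.name == "nt") -> list[str]:
    """Build a `gradio cc dev` command pointing at one virtualenv's executables."""
    if windows:
        scripts = PureWindowsPath(venv_root) / "Scripts"
        python_exe, gradio_exe = scripts / "python.exe", scripts / "gradio.exe"
    else:
        bin_dir = PurePosixPath(venv_root) / "bin"
        python_exe, gradio_exe = bin_dir / "python", bin_dir / "gradio"
    return [
        "gradio", "cc", "dev",
        "--python-path", str(python_exe),
        "--gradio-path", str(gradio_exe),
    ]


print(" ".join(dev_command("/Users/mary/venv", windows=False)))
# gradio cc dev --python-path /Users/mary/venv/bin/python --gradio-path /Users/mary/venv/bin/gradio
```

When the environment your component lives in is activated, `sys.prefix` usually points at its root, so `dev_command(sys.prefix)` is a reasonable starting point.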

**4. Make sure you are using a virtual environment**

We highly recommend using a virtual environment to prevent conflicts with other python dependencies installed on your system.

|

The development server didn't work for me

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

No! You can start off from an existing gradio component as a template, see the [five minute guide](./custom-components-in-five-minutes).

You can also start from an existing custom component if you'd like to tweak it further. Once you find the source code of a custom component you like, clone the code to your computer and run `gradio cc install`. Then you can run the development server to make changes. If you run into any issues, contact the author of the component by opening an issue in their repository. The [gallery](https://www.gradio.app/custom-components/gallery) is a good place to look for published components. For example, to start from the [PDF component](https://www.gradio.app/custom-components/gallery?id=freddyaboulton%2Fgradio_pdf), clone the space with `git clone https://huggingface.co/spaces/freddyaboulton/gradio_pdf`, `cd` into the `src` directory, and run `gradio cc install`.

|

Do I always need to start my component from scratch?

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

You can develop and build your custom component without hosting or connecting to HuggingFace.

If you would like to share your component with the Gradio community, it is recommended to publish your package to PyPI and host a demo on HuggingFace so that anyone can install it or try it out.

|

Do I need to host my custom component on HuggingFace Spaces?

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

You must implement the `preprocess`, `postprocess`, `example_payload`, and `example_value` methods. If your component does not use a data model, you must also define the `api_info`, `flag`, and `read_from_flag` methods. Read more in the [backend guide](./backend).
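The following plain-Python stand-in shows how the four always-required methods relate to each other. It uses a hypothetical point component and does not subclass Gradio's real base class. The sketch assumes `example_payload` returns a sample of what the frontend sends, which is the input to `preprocess`. It assumes `example_value` returns a sample python value, which is the input to `postprocess`. See the backend guide for the authoritative definitions.

```python
from dataclasses import dataclass


@dataclass
class Point:
    x: float
    y: float


class PointComponent:
    """Hypothetical component mirroring the method names from the FAQ."""

    def preprocess(self, payload: dict) -> Point:
        # frontend JSON -> value passed to the user's function
        return Point(float(payload["x"]), float(payload["y"]))

    def postprocess(self, value: Point) -> dict:
        # user's return value -> JSON for the frontend
        return {"x": value.x, "y": value.y}

    def example_payload(self) -> dict:
        # sample of what the frontend sends, i.e. input to preprocess()
        return {"x": 1.0, "y": 2.0}

    def example_value(self) -> Point:
        # sample python value, i.e. input to postprocess()
        return Point(1.0, 2.0)


c = PointComponent()
print(c.preprocess(c.example_payload()))  # Point(x=1.0, y=2.0)
```

Keeping the two examples consistent, so that each one round-trips through the matching method, is a cheap sanity check for a new component.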

|

What methods are mandatory for implementing a custom component in Gradio?

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

A `data_model` defines the expected data format for your component, simplifying the component development process and self-documenting your code. It streamlines API usage and example caching.
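Here is a minimal sketch of the idea behind a data model. A single declared class states the payload's shape and rejects payloads that do not match. In Gradio itself, data models are pydantic-based classes such as `GradioModel`. The stdlib dataclass below is only a stand-in, and `PointData` and `from_payload` are hypothetical names.

```python
from dataclasses import dataclass, fields


@dataclass
class PointData:
    """Hypothetical data model: the fields document the expected JSON shape."""

    x: float
    y: float

    @classmethod
    def from_payload(cls, payload: dict) -> "PointData":
        # Validate the incoming JSON against the declared fields.
        expected = {f.name for f in fields(cls)}
        missing = expected - set(payload)
        if missing:
            raise ValueError(f"payload missing fields: {sorted(missing)}")
        return cls(**{name: float(payload[name]) for name in expected})


print(PointData.from_payload({"x": 1, "y": "2.5"}))  # PointData(x=1.0, y=2.5)
```

Because the shape lives in one declaration, the same class can serve as documentation, validation, and a description of the API for clients.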

|

What is the purpose of a `data_model` in Gradio custom components?

|

https://gradio.app/guides/frequently-asked-questions

|

Custom Components - Frequently Asked Questions Guide

|

Utilizing `FileData` is crucial for components that expect file uploads. It ensures secure file handling, automatic caching, and streamlined client library functionality.

|