gguf quantized and fp8/16/32 scaled dia-1.6b

- base model from nari-labs

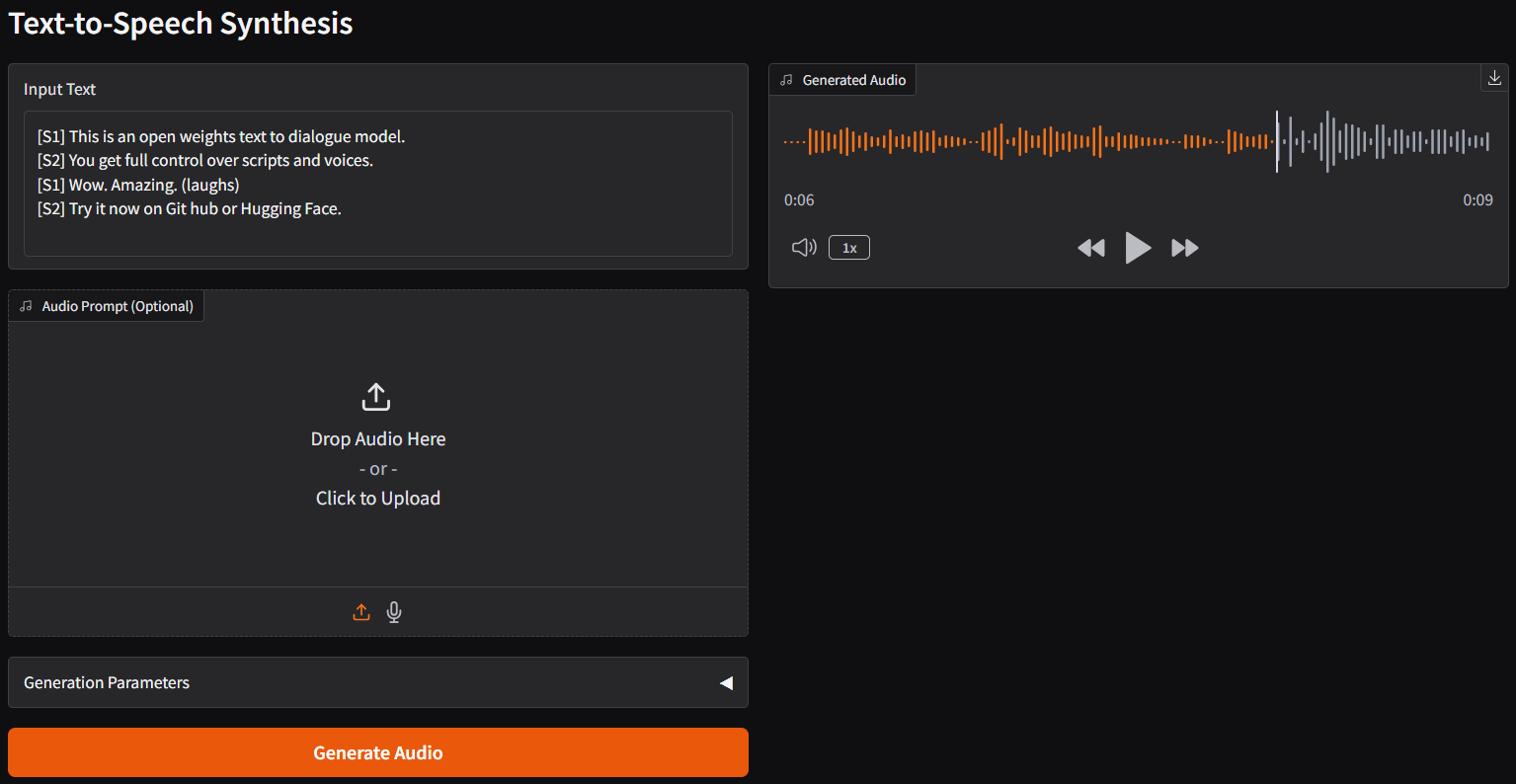

- text-to-speech synthesis

run it with gguf-connector

`ggc s2`
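The review/reference note below says the lazy webui is served locally at http://127.0.0.1:7860 once `ggc s2` is running. On the first launch, the model files have to be downloaded, so the page may take a while to come up. The sketch below polls that address until it responds. It uses only the Python standard library and does not call any gguf-connector API. The function name `wait_for_webui` and the timeout and interval defaults are hypothetical choices made for illustration.

```python
import time
import urllib.error
import urllib.request

# Local address of the lazy webui, taken from the offline-use note.
WEBUI_URL = "http://127.0.0.1:7860"


def wait_for_webui(url: str = WEBUI_URL, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Hypothetical helper: poll `url` until it answers or `timeout` seconds pass.

    Returns True as soon as any HTTP response arrives (an error status still
    means the server is listening), or False if the deadline is reached.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # The server replied with a non-2xx status, so it is up.
            return True
        except OSError:
            # Connection refused or timed out: nothing is listening yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, you can run `ggc s2` in one terminal and call `wait_for_webui()` from a script before opening the page. If you only want a quick reachability check, pass a shorter `timeout`.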

| Prompt | Audio Sample |
|---|---|
| [S1] This is an open weights text to dialogue model.[S2] You get full control over scripts and voices.[S1] Wow. Amazing. (laughs)[S2] Try it now on Git hub or Hugging Face. | 🎧 dia-sample-1 |
| [S1] Hey Connector, why your appearance looks so stupid?[S2] Oh, really? maybe I ate too much smart beans.[S1] Wow. Amazing. (laughs)[S2] Let's go to get some more smart beans and you will become stupid as well. | 🎧 dia-sample-2 |
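The sample prompts follow a simple pattern. Each turn of the dialogue starts with a speaker tag, `[S1]` or `[S2]`, followed by the line itself. Non-verbal cues such as `(laughs)` are written inline, and there is no separator between one turn and the next tag. The sketch below assembles a prompt in that shape from a list of turns. The helper name `build_script` is hypothetical. Treating `S1` and `S2` as the only valid tags is an assumption based on the samples above.

```python
# Speaker tags seen in the sample prompts; limiting input to these two
# is an assumption drawn from the samples, not a documented rule.
SPEAKERS = ("S1", "S2")


def build_script(turns: list[tuple[str, str]]) -> str:
    """Hypothetical helper: join (speaker, text) pairs into one prompt string.

    Each turn becomes "[S1] text" or "[S2] text", and turns are concatenated
    with no separator, mirroring the sample prompts. Cues like "(laughs)"
    pass through unchanged as part of the text.
    """
    parts = []
    for speaker, text in turns:
        if speaker not in SPEAKERS:
            raise ValueError(f"unknown speaker tag: {speaker!r}")
        parts.append(f"[{speaker}] {text.strip()}")
    return "".join(parts)
```

Calling `build_script` on the four turns of the first sample reproduces that prompt exactly. You can then use the resulting string as the text input.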

review/reference

- simply execute the command (`ggc s2`) above in a console/terminal
- note: the model file(s) will be pulled to the local cache automatically during the first launch; after that, you can run it entirely offline, i.e., from the local URL http://127.0.0.1:7860 with the lazy webui

- gguf-connector (pypi)


- model tree for calcuis/dia-gguf: base model nari-labs/Dia-1.6B