This model (GameQA-LLaVA-OV-7B) is the result of training llava-onevision-qwen2-7b-ov-hf with GRPO solely on our GameQA dataset.

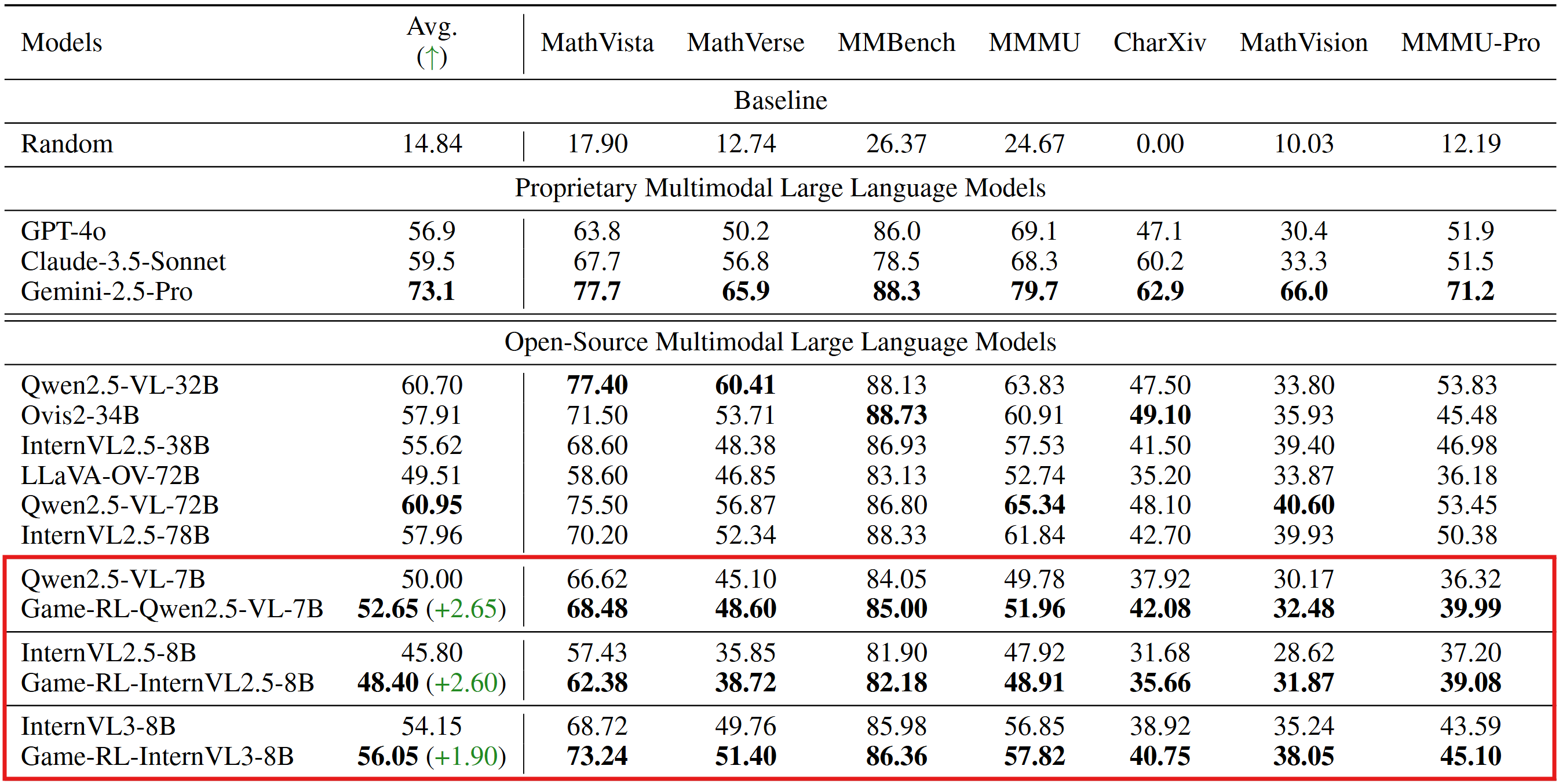

Evaluation Results on General Vision Benchmarks

Code2Logic: Game-Code-Driven Data Synthesis for Enhancing VLMs General Reasoning

To the best of our knowledge, this is the first work to leverage game code to synthesize multimodal reasoning data for training VLMs. Furthermore, when trained with GRPO solely on GameQA (synthesized with our proposed Code2Logic approach), multiple cutting-edge open-source models show significantly improved out-of-domain generalization.

[📖 Paper] [🤗 GameQA-140K Dataset] [🤗 GameQA-InternVL3-8B] [🤗 GameQA-Qwen2.5-VL-7B] [🤗 GameQA-LLaVA-OV-7B]

News

- We've open-sourced the three models trained with GRPO on GameQA on Hugging Face.
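
Because this checkpoint is derived from llava-onevision-qwen2-7b-ov-hf, it can presumably be loaded through the LLaVA-OneVision classes in `transformers`. The following is a minimal sketch under that assumption, not an official usage recipe: the repository id is taken from this model page, while `build_conversation`, `answer`, the image path, and the generation settings are hypothetical names and values chosen for illustration.

```python
# Repo id assumed from this model page; adjust if the checkpoint lives elsewhere.
MODEL_ID = "Code2Logic/GameQA-llava-onevision-qwen2-7b-ov-hf"


def build_conversation(question: str) -> list:
    """Build a single-turn chat message with one image slot and one text question."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "image"},
                {"type": "text", "text": question},
            ],
        }
    ]


def answer(image_path: str, question: str, max_new_tokens: int = 512) -> str:
    """Hypothetical helper: run one image + question through the model."""
    # Heavy dependencies are imported lazily so the module can be imported without them.
    import torch
    from PIL import Image
    from transformers import AutoProcessor, LlavaOnevisionForConditionalGeneration

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32

    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = LlavaOnevisionForConditionalGeneration.from_pretrained(
        MODEL_ID, torch_dtype=dtype
    ).to(device)

    # Render the chat template into a prompt string containing the image placeholder.
    prompt = processor.apply_chat_template(
        build_conversation(question), add_generation_prompt=True
    )
    image = Image.open(image_path).convert("RGB")
    inputs = processor(images=image, text=prompt, return_tensors="pt").to(device, dtype)

    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, skipping the prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return processor.decode(new_tokens, skip_special_tokens=True)
```

A call such as `answer("board.png", "Which move wins the game?")` would then return the model's reply as a string; both the file name and the question are placeholders.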

Model tree for Code2Logic/GameQA-llava-onevision-qwen2-7b-ov-hf

Base model: llava-hf/llava-onevision-qwen2-7b-ov-hf