
Commit 91afd29 · 1 Parent(s): a601663

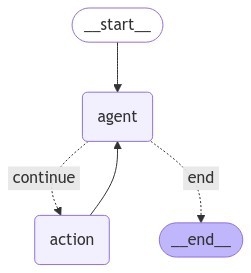

state graph display

- README.md +1 -0
- app.py +10 -3
- src/langgraphagent/caller_agent.py +4 -2
- state_graph_ar.png +0 -0

README.md

CHANGED

    @@ -159,6 +159,7 @@ To stop the Streamlit app, press `Ctrl+C` in the terminal where the app is runni
     **Prompt 2**: Yes or No.
     **Reference**:
     
    +
     
     ### 4. Customer Support
     **Reference**:

app.py

CHANGED

    @@ -31,7 +31,7 @@ if __name__ == "__main__":
     user_input = ui.load_streamlit_ui()
     
     
    -
    +graph_display =''
     # is_add_message_to_history = st.session_state["chat_with_history"]
     
     if user_input['selected_usecase'] == "Appointment Receptionist":

    @@ -39,7 +39,7 @@ if __name__ == "__main__":
     # Configure LLM
     obj_llm_config = GroqLLM(user_controls_input=user_input)
     model = obj_llm_config.get_llm_model()
    -CONVERSATION,APPOINTMENTS= (submit_message(model))
    +CONVERSATION,APPOINTMENTS,graph_display= (submit_message(model))
     
     col1, col2 = st.columns(2)
     with col1:

    @@ -122,7 +122,8 @@ if __name__ == "__main__":
     # Initialize and set up the graph based on use case
     usecase = user_input['selected_usecase']
     graph_builder = GraphBuilder(model)
    -graph = graph_builder.setup_graph(usecase)
    +graph_display = graph = graph_builder.setup_graph(usecase)
    +
     
     # Prepare state and invoke the graph
     initial_state = {"messages": [user_message]}

    @@ -155,6 +156,12 @@ if __name__ == "__main__":
     with st.chat_message("assistant"):
         st.write(message.content)
     
    +
    +# display graph
    +if graph_display:
    +    st.write('state graph workflow')
    +    st.image(graph_display.get_graph(xray=True).draw_mermaid_png())
    +
     
     
     

src/langgraphagent/caller_agent.py

CHANGED

    @@ -74,6 +74,8 @@ class Caller_Agent:
     self.caller_app = caller_workflow.compile()
     
     
    +
    +
     
     
     

    @@ -84,10 +86,10 @@ class Caller_Agent:
     "messages": CONVERSATION,
     }
     print(state)
    -
    +graph = self.call_tool()
     new_state = self.caller_app.invoke(state)
     CONVERSATION.extend(new_state["messages"][len(CONVERSATION):])
    -return CONVERSATION, APPOINTMENTS
    +return CONVERSATION, APPOINTMENTS,self.caller_app
     
     
     

state_graph_ar.png

ADDED
