Upload 9 files

- .devcontainer/devcontainer.json +33 -0

- .gitattributes +1 -0

- app.py +154 -0

- requirements.txt +8 -0

- static/Step1.png +0 -0

- static/Step2.png +0 -0

- static/Step3.png +0 -0

- static/Step4.png +3 -0

- static/aivn_favicon.png +0 -0

- static/aivn_logo.png +0 -0

.devcontainer/devcontainer.json

ADDED

@@ -0,0 +1,33 @@
+{
+  "name": "Python 3",
+  // Or use a Dockerfile or Docker Compose file. More info: https://containers.dev/guide/dockerfile
+  "image": "mcr.microsoft.com/devcontainers/python:1-3.11-bullseye",
+  "customizations": {
+    "codespaces": {
+      "openFiles": [
+        "README.md",
+        "app.py"
+      ]
+    },
+    "vscode": {
+      "settings": {},
+      "extensions": [
+        "ms-python.python",
+        "ms-python.vscode-pylance"
+      ]
+    }
+  },
+  "updateContentCommand": "[ -f packages.txt ] && sudo apt update && sudo apt upgrade -y && sudo xargs apt install -y <packages.txt; [ -f requirements.txt ] && pip3 install --user -r requirements.txt; pip3 install --user streamlit; echo '✅ Packages installed and Requirements met'",
+  "postAttachCommand": {
+    "server": "streamlit run app.py --server.enableCORS false --server.enableXsrfProtection false"
+  },
+  "portsAttributes": {
+    "8501": {
+      "label": "Application",
+      "onAutoForward": "openPreview"
+    }
+  },
+  "forwardPorts": [
+    8501
+  ]
+}

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+static/Step4.png filter=lfs diff=lfs merge=lfs -text

app.py

ADDED

|

@@ -0,0 +1,154 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import shutil

|

| 3 |

+

import streamlit as st

|

| 4 |

+

from huggingface_hub import login

|

| 5 |

+

from llama_index.llms.huggingface import HuggingFaceInferenceAPI

|

| 6 |

+

from llama_index.embeddings.huggingface import HuggingFaceEmbedding

|

| 7 |

+

from llama_index.core import VectorStoreIndex

|

| 8 |

+

from llama_index.core.retrievers import VectorIndexRetriever

|

| 9 |

+

from llama_index.core.query_engine import RetrieverQueryEngine

|

| 10 |

+

from llama_index.core.node_parser import SentenceSplitter

|

| 11 |

+

from llama_index.core import SimpleDirectoryReader

|

| 12 |

+

from llama_index.core import get_response_synthesizer

|

| 13 |

+

from llama_index.core import Settings

|

| 14 |

+

|

| 15 |

+

CHUNK_SIZE = 1024

|

| 16 |

+

CHUNK_OVERLAP = 128

|

| 17 |

+

TOP_K = 10

|

| 18 |

+

SIMILARITY_CUTOFF = 0.6

|

| 19 |

+

MAX_SELECTED_NODES = 5

|

| 20 |

+

TEMP_FILES_DIR = "./temp_files"

|

| 21 |

+

|

| 22 |

+

st.set_page_config(

|

| 23 |

+

page_title="AIVN - RAG with Llama Index",

|

| 24 |

+

page_icon="./static/aivn_favicon.png",

|

| 25 |

+

layout="wide",

|

| 26 |

+

initial_sidebar_state="expanded"

|

| 27 |

+

)

|

| 28 |

+

|

| 29 |

+

st.image("./static/aivn_logo.png", width=300)

|

| 30 |

+

|

| 31 |

+

if 'run_count' not in st.session_state:

|

| 32 |

+

st.session_state['run_count'] = 0

|

| 33 |

+

|

| 34 |

+

st.session_state['run_count'] += 1

|

| 35 |

+

if st.session_state['run_count'] == 1:

|

| 36 |

+

if os.path.exists(TEMP_FILES_DIR):

|

| 37 |

+

shutil.rmtree(TEMP_FILES_DIR)

|

| 38 |

+

os.makedirs(TEMP_FILES_DIR, exist_ok=True)

|

| 39 |

+

st.cache_resource.clear()

|

| 40 |

+

|

| 41 |

+

# st.write(f"Ứng dụng đã chạy {st.session_state['run_count']} lần.")

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

class SortedRetrieverQueryEngine(RetrieverQueryEngine):

|

| 45 |

+

def retrieve(self, query):

|

| 46 |

+

nodes = self.retriever.retrieve(query)

|

| 47 |

+

filtered_nodes = [node for node in nodes if node.score >= SIMILARITY_CUTOFF]

|

| 48 |

+

sorted_nodes = sorted(filtered_nodes, key=lambda node: node.score, reverse=True)

|

| 49 |

+

return sorted_nodes[:MAX_SELECTED_NODES]

|

| 50 |

+

|

| 51 |

+

st.title("Retrieval-Augmented Generation (RAG) Demo")

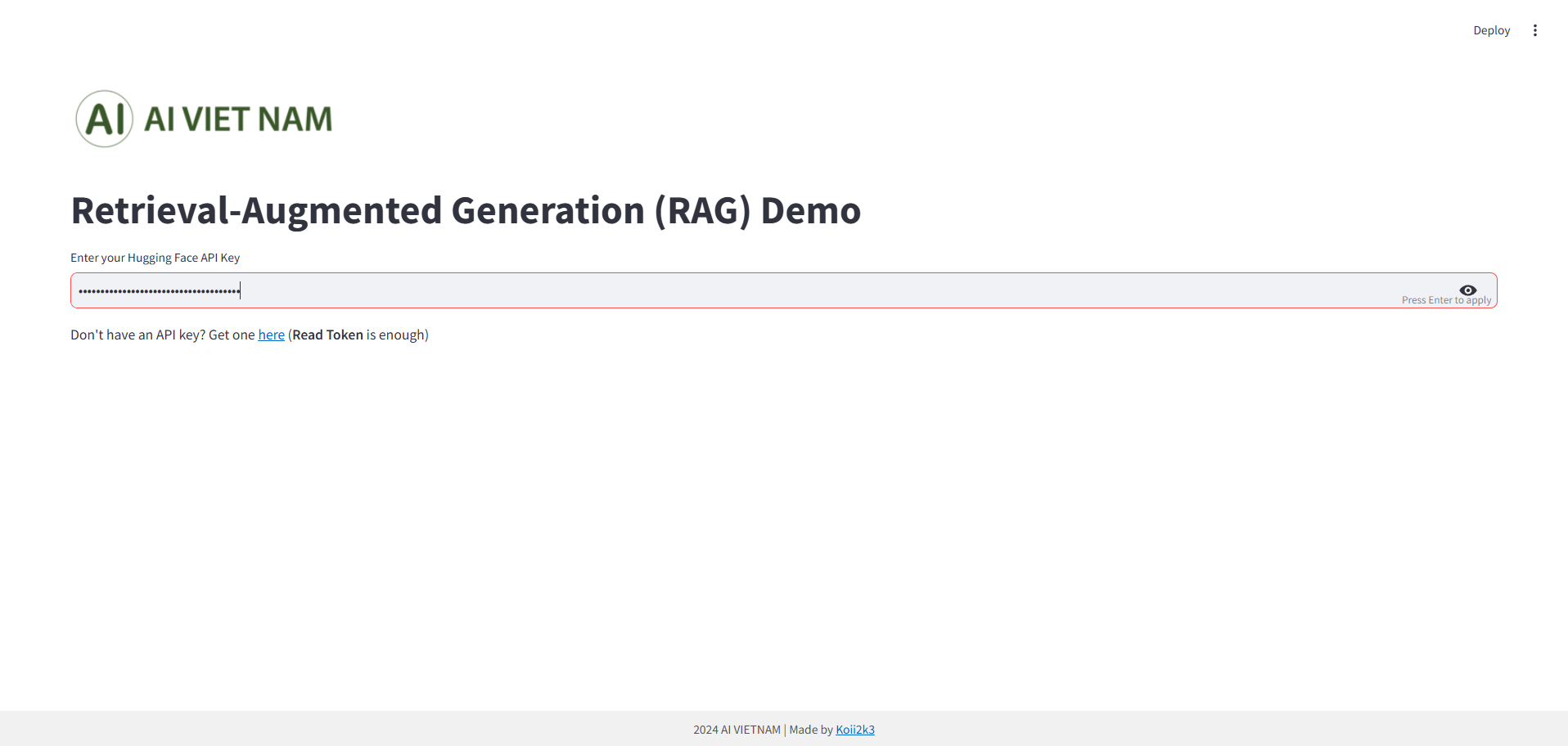

|

| 52 |

+

|

| 53 |

+

hf_api_key_placeholder = st.empty()

|

| 54 |

+

hf_api_key = hf_api_key_placeholder.text_input("Enter your Hugging Face API Key", type="password", placeholder="hf_...", key="hf_api_key")

|

| 55 |

+

st.markdown("Don't have an API key? Get one [here](https://huggingface.co/settings/tokens) (**Read Token** is enough)")

|

| 56 |

+

|

| 57 |

+

if hf_api_key:

|

| 58 |

+

@st.cache_resource

|

| 59 |

+

def load_models(hf_api_key):

|

| 60 |

+

login(token=hf_api_key)

|

| 61 |

+

with st.spinner("Loading models from Hugging Face..."):

|

| 62 |

+

llm = HuggingFaceInferenceAPI(

|

| 63 |

+

model_name="mistralai/Mixtral-8x7B-Instruct-v0.1", token=hf_api_key)

|

| 64 |

+

embed_model = HuggingFaceEmbedding(model_name=f'BAAI/bge-small-en-v1.5', token=hf_api_key)

|

| 65 |

+

return llm, embed_model

|

| 66 |

+

|

| 67 |

+

llm, embed_model = load_models(hf_api_key)

|

| 68 |

+

|

| 69 |

+

uploaded_files = st.file_uploader("Upload documents", accept_multiple_files=True, key="uploaded_files")

|

| 70 |

+

if uploaded_files:

|

| 71 |

+

@st.cache_resource

|

| 72 |

+

def uploading_files(uploaded_files, num_documents):

|

| 73 |

+

with st.spinner("Processing uploaded files..."):

|

| 74 |

+

file_paths = []

|

| 75 |

+

for i, uploaded_file in enumerate(uploaded_files):

|

| 76 |

+

file_path = os.path.join(TEMP_FILES_DIR, uploaded_file.name)

|

| 77 |

+

file_paths.append(file_path)

|

| 78 |

+

with open(file_path, "wb") as f:

|

| 79 |

+

f.write(uploaded_file.getbuffer())

|

| 80 |

+

|

| 81 |

+

st.write(f"Uploaded {len(uploaded_files)}/{num_documents} files")

|

| 82 |

+

|

| 83 |

+

return SimpleDirectoryReader(TEMP_FILES_DIR).load_data()

|

| 84 |

+

|

| 85 |

+

num_documents = len(uploaded_files)

|

| 86 |

+

documents = uploading_files(uploaded_files, num_documents)

|

| 87 |

+

|

| 88 |

+

@st.cache_resource

|

| 89 |

+

def indexing(_documents, _embed_model, num_documents):

|

| 90 |

+

with st.spinner("Indexing documents..."):

|

| 91 |

+

text_splitter = SentenceSplitter(

|

| 92 |

+

chunk_size=CHUNK_SIZE, chunk_overlap=CHUNK_OVERLAP)

|

| 93 |

+

Settings.text_splitter = text_splitter

|

| 94 |

+

|

| 95 |

+

st.write(f"Indexing {num_documents} documents")

|

| 96 |

+

return VectorStoreIndex.from_documents(

|

| 97 |

+

_documents, transformations=[text_splitter], embed_model=_embed_model, show_progress=True

|

| 98 |

+

)

|

| 99 |

+

|

| 100 |

+

index = indexing(documents, embed_model, num_documents)

|

| 101 |

+

|

| 102 |

+

@st.cache_resource

|

| 103 |

+

def create_retriever_and_query_engine(_index, _llm, num_documents):

|

| 104 |

+

retriever = VectorIndexRetriever(

|

| 105 |

+

index=_index, similarity_top_k=TOP_K)

|

| 106 |

+

|

| 107 |

+

response_synthesizer = get_response_synthesizer(llm=_llm)

|

| 108 |

+

|

| 109 |

+

st.write(f"Querying with {num_documents} nodes")

|

| 110 |

+

|

| 111 |

+

return SortedRetrieverQueryEngine(

|

| 112 |

+

retriever=retriever,

|

| 113 |

+

response_synthesizer=response_synthesizer,

|

| 114 |

+

node_postprocessors=[],

|

| 115 |

+

)

|

| 116 |

+

|

| 117 |

+

query_engine = create_retriever_and_query_engine(index, llm, len(index.docstore.docs))

|

| 118 |

+

|

| 119 |

+

query = st.text_input("Enter your query for RAG", key="query")

|

| 120 |

+

|

| 121 |

+

if query:

|

| 122 |

+

with st.spinner("Querying..."):

|

| 123 |

+

response = query_engine.query(query)

|

| 124 |

+

retrieved_nodes = response.source_nodes

|

| 125 |

+

|

| 126 |

+

st.markdown("### Retrieved Documents")

|

| 127 |

+

for i, node in enumerate(retrieved_nodes):

|

| 128 |

+

with st.expander(f"Document {i+1} (Score: {node.score:.4f})"):

|

| 129 |

+

st.write(node.text)

|

| 130 |

+

|

| 131 |

+

st.markdown("### RAG Response:")

|

| 132 |

+

st.write(response.response)

|

| 133 |

+

|

| 134 |

+

st.markdown(

|

| 135 |

+

"""

|

| 136 |

+

<style>

|

| 137 |

+

.footer {

|

| 138 |

+

position: fixed;

|

| 139 |

+

bottom: 0;

|

| 140 |

+

left: 0;

|

| 141 |

+

width: 100%;

|

| 142 |

+

background-color: #f1f1f1;

|

| 143 |

+

text-align: center;

|

| 144 |

+

padding: 10px 0;

|

| 145 |

+

font-size: 14px;

|

| 146 |

+

color: #555;

|

| 147 |

+

}

|

| 148 |

+

</style>

|

| 149 |

+

<div class="footer">

|

| 150 |

+

2024 AI VIETNAM | Made by <a href="https://github.com/Koii2k3/Basic-RAG-LlamaIndex" target="_blank">Koii2k3</a>

|

| 151 |

+

</div>

|

| 152 |

+

""",

|

| 153 |

+

unsafe_allow_html=True

|

| 154 |

+

)

|
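Review note on the `SortedRetrieverQueryEngine.retrieve` override in app.py: it keeps retrieved nodes whose score is at least `SIMILARITY_CUTOFF`, sorts them best first, and truncates to `MAX_SELECTED_NODES`. The following is a minimal standalone sketch of that selection step, not the committed code. `ScoredNode` and `select_nodes` are hypothetical names introduced for illustration, and the `None` guard is an addition that the commit does not have.

```python
from dataclasses import dataclass
from typing import List, Optional

# Same values as the constants defined in app.py.
SIMILARITY_CUTOFF = 0.6
MAX_SELECTED_NODES = 5


@dataclass
class ScoredNode:
    """Hypothetical stand-in for a retrieved node carrying a similarity score."""
    text: str
    score: Optional[float]


def select_nodes(nodes: List[ScoredNode],
                 cutoff: float = SIMILARITY_CUTOFF,
                 limit: int = MAX_SELECTED_NODES) -> List[ScoredNode]:
    """Keep nodes scoring at least `cutoff`, best first, at most `limit` of them."""
    # Assumption: a node may have no score; skip it instead of comparing None to a float.
    kept = [n for n in nodes if n.score is not None and n.score >= cutoff]
    kept.sort(key=lambda n: n.score, reverse=True)
    return kept[:limit]


candidates = [
    ScoredNode("a", 0.72),
    ScoredNode("b", 0.41),  # below the cutoff, dropped
    ScoredNode("c", 0.90),
    ScoredNode("d", 0.60),  # exactly at the cutoff, kept
]
print([n.text for n in select_nodes(candidates)])  # prints ['c', 'a', 'd']
```

Keeping the selection in a plain function like this makes the cutoff and limit easy to unit test without starting Streamlit or calling the Hugging Face API.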

requirements.txt

ADDED

@@ -0,0 +1,8 @@
+huggingface-hub==0.28.1
+llama-index==0.12.16
+llama-index-cli==0.4.0
+llama-index-core==0.12.16.post1
+llama-index-embeddings-huggingface==0.5.1
+llama-index-embeddings-openai==0.3.1
+llama-index-indices-managed-llama-cloud==0.6.4
+llama-index-llms-huggingface==0.4.2
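Review note on requirements.txt: every dependency is pinned with `==`, so a quick check that the active environment matches the pins can catch drift before the app is launched. The sketch below is one possible way to do that with the standard library's `importlib.metadata`. The helper names `parse_pins`, `installed_version`, and `find_mismatches` are hypothetical and not part of this commit, and only three of the eight pins are reproduced.

```python
from importlib import metadata


def parse_pins(text):
    """Parse `name==version` lines into a dict, skipping blanks and comments."""
    pins = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, version = line.split("==", 1)
        pins[name.strip()] = version.strip()
    return pins


def installed_version(name):
    """Return the installed version of `name`, or None if it is not installed."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pins, lookup=installed_version):
    """Return {name: (pinned, installed)} for every pin the environment does not satisfy."""
    mismatches = {}
    for name, pinned in pins.items():
        found = lookup(name)
        if found != pinned:
            mismatches[name] = (pinned, found)
    return mismatches


# A subset of the pins from requirements.txt, inlined so the sketch is self-contained.
REQUIREMENTS = """\
huggingface-hub==0.28.1
llama-index==0.12.16
llama-index-core==0.12.16.post1
"""

pins = parse_pins(REQUIREMENTS)
for name, (pinned, found) in find_mismatches(pins).items():
    print(f"{name}: pinned {pinned}, installed {found}")
```

The `lookup` parameter exists only so the comparison can be exercised with a fake environment in tests. In normal use the default queries the running interpreter.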

static/Step1.png

ADDED

static/Step2.png

ADDED

static/Step3.png

ADDED

static/Step4.png

ADDED (Git LFS)

static/aivn_favicon.png

ADDED

static/aivn_logo.png

ADDED