Delete checkpoints

fr/unit2/langgraph/.ipynb_checkpoints/agent-checkpoint.ipynb

DELETED

|

@@ -1,326 +0,0 @@

|

|

| 1 |

-

{

|

| 2 |

-

"cells": [

|

| 3 |

-

{

|

| 4 |

-

"cell_type": "markdown",

|

| 5 |

-

"id": "89791f21c171372a",

|

| 6 |

-

"metadata": {},

|

| 7 |

-

"source": [

|

| 8 |

-

"# Agent\n",

|

| 9 |

-

"\n",

|

| 10 |

-

"In this notebook, **we will build a simple agent using LangGraph**.\n",

|

| 11 |

-

"\n",

|

| 12 |

-

"This notebook is part of the <a href=\"https://huggingface.co/learn/agents-course/fr\">Hugging Face Agents Course</a>, a free course that takes you **from beginner to expert** in understanding, using, and building agents.\n",

|

| 13 |

-

"\n",

|

| 14 |

-

"\n",

|

| 15 |

-

"As we saw in Unit 1, an agent needs three steps, as introduced in the ReAct architecture:\n",

|

| 16 |

-

"[ReAct](https://react-lm.github.io/), a general agent architecture.\n",

|

| 17 |

-

"\n",

|

| 18 |

-

"* `act` - let the model call specific tools\n",

|

| 19 |

-

"* `observe` - pass the tool output back to the model\n",

|

| 20 |

-

"* `reason` - let the model reason about the tool output to decide what to do next (for example, call another tool or simply respond directly).\n",

|

| 21 |

-

"\n",

|

| 22 |

-

""

|

| 23 |

-

]

|

| 24 |

-

},

|

| 25 |

-

{

|

| 26 |

-

"cell_type": "code",

|

| 27 |

-

"execution_count": null,

|

| 28 |

-

"id": "bef6c5514bd263ce",

|

| 29 |

-

"metadata": {},

|

| 30 |

-

"outputs": [],

|

| 31 |

-

"source": [

|

| 32 |

-

"%pip install -q -U langchain_openai langchain_core langgraph"

|

| 33 |

-

]

|

| 34 |

-

},

|

| 35 |

-

{

|

| 36 |

-

"cell_type": "code",

|

| 37 |

-

"execution_count": null,

|

| 38 |

-

"id": "61d0ed53b26fa5c6",

|

| 39 |

-

"metadata": {},

|

| 40 |

-

"outputs": [],

|

| 41 |

-

"source": [

|

| 42 |

-

"import os\n",

|

| 43 |

-

"\n",

|

| 44 |

-

"# Set your own API key\n",

|

| 45 |

-

"os.environ[\"OPENAI_API_KEY\"] = \"sk-xxxxxx\""

|

| 46 |

-

]

|

| 47 |

-

},

|

| 48 |

-

{

|

| 49 |

-

"cell_type": "code",

|

| 50 |

-

"execution_count": null,

|

| 51 |

-

"id": "a4a8bf0d5ac25a37",

|

| 52 |

-

"metadata": {},

|

| 53 |

-

"outputs": [],

|

| 54 |

-

"source": [

|

| 55 |

-

"import base64\n",

|

| 56 |

-

"from langchain_core.messages import HumanMessage\n",

|

| 57 |

-

"from langchain_openai import ChatOpenAI\n",

|

| 58 |

-

"\n",

|

| 59 |

-

"vision_llm = ChatOpenAI(model=\"gpt-4o\")\n",

|

| 60 |

-

"\n",

|

| 61 |

-

"\n",

|

| 62 |

-

"def extract_text(img_path: str) -> str:\n",

|

| 63 |

-

" \"\"\"\n",

|

| 64 |

-

" Extract text from an image file using a multimodal model.\n",

|

| 65 |

-

"\n",

|

| 66 |

-

" Args:\n",

|

| 67 |

-

" img_path: A local image file path (string).\n",

|

| 68 |

-

"\n",

|

| 69 |

-

" Returns:\n",

|

| 70 |

-

" A single string containing the concatenated text extracted from each image.\n",

|

| 71 |

-

" \"\"\"\n",

|

| 72 |

-

" all_text = \"\"\n",

|

| 73 |

-

" try:\n",

|

| 74 |

-

"\n",

|

| 75 |

-

" # Read the image and encode it as base64\n",

|

| 76 |

-

" with open(img_path, \"rb\") as image_file:\n",

|

| 77 |

-

" image_bytes = image_file.read()\n",

|

| 78 |

-

"\n",

|

| 79 |

-

" image_base64 = base64.b64encode(image_bytes).decode(\"utf-8\")\n",

|

| 80 |

-

"\n",

|

| 81 |

-

" # Build the prompt, including the base64-encoded image data\n",

|

| 82 |

-

" message = [\n",

|

| 83 |

-

" HumanMessage(\n",

|

| 84 |

-

" content=[\n",

|

| 85 |

-

" {\n",

|

| 86 |

-

" \"type\": \"text\",\n",

|

| 87 |

-

" \"text\": (\n",

|

| 88 |

-

" \"Extract all the text from this image. \"\n",

|

| 89 |

-

" \"Return only the extracted text, no explanations.\"\n",

|

| 90 |

-

" ),\n",

|

| 91 |

-

" },\n",

|

| 92 |

-

" {\n",

|

| 93 |

-

" \"type\": \"image_url\",\n",

|

| 94 |

-

" \"image_url\": {\n",

|

| 95 |

-

" \"url\": f\"data:image/png;base64,{image_base64}\"\n",

|

| 96 |

-

" },\n",

|

| 97 |

-

" },\n",

|

| 98 |

-

" ]\n",

|

| 99 |

-

" )\n",

|

| 100 |

-

" ]\n",

|

| 101 |

-

"\n",

|

| 102 |

-

" # Call the vision-language model (VLM)\n",

|

| 103 |

-

" response = vision_llm.invoke(message)\n",

|

| 104 |

-

"\n",

|

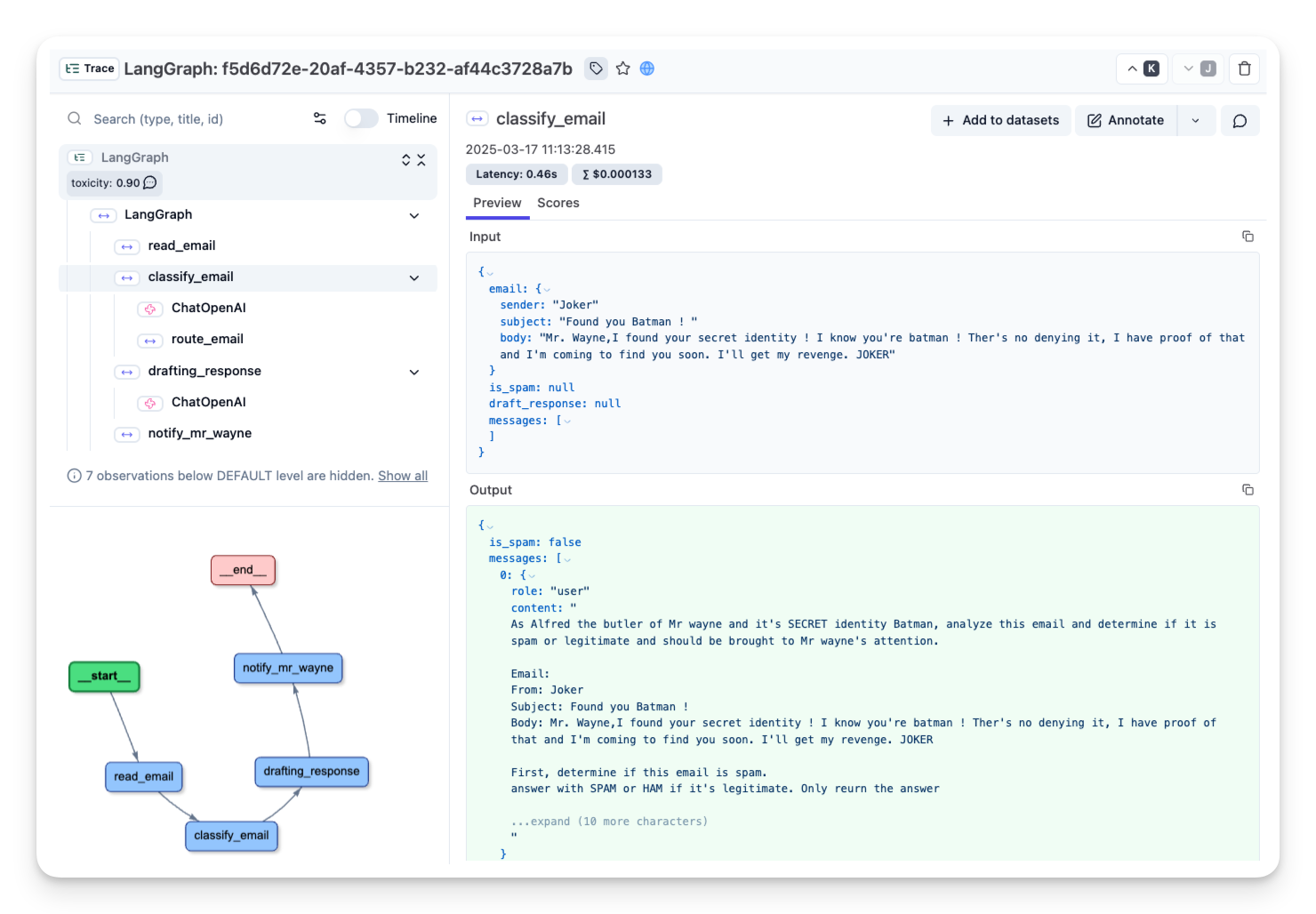

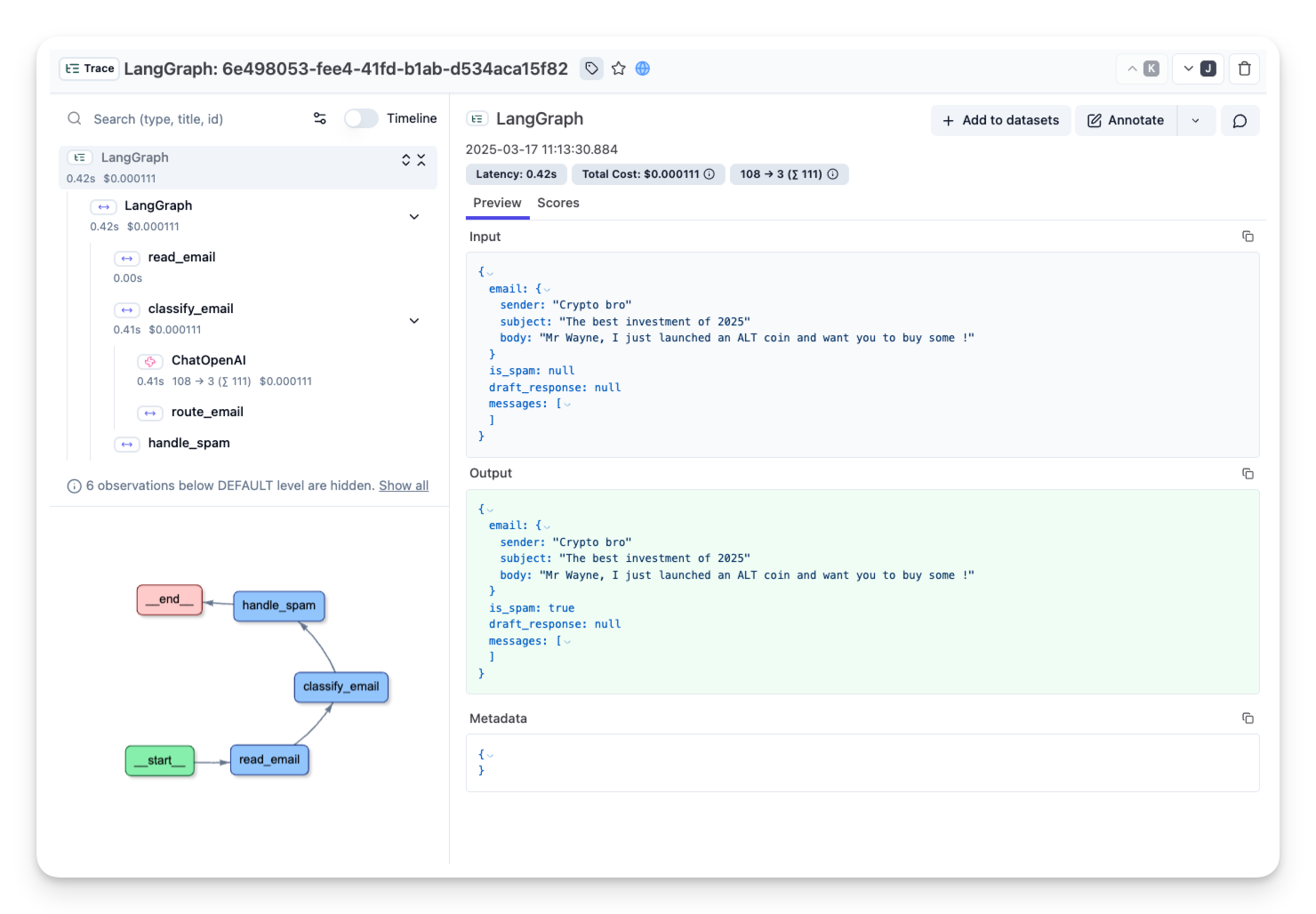

| 105 |

-

" # Append the extracted text\n",

|

| 106 |

-

" all_text += response.content + \"\\n\\n\"\n",

|

| 107 |

-

"\n",

|

| 108 |

-

" return all_text.strip()\n",

|

| 109 |

-

" except Exception as e:\n",

|

| 110 |

-

" # You can return either an empty string or an error message here.\n",

|

| 111 |

-

" error_msg = f\"Error extracting text: {str(e)}\"\n",

|

| 112 |

-

" print(error_msg)\n",

|

| 113 |

-

" return \"\"\n",

|

| 114 |

-

"\n",

|

| 115 |

-

"\n",

|

| 116 |

-

"llm = ChatOpenAI(model=\"gpt-4o\")\n",

|

| 117 |

-

"\n",

|

| 118 |

-

"\n",

|

| 119 |

-

"def divide(a: int, b: int) -> float:\n",

|

| 120 |

-

" \"\"\"Divide a by b.\"\"\"\n",

|

| 121 |

-

" return a / b\n",

|

| 122 |

-

"\n",

|

| 123 |

-

"\n",

|

| 124 |

-

"tools = [\n",

|

| 125 |

-

" divide,\n",

|

| 126 |

-

" extract_text\n",

|

| 127 |

-

"]\n",

|

| 128 |

-

"llm_with_tools = llm.bind_tools(tools, parallel_tool_calls=False)"

|

| 129 |

-

]

|

| 130 |

-

},

|

| 131 |

-

{

|

| 132 |

-

"cell_type": "markdown",

|

| 133 |

-

"id": "3e7c17a2e155014e",

|

| 134 |

-

"metadata": {},

|

| 135 |

-

"source": [

|

| 136 |

-

"Let's create our LLM and prompt it with the desired overall agent behavior."

|

| 137 |

-

]

|

| 138 |

-

},

|

| 139 |

-

{

|

| 140 |

-

"cell_type": "code",

|

| 141 |

-

"execution_count": null,

|

| 142 |

-

"id": "f31250bc1f61da81",

|

| 143 |

-

"metadata": {},

|

| 144 |

-

"outputs": [],

|

| 145 |

-

"source": [

|

| 146 |

-

"from typing import TypedDict, Annotated, Optional\n",

|

| 147 |

-

"from langchain_core.messages import AnyMessage\n",

|

| 148 |

-

"from langgraph.graph.message import add_messages\n",

|

| 149 |

-

"\n",

|

| 150 |

-

"\n",

|

| 151 |

-

"class AgentState(TypedDict):\n",

|

| 152 |

-

" # The input document\n",

|

| 153 |

-

" input_file: Optional[str] # Path to the input file (a PNG image)\n",

|

| 154 |

-

" messages: Annotated[list[AnyMessage], add_messages]"

|

| 155 |

-

]

|

| 156 |

-

},

|
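
The `Annotated[list[AnyMessage], add_messages]` field above attaches a reducer to `messages`, so a node's returned messages are combined with the existing list rather than overwriting it, while a plain field such as `input_file` is simply replaced. A minimal plain-Python sketch of that idea follows. It assumes a simplified model: `REDUCERS` and `apply_update` are hypothetical names, messages are plain strings, and list concatenation stands in for `add_messages`, which additionally reconciles messages by ID.

```python
from operator import add
from typing import Any, Callable

# Hypothetical reducer table: keys listed here are combined, all others are overwritten.
REDUCERS: dict[str, Callable[[Any, Any], Any]] = {"messages": add}


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update into the state (simplified illustration)."""
    merged = dict(state)
    for key, value in update.items():
        if key in REDUCERS and key in merged:
            # Reducer present: combine the old and new values.
            merged[key] = REDUCERS[key](merged[key], value)
        else:
            # No reducer: the new value replaces the old one.
            merged[key] = value
    return merged


state = {"input_file": None, "messages": ["Divide 6790 by 5"]}
state = apply_update(state, {"messages": ["<assistant reply>"], "input_file": "note.png"})
print(state)
```

In this sketch the `messages` list grows with each update, which is why the `assistant` node in the notebook can return only its newest message.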

| 157 |

-

{

|

| 158 |

-

"cell_type": "code",

|

| 159 |

-

"execution_count": null,

|

| 160 |

-

"id": "3c4a736f9e55afa9",

|

| 161 |

-

"metadata": {},

|

| 162 |

-

"outputs": [],

|

| 163 |

-

"source": [

|

| 164 |

-

"from langchain_core.messages import HumanMessage, SystemMessage\n",

|

| 165 |

-

"from langchain_core.utils.function_calling import convert_to_openai_tool\n",

|

| 166 |

-

"\n",

|

| 167 |

-

"\n",

|

| 168 |

-

"def assistant(state: AgentState):\n",

|

| 169 |

-

" # System message\n",

|

| 170 |

-

" textual_description_of_tool = \"\"\"\n",

|

| 171 |

-

"extract_text(img_path: str) -> str:\n",

|

| 172 |

-

" Extract text from an image file using a multimodal model.\n",

|

| 173 |

-

"\n",

|

| 174 |

-

" Args:\n",

|

| 175 |

-

" img_path: A local image file path (string).\n",

|

| 176 |

-

"\n",

|

| 177 |

-

" Returns:\n",

|

| 178 |

-

" A single string containing the concatenated text extracted from each image.\n",

|

| 179 |

-

"divide(a: int, b: int) -> float:\n",

|

| 180 |

-

" Divide a by b.\n",

|

| 181 |

-

"\"\"\"\n",

|

| 182 |

-

" image = state[\"input_file\"]\n",

|

| 183 |

-

" sys_msg = SystemMessage(content=f\"You are a helpful agent that can analyse images and run computations with the provided tools:\\n{textual_description_of_tool}\\nYou have access to an optional image. The currently loaded image is: {image}\")\n",

|

| 184 |

-

"\n",

|

| 185 |

-

" return {\"messages\": [llm_with_tools.invoke([sys_msg] + state[\"messages\"])], \"input_file\": state[\"input_file\"]}"

|

| 186 |

-

]

|

| 187 |

-

},

|

| 188 |

-

{

|

| 189 |

-

"cell_type": "markdown",

|

| 190 |

-

"id": "6f1efedd943d8b1d",

|

| 191 |

-

"metadata": {},

|

| 192 |

-

"source": [

|

| 193 |

-

"We define a `tools` node with our list of tools.\n",

|

| 194 |

-

"\n",

|

| 195 |

-

"The `assistant` node is just our model with the tools bound to it.\n",

|

| 196 |

-

"\n",

|

| 197 |

-

"We create a graph with the `assistant` and `tools` nodes.\n",

|

| 198 |

-

"\n",

|

| 199 |

-

"We add the `tools_condition` edge, which routes to `END` or to `tools` depending on whether the `assistant` calls a tool.\n",

|

| 200 |

-

"\n",

|

| 201 |

-

"Now, we add one new step:\n",

|

| 202 |

-

"\n",

|

| 203 |

-

"We connect the `tools` node back to the `assistant`, forming a loop.\n",

|

| 204 |

-

"\n",

|

| 205 |

-

"* After the `assistant` node runs, `tools_condition` checks whether the model's output is a tool call.\n",

|

| 206 |

-

"* If it is, the flow is directed to the `tools` node.\n",

|

| 207 |

-

"* The `tools` node connects back to `assistant`.\n",

|

| 208 |

-

"* This loop continues as long as the model decides to call tools.\n",

|

| 209 |

-

"* If the model's response is not a tool call, the flow is directed to END, terminating the process."

|

| 210 |

-

]

|

| 211 |

-

},

|
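
The assistant/tools loop described in the cell above can be sketched in plain Python, without LangGraph. This is only an illustration under stated assumptions: `fake_assistant`, `route`, `run_tools`, and `run_loop` are hypothetical names, messages are plain dicts, and a scripted function stands in for the real LLM. It is not how `ToolNode` or `tools_condition` are implemented.

```python
from typing import Callable


def divide(a: int, b: int) -> float:
    """Divide a by b."""
    return a / b


# Hypothetical tool registry keyed by tool name.
TOOLS: dict[str, Callable[..., float]] = {"divide": divide}


def fake_assistant(messages: list[dict]) -> dict:
    """Scripted stand-in for the LLM: request one tool call, then answer."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The result is {last['content']}", "tool_calls": []}
    return {
        "role": "assistant",
        "content": "",
        "tool_calls": [{"name": "divide", "args": {"a": 6790, "b": 5}}],
    }


def route(message: dict) -> str:
    """Routing decision: 'tools' if the message requests a tool, else 'end'."""
    return "tools" if message.get("tool_calls") else "end"


def run_tools(message: dict) -> list[dict]:
    """Execute every requested tool and wrap each result as a tool message."""
    return [
        {"role": "tool", "content": str(TOOLS[call["name"]](**call["args"]))}
        for call in message["tool_calls"]
    ]


def run_loop(messages: list[dict], max_steps: int = 10) -> list[dict]:
    """assistant -> (tools -> assistant)* -> end, bounded by max_steps."""
    for _ in range(max_steps):
        reply = fake_assistant(messages)
        messages = messages + [reply]
        if route(reply) == "end":
            break
        messages = messages + run_tools(reply)
    return messages


history = run_loop([{"role": "user", "content": "Divide 6790 by 5"}])
print(history[-1]["content"])
```

The `max_steps` bound is an addition of this sketch; it guards against a model that never stops requesting tools.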

| 212 |

-

{

|

| 213 |

-

"cell_type": "code",

|

| 214 |

-

"execution_count": null,

|

| 215 |

-

"id": "e013061de784638a",

|

| 216 |

-

"metadata": {},

|

| 217 |

-

"outputs": [],

|

| 218 |

-

"source": [

|

| 219 |

-

"from langgraph.graph import START, StateGraph\n",

|

| 220 |

-

"from langgraph.prebuilt import ToolNode, tools_condition\n",

|

| 221 |

-

"from IPython.display import Image, display\n",

|

| 222 |

-

"\n",

|

| 223 |

-

"# Graph\n",

|

| 224 |

-

"builder = StateGraph(AgentState)\n",

|

| 225 |

-

"\n",

|

| 226 |

-

"# Define the nodes: these do the work\n",

|

| 227 |

-

"builder.add_node(\"assistant\", assistant)\n",

|

| 228 |

-

"builder.add_node(\"tools\", ToolNode(tools))\n",

|

| 229 |

-

"\n",

|

| 230 |

-

"# Define the edges: these determine how the control flow moves\n",

|

| 231 |

-

"builder.add_edge(START, \"assistant\")\n",

|

| 232 |

-

"builder.add_conditional_edges(\n",

|

| 233 |

-

" \"assistant\",\n",

|

| 234 |

-

" # If the latest message (result) from the assistant is a tool call -> tools_condition routes to tools\n",

|

| 235 |

-

" # If the latest message (result) from the assistant is not a tool call -> tools_condition routes to END\n",

|

| 236 |

-

" tools_condition,\n",

|

| 237 |

-

")\n",

|

| 238 |

-

"builder.add_edge(\"tools\", \"assistant\")\n",

|

| 239 |

-

"react_graph = builder.compile()\n",

|

| 240 |

-

"\n",

|

| 241 |

-

"# Display the graph\n",

|

| 242 |

-

"display(Image(react_graph.get_graph(xray=True).draw_mermaid_png()))"

|

| 243 |

-

]

|

| 244 |

-

},

|

| 245 |

-

{

|

| 246 |

-

"cell_type": "code",

|

| 247 |

-

"execution_count": null,

|

| 248 |

-

"id": "d3b0ba5be1a54aad",

|

| 249 |

-

"metadata": {},

|

| 250 |

-

"outputs": [],

|

| 251 |

-

"source": [

|

| 252 |

-

"messages = [HumanMessage(content=\"Divide 6790 by 5\")]\n",

|

| 253 |

-

"\n",

|

| 254 |

-

"messages = react_graph.invoke({\"messages\": messages, \"input_file\": None})"

|

| 255 |

-

]

|

| 256 |

-

},

|

| 257 |

-

{

|

| 258 |

-

"cell_type": "code",

|

| 259 |

-

"execution_count": null,

|

| 260 |

-

"id": "55eb0f1afd096731",

|

| 261 |

-

"metadata": {},

|

| 262 |

-

"outputs": [],

|

| 263 |

-

"source": [

|

| 264 |

-

"for m in messages['messages']:\n",

|

| 265 |

-

" m.pretty_print()"

|

| 266 |

-

]

|

| 267 |

-

},

|

| 268 |

-

{

|

| 269 |

-

"cell_type": "markdown",

|

| 270 |

-

"id": "e0062c1b99cb4779",

|

| 271 |

-

"metadata": {},

|

| 272 |

-

"source": [

|

| 273 |

-

"## Training program\n",

|

| 274 |

-

"Mr. Wayne left a note with his training program for the week. I found a recipe for dinner, left in a note.\n",

|

| 275 |

-

"\n",

|

| 276 |

-

"You can find the document [HERE](https://huggingface.co/datasets/agents-course/course-images/blob/main/en/unit2/LangGraph/Batman_training_and_meals.png), so download it and put it in your local folder.\n",

|

| 277 |

-

"\n",

|

| 278 |

-

""

|

| 279 |

-

]

|

| 280 |

-

},

|

| 281 |

-

{

|

| 282 |

-

"cell_type": "code",

|

| 283 |

-

"execution_count": null,

|

| 284 |

-

"id": "2e166ebba82cfd2a",

|

| 285 |

-

"metadata": {},

|

| 286 |

-

"outputs": [],

|

| 287 |

-

"source": [

|

| 288 |

-

"messages = [HumanMessage(content=\"According to the note provided by Mr. Wayne in the provided images, what's the list of items I should buy for the dinner menu?\")]\n",

|

| 289 |

-

"\n",

|

| 290 |

-

"messages = react_graph.invoke({\"messages\": messages, \"input_file\": \"Batman_training_and_meals.png\"})"

|

| 291 |

-

]

|

| 292 |

-

},

|

| 293 |

-

{

|

| 294 |

-

"cell_type": "code",

|

| 295 |

-

"execution_count": null,

|

| 296 |

-

"id": "5bfd67af70b7dcf3",

|

| 297 |

-

"metadata": {},

|

| 298 |

-

"outputs": [],

|

| 299 |

-

"source": [

|

| 300 |

-

"for m in messages['messages']:\n",

|

| 301 |

-

" m.pretty_print()"

|

| 302 |

-

]

|

| 303 |

-

}

|

| 304 |

-

],

|

| 305 |

-

"metadata": {

|

| 306 |

-

"kernelspec": {

|

| 307 |

-

"display_name": "Python 3 (ipykernel)",

|

| 308 |

-

"language": "python",

|

| 309 |

-

"name": "python3"

|

| 310 |

-

},

|

| 311 |

-

"language_info": {

|

| 312 |

-

"codemirror_mode": {

|

| 313 |

-

"name": "ipython",

|

| 314 |

-

"version": 3

|

| 315 |

-

},

|

| 316 |

-

"file_extension": ".py",

|

| 317 |

-

"mimetype": "text/x-python",

|

| 318 |

-

"name": "python",

|

| 319 |

-

"nbconvert_exporter": "python",

|

| 320 |

-

"pygments_lexer": "ipython3",

|

| 321 |

-

"version": "3.12.7"

|

| 322 |

-

}

|

| 323 |

-

},

|

| 324 |

-

"nbformat": 4,

|

| 325 |

-

"nbformat_minor": 5

|

| 326 |

-

}

|

fr/unit2/langgraph/.ipynb_checkpoints/mail_sorting-checkpoint.ipynb

DELETED

|

@@ -1,457 +0,0 @@

|

|

| 1 |

-

{

|

| 2 |

-

"cells": [

|

| 3 |

-

{

|

| 4 |

-

"cell_type": "markdown",

|

| 5 |

-

"metadata": {},

|

| 6 |

-

"source": [

|

| 7 |

-

"# Alfred the Mail-Sorting Butler: A LangGraph Example\n",

|

| 8 |

-

"\n",

|

| 9 |

-

"In this notebook, **we will build a complete email-processing workflow using LangGraph**.\n",

|

| 10 |

-

"\n",

|

| 11 |

-

"This notebook is part of the <a href=\"https://huggingface.co/learn/agents-course/fr\">Hugging Face Agents Course</a>, a free course that takes you **from beginner to expert** in understanding, using, and building agents.\n",

|

| 12 |

-

"\n",

|

| 13 |

-

"\n",

|

| 14 |

-

"\n",

|

| 15 |

-

"## What you will learn\n",

|

| 16 |

-

"\n",

|

| 17 |

-

"In this notebook, you will learn how to:\n",

|

| 18 |

-

"1. Set up a LangGraph workflow\n",

|

| 19 |

-

"2. Define the state and nodes for email processing\n",

|

| 20 |

-

"3. Create conditional branching in a graph\n",

|

| 21 |

-

"4. Connect an LLM for classification and content generation\n",

|

| 22 |

-

"5. Visualize the workflow graph\n",

|

| 23 |

-

"6. Run the workflow with example data"

|

| 24 |

-

]

|

| 25 |

-

},

|

| 26 |

-

{

|

| 27 |

-

"cell_type": "code",

|

| 28 |

-

"execution_count": null,

|

| 29 |

-

"metadata": {},

|

| 30 |

-

"outputs": [],

|

| 31 |

-

"source": [

|

| 32 |

-

"# Install the required packages\n",

|

| 33 |

-

"%pip install -q langgraph langchain_openai langchain_huggingface"

|

| 34 |

-

]

|

| 35 |

-

},

|

| 36 |

-

{

|

| 37 |

-

"cell_type": "markdown",

|

| 38 |

-

"metadata": {},

|

| 39 |

-

"source": [

|

| 40 |

-

"## Setting up our environment\n",

|

| 41 |

-

"\n",

|

| 42 |

-

"First, let's import all the necessary libraries. LangGraph provides the graph structure, while LangChain offers convenient interfaces for working with LLMs."

|

| 43 |

-

]

|

| 44 |

-

},

|

| 45 |

-

{

|

| 46 |

-

"cell_type": "code",

|

| 47 |

-

"execution_count": null,

|

| 48 |

-

"metadata": {},

|

| 49 |

-

"outputs": [],

|

| 50 |

-

"source": [

|

| 51 |

-

"import os\n",

|

| 52 |

-

"from typing import TypedDict, List, Dict, Any, Optional\n",

|

| 53 |

-

"from langgraph.graph import StateGraph, START, END\n",

|

| 54 |

-

"from langchain_openai import ChatOpenAI\n",

|

| 55 |

-

"from langchain_core.messages import HumanMessage\n",

|

| 56 |

-

"\n",

|

| 57 |

-

"# Set your OpenAI API key here\n",

|

| 58 |

-

"os.environ[\"OPENAI_API_KEY\"] = \"sk-xxxxx\" # Replace with your API key\n",

|

| 59 |

-

"\n",

|

| 60 |

-

"# Initialize our LLM\n",

|

| 61 |

-

"model = ChatOpenAI(model=\"gpt-4o\", temperature=0)"

|

| 62 |

-

]

|

| 63 |

-

},

|

| 64 |

-

{

|

| 65 |

-

"cell_type": "markdown",

|

| 66 |

-

"metadata": {},

|

| 67 |

-

"source": [

|

| 68 |

-

"## Step 1: Define our state\n",

|

| 69 |

-

"\n",

|

| 70 |

-

"In LangGraph, **State** is the central concept. It represents all the information that flows through our workflow.\n",

|

| 71 |

-

"\n",

|

| 72 |

-

"For Alfred's email-processing system, we need to track:\n",

|

| 73 |

-

"- The email being processed\n",

|

| 74 |

-

"- Whether or not it is spam\n",

|

| 75 |

-

"- The draft response (for legitimate emails)\n",

|

| 76 |

-

"- The conversation history with the LLM"

|

| 77 |

-

]

|

| 78 |

-

},

|

| 79 |

-

{

|

| 80 |

-

"cell_type": "code",

|

| 81 |

-

"execution_count": null,

|

| 82 |

-

"metadata": {},

|

| 83 |

-

"outputs": [],

|

| 84 |

-

"source": [

|

| 85 |

-

"class EmailState(TypedDict):\n",

|

| 86 |

-

" email: Dict[str, Any]\n",

|

| 87 |

-

" is_spam: Optional[bool]\n",

|

| 88 |

-

" spam_reason: Optional[str]\n",

|

| 89 |

-

" email_category: Optional[str]\n",

|

| 90 |

-

" email_draft: Optional[str]\n",

|

| 91 |

-

" messages: List[Dict[str, Any]]"

|

| 92 |

-

]

|

| 93 |

-

},

|

| 94 |

-

{

|

| 95 |

-

"cell_type": "markdown",

|

| 96 |

-

"metadata": {},

|

| 97 |

-

"source": [

|

| 98 |

-

"## Step 2: Define our nodes"

|

| 99 |

-

]

|

| 100 |

-

},

|

| 101 |

-

{

|

| 102 |

-

"cell_type": "code",

|

| 103 |

-

"execution_count": null,

|

| 104 |

-

"metadata": {},

|

| 105 |

-

"outputs": [],

|

| 106 |

-

"source": [

|

| 107 |

-

"def read_email(state: EmailState):\n",

|

| 108 |

-

" email = state[\"email\"]\n",

|

| 109 |

-

" print(f\"Alfred is processing an email from {email['sender']} with subject: {email['subject']}\")\n",

|

| 110 |

-

" return {}\n",

|

| 111 |

-

"\n",

|

| 112 |

-

"\n",

|

| 113 |

-

"def classify_email(state: EmailState):\n",

|

| 114 |

-

" email = state[\"email\"]\n",

|

| 115 |

-

"\n",

|

| 116 |

-

" prompt = f\"\"\"\n",

|

| 117 |

-

"As Alfred, the butler of Mr. Wayne and his SECRET identity Batman, analyze this email and determine if it is spam or legitimate and should be brought to Mr. Wayne's attention.\n",

|

| 118 |

-

"\n",

|

| 119 |

-

"Email:\n",

|

| 120 |

-

"From: {email['sender']}\n",

|

| 121 |

-

"Subject: {email['subject']}\n",

|

| 122 |

-

"Body: {email['body']}\n",

|

| 123 |

-

"\n",

|

| 124 |

-

"First, determine if this email is spam.\n",

|

| 125 |

-

"Answer with SPAM if it is spam, or HAM if it's legitimate. Only return the answer.\n",

|

| 126 |

-

"Answer :\n",

|

| 127 |

-

" \"\"\"\n",

|

| 128 |

-

" messages = [HumanMessage(content=prompt)]\n",

|

| 129 |

-

" response = model.invoke(messages)\n",

|

| 130 |

-

"\n",

|

| 131 |

-

" response_text = response.content.lower()\n",

|

| 132 |

-

" print(response_text)\n",

|

| 133 |

-

" is_spam = \"spam\" in response_text and \"ham\" not in response_text\n",

|

| 134 |

-

"\n",

|

| 135 |

-

" if not is_spam:\n",

|

| 136 |

-

" new_messages = state.get(\"messages\", []) + [\n",

|

| 137 |

-

" {\"role\": \"user\", \"content\": prompt},\n",

|

| 138 |

-

" {\"role\": \"assistant\", \"content\": response.content}\n",

|

| 139 |

-

" ]\n",

|

| 140 |

-

" else:\n",

|

| 141 |

-

" new_messages = state.get(\"messages\", [])\n",

|

| 142 |

-

"\n",

|

| 143 |

-

" return {\n",

|

| 144 |

-

" \"is_spam\": is_spam,\n",

|

| 145 |

-

" \"messages\": new_messages\n",

|

| 146 |

-

" }\n",

|

| 147 |

-

"\n",

|

| 148 |

-

"\n",

|

| 149 |

-

"def handle_spam(state: EmailState):\n",

|

| 150 |

-

" print(\"Alfred has marked the email as spam.\")\n",

|

| 151 |

-

" print(\"The email has been moved to the spam folder.\")\n",

|

| 152 |

-

" return {}\n",

|

| 153 |

-

"\n",

|

| 154 |

-

"\n",

|

| 155 |

-

"def drafting_response(state: EmailState):\n",

|

| 156 |

-

" email = state[\"email\"]\n",

|

| 157 |

-

"\n",

|

| 158 |

-

" prompt = f\"\"\"\n",

|

| 159 |

-

"As Alfred the butler, draft a polite preliminary response to this email.\n",

|

| 160 |

-

"\n",

|

| 161 |

-

"Email:\n",

|

| 162 |

-

"From: {email['sender']}\n",

|

| 163 |

-

"Subject: {email['subject']}\n",

|

| 164 |

-

"Body: {email['body']}\n",

|

| 165 |

-

"\n",

|

| 166 |

-

"Draft a brief, professional response that Mr. Wayne can review and personalize before sending.\n",

|

| 167 |

-

" \"\"\"\n",

|

| 168 |

-

"\n",

|

| 169 |

-

" messages = [HumanMessage(content=prompt)]\n",

|

| 170 |

-

" response = model.invoke(messages)\n",

|

| 171 |

-

"\n",

|

| 172 |

-

" new_messages = state.get(\"messages\", []) + [\n",

|

| 173 |

-

" {\"role\": \"user\", \"content\": prompt},\n",

|

| 174 |

-

" {\"role\": \"assistant\", \"content\": response.content}\n",

|

| 175 |

-

" ]\n",

|

| 176 |

-

"\n",

|

| 177 |

-

" return {\n",

|

| 178 |

-

" \"email_draft\": response.content,\n",

|

| 179 |

-

" \"messages\": new_messages\n",

|

| 180 |

-

" }\n",

|

| 181 |

-

"\n",

|

| 182 |

-

"\n",

|

| 183 |

-

"def notify_mr_wayne(state: EmailState):\n",

|

| 184 |

-

" email = state[\"email\"]\n",

|

| 185 |

-

"\n",

|

| 186 |

-

" print(\"\\n\" + \"=\" * 50)\n",

|

| 187 |

-

" print(f\"Sir, you've received an email from {email['sender']}.\")\n",

|

| 188 |

-

" print(f\"Subject: {email['subject']}\")\n",

|

| 189 |

-

" print(\"\\nI've prepared a draft response for your review:\")\n",

|

| 190 |

-

" print(\"-\" * 50)\n",

|

| 191 |

-

" print(state[\"email_draft\"])\n",

|

| 192 |

-

" print(\"=\" * 50 + \"\\n\")\n",

|

| 193 |

-

"\n",

|

| 194 |

-

" return {}\n",

|

| 195 |

-

"\n",

|

| 196 |

-

"\n",

|

| 197 |

-

"# Define the routing logic\n",

|

| 198 |

-

"def route_email(state: EmailState) -> str:\n",

|

| 199 |

-

" if state[\"is_spam\"]:\n",

|

| 200 |

-

" return \"spam\"\n",

|

| 201 |

-

" else:\n",

|

| 202 |

-

" return \"legitimate\"\n",

|

| 203 |

-

"\n",

|

| 204 |

-

"\n",

|

| 205 |

-

"# Create the graph\n",

|

| 206 |

-

"email_graph = StateGraph(EmailState)\n",

|

| 207 |

-

"\n",

|

| 208 |

-

"# Add nodes\n",

|

| 209 |

-

"email_graph.add_node(\"read_email\", read_email) # the read_email node runs the read_email function\n",

|

| 210 |

-

"email_graph.add_node(\"classify_email\", classify_email) # the classify_email node runs the classify_email function\n",

|

| 211 |

-

"email_graph.add_node(\"handle_spam\", handle_spam) # same logic\n",

|

| 212 |

-

"email_graph.add_node(\"drafting_response\", drafting_response) # same logic\n",

|

| 213 |

-

"email_graph.add_node(\"notify_mr_wayne\", notify_mr_wayne) # same logic\n"

|

| 214 |

-

]

|

| 215 |

-

},

|
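
The `classify_email` node above decides with a substring test, which can misfire when the reply contains `ham` inside another word. The sketch below contrasts that test with a stricter variant. It is an illustration only: `substring_check` and `parse_label` are hypothetical helper names that do not appear in the notebook, and the sample reply is invented.

```python
import re


def substring_check(response_text: str) -> bool:
    """The same test classify_email uses: 'spam' present and 'ham' absent."""
    text = response_text.lower()
    return "spam" in text and "ham" not in text


def parse_label(response_text: str) -> bool:
    """Hypothetical stricter variant: take the first whole-word SPAM or HAM."""
    match = re.search(r"\b(spam|ham)\b", response_text.lower())
    if match is None:
        raise ValueError(f"no SPAM/HAM label found in {response_text!r}")
    return match.group(1) == "spam"


# A one-word reply is handled identically by both checks.
print(substring_check("SPAM"), parse_label("SPAM"))

# 'Shameless' contains 'ham', so the substring test treats this reply as not spam.
reply = "SPAM. Shameless crypto pitch."
print(substring_check(reply), parse_label(reply))
```

Because the prompt asks the model to return only the label, the substring test is usually sufficient; the stricter parser matters mainly when the model adds commentary.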

| 216 |

-

{

|

| 217 |

-

"cell_type": "markdown",

|

| 218 |

-

"metadata": {},

|

| 219 |

-

"source": [

|

| 220 |

-

"## Step 3: Define our routing logic"

|

| 221 |

-

]

|

| 222 |

-

},

|

| 223 |

-

{

|

| 224 |

-

"cell_type": "code",

|

| 225 |

-

"execution_count": null,

|

| 226 |

-

"metadata": {},

|

| 227 |

-

"outputs": [],

|

| 228 |

-

"source": [

|

| 229 |

-

"# Add edges\n",

|

| 230 |

-

"email_graph.add_edge(START, \"read_email\") # after START, we go to the read_email node\n",

|

| 231 |

-

"\n",

|

| 232 |

-

"email_graph.add_edge(\"read_email\", \"classify_email\") # after reading, we classify\n",

|

| 233 |

-

"\n",

|

| 234 |

-

"# Add conditional edges\n",

|

| 235 |

-

"email_graph.add_conditional_edges(\n",

|

| 236 |

-

" \"classify_email\", # after classification, we run the route_email function\n",

|

| 237 |

-

" route_email,\n",

|

| 238 |

-

" {\n",

|

| 239 |

-

" \"spam\": \"handle_spam\", # if it returns \"spam\", we go to the handle_spam node\n",

|

| 240 |

-

" \"legitimate\": \"drafting_response\" # and if it is legitimate, we go to the drafting_response node\n",

|

| 241 |

-

" }\n",

|

| 242 |

-

")\n",

|

| 243 |

-

"\n",

|

| 244 |

-

"# Add the final edges\n",

|

| 245 |

-

"email_graph.add_edge(\"handle_spam\", END) # after handling spam, we always end\n",

|

| 246 |

-

"email_graph.add_edge(\"drafting_response\", \"notify_mr_wayne\")\n",

|

| 247 |

-

"email_graph.add_edge(\"notify_mr_wayne\", END) # after notifying Mr. Wayne, we can end the run\n"

|

| 248 |

-

]

|

| 249 |

-

},

|
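
The edges defined in the cell above fix which nodes run for each classification result. The plain-Python sketch below traces those two paths. It assumes a simplified model: `BRANCHES`, `EDGES`, and `trace_path` are hypothetical names, `None` stands in for `END`, and no LangGraph code is executed.

```python
from typing import Optional


def route_email(state: dict) -> str:
    """Same routing rule as the notebook's route_email."""
    return "spam" if state["is_spam"] else "legitimate"


# Router label -> next node, mirroring the mapping passed to add_conditional_edges.
BRANCHES: dict[str, str] = {"spam": "handle_spam", "legitimate": "drafting_response"}

# Unconditional edges; None marks the end of the run.
EDGES: dict[str, Optional[str]] = {
    "read_email": "classify_email",
    "handle_spam": None,
    "drafting_response": "notify_mr_wayne",
    "notify_mr_wayne": None,
}


def trace_path(is_spam: bool) -> list[str]:
    """Return the node names visited for a given classification result."""
    state = {"is_spam": is_spam}
    path: list[str] = []
    node: Optional[str] = "read_email"
    while node is not None:
        path.append(node)
        if node == "classify_email":
            # Conditional edge: the router's label selects the branch.
            node = BRANCHES[route_email(state)]
        else:
            node = EDGES[node]
    return path


print(trace_path(True))
print(trace_path(False))
```

Keeping the router's return values (`spam`, `legitimate`) separate from node names means a node can be renamed without touching the routing function.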

| 250 |

-

{

|

| 251 |

-

"cell_type": "markdown",

|

| 252 |

-

"metadata": {},

|

| 253 |

-

"source": [

|

| 254 |

-

"## Step 4: Create the state graph and define the edges"

|

| 255 |

-

]

|

| 256 |

-

},

|

| 257 |

-

{

|

| 258 |

-

"cell_type": "code",

|

| 259 |

-

"execution_count": null,

|

| 260 |

-

"metadata": {},

|

| 261 |

-

"outputs": [],

|

| 262 |

-

"source": [

|

| 263 |

-

"# Compile the graph\n",

|

| 264 |

-

"compiled_graph = email_graph.compile()"

|

| 265 |

-

]

|

| 266 |

-

},

|

| 267 |

-

{

|

| 268 |

-

"cell_type": "code",

|

| 269 |

-

"execution_count": null,

|

| 270 |

-

"metadata": {},

|

| 271 |

-

"outputs": [],

|

| 272 |

-

"source": [

|

| 273 |

-

"from IPython.display import Image, display\n",

|

| 274 |

-

"\n",

|

| 275 |

-

"display(Image(compiled_graph.get_graph().draw_mermaid_png()))"

|

| 276 |

-

]

|

| 277 |

-

},

|

| 278 |

-

{

|

| 279 |

-

"cell_type": "code",

|

| 280 |

-

"execution_count": null,

|

| 281 |

-

"metadata": {},

|

| 282 |

-

"outputs": [],

|

| 283 |

-

"source": [

|

| 284 |

-

"# Example emails to test\n",

|

| 285 |

-

"legitimate_email = {\n",

|

| 286 |

-

" \"sender\": \"Joker\",\n",

|

| 287 |

-

" \"subject\": \"Found you Batman ! \",\n",

|

| 288 |

-

" \"body\": \"Mr. Wayne, I found your secret identity! I know you're Batman! There's no denying it, I have proof of that and I'm coming to find you soon. I'll get my revenge. JOKER\"\n",

|

| 289 |

-

"}\n",

|

| 290 |

-

"\n",

|

| 291 |

-

"spam_email = {\n",

|

| 292 |

-

" \"sender\": \"Crypto bro\",\n",

|

| 293 |

-

" \"subject\": \"The best investment of 2025\",\n",

|

| 294 |

-

" \"body\": \"Mr Wayne, I just launched an ALT coin and want you to buy some !\"\n",

|

| 295 |

-

"}\n",

|

| 296 |

-

"# Process the legitimate email\n",

|

| 297 |

-

"print(\"\\nProcessing legitimate email...\")\n",

|

| 298 |

-

"legitimate_result = compiled_graph.invoke({\n",

|

| 299 |

-

" \"email\": legitimate_email,\n",

|

| 300 |

-

" \"is_spam\": None,\n",

|

| 301 |

-

" \"spam_reason\": None,\n",

|

| 302 |

-

" \"email_category\": None,\n",

|

| 303 |

-

" \"email_draft\": None,\n",

|

| 304 |

-

" \"messages\": []\n",

|

| 305 |

-

"})\n",

|

| 306 |

-

"\n",

|

| 307 |

-

"# Process the spam email\n",

|

| 308 |

-

"print(\"\\nProcessing spam email...\")\n",

|

| 309 |

-

"spam_result = compiled_graph.invoke({\n",

|

| 310 |

-

" \"email\": spam_email,\n",

|

| 311 |

-

" \"is_spam\": None,\n",

|

| 312 |

-

" \"spam_reason\": None,\n",

|

| 313 |

-

" \"email_category\": None,\n",

|

| 314 |

-

" \"email_draft\": None,\n",

|

| 315 |

-

" \"messages\": []\n",

|

| 316 |

-

"})"

|

| 317 |

-

]

|

| 318 |

-

},

|

| 319 |

-

{

|

| 320 |

-

"cell_type": "markdown",

|

| 321 |

-

"metadata": {},

|

| 322 |

-

"source": [

|

| 323 |

-

"## Étape 5 : Inspection de notre agent trieur d'emails avec Langfuse 📡\n",

|

| 324 |

-

"\n",

|

| 325 |

-

"Au fur et à mesure qu'Alfred peaufine l'agent trieur d'emails, il se lasse de déboguer ses exécutions. Les agents, par nature, sont imprévisibles et difficiles à inspecter. Mais comme son objectif est de construire l'ultime agent de détection de spam et de le déployer en production, il a besoin d'une traçabilité solide pour un contrôle et une analyse ultérieurs.\n",

|

| 326 |

-

"\n",

|

| 327 |

-

"Pour ce faire, Alfred peut utiliser un outil d'observabilité tel que [Langfuse](https://langfuse.com/) pour retracer et surveiller les étapes internes de l'agent.\n",

|

| 328 |

-

"\n",

|

| 329 |

-

"Tout d'abord, nous devons installer les dépendances nécessaires :"

|

| 330 |

-

]

|

| 331 |

-

},

|

| 332 |

-

{

|

| 333 |

-

"cell_type": "code",

|

| 334 |

-

"execution_count": null,

|

| 335 |

-

"metadata": {},

|

| 336 |

-

"outputs": [],

|

| 337 |

-

"source": [

|

| 338 |

-

"%pip install -q langfuse"

|

| 339 |

-

]

|

| 340 |

-

},

|

| 341 |

-

{

|

| 342 |

-

"cell_type": "markdown",

|

| 343 |

-

"metadata": {},

|

| 344 |

-

"source": [

|

| 345 |

-

"Ensuite, nous définissons les clés de l'API Langfuse et l'adresse de l'hôte en tant que variables d'environnement. Vous pouvez obtenir vos identifiants Langfuse en vous inscrivant à [Langfuse Cloud](https://cloud.langfuse.com) ou à [Langfuse auto-hébergé](https://langfuse.com/self-hosting)."

|

| 346 |

-

]

|

| 347 |

-

},

|

| 348 |

-

{

|

| 349 |

-

"cell_type": "code",

|

| 350 |

-

"execution_count": null,

|

| 351 |

-

"metadata": {},

|

| 352 |

-

"outputs": [],

|

| 353 |

-

"source": [

|

| 354 |

-

"import os\n",

|

| 355 |

-

"\n",

|

| 356 |

-

"# Obtenez les clés de votre projet à partir de la page des paramètres du projet : https://cloud.langfuse.com\n",

|

| 357 |

-

"os.environ[\"LANGFUSE_PUBLIC_KEY\"] = \"pk-lf-...\"\n",

|

| 358 |

-

"os.environ[\"LANGFUSE_SECRET_KEY\"] = \"sk-lf-...\"\n",

|

| 359 |

-

"os.environ[\"LANGFUSE_HOST\"] = \"https://cloud.langfuse.com\" # 🇪🇺 région EU \n",

|

| 360 |

-

"# os.environ[\"LANGFUSE_HOST\"] = \"https://us.cloud.langfuse.com\" # 🇺🇸 région US"

|

| 361 |

-

]

|

| 362 |

-

},

|

| 363 |

-

{

|

| 364 |

-

"cell_type": "markdown",

|

| 365 |

-

"metadata": {},

|

| 366 |

-

"source": [

|

| 367 |

-

"Nous allons maintenant configurer le [Langfuse `callback_handler`] (https://langfuse.com/docs/integrations/langchain/tracing#add-langfuse-to-your-langchain-application)."

|

| 368 |

-

]

|

| 369 |

-

},

|

| 370 |

-

{

|

| 371 |

-

"cell_type": "code",

|

| 372 |

-

"execution_count": null,

|

| 373 |

-

"metadata": {},

|

| 374 |

-

"outputs": [],

|

| 375 |

-

"source": [

|

| 376 |

-

"from langfuse.langchain import CallbackHandler\n",

|

| 377 |

-

"\n",

|

| 378 |

-

"# Initialiser le CallbackHandler Langfuse pour LangGraph/Langchain (traçage)\n",

|

| 379 |

-

"langfuse_handler = CallbackHandler()"

|

| 380 |

-

]

|

| 381 |

-

},

|

| 382 |

-

{

|

| 383 |

-

"cell_type": "markdown",

|

| 384 |

-

"metadata": {},

|

| 385 |

-

"source": [

|

| 386 |

-

"Nous ajoutons ensuite `config={« callbacks » : [langfuse_handler]}` à l'invocation des agents et les exécutons à nouveau."

|

| 387 |

-

]

|

| 388 |

-

},

|

| 389 |

-

{

|

| 390 |

-

"cell_type": "code",

|

| 391 |

-

"execution_count": null,

|

| 392 |

-

"metadata": {},

|

| 393 |

-

"outputs": [],

|

| 394 |

-

"source": [

|

| 395 |

-

"# Traiter les emails légitimes\n",

|

| 396 |

-

"print(\"\\nProcessing legitimate email...\")\n",

|

| 397 |

-

"legitimate_result = compiled_graph.invoke(\n",

|

| 398 |

-

" input={\n",

|

| 399 |

-

" \"email\": legitimate_email,\n",

|

| 400 |

-

" \"is_spam\": None,\n",

|

| 401 |

-

" \"draft_response\": None,\n",

|

| 402 |

-

" \"messages\": []\n",

|

| 403 |

-

" },\n",

|

| 404 |

-

" config={\"callbacks\": [langfuse_handler]}\n",

|

| 405 |

-

")\n",

|

| 406 |

-

"\n",

|

| 407 |

-

"# Traiter les spams\n",

|

| 408 |

-

"print(\"\\nProcessing spam email...\")\n",

|

| 409 |

-

"spam_result = compiled_graph.invoke(\n",

|

| 410 |

-

" input={\n",

|

| 411 |

-

" \"email\": spam_email,\n",

|

| 412 |

-

" \"is_spam\": None,\n",

|

| 413 |

-

" \"draft_response\": None,\n",

|

| 414 |

-

" \"messages\": []\n",

|

| 415 |

-

" },\n",

|

| 416 |

-

" config={\"callbacks\": [langfuse_handler]}\n",

|

| 417 |

-

")"

|

| 418 |

-

]

|

| 419 |

-

},

|

| 420 |

-

{

|

| 421 |

-

"cell_type": "markdown",

|

| 422 |

-

"metadata": {},

|

| 423 |

-

"source": [

|

| 424 |

-

"Alfred est maintenant connecté 🔌 ! Les exécutions de LangGraph sont enregistrées dans Langfuse, ce qui lui donne une visibilité totale sur le comportement de l'agent. Avec cette configuration, il est prêt à revoir les exécutions précédentes et à affiner encore davantage son agent de tri du courrier.\n",

|

| 425 |

-

"\n",

|

| 426 |

-

"\n",

|

| 427 |

-

"\n",

|

| 428 |

-

"_[Lien public vers la trace avec l'email légitime](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/f5d6d72e-20af-4357-b232-af44c3728a7b?timestamp=2025-03-17T10%3A13%3A28.413Z&observation=6997ba69-043f-4f77-9445-700a033afba1)_\n",

|

| 429 |

-

"\n",

|

| 430 |

-

"\n",

|

| 431 |

-

"\n",

|

| 432 |

-

"_[Lien public vers la trace du spam](https://langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/6e498053-fee4-41fd-b1ab-d534aca15f82?timestamp=2025-03-17T10%3A13%3A30.884Z&observation=84770fc8-4276-4720-914f-bf52738d44ba)_\n"

|

| 433 |

-

]

|

| 434 |

-

}

|

| 435 |

-

],

|

| 436 |

-

"metadata": {

|

| 437 |

-

"kernelspec": {

|

| 438 |

-

"display_name": "Python 3 (ipykernel)",

|

| 439 |

-

"language": "python",

|

| 440 |

-

"name": "python3"

|

| 441 |

-

},

|

| 442 |

-

"language_info": {

|

| 443 |

-

"codemirror_mode": {

|

| 444 |

-

"name": "ipython",

|

| 445 |

-

"version": 3

|

| 446 |

-

},

|

| 447 |

-

"file_extension": ".py",

|

| 448 |

-

"mimetype": "text/x-python",

|

| 449 |

-

"name": "python",

|

| 450 |

-

"nbconvert_exporter": "python",

|

| 451 |

-

"pygments_lexer": "ipython3",

|

| 452 |

-

"version": "3.12.7"

|

| 453 |

-

}

|

| 454 |

-

},

|

| 455 |

-

"nbformat": 4,

|

| 456 |

-

"nbformat_minor": 4

|

| 457 |

-

}

|
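Both invocation cells in the notebook build the initial state dict by hand. A minimal sketch of a helper that builds it once, assuming the keys used in the first invocation cell (`email`, `is_spam`, `spam_reason`, `email_category`, `email_draft`, `messages`). The `EmailState` field types and the `make_initial_state` name are assumptions for illustration, not part of the notebook:

```python
from typing import Any, Dict, List, Optional, TypedDict


class EmailState(TypedDict):
    # Keys mirror the first invocation cell; the value types are assumptions.
    email: Dict[str, Any]
    is_spam: Optional[bool]
    spam_reason: Optional[str]
    email_category: Optional[str]
    email_draft: Optional[str]
    messages: List[Dict[str, Any]]


def make_initial_state(email: Dict[str, Any]) -> EmailState:
    """Hypothetical helper: build a fresh input state for a single email."""
    return EmailState(
        email=email,
        is_spam=None,
        spam_reason=None,
        email_category=None,
        email_draft=None,
        messages=[],  # a new list per call, so runs never share message history
    )


if __name__ == "__main__":
    state = make_initial_state({"sender": "Crypto bro", "subject": "...", "body": "..."})
    print(sorted(state))
```

The returned dict could then be passed as the input to `compiled_graph.invoke(...)` together with `config={"callbacks": [langfuse_handler]}`, so every run starts from the same set of keys.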
