Upload folder using huggingface_hub

- .gitattributes +1 -0

- D_convbasedv1_48k.pth +3 -0

- G_convbasedv1_48k.pth +3 -0

- README.md +85 -0

- assets/loss_d_total.png +0 -0

- assets/loss_g_fm.png +0 -0

- assets/loss_g_kl.png +3 -0

- assets/loss_g_mel.png +0 -0

- assets/loss_g_total.png +0 -0

- config.json +46 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+assets/loss_g_kl.png filter=lfs diff=lfs merge=lfs -text
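The new `.gitattributes` rule routes exactly one path through Git LFS, which would explain why `assets/loss_g_kl.png` is listed with `+3 -0` (a three-line pointer) while the other loss plots show `+0 -0`. Below is a minimal sketch of that matching, assuming Python's `fnmatch` is a close enough stand-in for gitattributes pattern rules (it is only an approximation) and modelling only the rules visible in this hunk. The `logs/...` path is a hypothetical example, not a file in this commit.

```python
from fnmatch import fnmatch

# LFS patterns visible in the hunk above. Rules elsewhere in .gitattributes
# (outside the displayed context) are deliberately not modelled here.
LFS_PATTERNS = [
    "saved_model/**/*",
    "*.zip",
    "*.zst",
    "*tfevents*",
    "assets/loss_g_kl.png",
]


def is_lfs_tracked(path: str) -> bool:
    """Return True if `path` matches any of the visible LFS patterns.

    Assumption: fnmatch-style globbing approximates gitattributes matching.
    """
    return any(fnmatch(path, pattern) for pattern in LFS_PATTERNS)


for path in (
    "assets/loss_g_kl.png",          # added to LFS by this commit
    "assets/loss_g_mel.png",         # no matching rule in the hunk
    "logs/events.out.tfevents.123",  # hypothetical path, for illustration
):
    print(f"{path}: {is_lfs_tracked(path)}")
```

Because only the displayed rules are included, the sketch says nothing about the `.pth` checkpoints, whose LFS rule (if any) lies outside this hunk.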

D_convbasedv1_48k.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:e1c8a6730363c5ce6890b55ace3117ced9a0718d49500b8a12738fb6ae250e1e
+size 285697566

G_convbasedv1_48k.pth

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:fd018b82e685e8ba8b818bbf2e10e6879b1d8637d04168aed555d53e75fbf681
+size 151115402
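Both checkpoint files are committed as Git LFS pointers: three `key value` lines giving the spec version, a SHA-256 object ID, and the size in bytes. The sketch below parses the two pointers shown above and shows how a downloaded blob could be checked against them. The helper names `parse_lfs_pointer` and `matches_pointer` are hypothetical and belong to no library.

```python
import hashlib

D_POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:e1c8a6730363c5ce6890b55ace3117ced9a0718d49500b8a12738fb6ae250e1e
size 285697566
"""

G_POINTER = """\
version https://git-lfs.github.com/spec/v1
oid sha256:fd018b82e685e8ba8b818bbf2e10e6879b1d8637d04168aed555d53e75fbf681
size 151115402
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split a Git LFS pointer into version, hash algorithm, digest, and size."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "algo": algo,
        "digest": digest,
        "size_bytes": int(fields["size"]),
    }


def matches_pointer(data: bytes, pointer: dict) -> bool:
    """Check a blob against a pointer (sketch: handles sha256 only)."""
    return (
        pointer["algo"] == "sha256"
        and len(data) == pointer["size_bytes"]
        and hashlib.sha256(data).hexdigest() == pointer["digest"]
    )


d = parse_lfs_pointer(D_POINTER)
g = parse_lfs_pointer(G_POINTER)
print(f"D: {d['size_bytes'] / 1e6:.1f} MB, G: {g['size_bytes'] / 1e6:.1f} MB")
```

For files of this size, a real check would hash the file in chunks rather than load it into memory. `matches_pointer` takes bytes only to keep the sketch short.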

README.md

CHANGED

@@ -1,3 +1,88 @@
 ---
+language:
+- zh
+- en
+tags:
+- speech-synthesis
+- text-to-speech
+- voice-conversion
+- pytorch
+- audio
+- chinese-tts
+- multi-speaker
+- convolution
+- encoder-decoder
+- aishell
+- vctk
 license: apache-2.0
+datasets:
+- aishell
+- thchs30
+- primewords
+- vctk
+library_name: pytorch
+pipeline_tag: text-to-speech
 ---
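+
+# Convbased
+
+Convbased is a high-performance Chinese speech synthesis model built on convolutional neural networks and an encoder-decoder architecture. It was trained on multiple Chinese datasets and supports multi-speaker, multi-dialect speech synthesis.
+
+
+- Faster training convergence
+- More stable training
+- Better output speech quality
+- Support for multiple Chinese dialects (Mandarin, Cantonese, Hokkien, Sichuanese, Wenzhounese, and others)
+- Multi-speaker speech synthesis
+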
+## Model Information
+
+### Training Scale
+- **Number of speakers**: 476 distinct speakers
+- **Training time**: 35 days of continuous training
+- **Model type**: encoder + decoder architecture
+- **Total training data**: approximately 467 hours of high-quality speech data
+
+### Model Architecture
+- **Encoder**: convolution-based text encoder
+- **Decoder**: acoustic feature decoder
+- **Discriminator**: adversarial training discriminator
+- **Loss function**: combined loss (mel-spectrogram loss + KL divergence loss + feature matching loss)
+
| 52 |

+

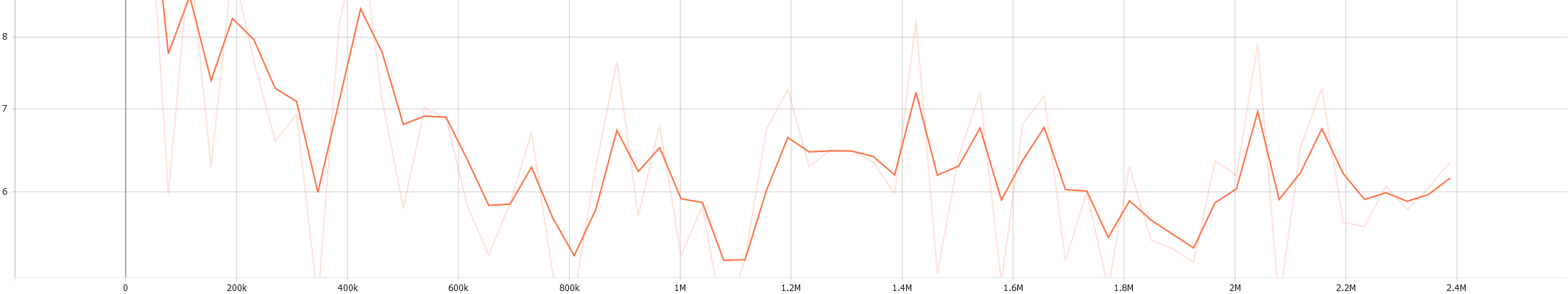

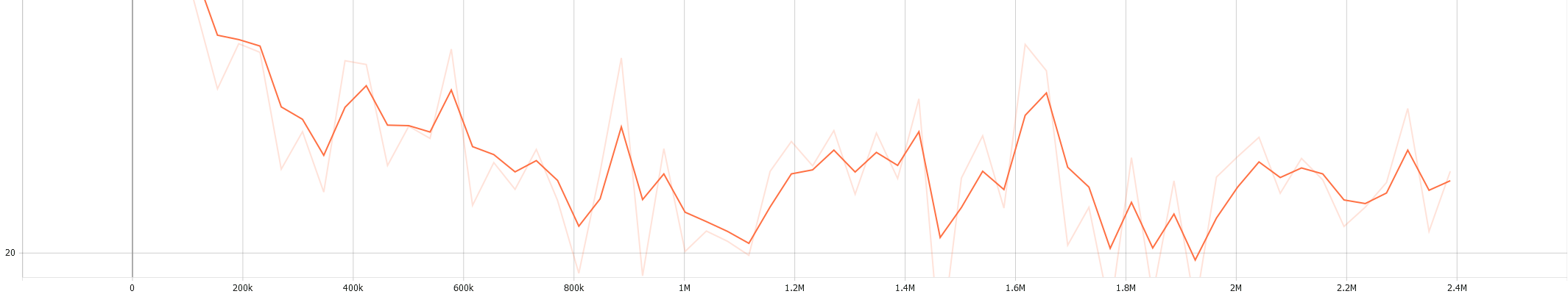

## 训练曲线

|

| 53 |

+

|

| 54 |

+

模型训练过程中的各项损失函数变化:

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

*判别器总损失*

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

*生成器特征匹配损失*

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

*KL散度损失*

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

*Mel频谱损失*

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

*生成器总损失*

|

| 70 |

+

|

+## Training Datasets
+
+The model was trained on the following high-quality Chinese speech datasets:
+
+| Dataset | Duration (hours) | Description |
+|-------------------|-------------|------|
+| data_aishell | 178 | Mandarin Chinese speech recognition dataset |
+| data_thchs30 | 30 | Tsinghua University Chinese speech dataset |
+| primewords_md_2018 | 178 | Chinese speech synthesis dataset |
+| VCTK | 44 | English multi-speaker dataset |
+| Sichuan dialect | 4 | Sichuanese dialect data |
+| Hokkien | 3 | Hokkien (Minnan) dialect data |
+| Cantonese | 3 | Cantonese dialect data |
+| Wenzhou dialect | 7 | Wenzhounese dialect data |
+| Noise | 20 | Speech recorded in noisy environments |
+
+
+*This model aims to advance Chinese speech synthesis technology and provide a high-quality solution for Chinese TTS applications.*
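The README states a total of roughly 467 hours of training data, and its dataset table lists per-corpus durations. A quick arithmetic check, with the hours copied from that table and the row labels given in English, confirms that the rows add up to the stated total. The grouping into "dialect" corpora is an illustrative choice, not something the README defines.

```python
# Hours per corpus, copied from the README dataset table (labels in English).
DATASET_HOURS = {
    "data_aishell": 178,
    "data_thchs30": 30,
    "primewords_md_2018": 178,
    "VCTK": 44,
    "Sichuan dialect": 4,
    "Hokkien": 3,
    "Cantonese": 3,
    "Wenzhou dialect": 7,
    "Noise": 20,
}

# Illustrative grouping (an assumption made for this sketch).
DIALECT_CORPORA = ("Sichuan dialect", "Hokkien", "Cantonese", "Wenzhou dialect")

total_hours = sum(DATASET_HOURS.values())
dialect_hours = sum(DATASET_HOURS[name] for name in DIALECT_CORPORA)

print(f"total: {total_hours} h")
print(f"dialect corpora: {dialect_hours} h ({dialect_hours / total_hours:.1%} of total)")
```

The rows sum to 467 hours, matching the README's total. The four dialect corpora together contribute 17 of those hours, so dialect coverage rests on a small fraction of the data.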

assets/loss_d_total.png

ADDED

assets/loss_g_fm.png

ADDED

assets/loss_g_kl.png

ADDED

assets/loss_g_mel.png

ADDED

assets/loss_g_total.png

ADDED

config.json

ADDED

@@ -0,0 +1,46 @@
+{
+  "train": {
+    "log_interval": 200,
+    "seed": 1234,
+    "learning_rate": 1e-4,
+    "betas": [0.8, 0.99],
+    "eps": 1e-9,
+    "lr_decay": 0.999875,
+    "segment_size": 17280,
+    "c_mel": 45,
+    "c_kl": 1.0
+  },
+  "data": {
+    "max_wav_value": 32768.0,
+    "sample_rate": 48000,
+    "filter_length": 2048,
+    "hop_length": 480,
+    "win_length": 2048,
+    "n_mel_channels": 128,
+    "mel_fmin": 0.0,
+    "mel_fmax": null
+  },
+  "model": {
+    "inter_channels": 192,
+    "hidden_channels": 192,
+    "filter_channels": 768,
+    "text_enc_hidden_dim": 768,
+    "n_heads": 2,
+    "n_layers": 6,
+    "kernel_size": 3,
+    "p_dropout": 0,
+    "resblock": "1",
+    "resblock_kernel_sizes": [3, 7, 11],
+    "resblock_dilation_sizes": [
+      [1, 3, 5],
+      [1, 3, 5],
+      [1, 3, 5]
+    ],
+    "upsample_rates": [12, 10, 2, 2],
+    "upsample_initial_channel": 512,
+    "upsample_kernel_sizes": [24, 20, 4, 4],
+    "use_spectral_norm": false,
+    "gin_channels": 256,
+    "spk_embed_dim": 476
+  }
+}
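Several values in `config.json` are tied together arithmetically, and a short check makes those relationships explicit. The sketch below copies the relevant numbers from the config and derives the frame rate, the segment length, and the decoder's total upsampling factor. Two things are assumptions here: that the decoder upsamples one spectrogram frame to `hop_length` samples (common in HiFi-GAN-style vocoders, but not stated in this commit), and that `lr_decay` is applied as a per-epoch exponential decay. `lr_at_epoch` is a hypothetical helper.

```python
import math

# Values copied from config.json above.
SAMPLE_RATE = 48000
HOP_LENGTH = 480
SEGMENT_SIZE = 17280
UPSAMPLE_RATES = [12, 10, 2, 2]
UPSAMPLE_KERNEL_SIZES = [24, 20, 4, 4]
SPK_EMBED_DIM = 476
LEARNING_RATE = 1e-4
LR_DECAY = 0.999875

# Speaker count stated in the README.
README_SPEAKERS = 476

# Assumption: the decoder expands each frame to HOP_LENGTH samples, so the
# product of the upsampling rates should equal the hop length.
total_upsampling = math.prod(UPSAMPLE_RATES)

frames_per_second = SAMPLE_RATE / HOP_LENGTH      # spectrogram frames per second
frames_per_segment = SEGMENT_SIZE // HOP_LENGTH   # frames in one training segment
segment_seconds = SEGMENT_SIZE / SAMPLE_RATE      # audio duration of one segment

# Each transposed-convolution kernel is twice its stride in this config.
kernels_are_double_rate = all(
    k == 2 * r for k, r in zip(UPSAMPLE_KERNEL_SIZES, UPSAMPLE_RATES)
)


def lr_at_epoch(epoch: int) -> float:
    """Learning rate after `epoch` epochs, assuming per-epoch exponential decay."""
    return LEARNING_RATE * LR_DECAY ** epoch


print(f"total upsampling: {total_upsampling} (hop_length = {HOP_LENGTH})")
print(f"frame rate: {frames_per_second} frames/s")
print(f"segment: {frames_per_segment} frames, {segment_seconds} s")
print(f"kernel = 2 x rate: {kernels_are_double_rate}")
print(f"speaker table matches README: {SPK_EMBED_DIM == README_SPEAKERS}")
```

The upsampling rates multiply to 480, the same as `hop_length`, and a training segment of 17280 samples covers 36 frames, or 0.36 s of 48 kHz audio. `spk_embed_dim` also equals the 476 speakers reported in the README.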